Google’s Helpful Content Updates are a series of algorithm changes designed to ensure search results are more helpful and reliable for users.

It is now part of a “core ranking system that’s assessing helpfulness on all types of aspects.” Danny Sullivan, Google, March 2024

These updates began in August 2022 and have evolved to focus on rewarding content created for people, not just for search engines.

Timeline and Key Changes

“The classifier that determines if a site has a lot of unhelpful content has already been at work understanding content. The classifier didn’t start at launch. It already understood site content over a period of time. At launch, we just began to use the signal in ranking. It does continually work to monitor sites & understand if there’s lasting change.” Danny Sullivan, Google

The updates have seen several milestones:

- August 2022: Launched to reward satisfying user experiences, initially for English searches globally (Google’s Announcement – August 2022).

- December 2022: Expanded globally, improving detection of low-quality content, taking 38 days to roll out (Helpful Content Update).

- September 2023: Loosened AI content guidance, warned against third-party content without oversight, and introduced recovery guidance (Search Engine Journal – September 2023 Update).

- March 2024: Integrated into core updates, reducing low-quality content by 45%, with new spam policies (Google’s March 2024 Update Announcement).

Differences between the original 2022 and current Google Helpful Content Guidelines 2025

Key Differences

- Addition of Page Experience
  - Original: The original guidelines focused on content quality, expertise, and presentation but did not explicitly address page experience as a ranking factor.
  - Latest: A new section titled “Provide a great page experience” was added, stating, “Google’s core ranking systems look to reward content that provides a good page experience.” It advises site owners to consider multiple aspects of user experience (e.g., site speed, mobile-friendliness, and minimal intrusive elements) rather than focusing on just one or two factors.
  - Impact: This shift broadens the scope beyond content alone, integrating technical and usability factors into the evaluation of “helpful” content.

- Evolution from E-A-T to E-E-A-T
  - Original: The guidelines highlighted E-A-T (Expertise, Authoritativeness, Trustworthiness) as key principles for content evaluation.
  - Latest: The framework evolved to E-E-A-T, adding “Experience” to the mix. The latest version emphasizes that content should “clearly demonstrate first-hand expertise and a depth of knowledge,” such as insights from personal use or real-world application. It also clarifies that trust is the most critical aspect, with experience and expertise contributing to it variably depending on the content.
  - Impact: The inclusion of “Experience” prioritizes practical, hands-on knowledge alongside traditional expertise, making content more actionable and relatable.

- New “Who, How, and Why” Framework
  - Original: While authorship transparency (e.g., bylines) was encouraged, there was no structured approach to evaluating content creation processes or intent.
  - Latest: A new section, “Ask ‘Who, How, and Why’ about your content,” was introduced:
    - Who: Emphasizes clear authorship (e.g., bylines with author background).
    - How: Encourages disclosure of how content was created, especially if automation or AI was used, to build trust.
    - Why: Stresses that content should be created primarily to help people, not to manipulate rankings.
  - Impact: This framework enhances transparency, particularly in response to the rise of AI-generated content, ensuring users understand the source, process, and purpose behind the content.

- Stricter Guidelines on Manipulative Practices
  - Original: The “Avoid creating search engine-first content” section warned against prioritizing search rankings over user value but lacked specific examples of manipulative tactics.
  - Latest: Two new questions were added:
    - “Are you changing the date of pages to make them seem fresh when the content has not substantially changed?”
    - “Are you adding a lot of new content or removing a lot of older content primarily because you believe it will help your search rankings overall by somehow making your site seem ‘fresh’?”
  - Impact: These additions target tactics that exploit freshness signals, reinforcing that updates must provide genuine value rather than superficial changes.

- Expanded Expertise Definition
  - Original: Asked, “Is this content written by an expert or enthusiast who demonstrably knows the topic well?”
  - Latest: Updated to, “Is this content written or reviewed by an expert or enthusiast who demonstrably knows the topic well?”
  - Impact: The inclusion of “or reviewed” allows for expert oversight rather than requiring the writer to be the sole expert, supporting collaborative content creation while maintaining quality.

- Explicit Guidance on AI-Generated Content
  - Original: Did not mention automation or AI-generated content explicitly.
  - Latest: Addresses AI use in the “How” section, asking:
    - “Is the use of automation, including AI-generation, self-evident to visitors through disclosures or in other ways?”
    - “Are you providing background about how automation or AI-generation was used?”
    - It also warns that using AI to manipulate rankings violates spam policies.
  - Impact: Google now accepts AI-generated content if it’s transparent, adds value, and prioritizes user benefit over search engine gaming.
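The two freshness questions quoted under “Stricter Guidelines on Manipulative Practices” can be turned into a simple pre-publish check. The sketch below is only an illustration, assuming you keep the previous version of a page’s text. It uses Python’s standard `difflib` to measure how much of the wording is unchanged, and only recommends updating the visible date when the revision is substantial. The function names and the 0.90 threshold are hypothetical choices for this example, not anything Google has published.

```python
from difflib import SequenceMatcher

# Hypothetical cut-off (an assumption, not a Google figure): if 90% or more
# of the words are unchanged, treat the edit as cosmetic.
SUBSTANTIAL_CHANGE_THRESHOLD = 0.90


def to_words(text: str) -> list[str]:
    """Lower-case and split on whitespace so cosmetic edits are ignored."""
    return text.lower().split()


def similarity(old_text: str, new_text: str) -> float:
    """Return a 0..1 similarity ratio between two versions of a page."""
    matcher = SequenceMatcher(
        None, to_words(old_text), to_words(new_text), autojunk=False
    )
    return matcher.ratio()


def should_update_date(old_text: str, new_text: str) -> bool:
    """True only when the revision changed the content substantially."""
    return similarity(old_text, new_text) < SUBSTANTIAL_CHANGE_THRESHOLD
```

A word-level comparison is a crude proxy for “substantially changed”; it says nothing about whether the new material is actually useful, which still needs human judgement.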

Structural Changes

- Original: Organized into sections like “Content and quality questions,” “Expertise questions,” “Presentation and production questions,” “Focus on people-first content,” “Avoid creating search engine-first content,” and “Get to know E-A-T.”
- Latest: Adds “Provide a great page experience” and “Ask ‘Who, How, and Why’ about your content,” while moving some presentation-related questions (e.g., spelling, production quality) under “Content and quality questions.”
- Impact: The latest version is more comprehensive and structured, reflecting a holistic approach to content evaluation.

Current Emphasis in 2025

- Holistic User Experience
  - Beyond high-quality content, Google now values the entire user journey. A “great page experience” (e.g., fast loading times, mobile optimization, minimal distractions) is integral to ranking success. Content must be both helpful and seamlessly accessible.
- Practical, First-Hand Knowledge
  - With E-E-A-T, there’s a strong push for content that demonstrates real-world experience alongside expertise. Examples like personal product testing or lived insights are favoured, ensuring content is practical and user-focused rather than purely theoretical.
- Transparency and Trust
  - The “Who, How, and Why” framework underscores transparency. Creators must clarify authorship, disclose creation methods (especially AI use), and ensure the primary intent is to help users. This builds trust and combats low-quality or deceptive content.
- Genuine Value Over Manipulation
  - Google is cracking down on tactics like fake freshness updates or mass content production for rankings. The emphasis is on meaningful updates and authentic content that serves a clear audience need.
- Flexibility in Expertise
  - By allowing content to be “written or reviewed” by experts, Google supports diverse creation models while maintaining credibility and broadening opportunities for quality content production.

Caveat: What you read below is theory and investigation.

The HCU

In November 2024, I started seriously investigating the September 2023 Google Helpful Content Update (HCU) and subsequent Spam updates.

It’s a confusing update.

In fact, while some folks had reached out to me beforehand, it was this X post from Lily Ray that started me off:

Fortunately, I got access to lots of impacted sites to analyse, many of which were at the summit.

In fact, I might be the only person outside Google with access to that many Search Console accounts hit by HCU/SPAM updates.

With access to that many livelihoods, you realise quickly why Google avoids commenting on individual sites.

So, this article has been written very carefully to avoid focusing on any particular site, and it sticks to concepts that are not specific to any one site.

No site in this set is operating “illegally”, for instance, though I may point out that any site that fails to comply with Google’s policies could be in a grey area.

Danny Sullivan, Search Liaison for Google, talks with Aleyda Solis about big brands and small brands:

“Is it fair that only the larger well-established things can do better than maybe the smaller well-established things? No, we don’t want it to be only the very big (sic “well established”) things rank well.”

He acknowledges how people feel about ranking:

“These large sites or these well-established sites, in some cases, people feel like, ‘Oh, they just can rank for anything.’ Or, ‘I have a small site which will be a small brand by the way; (1). your small site is a brand, (2). you are a brand in a lot of these cases as well.’ And I don’t feel like I can compete.”

He makes it clear that small sites are still brands – being “well-established” is what matters.

From Search Engine Roundtable:

“In Rutledge Daugette’s write-up from the Google Web Creator summit that got a lot of attention last week, he wrote a line that struck a chord with the SEO community. In short, some are taking it to mean that Google does not want site owners, or at least, content creators, to hire SEO agencies or have to purchase SEO audits.”

He wrote:

Danny specifically said, “We don’t want you paying money for SEO audits to try and recover, we should be providing better guidance that removes the need for those outside of instances like site migrations or more complex changes.” We touched on this further in the breakout sections. As a note, I’ve spent a significant amount of money on SEO consulting since 2021.

“But does Google really not want you to hire SEOs, SEO agencies, consultants or pay for SEO audits?” Barry asked, as did we all.

Remind me in the future to touch on claims that SEO is dead and a new term I came up with – Zombie SEO…

Initial Findings in November 2024

I live-blogged some of my initial findings on X, but essentially, by Nov 07 2024 I had noticed a correlation between some of the drops that might revolve around transparency, as previous versions of the quality rater guidelines had alluded to:

I wondered if Google had turned the dial to 11 in this area, so to speak:

“With the core updates, we don’t focus so much on just individual issues, but rather the relevance of the website overall. And that can include things like the usability, and the ads on a page, but it’s essentially the website overall. And usually, that also means some kind of the focus of the content, the way you’re presenting things, the way you’re making it clear to users what’s behind the content. Like what the sources are, all of these things. All of that kind of plays in.” John Mueller, Google 2021

I thought it was a potential issue at least, and it looked to me like a familiar direction from Google.

The Google Creator Summit

In 2024, Google invited many creators to the Google Creator Summit.

To be factual, Google held their hands up:

“We’ve got to do a better job for these creators where their hearts are in the right place. Really need to do a better job for them.” Danny Sullivan.

“For the people who have been impacted, especially the HCU sites or whatever (EDIT – note, the “whatever” because HCU is now part of CORE since March 2024 alongside other SPAM algorithms that all work in concert with one another) that are ones where their hearts are in the right place… I really do feel that we’re going to keep, and I hope that we’re going to keep trying to do better for that special group.”

and:

QUOTE: “According to Danny Sullivan, my “content was not the issue” when referencing the Mountain Weekly News so don’t let the HCU update make you automatically question your content. In fact it was clear all the website creators that were in attendance simply got caught up in an algorithm update, nothing more, nothing less. You’re content doesn’t suck, if it did you wouldn’t be reading this. Even if you managed to lose traffic during HCU update.” Mike Hardaker

Long story short, Google says that the traffic collapse has little to do with small publishers’ content or SEO, that you should not hire an SEO to fix it, and that it was generally about THEM (Google), not you (the independent publisher), so to speak.

The attendees of the Creator Summit were far from satisfied, I think it fair to record.

Can the Google HCU penalty be lifted or fixed?

“Websites can regain traffic by improving quality, but returning to pre-update levels isn’t realistic.” John Mueller, Google

Yes, we have been told so, but we have also been told that recovery does not map back to a single change you can make on your website, and that not all of your traffic is necessarily coming back.

QUOTE “Assuming a site hit by HCU in 2023 has fixed everything that caused the sitewide classifier to be applied, what is the timeframe for the site to start climbing again?” – OP

To be exact:

QUOTE “Fix” is hard to say when it comes to relevancy, but I’d assume bigger changes would be visible when the next CORE UPDATES happen.” JM, Google

Also:

However, most folks’ experience as you can see is:

QUOTE “We are many that would really love a reply to why not a single HCU-hit site has begun climbing again and why new articles won’t rank.” Q2

Indeed, it was the claim that “not a single HCU-hit site has begun climbing again and why new articles won’t rank” that piqued my interest in finding out what the issue is.

“I expect if we see incremental improvements, that might be reflected in incremental ranking changes.” Danny Sullivan

Another quote from Google, though, that I think is pertinent:

QUOTE “These are not “recoveries” in the sense that someone fixes a technical issue and they’re back on track – they are essentially changes in a business’s priorities (and, a business might choose not to do that).” Google, John Mueller

As a technical SEO, I can confirm from my end (from looking at charts and technical audits) that Google’s spokesperson John Mueller looks as if he was telling that part straight.

If you bought a technical SEO audit to fix HCU, it is useful, but it’s nowhere near enough to help you until you fix the deeper issues I go into here regarding “Helpfulness”, a known QUALITY SIGNAL in Google’s algorithm.

Technical SEO is not the primary problem.

Content isn’t even the primary problem, Google has specifically said.

Despite my attempts to focus on on-page content, I couldn’t get away from something I detected that was more evident in later Google updates, notably the October 2023 Spam Update.

This update seemed to hit sites already impacted by the HCU harshly.

I ended up leaving the September HCU content analysis until I had an idea on what the October 2023 SPAM update was all about.

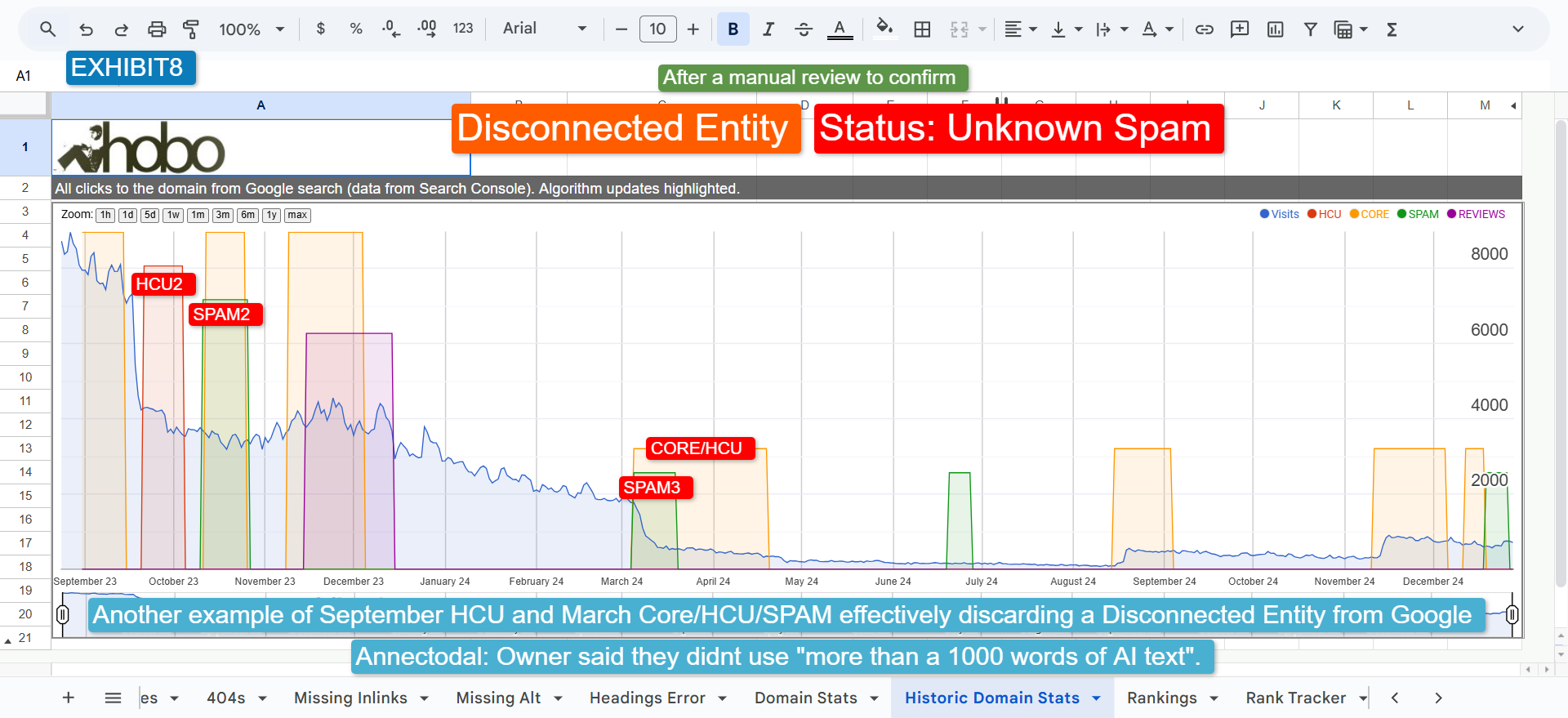

From that analysis, I came up with the Disconnected Entity Hypothesis (which I can certainly pinpoint to the month after Sep 2023 HCU but not quite to HCU directly).

A Disconnected Entity is, by definition, Unhelpful Content. Given that the entity is in this shape, and that Trust is the most important factor of E-E-A-T, it looks to me as if A LACK OF TRUST is the biggest lever (or at least one of them) to decimate anyone’s rankings.

All roads led back here.

It got me thinking…

What if these guys didn’t lose ALL their traffic to AI?

There was a recent Bloomberg article focusing on AI Overviews and the like. I constantly wonder if the whole “AI stole my traffic” story is a (useful to Google) red herring in any investigation into this traffic apocalypse.

These sites didn’t lose ALL their traffic to AI, they were decimated in a regimented fashion by SPAM updates.

From the article:

“The company has long emphasized that website owners should focus on making content driven by experience, expertise, authoritativeness and trustworthiness, or “EEAT,” as the set of guidelines is known to publishers. This means creators should expect that Google would prioritize travel sites where the writers had actually visited the destinations they featured.”

It is wrong to think that Google would ONLY “prioritize travel sites where the writers had actually visited the destinations they featured”.

It is evident that it isn’t working like that.

You need to understand the question: Exactly which “entity” is vouching for this “author” who visited this place? Which “entity” is Google vouching for here, when it sends a user off Google?

“In the absence of clear answers from the company, creators have struggled to discern how much of their pain is due to AI Overviews and how much is due to other changes in search.”

This is unfortunately 100% correct.

And it is exactly what I am interested in delineating.

And I’m not saying AI Overviews are not impacting traffic. They are (though I don’t have access to data for any of that).

I am asking whether a case can be made for something ELSE happening under the hood. Something Google has declared in policies and guidelines?

I’m also saying the people MOST impacted, decimated since HCU, LOOK TO ME as if they are being targeted by spam algorithms.

I am also saying I am an experienced SEO of 20 years, and I still can’t tell if this is correlation or causation, like most things in SEO.

Google’s take on most of these claims is somewhat opaque as usual – these sites are not representative of the web, and AI Overviews send lots of traffic to diverse sites and so on.

So let’s focus on what Google says first:

TRUST as the foundation of E-E-A-T

Google explicitly identifies trust as the most crucial factor within its E-E-A-T framework:

“Of these aspects, trust is most important.” Google 2025

Danny Sullivan confirms helpfulness as essential:

“Quality signals-like helpfulness-matter more, and raters help us measure that.” Google 2025

Google further underscores authorship transparency:

“Something that helps people intuitively understand the E-E-A-T of content is when it’s clear who created it… We strongly encourage adding accurate authorship information.”

Failure to transparently display authorship, ownership, or editorial oversight fundamentally impacts trust and helpfulness, negatively affecting rankings.

Let’s focus on TRUST as the foundation of HELPFULNESS.

By Definition, sites that lack Trust, lack E-E-A-T and are SPAM.

You cannot have “helpful content” if you fail this Quality Signal – Helpfulness – which is on the whole based on TRUST – no matter how good your content really is, or even how good your links are.

If a site fails to clarify ownership, authorship and responsibility (bearing in mind the site itself CAN BE AND OFTEN IS the “PRIMARY AUTHOR”), intent, or user value, IN FULL and APPROPRIATELY, it COMPLETELY violates Google’s helpfulness standard FULL STOP.

No need to even ANALYSE THE ACTUAL CONTENT on the page!

I hear if you go to Brighton SEO this week you are told to fake EEAT with fake profiles! That’s an extremely grey area! Black even!

I remember a story from two decades ago where a black hat was building links to a fake DR Buckaki profile. You can guess where those links got redirected to!

Imagine EVEN a site with 100 real doctors on it but no information about the creator or entity responsible for the site itself. In Google’s world, those doctors aren’t REAL doctors, at least as far as ranking the content from THAT site is concerned, regardless of links to the site. Given time, of course.

It doesn’t matter who the authors are at this point, or the quality of their content.

Quality Raters and Ranking Impact

Cyrus is a great SEO, and very insightful:

That was Search Liaison replying to a point I posed to Barry from Seroundtable.

The quality raters do not affect rankings “DIRECTLY” but I will show you entirely why this is a MOOT point, anyway.

It doesn’t matter if these sites at any time were marked “low-quality” by an actual manual reviewer at any point.

OR DOES IT?

Well, it’s impossible to confirm this outside of Google.

Still, even then it is a moot point.

Google Statements

- “We use responses from Raters to evaluate changes, but they don’t directly impact how our search results are ranked.”
  - Context: This statement from Google’s official support page emphasizes that quality raters provide feedback to assess the effectiveness of proposed algorithm changes, but their ratings are not used as a direct factor in determining search result rankings. This underscores the indirect nature of their impact, as the feedback informs future updates.
- “The ranking systems are not site specific. We’re not going through and boosting a particular site or lowering particular site.”
  - Context: In this interview, Sullivan clarifies that quality rater feedback is used to improve the overall ranking system rather than to adjust the rankings of individual websites. This reinforces the idea that their influence is indirect, as it contributes to general algorithm improvements that affect all sites over time.
- “If you want a better idea of what we consider great content, read our raters guidelines.”
  - Context: Sullivan suggests that understanding the quality rater guidelines can help content creators align with Google’s standards for high-quality content. While this doesn’t directly boost rankings, it indirectly benefits sites by preparing them for future algorithm updates, as the guidelines reflect what Google values in its ranking systems.
- “Google will continue to try to reward good content and Sullivan said Google’s systems could be improved in some areas.”
  - Context: This statement highlights Google’s ongoing effort to refine its algorithms based on feedback, including from quality raters, to better reward high-quality content over time. It implies that quality rater feedback plays a role in identifying areas for improvement, which indirectly affects rankings through subsequent updates.
- “The web changes. Content changes. People’s expectations change. That’s why we keep looking at ways to improve the search results we show.”
  - Context: Sullivan explains why Google continuously updates its algorithms: to adapt to evolving content and user expectations. Quality rater feedback is part of this process, helping Google identify areas for improvement, which indirectly influences how rankings evolve.
- “Quality raters do not directly influence rankings; their guidelines are about checking if results are relevant to the query.”
  - Context: This statement reinforces that quality raters’ work is evaluative rather than punitive or rewarding for specific sites, focusing on relevance and usefulness, which indirectly informs algorithm improvements.
- “Quality raters evaluate changes to Google’s search algorithms but do not affect individual websites.”
  - Context: This aligns with Sullivan’s point, emphasizing that raters’ feedback is about system-wide performance, not site-specific rankings, thus having an indirect impact through algorithm updates.
- “Valid HTML code and typos are not ranking factors; genuine website quality matters.”
  - Context: While not directly about raters, this underscores what raters prioritize: content substance over technicalities, which indirectly influences how Google evaluates quality in its algorithms.
- “Quality raters rate AI-generated and scaled content as low quality.”
  - Source: Google On Scaled Content
  - Context: This shows how raters’ feedback influences algorithm priorities by flagging low-effort or spammy content types, which can lead to updates that indirectly affect rankings by deprioritizing such content.
- “Referring to quality rater guidelines can provide insight into creating good content.”
  - Context: Like Sullivan, Mueller encourages using the guidelines as indirect guidance for creating high-quality content, which can align with future algorithm updates and indirectly benefit rankings.

There’s a misconception that if something doesn’t affect you directly, it doesn’t affect you at all.

Logic dictates otherwise.

Something can affect you indirectly through a series of causative steps.

For example, quality raters might not touch your rankings, but their evaluations help shape algorithm updates, affecting your site.

This indirect chain of impact is very real.

If raters shape how algorithms judge quality, and algorithms determine rankings, then rater input, though indirect, is effectively part of what affects you.

The latest quality rater guidelines specifically tell raters to look for expired domain abuse. In short, that’s because SPAM algorithms are designed to impact these expired domains with markedly different intent to the previous owner – to disconnect them – from Search.

Forget about the Rater guides, it’s in Google’s Official Documentation

It’s not as if Google is hiding this, and Search Liaison kindly linked directly to it in his reply to me.

That standard is described directly in Google’s official documentation; the quality rater handbook just expands on the points made:

Helpfulness as a Quality Signal

- “Content should be created primarily to help people … Ask yourself if your content is clear about who created it, why it exists, and how it’s useful to your visitors.”

Context: Lists three elements that define “helpfulness”: who, why, and how. These are assessed by raters as indicators of content quality.

Source: https://developers.google.com/search/docs/fundamentals/creating-helpful-content

Google will not EVER classify opaque PRIMARY AUTHORS as “HELPFUL” – and that has ranking consequences via algorithm updates shaped by rater insights.

Your content is UNHELPFUL if it is not “clear about who created it, why it exists, and how it’s useful to your visitors”.

FULL STOP.

Just sticking your name and address on an About page is NOT enough.

Fake EEAT won’t work.

It’s not enough for Google to know your name and address, and that you go up mountains, review games, cook or review films and so on, with pictures of you there with your kids.

Why?

Because, soon if not now, this can all be faked.

The Meaning of “Helpfulness” According to Google

Ironically, Google wants your best content.

I think it does want to create a good ecosphere for publishers, believe it or not.

“No one who is creating really good content, who doesn’t feel that they were well rewarded in this last update should think well, that’s it. Because our goal is if you’re doing good content, we wanted you to be successful. And if we haven’t been rewarding you as well as we should, that’s part of what we hope this last update would do better on. We want the future update to continue down that path.” Google, Danny Sullivan

In Creating helpful, reliable, people-first content, Google outlines what it considers helpful content – and what it does not.

This guidance is intended for creators but is also used internally by raters and ranking systems to evaluate content quality.

Helpfulness is defined by:

- Who created the content
  - Content should clearly state who wrote it, with bylines and links to author bios or About pages. Lack of authorship transparency is considered unhelpful.
- How the content was created
  - Content should include methodology or context, especially if automation, AI, or testing is involved. Disclosures build trust and establish credibility.
- Why the content exists
  - The purpose of content should be to help users. If the primary motivation is search traffic or ranking manipulation, it fails the helpfulness test.

Google’s own words:

“You’re creating content primarily to help people, content that is useful to visitors if they come to your site directly. If you’re doing this, you’re aligning with E-E-A-T generally and what our core ranking systems seek to reward.”

Source: Creating helpful, reliable, people-first content

Google also advises avoiding content that:

- Exists purely to attract search traffic

- Summarises others’ work with no added value

- Is created without true expertise

- Is AI-generated to manipulate rankings

- Promises answers that don’t exist (e.g., fake release dates)

In other words: intent, quality, and transparency matter.

Does Helpfulness Apply to Sites or Just Authors?

The Creating helpful, reliable, people-first content guide from Google mostly focuses on individual pieces of content and their authors.

However, it does reference the site in a few specific places:

- Asking whether the site has a clear purpose.

- Assessing the reputation of the site.

- Evaluating whether people would trust the site as a source.

- Suggesting that content be assessed by someone not affiliated with the site.

Meanwhile, the Search Quality Evaluator Guidelines, used by raters, go much further. They explicitly direct raters to examine:

- Who owns the site?

- The site’s overall reputation.

- Whether the site publishes trustworthy, expert-backed content.

- Whether the site’s design and structure contribute to or hinder the user experience.

So while the Creating helpful, reliable, people-first content document focuses on content-level creation, the quality raters’ framework absolutely considers the site-level entity: its ownership, intent, reputation, and content portfolio.

In fact, I believe this SITE TRUST overrides all other metrics in the end.

Hasn’t Google TOLD us this?

YES. In response to Barry Schwartz and me on X, Google’s Search Liaison even linked directly to the Official Documentation I am talking about, after proclaiming that focusing on this aspect was “Good!”

Helpfulness Requires Purpose: “Why Was This Content Created?”

Google is unambiguous: content should be created primarily to help people, not to manipulate search rankings.

If your site’s content is designed to attract clicks, traffic, or impressions without offering actual value to a reader, it is not considered helpful.

This principle underpins Google’s people-first guidance. The “why” of content creation is not rhetorical; it’s evaluative.

Google’s systems look for intent. If the intent is user benefit, the content aligns with quality. If the intent is visibility, traffic capture, or keyword targeting with no substance, the content likely signals spam.

Likely, if you are using generative AI as more of an auto-completer for your work like I do, you will probably be fine.

If you use it to add 100000 pages to your site with low-quality content targeting what is trending about your topic you will probably get a manual action, or hit by Doorway Page correction algorithms.

STRATEGY: Believe it or not, your site probably has only 1% of the content about you, your business and your expertise that it could have. You should be focusing on the other 99% of content about YOU (e.g. case studies, detailed documentation about your experiences or artwork etc.), not just what’s trending in Google Trends or what competitor keywords you can dig out of Semrush.

Helpfulness Requires Clarity: “Who Created the Content?”

Google explicitly instructs creators to make authorship self-evident. Helpful content discloses who wrote it, provides bylines when expected, and links to author background pages.

Ultimately these authors will be vouched for by the ENTITY that owns the domain, and then if that entity is HEALTHY, Google will vouch for that entity.

If not, the site won’t rank for YMYL terms.

This aligns with the E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) framework, particularly the trust dimension. Trust begins with transparency.

A page that omits authorship details, hides behind a brand that is not “well-established”, or is written anonymously without justification signals lower helpfulness to Google’s evaluative systems, even if the content is accurate, if Google determines users would expect to find out more about the site when they got there.
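As a rough first pass at the “who” question, you can at least check whether a page exposes machine-readable authorship. The sketch below is an illustration under stated assumptions: Google’s guidance talks about bylines and author background pages, and this example treats schema.org `author` and `publisher` properties in JSON-LD as one possible proxy for that. The function name `authorship_report` and the report keys are hypothetical, and a passing result says nothing about whether Google actually trusts the entity behind the site.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self._buffer: list[str] = []
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def authorship_report(html: str) -> dict[str, bool]:
    """Report which authorship/ownership fields appear in a page's JSON-LD."""
    collector = JsonLdCollector()
    collector.feed(html)
    report = {"has_author": False, "has_author_url": False, "has_publisher": False}
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip malformed blocks rather than failing the audit
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                continue
            author = item.get("author")
            for person in author if isinstance(author, list) else [author]:
                if isinstance(person, dict) and person.get("name"):
                    report["has_author"] = True
                    if person.get("url"):
                        report["has_author_url"] = True
            publisher = item.get("publisher")
            if isinstance(publisher, dict) and publisher.get("name"):
                report["has_publisher"] = True
    return report
```

The `has_publisher` field is included deliberately: the argument here is that the entity behind the site matters as much as the named author, so a check that only looks for a byline would miss the point.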

Helpfulness Requires Transparency: “How Was This Content Created?”

The “how” of content creation is a major trust cue. Google recommends openly describing your content production process, especially when automation or AI is involved.

Whether it’s manual research, first-hand testing, or automated summarization, the helpful version of that content includes disclosures, methodology, and context.

Google urges creators to show readers how conclusions were drawn, data collected, or reviews conducted.

This applies even more stringently to product reviews and YMYL (Your Money or Your Life) topics, where unverified claims or unclear sourcing damage both perceived helpfulness and real-world trust.

STRATEGY: The good news is you can use AI to help generate that other 99% of the content you can and should rank for. Think carefully about exactly what is on your website, because that is largely what AI thinks of you. You need to add EVERY SINGLE KEYWORD that is relevant to your business to your site so AI knows everything about you, if you want to be cited in AI Overviews, too.

Helpfulness Requires Quality and Originality

“Unhelpful content is content that’s generally written for search engine rankings and not for a human audience.” Danny Sullivan, Google

Helpful content, by Google’s standards, is original, substantial, insightful, and complete.

It avoids regurgitating existing information without adding unique value. Content should aim to be the definitive resource on its subject, not a thin variation of better sources. This includes:

- Offering new analysis or interpretation

- Avoiding excessive summarization

- Not relying heavily on automation without input

- Outperforming competing pages in scope, insight, or usability

If your content cannot be bookmarked, recommended, or cited, Google implies, it is not helpful. This frames helpfulness as benchmark-worthy quality.

Remember, Google is a links-based search engine.

Helpfulness Requires Satisfying User Experience

Google connects helpfulness to the page experience.

This includes everything from readability and design to performance and layout. Sloppy, rushed, mass-produced, or off-topic content, even if technically accurate, fails the helpfulness standard if it doesn’t offer a good user experience.

Google expects pages to be complete, polished, and satisfying in both form and function.

A satisfying user experience encompasses a LOT not mentioned here, from Ad Density to Technical SEO proficiency.

Helpfulness and YMYL: Higher Standards Apply

Google states that its systems apply even greater weight to helpfulness signals in YMYL topics: those affecting a person’s health, finances, safety, or well-being.

In these cases, failing to demonstrate clear intent, verified expertise, or trustworthy sourcing can disqualify content from being considered helpful, even if it meets baseline technical quality.

Helpfulness is Evaluated Holistically

“We don’t have any specific E-E-A-T factors that we’re checking for, like does the page have a name, photo, phone number, etc. We look at a variety of signals, and they all align with the idea of helpful content.” Danny Sullivan, Google

Google’s systems and raters use a blend of signals to estimate helpfulness. There is no checkbox or formula. Instead, helpfulness is assessed across multiple layers:

- Purpose of the content (why it exists)

- Authorship transparency (who created it)

- Process visibility (how it was made)

- Depth and value of information

- Real-world trust indicators (reputation, accuracy, reviews)

- Overall user satisfaction and clarity

In short, Google seeks content that is made for users and serves them well, not just content that “looks” good to algorithms.

Helpfulness Is Not Optional – It’s Foundational

Failing Google’s helpfulness criteria directly risks visibility, especially during core algorithm updates.

Google’s Rising Helpfulness Standards:

Content must transparently demonstrate:

- Who created it

- How it was produced

- Why it exists

Without clear answers, content misaligns with Google’s goals, causing ranking declines – even indirectly through algorithmic impacts.

Indirect but Critical Role of Quality Raters:

Raters don’t directly influence rankings but significantly impact them indirectly by:

- Evaluating content quality against defined criteria.

- Informing algorithm refinements.

- Shaping Google’s quality standards.

Thus, consistently failing helpfulness standards – even indirectly – harms your rankings.

Google assesses entire sites (entities), not just individual pages. Even high-quality content suffers if the entity itself isn’t clearly defined, transparent, and trustworthy.

The Disconnected Entity Hypothesis framework (or mental model) sorts sites into the following entity types, which help explain why sites commonly decline after updates (e.g., HCU, Spam Update):

- Healthy Entities: Transparent, trusted, clearly defined.

- Disconnected Entities: Quality content but unclear ownership and trust signals.

- Bloated Entities: Excessive low-value content.

- Spam Entities: Manipulative or deceptive practices.

- Unknown Entities: Low-signal or unverified sites.

- Greedy Entities: Trusted domains abusing their reputation.

Small sites aren’t penalized for size, but for unclear, unverified identities. Google’s focus on entity health means sites must clearly define:

- Entity or author responsible.

- Expertise and purpose behind content.

- Clear, verifiable site policies and contact details.

Danny Sullivan emphasizes it’s often “not the content,” but weak entity signals causing declines.

Compliance with Section 2.5.2 – Google’s Core Issue:

Google’s Search Quality Evaluator Guidelines (Section 2.5.2) explicitly require clarity on:

- Entity or owner behind the site

- Author of each content piece

- Verifiable contact and entity details

Missing this triggers negative ratings, even if your content is objectively good.

Why 2.5.2 Compliance is Critical:

Addressing 2.5.2 ensures alignment with multiple critical guideline areas:

- Website Reputation (3.3.1)

- Lowest/Lacking E-E-A-T (4.5.2, 5.1)

- High E-E-A-T (7.3)

- Avoiding Failures (13.6)

To rank effectively, explicitly:

- Publish your legal entity or personal identity.

- Provide clear contact details and address.

- Declare editorial ownership.

- Use structured data to confirm entity details consistently.
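As a rough sketch of the structured-data step above, the snippet below builds a schema.org Organization object carrying the legal name, address and contact details. The property names are standard schema.org terms, but the function name, the company and all values are hypothetical placeholders, and nothing here claims that Google uses this markup in any particular way. The aim is simply to state the same entity details in one place so they stay consistent with your About, Contact and policy pages.

```python
import json


def build_organization_jsonld(name, legal_name, url, street, city,
                              postal_code, country, email, profiles):
    """Return schema.org Organization markup as a JSON string.

    All arguments are caller-supplied placeholders. They should
    mirror exactly what the visible pages of the site say.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "legalName": legal_name,  # registered legal entity name
        "url": url,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        "contactPoint": {
            "@type": "ContactPoint",
            "contactType": "customer support",
            "email": email,
        },
        # Official profiles elsewhere that describe the same entity.
        "sameAs": list(profiles),
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Hypothetical example values.
    print(build_organization_jsonld(
        name="Example Ltd",
        legal_name="Example Trading Limited",
        url="https://example.com",
        street="1 Example Street",
        city="Glasgow",
        postal_code="G1 1AA",
        country="GB",
        email="hello@example.com",
        profiles=["https://www.linkedin.com/company/example"],
    ))
```

Generating the markup from a single source of truth, rather than hand-editing it per page, is one simple way to avoid the name or address drifting between templates.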

Proper entity clarity also supports legal compliance (FTC, data protection), reducing Google’s perceived risk, and strengthening overall trustworthiness and visibility.

So many sites aren’t just failing Google’s guidelines but potentially operating illegally (none of the sites in my analysis were, but it’s easy to fall into grey areas where you are supposed to be complying with regulations, and this all changes depending on a ton of things, like WHAT YOU SELL or PROMOTE, and to WHOM, for a start).

Everything added to a site from a commercial point of view (Affiliate links, Ads, YMYL) potentially takes you into a grey area that leaves you “less protected”.

A content site that adds sponsored posts now needs to comply with the FTC. Selling products? What kind? There are laws and guidelines and best practices for everything.

Google knows this.

And it wants risk out of its SERPs.

HCU Corrects. Spam Discards.

Here’s the main difference I’ve seen between the HCU and the October Spam Update:

- HCU: Corrections to domains abusing topical authority or publishing scaled, unoriginal content although many hit

- Spam Updates: Targeting and demoting entire sites based on trust, identity, or perceived manipulation

If you were hit by both?

It’s not a dip; it’s a termination. And I’ve seen this enough now to say it plainly: you cannot recover unless your entity is defined, transparent, and verifiable.

“If a page only exists to make money, the page is spam to Google.”

Google isn’t just asking who you are (which you might be failing). It’s asking why you exist. And if your answer isn’t compelling, or visible, you’re classified accordingly.

When you ask yourself “How do I start to define this on my site?” and consider what information users “expect” to find on a site like YOURS in particular, things start to become clearer.

For instance, if you sell things, or promote things, aim to comply with laws on dealing with folks’ private data and stuff.

This usually has a domino effect on compliance.

Fixing Content Isn’t Enough – Fix Your Entity

The advice out there to “just improve your content” is surface-level at best. Here’s the deeper truth:

- If Google doesn’t know who’s behind your site.

- If it can’t verify your purpose, ownership, or editorial control.

- If you don’t comply with rater logic or legal norms.

Then your rankings aren’t suppressed – they’re removed.

Because Google can’t and won’t vouch for you.

By definition, I’m afraid a site that fails to meet section 2.5.2, for YMYL terms, CAN NEVER BE A SOURCE OF HELPFUL CONTENT AS GOOGLE DEFINES IT, no matter how hard some Googlers would like for it to be different.

You must have E-E-A-T, demonstrate it, and place it where users expect to see it. This is what defines Entity Health in 2025.

This is not about penalties. It’s about classification. Healthy entity? You can recover. Disconnected entity? You get filtered, but can you recover? I think so.

Unknown or spam entity? You’re toast.

The Helpful Content Update

So the final takeaway is this: If Google can’t vouch for you – you will not rank.

That includes you, flipped and expired domains. In fact, I believe those domains, not publishers, are Google’s entire target in all this.

Google’s documentation, its spokespeople, and its algorithm behaviour all point to the same truth: the search engine rewards well-defined, trustworthy, people-first entities.

If you don’t look like one, you’re not in the game.

With regard to the Search Quality Evaluator Guidelines and the statement that raters do not “directly” influence rankings, I posit that what affects you indirectly affects you directly. Whether or not this is true is a moot point: the very definition of “helpful content” is in Google’s official guidelines.

I still think the Search Quality Evaluator Guidelines are a blueprint for how to avoid being classed as spam by Google’s brutal algorithm updates.

Ask yourself if your content is “clear about who created it, why it exists, and how it’s useful to your visitors”, and whether yours is the type of site where users “expect” to see certain things about you before they trust it.

Source (https://developers.google.com/search/docs/fundamentals/creating-helpful-content).

A site that fails to meet section 2.5.2 of the quality rater guidelines cannot produce helpful content, as Google defines it, although pages on affected sites can certainly rank well for some queries (pages with independent links).

If OWNERSHIP and RESPONSIBILITY are not clearly defined, your site, in its current Disconnected Entity state, cannot ever have HELPFUL CONTENT.

EVER.

There is no bigger lever to be found here when it comes to rankings.

“Quality signals-like helpfulness-matter more, and raters help us measure that.”

– X, April 7, 2025

Being rated low quality by Google (either by algorithm or human quality rater) on a matter like HELPFULNESS will “matter more” than just about anything else.

A site that fails to meet section 2.5.2 is, by definition, LOW QUALITY Unhelpful Content for YMYL terms.

LOW QUALITY has to negatively impact your quality score.

Then, on top of that DISCONNECT in TRUST AND HELPFULNESS, bung trending-keyword targeting (search-engine-first content), Ad density violations, crappy backlinks and affiliate links, and you now have a spam site which is cut from SERPs in updates like the March 2024 spam update!

The Helpful Content Update SURFACES high-quality content by REDUCING unhelpful content.

HCU does not promote HELPFUL content – it DEMOTES UNHELPFUL CONTENT.

It’s just nothing to do with how helpful the individual articles on your site actually are at this point.

The biggest lever is the health, or more accurately the TRUST of the Entity and that’s zero to do with authorship and everything to do with ownership, transparency and clarity.

How helpful the content is gets determined by the site itself, not the author of an individual piece of content.

A lack of EEAT is designed by Google to take you out.

Real-World Observations

Drawing on over 20 years of SEO experience and access to numerous Search Console accounts between 2023 and 2025, I saw a clear pattern emerge after the October 2023 Spam Update.

Sites experienced sharp, targeted declines – not due to spam or AI-generated content – but due to insufficiently defined entities.

This real-world impact led to the formulation of the Disconnected Entity Hypothesis, reinforcing that it’s fundamentally about trust.

Conclusion: Entity Trust defines SEO success – Now, and in the future

Creating helpful content alone isn’t enough.

Robust entity health – clear identity, transparency, and verified expertise – is crucial for sustainable SEO success.

Google’s evaluation now prioritizes entity trustworthiness, making clear and verifiable entity definitions essential.

Conclusion: A site that continually fails to meet the needs laid out in section 2.5.2 of Google’s Search Quality Evaluator Guidelines cannot, by very definition, be a producer of Helpful Content. Content from such domains can rank (sub-optimally) because of other quality factors, e.g. independent links.

Any recovery would start with this basic compliance if that is what is lacking because TRUST is the overarching signal from EEAT, and you can’t have trust without compliance in this area.

Time will tell if sites can recover, but it is worth noting:

“You can’t predict that every site will recover to exactly where they were in September 2023 because September doesn’t exist anymore. And our ranking systems are different, and among other things, our ranking systems are also rewarding other kind of content too, including forum content and social content, because that’s an important part of providing a good set of diverse results.” Google, 2024

The End.

PS – I will be publishing case studies of affected sites over the coming months as we track their progress.
