
Hypothesis

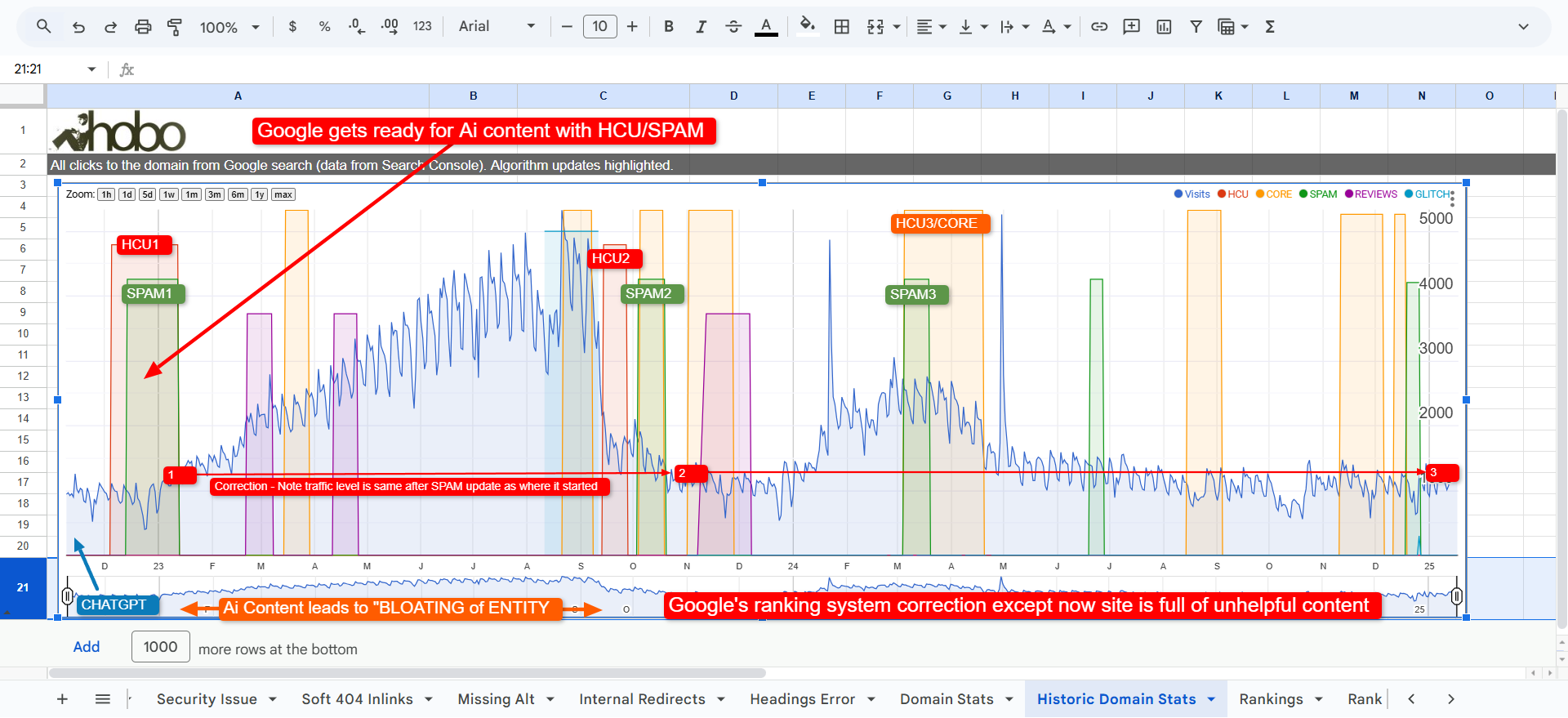

Google’s algorithm updates, namely the HCU (Helpful Content Update) and the regular SPAM and CORE updates, negatively impact sites that follow a search-engine-first content strategy, for instance by using generative AI (like ChatGPT) to artificially boost their visibility on Google.

Working in concert, these corrective algorithms impact most “Unhealthy Entities” and seek to demote them and remove them from Search.

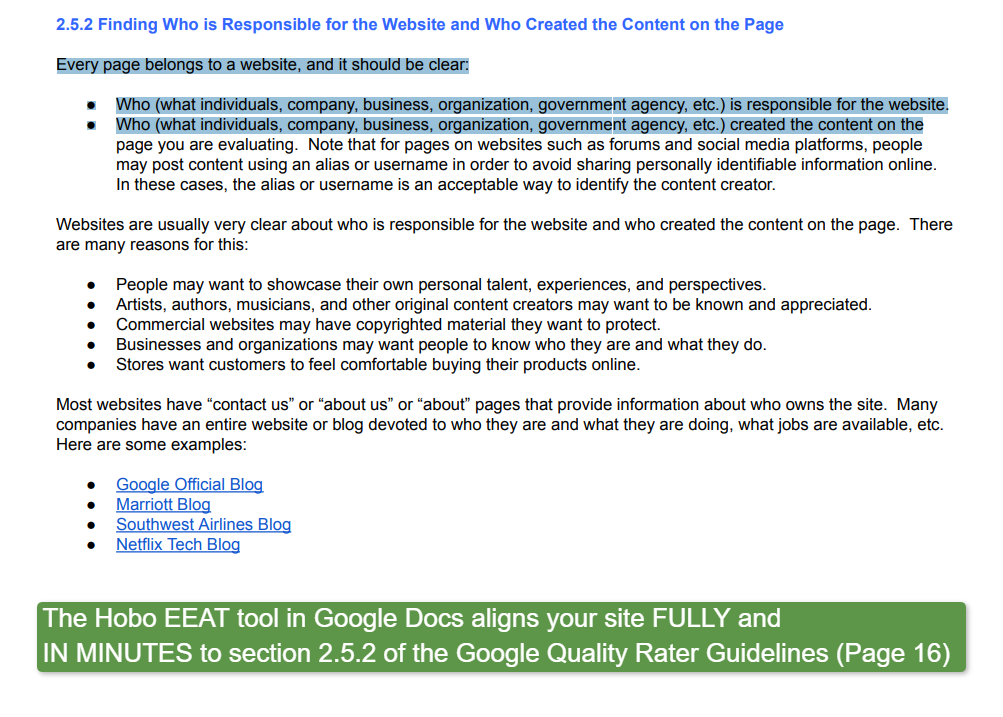

A “Disconnected Entity” is an “Unhealthy Entity”, and it is continually devalued toward UNKNOWN/SPAM status. A Disconnected Entity is a site that fails to meet the standards set out in section 2.5.2 (page 16) of Google’s Quality Rater Guidelines.

Small independent publishers who have not defined their Entity properly, from the ground up, and sufficiently to align with Google’s Quality Rater Guidelines can fall into “Unhealthy” status.

You cannot effectively SEO an Unhealthy Entity, because it will be demoted in Google Search update after update as its quality score dwindles.

If you have been working on a Disconnected Entity since the October 2023 Spam Update and have seen some improvement with each Google Core update in the second half of 2024, this HCU recovery is also probably temporary, even with a lot of work and investment in the site’s content.

In this hypothesis, Google’s updates all do the same thing in this area.

Correct rankings and terminate and discard spam.

Spam, to Google, is an unknown, disconnected, unhealthy entity.

Most of these sites will have been impacted by Google’s Helpful Content Update in September 2023, but MANY will also have been hit harshly in the October 2023 SPAM update.

Getting hit by the October 2023 Spam Update is even more indicative of this hypothesis.

It doesn’t really matter.

Google is no longer vouching for your “entity” in its SERPs.

But WHY?

Small Sites vs Well-Established Brands

Danny Sullivan, Google’s Search Liaison, talks with Aleyda Solis about big brands and small brands:

“Is it fair that only the larger well-established things can do better than maybe the smaller well-established things? No, we don’t want it to be only the very big [read: “well-established”] things rank well.”

He acknowledges how people feel about ranking:

“These large sites or these well-established sites, in some cases, people feel like, ‘Oh, they just can rank for anything.’ Or, ‘I have a small site which will be a small brand by the way; (1). your small site is a brand, (2). you are a brand in a lot of these cases as well.’ And I don’t feel like I can compete.”

He makes it clear that small sites are still brands – being “well-established” is what matters.

Danny calls out the frustration of small sites competing with big ones:

“These are more generic types of publications that have very well-established brands that are recognizable… but their domain of expertise is not really about that particular topic.”

Some brands rank just because of their reputation, or “online business authority”, as Matt Cutts called it over a decade ago, even if they lack true authority on the subject.

He doesn’t shy away from the issue:

“They [big sites] also write about that topic, but they tend to write about everything lately just to get that type of traffic, and they piggyback on being a very well-established brand.”

This is where smaller, niche sites feel pushed out – larger brands can dominate rankings even when they aren’t the best source of information.

The takeaway? Small sites ARE brands, and being WELL-ESTABLISHED is the real key to success.

The challenge is getting recognized for your expertise, especially in a search landscape where bigger names often take priority.

Core Updates

Google announces a few major algorithm updates every year. These are called Core Updates, and when announced, you can expect carnage.

“One way to think of how a core update operates is to imagine you made a list of the top 100 movies in 2015. A few years later in 2019, you refresh the list. It’s going to naturally change. Some new and wonderful movies that never existed before will now be candidates for inclusion. You might also reassess some films and realize they deserved a higher place on the list than they had before. The list will change, and films previously higher on the list that move down aren’t bad. There are simply more deserving films that are coming before them.” Danny Sullivan, Google 2020

That part of the Core Update is “helpful”, but Core updates also come with a lot of punishment and discard algorithms “baked in” too – and these are designed to raise the quality bar to entry and to fend off newcomers ignoring Google’s guidelines.

“In 2022, we began tuning our ranking systems to reduce unhelpful, unoriginal content on Search and keep it at very low levels. We’re bringing what we learned from that work into the March 2024 core update.” Google 2024.

Like most announced algorithm updates, HCU (the correction of topical authority abuse on a domain) is now part of Google Core updates, alongside Penguin (unnatural links), whatever Panda turned into (quality score) and PageRank (a measure of the web vouching for you). I posit that the Spam update terminates unhealthy entities, although this might be part of Core now, too.

“With the core updates we don’t focus so much on just individual issues, but rather the relevance of the website overall. And that can include things like the usability, and the ads on a page, but it’s essentially the website overall. And usually that also means some kind of the focus of the content, the way you’re presenting things, the way you’re making it clear to users what’s behind the content. Like what the sources are, all of these things. All of that kind of plays in.” John Mueller, Google 2021

This is all heavily simplified, of course, in this mental model.

Here is what is pertinent for small publishers.

Google is targeting a certain type of niche site

And taking a lot of good sites with it, apparently.

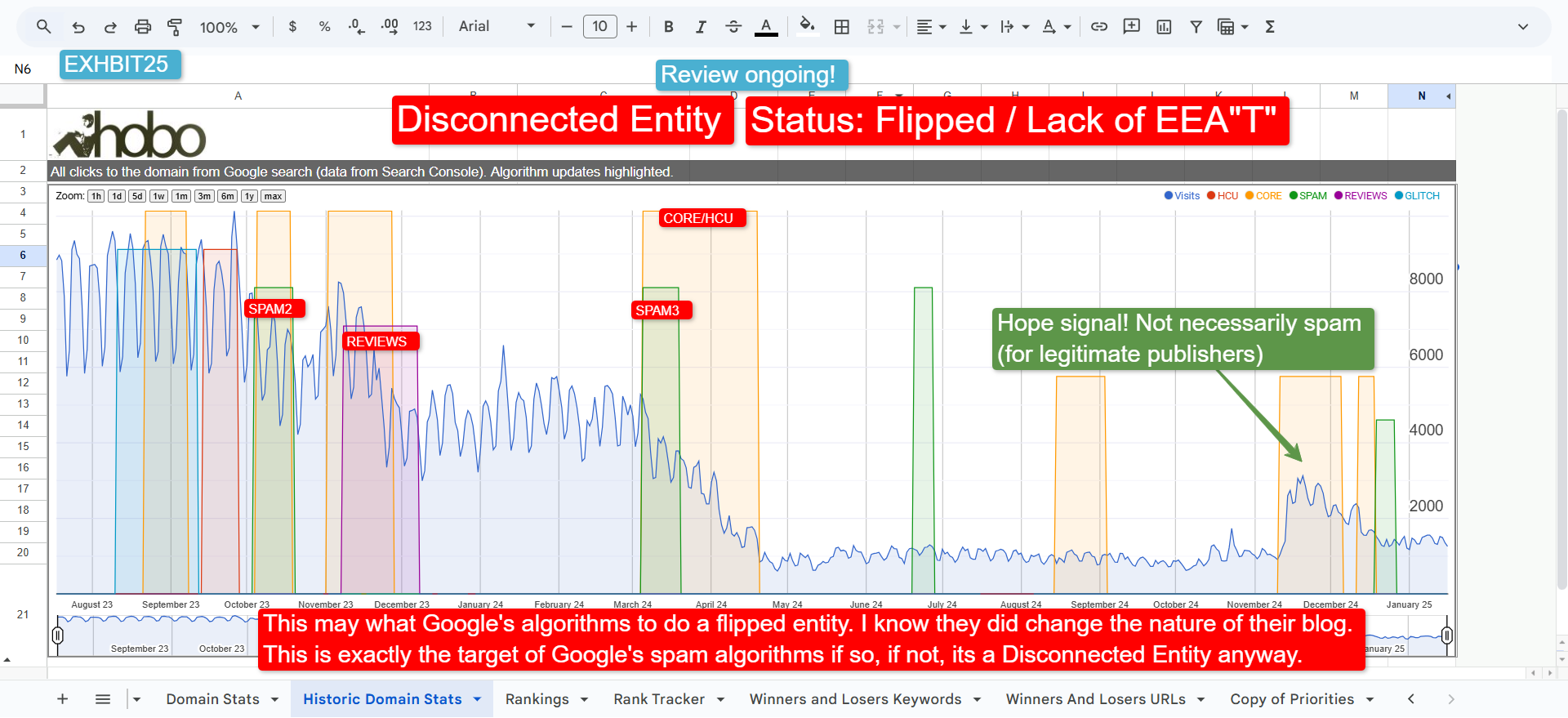

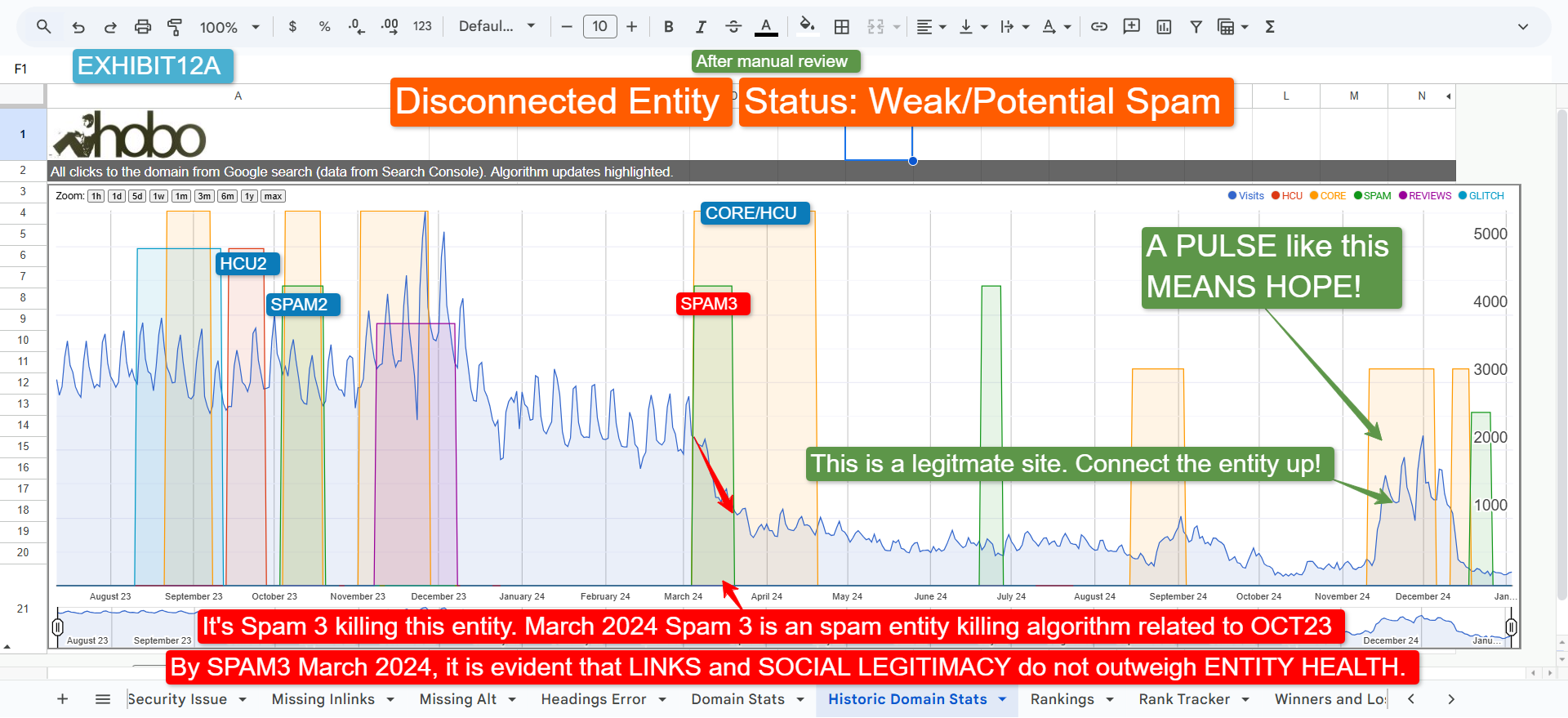

Just LOOK at Exhibit 12a (a publisher “with their hearts in the right places”) and at how closely the Search Console data chart for Exhibit 25 resembles it.

They are almost identical!

At least, Google has noticed.

“And I’ve said, you know, these sites are where hearts are in the right place.” Google 2025

Danny calls out the difference between niche sites with real expertise and those purely for search rankings.

This is incredibly important to understand.

“I want to create a blog just to make a lot of money off of search traffic and I don’t necessarily have my heart behind it, and I don’t really care about what’s involved with it.”

This distinction is key – niche sites that exist only for traffic don’t provide real value, and Google doesn’t want to reward them.

Google has no desire to help the flip-a-site economy.

He directly acknowledges that some niche site owners do have genuine expertise and passion:

“Creators who have their hearts in the right place, even if you think of yourself as a niche blog, understand the difference between that and someone else who’s like, ‘I want to create this thing, and I’m going to flip it on a place I just sell it off. That’s all I really care about, right?'”

The act of flipping a domain could make it a disconnected, unhealthy entity, in this paradigm.

Google isn’t targeting all niche sites. It is filtering out the ones built solely for quick SEO gains rather than true authority, but, unfortunately, those sites look a lot like yours if you are not a well-established entity.

Danny also connects this issue with traditional SEO content strategies that focus more on rankings than value:

“That’s really your more traditional SEO type of thing. That this group of the creators over here don’t care to do that, don’t want anything to do with that.”

Many niche site owners aren’t thinking about gaming the system – they just want to share useful content.

He emphasizes that rewarding authentic creators is a priority:

“We’ve got to do a better job for these creators where their hearts are in the right place. Really need to do a better job for them.”

This is a direct acknowledgement that Google’s updates have affected smaller niche sites, and they want to “fix it”.

At the same time, he makes it clear that Google isn’t going to prioritize niche sites built purely for flipping and monetization:

“For the fly-by-night niche type of thing – sorry, probably NOT going to want to try to do a better job for YOU. Probably shouldn’t.”

The takeaway? Niche sites can succeed, but only if they are built on genuine expertise, passion, and trust AND only if they are well-established.

Sites that focus solely on making money from search traffic without adding value won’t get the same recognition.

KEY TAKEAWAY: Google needs to do better at separating entities whose “hearts are in the right place” but which are not “well-established” from SPAM.

Note that how “well-established” you are (in the context of my hypothesis) is different from “your domain of expertise”.

What a Niche Site Is and What It Is NOT

Danny is clear – not all niche sites are the same. Some are built with passion and expertise, while others exist purely to be flipped for profit.

He acknowledges that some small, independent sites are thriving:

“I see really good content there. I wish they were doing better, but I can also see co-occurring in some of the same queries that I’m given other independent sites that are doing well but that are small sites that are doing the same kinds of things and that they are being successful as well.”

In other words, well-established niche sites can still succeed. Other well-established sites are doing just fine.

But he draws a line:

“A niche site is not one you are flipping.”

If the goal is quick rankings, fast ad revenue, and a sell-off, that’s not the kind of site Google wants to reward.

Key takeaway: A true niche site is built for the long run, with real expertise and value. A flipped site is just chasing rankings – and Google sees the difference.

There are two types of niche sites.

Google WANTS to show users one type; the other type, Google is NOT vouching for anymore.

And as Danny said, with reference to the September 2023 Helpful Content Update:

“September traffic is not coming back”.

The impact of the September 2023 HCU update

Danny acknowledges the impact of Google’s Helpful Content Update (HCU) on many sites, particularly those run by independent creators:

“For the people who have been impacted, especially the HCU sites or whatever [note the “whatever”: HCU is now part of CORE, alongside other SPAM algorithms that all work in concert with one another] that are ones where their hearts are in the right place… I really do feel that we’re going to keep, and I hope that we’re going to keep trying to do better for that special group.”

He recognizes that not every affected site was low quality:

“These authentic voices that people want to hear… they’re on the web, and they are out there, and I want to see us reward them even more.”

Google knows that HCU has caught some real, valuable sites in its net, and Danny suggests they are working on improvements.

At the same time, he pushes back against low-effort content:

“Part of the reason you’re seeing more of this UGC content is because people feel more comfortable with it or they feel like it isn’t got some kind of ulterior motive that goes with it.”

Your intent matters.

I have another explanation for why Reddit etc. gets so much exposure at the moment (and no, it’s not the secret deal).

SHOWERTHOUGHT: If you were clearing “Unhealthy Entities” out of the SERPs, you would (1) clear them out, then (2) deal with the “Greedy Entities” already at the top, which now face the prospect of MORE search visibility because Google took out the independent publishers (that’s why we see manual actions for Forbes etc.).

UGC forums would be a good stopgap, buffering against the next wave of “spam” rushing in to fill the gap left by independent publishers with unhealthy entities. Time is a good weapon in Google’s hands for dealing such a blow to spammers.

This is a direct shot at sites that prioritized SEO over genuine usefulness – Google is leaning toward more authentic, firsthand experiences, from well-established entities.

Key takeaway: While it targeted low-value content, even Danny admits some good sites were affected. Google says it’s trying to adjust – but for now, authenticity and expertise matter more than ever.

An underlying point of all this is how you define “content” and “information”.

“The classifier is always running. If it sees a site has reduced unhelpful content, the site might start performing better at any time. IE: maybe someone made a change to reduce this several months ago. The classifier is checking checking checking and basically says, “Oh, I kind of think this has been a long term change” as the assessment shifts. It’s not like you have to wait until the next time the classifier itself might get updated. We do update it from time to time to make it better, but sites that improve could see change independently of that.”

At this point in the journey, we must ask exactly what modifications would signal to Google, in so few months, that a site was on the road to recovery. A long-term change?

That doesn’t sound much like “content” to me, at least as most folk define “content”.

Maybe it isn’t just about “content”?

Useful content is the new helpful content


Danny breaks it down with a real-world analogy:

“I’m a local mechanic, so I’m going to write 25 different things, and I’m an expert local mechanic to do it. Except the mechanic didn’t write it. The mechanic hired an SEO, who wrote it. The mechanic probably didn’t even look at it at all.”

This is the problem – content that looks useful on the surface but isn’t actually created by the real expert.

He calls out the disconnect:

“Then the stuff is not actually helpful to the mechanic’s audience, which is the local business that they serve. It feels weird to somebody else who comes into the page to land on some of these types of things, like, ‘What is this business? Why are you showing me this sort of content?'”

The issue isn’t just that the content exists – it’s that it doesn’t align with what people actually want from that business.

Danny gets to the heart of what useful content really is:

“What I would love is if you’re a local mechanic and you have a passion about fixing things, I would like to just hear your voice – your authentic voice – about what car you worked on or what’s going on or whatever it is. If you’ve got your passion that you want to share, that is what people are wanting to hear.”

This is the real takeaway: Authenticity matters. SEO-driven content written just to rank won’t cut it if it’s disconnected from the actual expertise behind it. Google wants to reward content that comes from real experience, not just content created for the sake of traffic.

While I am not sure the Search Liaison appreciates that your average mechanic doesn’t write blog posts, real case studies that lead to INFORMATION GAIN for a user would be a good content strategy for a business, if the expertise is there.

The other glaring issue is that a lot of independent publishers do this, but it is all for nought if Google thinks you are a weak entity.

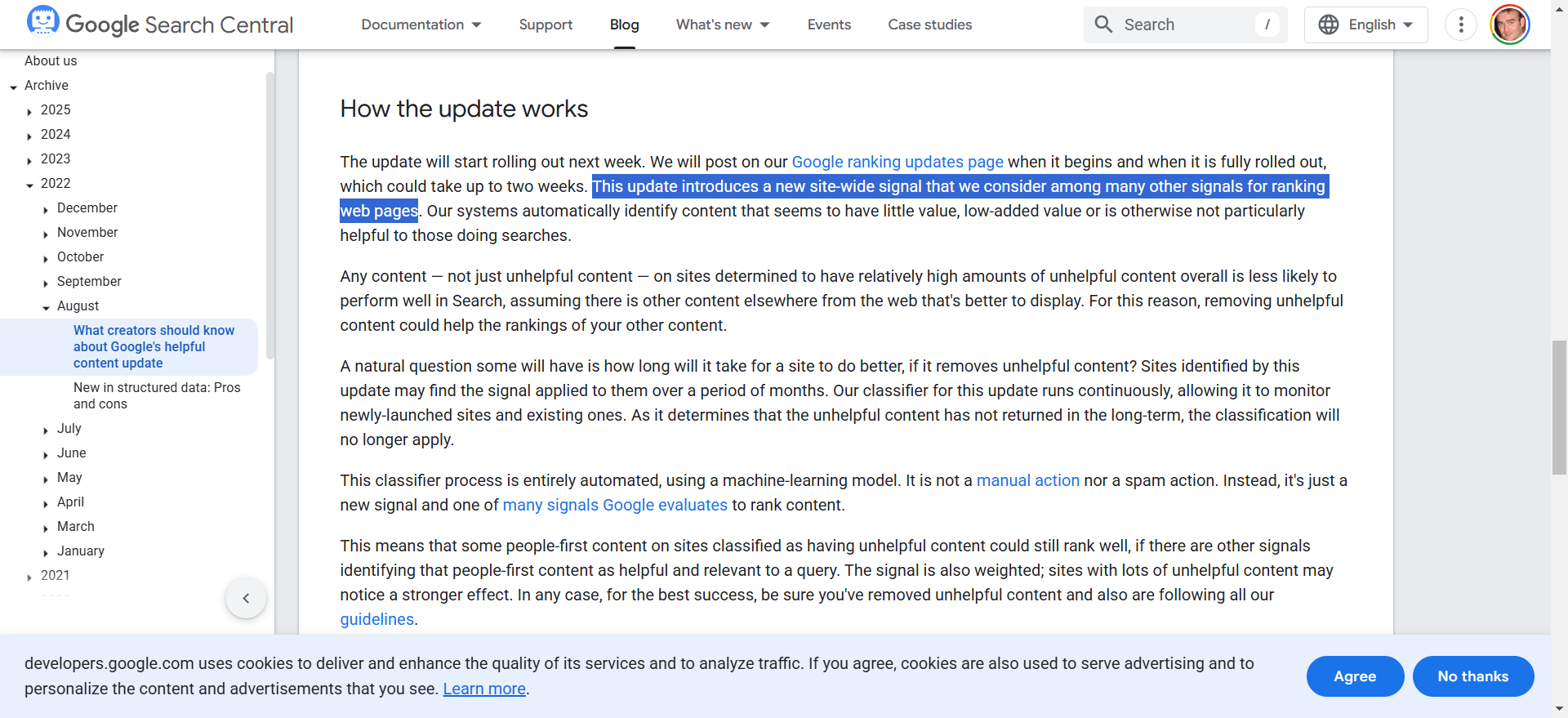

Is HCU a Sitewide penalty?

“There are not site-wide penalties only page-level classifiers” Google 2024

It WAS announced as a sitewide signal, at least.

Even in 2025, Google’s 2022 announcement documentation calling it a sitewide signal can still be found online.

A SPAM label is a sitewide classifier.

If these sites are getting caught up in a SPAM algorithm, then of course it’s a sitewide impact.

And don’t page-level classifiers all add up to a sitewide classifier, signal or score?

A quality score?

Yes.
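To make that roll-up idea concrete, here is a minimal Python sketch under loudly stated assumptions: the 0.0 to 1.0 page scores, the 0.5 “unhelpful” cutoff and the `site_quality_score` function are all hypothetical, and nothing here is Google’s method. It only shows how page-level judgements could add up to one sitewide number.

```python
from statistics import mean


def site_quality_score(page_scores: dict[str, float], unhelpful_cutoff: float = 0.5) -> dict[str, float]:
    """Roll hypothetical page-level scores (0.0 to 1.0) up into site-level figures.

    Mental model only: Google does not publish whether or how it combines
    page-level classifier outputs into a sitewide signal.
    """
    if not page_scores:
        raise ValueError("need at least one page score")
    scores = list(page_scores.values())
    # Pages under the (assumed) cutoff count as "unhelpful".
    unhelpful = [s for s in scores if s < unhelpful_cutoff]
    return {
        "mean_score": mean(scores),                       # average page quality
        "unhelpful_share": len(unhelpful) / len(scores),  # fraction of weak pages
    }


pages = {"/guide": 0.9, "/review": 0.8, "/ai-post-1": 0.2, "/ai-post-2": 0.3}
print(site_quality_score(pages))
```

With two strong pages and two weak AI posts, the average lands at about 0.55 and half the site sits in the “unhelpful” bucket, even though the good pages themselves did not change. That is the sense in which page-level classifiers can feel sitewide.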

Take this from Google:

JOHN MUELLER: “Then maybe that’s something where you can collect some metrics and say, well, everything that’s below this threshold, we’ll make a decision whether or not to significantly improve it or just get rid of it.”

Maybe Google does the same.
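On your own site, Mueller’s suggestion can be sketched as a simple threshold filter. This assumes you have exported per-page clicks (for example from Search Console, via Google Sheets); the `PageMetrics` and `pages_to_review` names and the 10-click threshold are hypothetical, and the output is a review list for a human, not an automatic delete list.

```python
from dataclasses import dataclass


@dataclass
class PageMetrics:
    url: str
    clicks: int  # e.g. clicks over the last 12 months, exported from Search Console


def pages_to_review(pages: list[PageMetrics], min_clicks: int = 10) -> list[str]:
    """Return URLs below the click threshold, weakest first.

    These are candidates to "significantly improve or just get rid of";
    the threshold is an assumption to tune to your own site.
    """
    weakest_first = sorted(pages, key=lambda p: p.clicks)
    return [p.url for p in weakest_first if p.clicks < min_clicks]


pages = [
    PageMetrics("/pillar-guide", clicks=1200),
    PageMetrics("/thin-ai-post", clicks=2),
    PageMetrics("/old-news", clicks=0),
]
print(pages_to_review(pages))  # ['/old-news', '/thin-ai-post']
```

A single metric is deliberately crude here; in practice you would likely look at several before deciding whether to improve or remove a page.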

A Quality Score

Nice work, Mark Williams-Cook.

If your QUALITY SCORE, for instance, takes a drop, that’s going to impact you across the entire site. It will look like a penalty, too (and in effect it is).

A low quality score means you will not rank in the top listings in Google.

You will not be able to win featured snippets, and you will not appear in all of Google’s enhancements in the SERP.

These benefits are reserved for sites that Google “trusts” and that have a high quality score, and it looks like that does come down to a number somewhere.

Was it ever primarily about “content”?

QUOTE: According to Danny Sullivan, my “content was not the issue” when referencing the Mountain Weekly News so don’t let the HCU update make you automatically question your content. In fact it was clear all the website creators that were in attendance simply got caught up in an algorithm update, nothing more, nothing less. You’re content doesn’t suck, if it did you wouldn’t be reading this. Even if you managed to lose traffic during HCU update.

Hang on a second, “content was not the issue”?

“We’re now 13 months since the HCU update rolled out, I don’t believe a single website has recovered.”

And this was backed up by other attendees of the Google Creator Summit 2024.

It’s not independent publishers’ content the Google Helpful Content Update was targeting?

And it wasn’t about SEO, Googlers said that, too.

It probably wasn’t the ads either, or surely a Googler would have raised it in front of the attendees, even knowing that they can’t offer individual help like that.

A wink, or a cough or something…

The sites I checked had no serious technical SEO issues, either, beyond poor UX and poor Ad density.

Not enough for a complete traffic slide.

Verification

“You have good content. You are good creators…. But they really wanted to go off on different tangents about setting up a profile or getting you verified”. Morgan, of Charleston Crafted, testified.

Now, that is interesting.

Verified.

“I feel they were going down the wrong trail… a Google Profile, a bio….” Morgan.

Because it aligns with my hypothesis, too.

A lack of verification of the entity responsible for the domain.

This article began as an analysis of HCU, and during my analysis I just couldn’t get away from the fact that most punishment algorithms seemed to do one thing.

To a certain type of site.

Terminate them.

What I am saying is that the Google HCU might not be entirely about helpful content in the sense you might think (although it is, in part), and even this article might not end up being only about HCU (it isn’t).

Ultimately, this is about spam.

It is about the health of Entities.

The noise is largely from collateral damage: small publishers who are often (not always, but often) Bloated, Disconnected or Weak Entities.

You don’t hear much from the spammers.

The good ones, at least.

The Unhealthy Entity Hypothesis

Every entity has a lifecycle.

The health of an Entity is the defining metric that governs SEO Success in 2025.

Do note that Google doesn’t use these labels in its documentation.

This is a mental model (the way E.E.A.T. is a mental model) to understand what is happening in Google with these updates.

I made all these terms up by looking at TRENDS and the sites in question.

I’ve been an SEO for over 20 years.

I did not use third-party tools to come up with this. For every site I looked at (about 100, with all kinds of different issues), I had access to Search Console.

I used one tool.

Google Sheets.

This is what it LOOKS LIKE, to me.

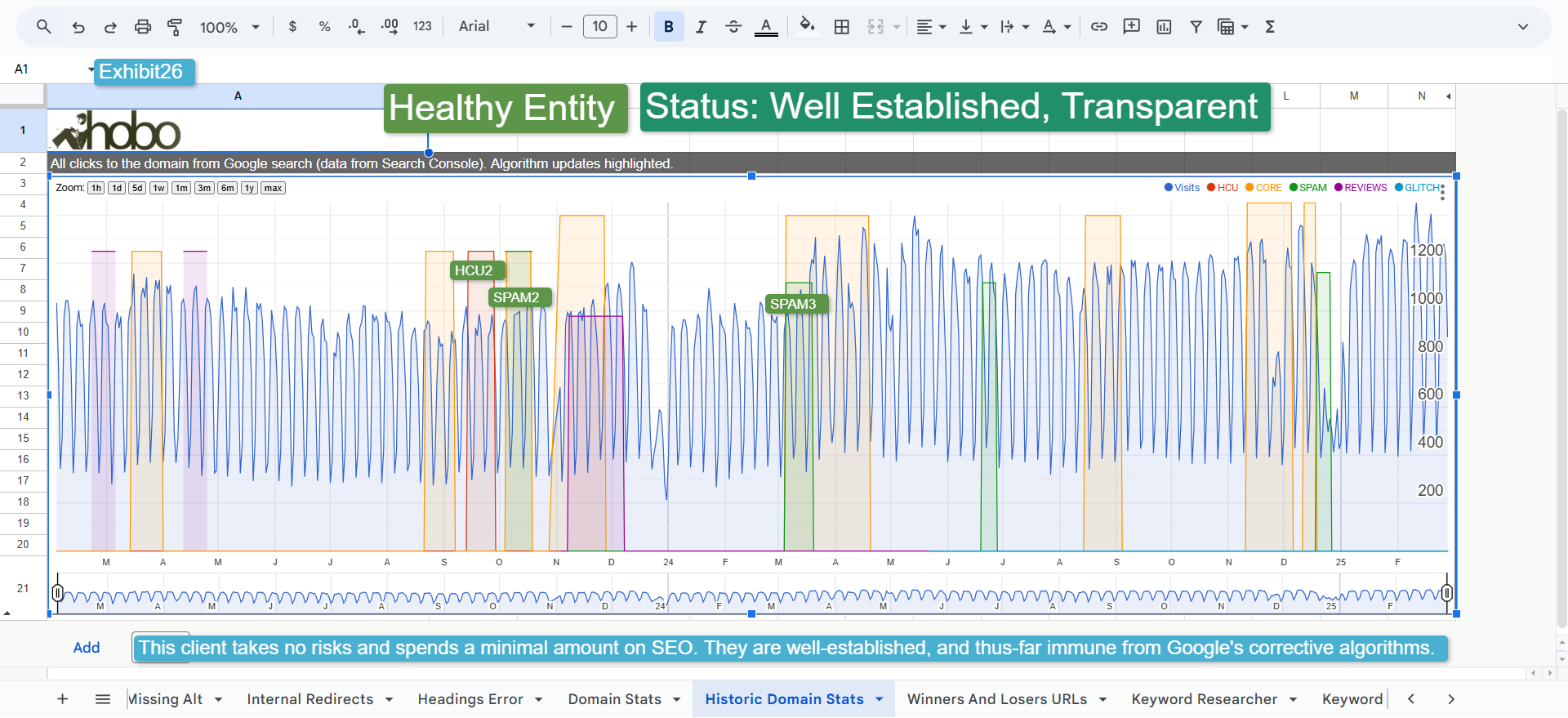

A Healthy Entity

A Healthy Entity has a domain attached to it that is NOT buffeted by Google’s algorithm updates.

SEO works as expected.

Depending on your metrics, (1) the web vouches for you (links), and (2) Google vouches for you by showing your site to its users.

Google vouching for you here is a big takeaway.

In the past, it let the web vouch for you on its own, and if you had good links, you ranked.

Your “quality score” is good, and increasing, all using sensible SEO practices.

In fact, when things are going well, you think SEO is easy.

Google wants all sites in its index to have a “Healthy” status.

And note how Google talks about Entities.

So, the first mental model is to believe that Google wants Healthy Entities in its index; otherwise, why would these things be in the Quality Rater Guidelines in the first place?

A SERP with healthy entities ensures Google’s users are PROTECTED because Google is SENDING USERS to sites that demonstrate TRANSPARENCY and RESPONSIBILITY – TRUST.

The T in EEAT.

“E-E-A-T is not for creators, it’s for your readers” Google 2024

EEAT is also easier to calculate for sites that are well-established (bearing in mind that most of it is about links to your site from trusted sources, and Google’s users seeking your site out using Google Search and being SATISFIED with the content you are presenting).

How many UNIQUE QUERIES does your site generate?

I’m happy to have a chance to highlight the work of the esteemed Bill Slawski (RIP) here:

Google spokespeople do tell us all this too (now we kind of know):

“One piece of advice I tend to give people is to aim for a niche within your niche where you can be the best by a long stretch. Find something where people explicitly seek YOU out, not just “cheap X” (where even if you rank, chances are they’ll click around to other sites anyway).” John Mueller, Google 2018

For the rest, we rely on SEOs like AJ Kohn to document fundamental SEO mental models, like this proxy for “search success” at Google:

Leaving links aside, these are at least some of the proxies, or mental models, Google uses for quality and search success; that much is undeniable.
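One rough way to answer the unique-queries question for your own site is to count distinct queries from a Search Console performance export and see what share mention your brand. The sketch below assumes you have pasted the query column into a Python list; the “Acme” brand and the queries are invented, and brand share is only a proxy for people seeking you out by name, not a metric Google has confirmed it uses.

```python
def query_profile(queries: list[str], brand_terms: list[str]) -> dict[str, float]:
    """Count distinct queries and the share of them that mention a brand term."""
    # Normalise case and whitespace so "Acme Boots" and "acme boots " count once.
    unique = {q.strip().lower() for q in queries if q.strip()}
    brand_lower = [t.lower() for t in brand_terms]
    # Crude substring match: an assumption that can over-count short brand names.
    brand = {q for q in unique if any(t in q for t in brand_lower)}
    return {
        "unique_queries": len(unique),
        "brand_share": len(brand) / len(unique) if unique else 0.0,
    }


rows = ["acme boots", "Acme Boots ", "best hiking boots", "acme boots review", "waterproof boots"]
print(query_profile(rows, brand_terms=["Acme"]))
# {'unique_queries': 4, 'brand_share': 0.5}
```

Tracking these two numbers over time, rather than as a one-off snapshot, is what would show whether more people are seeking you out.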

A Bloated Entity

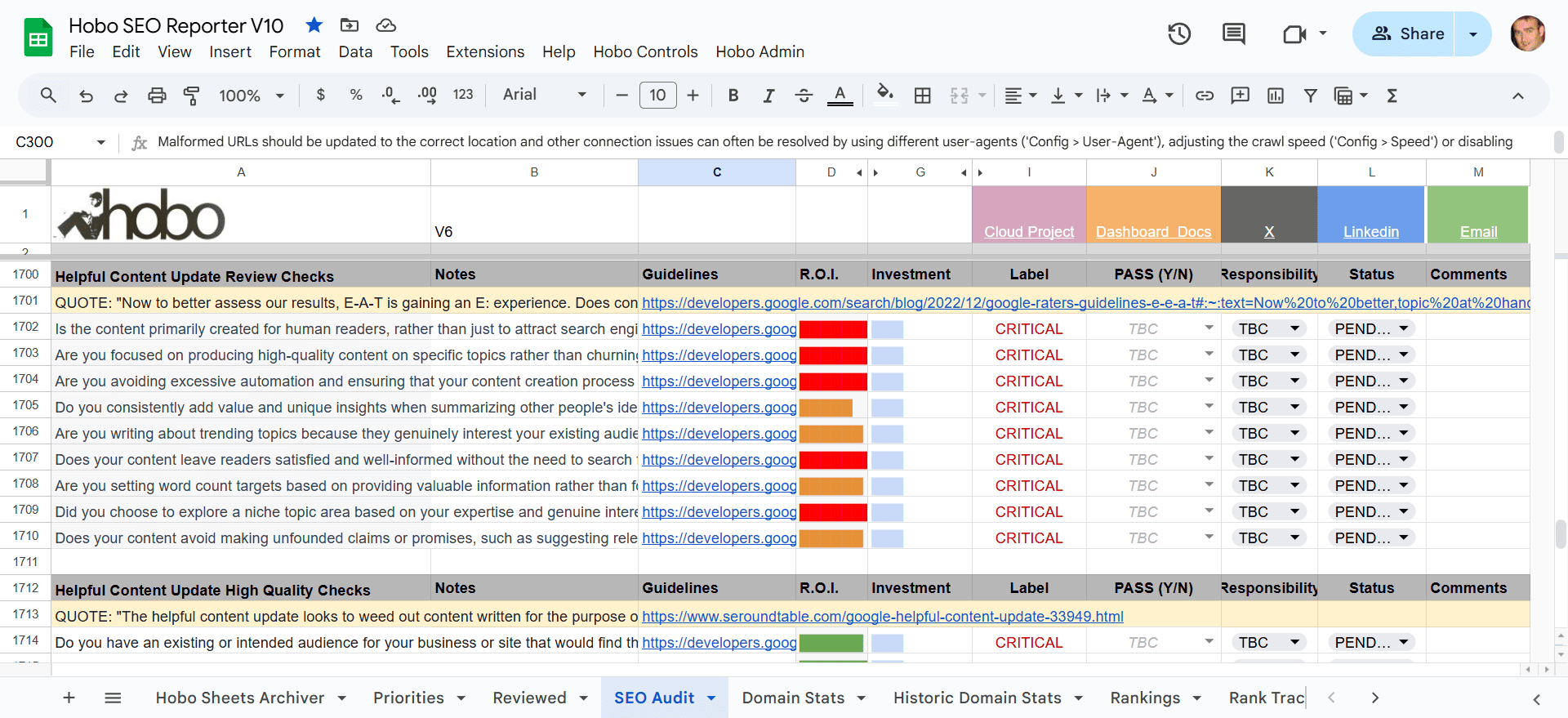

This is the exact thing that the Google Helpful Content Update targets.

Many sites think they have a Healthy Entity until an algorithm update like HCU, SPAM or, now, CORE identifies that they in fact do NOT. Some folk DO take a Healthy Entity and then BLOAT it with AI content, like the example here.

I would call this a BLOATED ENTITY.

The HCU was at least partly to do with what you would call unhelpful content.

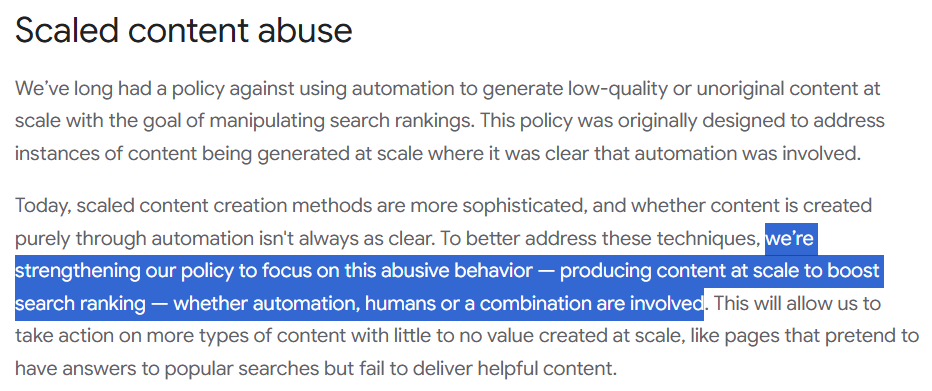

A bloated entity is one where the owner, in their ignorance, has used AI to create text to win Google rankings.

For example, let’s say you have 100 good pages and then publish 1,000 crap AI articles. Depending on how healthy the entity is, you may end up with the same amount of traffic spread across 1,100 pages (i.e. you are spreading the same amount of traffic around a wider set of pages).

QUOTE: “Usually you can’t just arbitrarily expand a site and expect the old pages to rank the same, and the new pages to rank as well. All else being the same, if you add pages, you spread the value across the whole set, and all of them get a little bit less. Of course, if you’re building up a business, then more of your pages can attract more attention, and you can sell more things, so usually “all else” doesn’t remain the same. At any rate, I’d build this more around your business needs – if you think you need pages to sell your products/services better, make them.” John Mueller, Google 2018

That’s probably less traffic to your core pages if you think about it.
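To put rough numbers on the 100 good pages plus 1,000 AI articles example, this sketch holds total traffic flat (as in that example) and shares it out in proportion to a made-up page weight. The 10,000 visits and the 0.1 weight for each AI page are hypothetical, and `core_visits_after_expansion` is an illustrative name; real traffic is never split this neatly.

```python
def core_visits_after_expansion(total_visits: float, core_pages: int, added_pages: int,
                                added_page_weight: float = 1.0) -> float:
    """Visits left for the original core pages when total site traffic stays flat.

    Simplifying assumption: traffic is shared in proportion to page weight,
    where each core page has weight 1.0 and each added page has added_page_weight.
    """
    total_weight = core_pages + added_pages * added_page_weight
    return total_visits * core_pages / total_weight


before = core_visits_after_expansion(10_000, core_pages=100, added_pages=0)
equal_share = core_visits_after_expansion(10_000, core_pages=100, added_pages=1000)
weak_ai_pages = core_visits_after_expansion(10_000, core_pages=100, added_pages=1000,
                                            added_page_weight=0.1)
print(round(before), round(equal_share), round(weak_ai_pages))  # 10000 909 5000
```

If the new pages pull as much as the old ones, the 100 core pages fall from 10,000 visits to about 909; even if each AI page pulls only a tenth of a core page, the core pages still lose half their traffic.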

That’s another example of Google not penalising you. You penalise yourself.

Google probably wants you to clean this up, or it knobbles your ranking in some way, for your insolence.

The September 2023 HCU hit a lot of these types of sites HARD.

In simple terms, AI is the new thin content, when used to target search visibility. AI content, or even any type of topic expansion around, for instance, doorway-type pages, will be corrected. It always has been.

You need to be very careful with how you use AI when creating text for your blog; see my article “Can ChatGPT do your SEO?”

This was an example of a healthy but bloated entity.

A bloated but weak entity is a surefire combination for trouble, though.

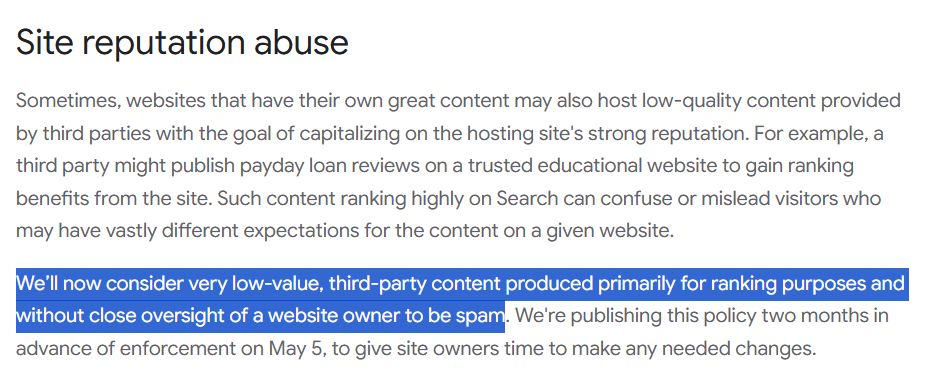

The Greedy Entity

A Greedy Entity is the exact thing that Google’s Site Reputation Abuse guidelines target:

A Greedy Entity, like Forbes, is an entity where the owner has used its health status (and the perks it brings) to rank in Google for lots and lots of keyword phrases well outside its core area of expertise.

SEOs called it Domain Authority, as I mentioned in my 2018 SEO ebook.

A Greedy Entity is usually managed by in-house teams who know a thing about SEO. When a Greedy Entity is mismanaged, it can wander into Google’s new Site Reputation Abuse guidelines.

“Site reputation abuse is the practice of publishing third-party pages on a site in an attempt to abuse search rankings by taking advantage of the host site’s ranking signals.” Google 2025

It can pick up Parasites, too.

Parasite SEO

Parasite SEO (I like that name) is the art of Site Reputation Abuse (SRA).

In simple terms, Parasites live off Healthy Entities and take advantage “of the host site’s ranking signals”.

Parasites need Healthy Entities to survive (as does Google), and they essentially publish third-party pages to a host, which is the definition of SRA.

When Google raises the quality bar for sites that were previously winning, the Greedy Entity gains MORE ranking ability in this system.

The only way to deal with these sites, evidently, is manual actions; otherwise you have just cleared the way for big entities like Forbes to suck up all the space the small independent publishers were just cleared out of (and, of course, for the new spam that takes Google months to catch up with).

Perhaps, as I said (deals with Reddit aside), that is why there are so many forums in Google for the time being.

To suppress spam, ironically.

At least we know where the traffic went.

The Unhealthy Entity

This is the exact thing (amongst others) that the Google Spam Update targets.

Light spam signal. Google starts vouching for you less, for YMYL topics.

To Google, an “Unhealthy Entity” is a site that has moved (1) from a healthy to an unhealthy status, and (2) onto a journey culminating in being flagged as SPAM by algorithms.

This will happen OVER TIME (sometimes over years) to LEGITIMATE independent publishers when an Entity DECOUPLES from what made it HEALTHY in the first place, or, as happened at the end of 2023, when Google raises the barrier to entry for sites featuring in its SERPs and relatively new players (post-Panda) start to feel the real effect of Quality Score on their domains.

Think of a business that has gone through a change, but the website has not entirely kept up with that change.

SEO works, but the traffic trend will be DOWN over the years.

Google starts vouching for your site less.

Your quality score is dropping. Indexing new content is not as fast.

(Here’s another page from my 2018 ebook featuring ex-Mozzer Rand Fishkin of SparkToro, discussing Google Quality Score, at a time when it was a hotly debated topic for some).

Google’s algorithms start NEGATIVELY impacting an entity in this shape.

Why?

Well, I can give you one reason.

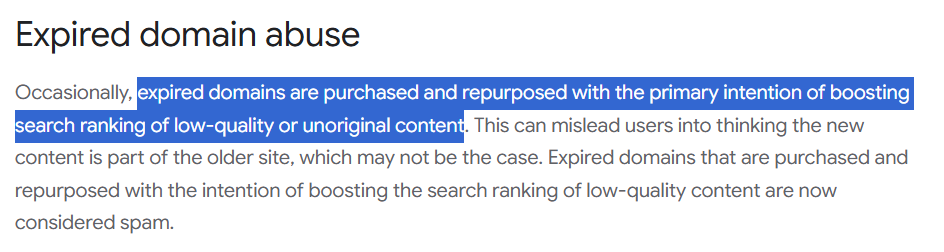

Blackhats go for expired domains.

So does Google:

Expired domains would be in this unhealthy entity phase now because Google knows why Blackhats want these domains – because the web vouches for them, in terms of links etc.

And Google aims to stop that.

With differing levels of success.

Charles is the man for Parasite SEO (kudos to the chap for what he shares). As an aside, he competed with me in some SERPs as a teenager, lol. He is the only person in SEO I’m aware of who had a worse 2012/2013 than me.

Folk like Charles are the exact reason the SPAM algorithm exists, and that domain will soon be classed as an Unhealthy, Disconnected, Unknown and therefore Spam Entity, at worst.

I can predict what will happen to that domain using this hypothesis.

Even high-quality links, if it has them, won’t save the site if it has Entity Health issues.

Note that most folk impacted by HCU would have been “Unhealthy Entities” to Google for quite some time before HCU penalties (or SPAM penalties) took them out.

In fact, some of them have ALWAYS BEEN “Unhealthy Entities” with regard to any sitewide classifier like HCU.

A Disconnected Entity is an Unhealthy Entity.

An expired, or dropped domain, is a disconnected entity.

An independent publisher with no clear entity association is a disconnected entity.

An independent publisher must move away from this classification area to avoid collaterally being labelled as spam.

Takeaway – Don’t look like a spam entity, to Google.

An Unknown Entity

Spam Signal. An Unknown Entity is an entity entirely decoupled from its previous Healthy status, and it is now rated not much better than a spam entity.

The trend will be down. Google starts vouching for you less. Your quality score is dropping. Indexing new content is DIFFICULT.

Google is NOT vouching for you except on narrow terms, brand terms etc. Commercial pages etc are hard to index and keep indexed.

It’s going to be impossible to rank for YMYL terms unless you make your entity “known”.

Kiss goodbye to non-brand keyword traffic, the type of traffic that was never “ours” anyway.

A Spam Entity

Spam Signal. This is the basement. Total traffic collapse. You are spam.

You will always be Spam without a transparent Entity vouching for the domain to Google so Google can vouch for you to its users.

The Disconnected Entity Hypothesis

If you have been impacted by SPAM updates, you are at best (and hopefully, in this paradigm) a Disconnected Entity, and at worst an Unknown or Spam Entity (to be honest, there is not much difference between the last two).

A lot of the Google Creator Conference attendees would be in this camp – Disconnected Entities of varying degrees – I have some of them in my analysis – as shared in Part 1.

Some have good brand signals – including high-quality links – and are treated differently based on those signals, with a slide in rankings over time rather than a sharp drop – but note brand signals are not the ultimate issue here. A “brand” is only a part of an “entity”.

The brand can be well-established in a traditional sense, but the entity responsible for the domain might not be.

Google Creator Conference attendees are real content-creator sites.

Their “hearts are in the right places“.

That’s no longer enough though, is it?

If you do not want your site to die, you had better hope you are a Disconnected Entity and not a Spam Entity.

Recognise whether you are one, and take action.

The onus is on small publishers, not Google.

In fact I believe this is Google actually trying to help folk in some cases.

Some sites track downward for years.

A Disconnected Entity with a Brand Signal – like links and clicks – has some interesting patterns (they mostly still all trend downward though) but the effect is less pronounced than some.

A Disconnected Entity is treated a lot less harshly in Google than a spam entity. Looking at the data for some sites, I think Google is giving folk months, or even years, to sort the issue out.

However, I’d wager a Disconnected Domain is a site with a diminishing quality score, which leads to less traffic from Google over time, because the trust is gone.

What is a Weak or Disconnected Entity?

A Weak or Disconnected Entity is a Domain that is disconnected from either the Entity that garnered the reputation the domain has (think an expired domain),

AND/OR,

in light of Google raising the quality bar in SERPs, and this is a LOT OF INDEPENDENT PUBLISHERS, a Domain that has INSUFFICIENT TRANSPARENCY in connection to the entity that is responsible for the Domain.

AND/OR

The Entity elected as responsible for the Domain is not DEFINED and MANAGED well enough to satisfy a User.

And NO.

This is not JUST about DEMONSTRATING EEAT on your website or adding author bios, although you are naturally doing that already.

This is where most small independent publishers FAIL.

This is why some people don’t think EEAT works. YET when you take a Disconnected Entity and Reconnect it using EEAT principles, it works! In fact, here is another nuance:

Google’s ranking systems: Entity Health Status > Links > Relevance

It does not matter how good your links or content are if your domain is classed as an Unhealthy, Unknown or SPAM entity.

EVENTUALLY, SEO will just turn OFF.

The Health Status of an Entity DICTATES if it will appear in Google at all, even with links.

A disconnected entity is an entity that can be recovered (whereas most other examples on this page cannot).

Independent Publishers build a Brand on a Domain but neglect Entity Transparency.

Indie publishers are great at brand building.

Their content is (when not keyword-led) as good as it gets.

Most of them are better SEOs than most SEOs.

They are often FOUNDER LED, too, which makes interacting with the Brand and its Creators easy.

But this is no longer sufficient on its own.

They build a DOMAIN and then build BRAND RECOGNITION for it, and make it easy to interact with the BRAND on the Domain but NOT the ENTITY RESPONSIBLE FOR THE DOMAIN.

When the Entity responsible for the Domain is not CLEARLY DEFINED and EASY TO INTERACT WITH, the Domain falls under an UNHEALTHY ENTITY classification.

In 2025, it is not enough to have a BRAND recognised by the web if that brand is not part of a HEALTHY ENTITY.

Old SEO = BRAND.

New SEO = BRAND + HEALTHY ENTITY STATUS.

Your brand, like a local business, must be recognisable and stand for something.

Google’s Dilemma

Imagine Google sends a user to a site and something really bad happens. Something criminal, even. The destination site MUST hold responsibility for collecting the USER from Google and keeping them safe.

These are GOOGLE’s USERS at this point.

“Our goal is to surface great content for users. I suspect there is a lot of great content you guys are creating that we are not surfacing to our users, but I can’t give you any guarantees unfortunately. We are focused on things for our users, that is not going to change.” Google 2024

WHY would Google send a visitor to a site where responsibility for the domain is not clearly defined, or where the responsible entity is not easy to interact with?

On such a site, who is responsible for the domain is not clearly verifiable and, more importantly, that entity cannot easily be interacted with.

Mix of Entity Health in SERPs

At any one point, Google’s SERPs contain a mix of domains with varying entity health status, as it can take months for Google to work it all out. Some are trending down and some up.

Some are disconnected, with ENTITY ENTROPY.

You also have domains that expire and return to Google with a different ENTITY PROFILE attached, a tactic blackhats use.

At any one time, Google’s results contain healthy entities, greedy entities, and weak or unhealthy entities. What we see from this side of the SERPs, as observers, is a constant mix of these entities and statuses as Google tries to protect HEALTHY SERPs, throw a bone to disconnected entities, and weed out spam entities.

Develop Your Brand

Danny makes it clear that small sites can and should develop themselves:

“If you’re a smaller site that feels like you haven’t really developed your brand – develop it.”

He’s not saying growth happens overnight, but he is saying that being recognized as well-established isn’t just for the big players.

It’s about doing the right things, not just focusing on rankings:

“That’s not because we’re going to rank you because of your brand, but because it’s probably the things that cause people externally to recognize you as a good brand may in turn co-occur or be alongside the kinds of things that our ranking systems are kind of looking to reward.”

He ties this directly into E-E-A-T:

“The whole E-A-T stuff that we’ve talked about – expertise, experience, trustworthiness, and authority – those are the kind of characteristics we’re trying to see, and those are the kind of characteristics that you can often find with brands that are well recognized and trusted by consumers.”

Developing your site means proving your legitimacy, authority and trust, not just chasing rankings.

The Core/October Spam Update 2023

For some sites, the October 2023 Google Spam Update was worse than the HCU.

See here for analysis of the October 2023 Spam Update, the March 2024 Core/Spam Update and the December 2024 Google Spam Update.

Do HCU or SPAM sites have other things wrong with them?

Yes, often.

But provided you are free of sloppy AI content targeting trending keywords, nothing trumps this classifier.

How to “Fix” a Disconnected Entity – Coming in Part 3

I will publish my final thoughts on the HCU/SPAM update in the coming weeks, but essentially this would be a good place for you to start:

Check out my article on how to create actual helpful content.