Our Looking into the Crystal Ball of 2013 Predictions Post

It’s hard to believe it is the middle of December and a whole year has blown by. To say 2012 was an interesting year would be an understatement. One thing is for sure: it was never dull!

Some of the SEOBook Moderators and I want to share what we feel will be hot (or not) in 2013. We have a diverse mixture of topics, opinions and practical marketing tactics for you to consider. First up is our fearless leader Aaron Wall!

Aaron Wall

Google Verticals

I believe we’ll see additional Google verticals launched (soon) and they’ll be added to the organic search listings. My guess is that (now that the advisor ad units are below AdWords) we can expect to see Google seriously step into education & insurance next year. I also expect them to vastly expand their automotive category in 2013.

SEOs Move On

Tired by the pace of change & instability in the search ecosystem (along with the “2 books of guidelines” approach of enforcement in the search ecosystem), many people who are known as SEOs will move on in 2013. Many of these will be via acquisitions, and many more will be due to people simply hanging it up & moving on.

Rebranding Away From SEO

A company in the SEO niche that has long been known as an SEO company will rebrand away from the term SEO. That will lead to a further polarization of public discourse (where most anything that is effective and profitable gets branded as being spam), only further fueling prediction #2 (SEOs moving on).

Geordie Carswell from Clearly Canadian (err, we mean here)

AdWords

Regarding AdWords, Google will aggressively dial up Quality Scores on keywords that have languished in activity due to low QS but haven’t yet been deleted by advertisers. They seem to have given Quality Scores a bump across the board in Q4, bringing in unexpected increases in traffic and cost to advertisers who weren’t aware ‘dormant’ keywords were even still in their accounts. It’s a fantastic revenue generator almost on-demand for Google if the quarterly numbers aren’t looking good.

Peter DaVanzo (aka Kiwi)

Building Brand

More focus on building brands, i.e. the people behind the site, their story, their history.

This is to encourage higher levels of engagement, leading to increased loyalty and making the most of the traffic we already have.

Eric Covino (aka vanillacoke)

SEO Diversifies

I think the industry will continue to become more divisive as more people either get out or go further underground. Those who remain overly public will continue to invent language to serve their own commercial purposes while chastising those who do not fall in line, labeling these folks as spammers and bad for the industry in desperate attempts at differentiation so they can continue to try to sell to brands and the lower part of the consumer pyramid (read: mindless sheep).

There will be exceptions where a company does, and probably continues to do, really well, but largely those who try to move from being pure SEO agencies to full service [insert new term here] ad-type agencies will fail at delivering real value to their clients. These people will resort to more outing and public spam report filings despite their amusing posts on how they are “different” and “clean”. I believe this will spawn a return of enterprise-level SEO services to competent SEOs and SEO firms but not to the “point, link, report ranking agencies”. I believe the latter will die a faster death in 2013. Technical proficiency in SEO will become more and more valuable as well, especially if enterprise-level SEO returns as I think it will.

SEO Pricing Structures Will Change

A fractured search landscape where data is harder to come by (not provided, rank checking issues, mobile disruption) in addition to frequent algo shifts and confusion with local rankings will make low-cost SEO much harder to justify and measure, especially in the local area. These issues, coupled with the rising cost of doing business online, will make low to even moderate budget SEO (really low 4 figures or high 3 figures per month) difficult to provide effectively and profitably over a sustained period of time.

The closing window will stay somewhat wide for those that stay around and can afford to take down the margins a bit on some projects. This would be a result of a fairly sizable exodus from the industry as a whole (the self-SEO crowd, for lack of a better term).

Debra Mastaler from Alliance Link

Mobile Applications (apps) and Content for Them

Doesn’t it seem like everyone has been talking about the mobile explosion for years now? I’m jumping on that bandwagon but from a slightly different angle.

If your product lends itself to having an app, I’d urge you to get one started, even if it’s a basic program or you have to partner with someone to make it happen. Recent statistics show there are one billion smart phone users and five billion mobile phone users in the world; being seen on mobile devices is no longer a novelty when those kinds of numbers are involved. So how do you get your content in front of mobile users?

For Android fans, you can turn your best content into an Android App by using tools like AppsGeyser. Their simple three step process allows you to create apps by using content you’ve already written or showcasing a widget you have in service. If you have evergreen content or a popular widget a lot of people download, create an app to keep them one click away and receiving fresh streams of content from your site.

If you’re in a space already filled with apps or can’t create one, consider creating unique content to go with what is out there. For example, novelist Robin Sloan created an iPhone app for “tappable” content. To move the story along, you tap the screen to the next page. It is a super simple concept that has exploded over the Internet. (For more tappable story examples visit here.) Creating this kind of content sets you apart from your competitors and provides you with a fresh news angle to pitch the media.

If you do create an app, add it to popular download sites like iTunes but make it exclusively available on your website first.

(Tip: Search on “content for iPad” for ideas on creating unique content for tablets and then use the suggestions above to promote them)

Video

OK, more impressive stats to start this section:

“In general, we know that 800 million people around the world use YouTube each month, a stat that I’m sure we’re going to see increase to a billion soon. And nearly all 100 of AdAge’s top 100 advertisers have run ad campaigns on YouTube and Google Display Network–98 in fact.”

There is the word “billion” again! But there’s more and it comes appropriately, right after my pitch for mobile apps:

…”mobile access, which gets over 600 million views a day, tripled in 2011.”

They are talking about access to YouTube here, and that’s an astonishing number of views per day. Add to that the fact that video results have a tendency to:

- be shown in the first fold of the organic search results (so annoying)

- help make a site “sticky”

- be easily passed around social media sites “like” Facebook

Three sound reasons why you should be involved in making and promoting video in 2013. Since video works well on smartphones, I’d focus equal resources on creating, optimizing and promoting video and written content in 2013. Check out what top brands are doing on YouTube for promotion ideas, where they’re pimping their vids and how. (And an app to play them on, see above) :)

Content Partnerships and Variety is a Search Spice

I think everyone will agree using “content” is the tactic du jour when it comes to attracting links and traffic. I expect the trend to continue, and with good reason: online news outlets, magazines and topical blogs are as eager to run good content as webmasters are to place it. Finding good outlets will be key. When you do, consider developing a “content partnership” with a set number of sites and negotiate to place more than written content.

What is a content partnership? In a nutshell, it’s an exclusive commitment you make to provide content to a set number of sites. You find a handful of authoritative sites to write for and negotiate the amount and type of content you want to submit. They, in turn, get a steady stream of well-produced content and build a solid editorial team. Win-win!

In a perfect world it’s best to be the only one writing on a topic but we all know perfection is hard to achieve. In that case, zero in on what you want to write about and approach an outlet with a narrow focus. For example, instead of saying “I’ll write all your baby food articles”, say, “I’ll provide articles, podcasts and videos on natural and organic baby food”. You are much more likely to get what you want if you agree to create content on a specific subject rather than a broad or general topic.

Authentic networking will be key in the future. Lock down your sources early and take advantage of the popularity boost you’ll receive from associating with highly visible, authority sites in your niche. Use a variety of content methods; the public doesn’t live on written content alone. Video, news and images dominate universal search results, so create this type of content to improve your chances of being seen, especially if brands dominate your sector. (So annoying!)

Will Spencer from Tech FAQ

The Value of Links

With Google penalizing obviously generated links instead of simply ignoring them, the value of less-obviously generated links will continue to rise. This will result in higher prices for paid links and an improved return on investment for those links. It will also bring link trading back in vogue, particularly with three-way linking.

We’ll be paying more (or charging more) for links than ever before. With most links being discounted or penalized, it will require fewer links to rank — but those links will have to be acquired at higher prices.

Anita Campbell from Small Business Trends

Website Design Goes Pinterest

We are seeing more websites and blogs designed and displayed a la Pinterest. Content appears in visual boxes with limited text. With that comes a lot more of the infinite scroll – the page that never ends. The new Mashable design is an example. It is hard to tell whether this is a short term fad or a long term trend – but when you have an infinite scrolling page the footer often goes. So all those footer links – well, many may go away.

Social Media Gets the Blender Effect

Social media aggregators are popping up like mushrooms. Tools like Rebelmouse, Scoop.it, Paper.li and a dozen more grab Facebook posts, tweets, retweets and/or blog posts, and mix them all together in a visually appealing presentation that you can embed on your own domain. Some of these tools are not so hot for SEO (all the content is in javascript and/or iframes) or they duplicate a page that resides on the tool’s own site, and search agencies will have to get good at sorting them out and explaining the pros and cons to clients who say “I want one!”

The Line Between Content and Advertising Further Blurs

The CPM rates of banner ads continue to drop, and the standard banner ad sizes are less appealing except as AdWords. The hot types of advertising today are:

- Rich media such as ads that slide out or down when you slide over or large videos that begin to play, and larger sizes that take up a lot of space on the page (even Google this year introduced the 300 x 600 “half-page” size ad);

- “Native ads” which are ads that sites like Twitter and Facebook sell such as sponsored tweets and sponsored posts – leading to the further commercialization of social media;

- Sponsored content, such as sponsored blog posts and sponsored content features on news sites.

There are different schools of thought around sponsored content, and publishers and agencies need to understand the differences and figure out where they want to play. The natural tendency of many SEO professionals is to think of sponsored content purely as link building. But in my experience, sponsors can have many goals and they may have nothing to do with link building. For many sponsors, their goals are branding, product launch exposure, co-citation/co-reference, thought leadership, sales lead generation, general PR, and/or building positive social media sentiment.

Depending on the sponsor’s goals, sponsored content covers a wide range. It can range from run-of-the-mill link buying and selling, to various levels of guest blog posting (“spun” junk to high quality well-researched articles), to custom-written content pieces such as articles, eBooks and webinars that are clearly labeled as sponsored, designed to build thought leadership, reach out to new audiences, and to associate the sponsor’s name with certain topics.

Marketing agencies will want to sort out the client’s objectives, and also educate clients on the broader benefits to be had from sponsored content.

Now you know our thoughts for 2013. What are yours?

On behalf of everyone here at SEOBook, we wish you a joyous holiday season and much success in 2013!

Debra Mastaler is an experienced link building & publicity expert who has trained clients for over a decade at Alliance-Link. She is the link building moderator of our SEO Community & can be found on Twitter @DebraMastaler.

Introducing Data Highlighter for event data

Webmaster Level: All

Update 19 February 2013: Data Highlighter for events structured markup is available in all languages in Webmaster Tools.

At Google we’re making more and more use of structured data to provide enhanced search results, such as rich snippets and event calendars, that help users find your content. Until now, marking up your site’s HTML code has been the only way to indicate structured data to Google. However, we recognize that markup may be hard for some websites to deploy.

Today, we’re offering webmasters a simpler alternative: Data Highlighter. At initial launch, it’s available in English only and for structured data about events, such as concerts, sporting events, exhibitions, shows, and festivals. We’ll make Data Highlighter available for more languages and data types in the months ahead.

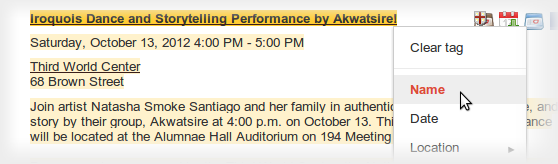

Data Highlighter is a point-and-click tool that can be used by anyone authorized for your site in Google Webmaster Tools. No changes to HTML code are required. Instead, you just use your mouse to highlight and “tag” each key piece of data on a typical event page of your website:

If your page lists multiple events in a consistent format, Data Highlighter will “learn” that format as you apply tags, and help speed your work by automatically suggesting additional tags. Likewise, if you have many pages of events in a consistent format, Data Highlighter will walk you through a process of tagging a few example pages so it can learn about their format variations. Usually, 5 or 10 manually tagged pages are enough for our sophisticated machine-learning algorithms to understand the other, similar pages on your site.

When you’re done, you can review a sample of all the event data that Data Highlighter now understands. If it’s correct, click “Publish.”

From then on, as Google crawls your site, it will recognize your latest event listings and make them eligible for enhanced search results. You can inspect the crawled data on the Structured Data Dashboard, and unpublish at any time if you’re not happy with the results.

Here’s a short video explaining how the process works:

To get started with Data Highlighter, visit Webmaster Tools, select your site, click the “Optimization” link in the left sidebar, and click “Data Highlighter”.

If you have any questions, please read our Help Center article or ask us in the Webmaster Help Forum. Happy Highlighting!

Posted by Justin Boyan, Product Manager

Helping Webmasters with Hacked Sites

Webmaster Level: Intermediate/Advanced

Having your website hacked can be a frustrating experience and we want to do everything we can to help webmasters get their sites cleaned up and prevent compromises from happening again. With this post we wanted to outline two common types of attacks as well as provide clean-up steps and additional resources that webmasters may find helpful.

To best serve our users it’s important that the pages that we link to in our search results are safe to visit. Unfortunately, malicious third-parties may take advantage of legitimate webmasters by hacking their sites to manipulate search engine results or distribute malicious content and spam. We alert users and webmasters alike by displaying a “This site may be compromised” warning in our search results next to sites we’ve detected as hacked:

We want to give webmasters the necessary information to help them clean up their sites as quickly as possible. If you’ve verified your site in Webmaster Tools, we’ll also send you a message when we’ve identified that your site has been hacked and, when possible, give you example URLs.

Occasionally, your site may become compromised to facilitate the distribution of malware. When we recognize that, we’ll identify the site in our search results with a label of “This site may harm your computer” and browsers such as Chrome may display a warning when users attempt to visit. In some cases, we may share more specific information in the Malware section of Webmaster Tools. We also have specific tips for preventing and removing malware from your site in our Help Center.

Two common ways malicious third-parties may compromise your site are the following:

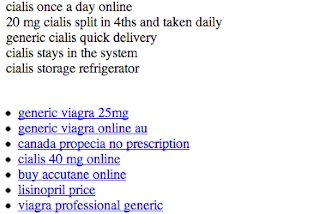

Injected Content

Hackers may attempt to influence search engines by injecting links leading to sites they own. These links are often hidden to make it difficult for a webmaster to detect this has occurred. The site may also be compromised in such a way that the content is only displayed when the site is visited by search engine crawlers.

Example of injected pharmaceutical content

If we’re able to detect this, we’ll send a message to your Webmaster Tools account with useful details. If you suspect your site has been compromised in this way, you can check the content your site returns to Google by using the Fetch as Google tool. A few good places to look for the source of such a compromise are .php files, template files and CMS plugins.
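As a rough illustration of that comparison, the Python sketch below takes two copies of a page's HTML, one saved from a normal browser visit and one saved from what a crawler receives (for example, copied out of Fetch as Google), and lists the links that appear only in the crawler copy. The function names are hypothetical, obtaining the two copies is left to you, and this is not how Fetch as Google itself works.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.add(value)


def extract_links(html_text):
    """Return the set of link targets found in an HTML string."""
    collector = LinkCollector()
    collector.feed(html_text)
    collector.close()
    return collector.links


def links_only_in_crawler_view(browser_html, crawler_html):
    """Return links present in the crawler copy but absent from the browser copy."""
    return sorted(extract_links(crawler_html) - extract_links(browser_html))


if __name__ == "__main__":
    # Hypothetical sample data: the crawler copy carries one extra link.
    browser_copy = '<p><a href="/about">About</a></p>'
    crawler_copy = (
        '<p><a href="/about">About</a>'
        '<a href="http://pills.example/">cheap pills</a></p>'
    )
    for link in links_only_in_crawler_view(browser_copy, crawler_copy):
        print("Only shown to crawler:", link)
```

Links that show up only in the crawler copy are leads worth investigating, not proof of a compromise; pages can legitimately differ between requests.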

Redirecting Users

Hackers might also try to redirect users to spammy or malicious sites. They may do it to all users or target specific users, such as those coming from search engines or those on mobile devices. If you’re able to access your site when visiting it directly but you experience unexpected redirects when coming from a search engine, it’s very likely your site has been compromised in this manner.

One of the ways hackers accomplish this is by modifying server configuration files (such as Apache’s .htaccess) to serve different content to different users, so it’s a good idea to check your server configuration files for any such modifications.
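One way to make that check less tedious is to flag rewrite conditions that depend on who is visiting. The Python sketch below assumes Apache mod_rewrite syntax and flags `RewriteCond` lines that test `%{HTTP_REFERER}` or `%{HTTP_USER_AGENT}`. The function name and the choice of those two variables are illustrative assumptions, and legitimate configurations use them too, so treat the output as a list of lines to read, not a verdict.

```python
import re

# RewriteCond lines that branch on the visitor's referrer or user agent.
CONDITION_PATTERN = re.compile(
    r"^\s*RewriteCond\s+%\{(HTTP_REFERER|HTTP_USER_AGENT)\}",
    re.IGNORECASE,
)


def flag_conditional_rules(htaccess_text):
    """Return (line_number, variable, line) for each visitor-dependent condition."""
    flagged = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        match = CONDITION_PATTERN.match(line)
        if match:
            flagged.append((number, match.group(1).upper(), line.strip()))
    return flagged


if __name__ == "__main__":
    # Hypothetical sample: redirect only visitors arriving from a search engine.
    sample = (
        "RewriteEngine On\n"
        "RewriteCond %{HTTP_REFERER} google [NC]\n"
        "RewriteRule ^ http://example.invalid/ [R=302,L]\n"
    )
    for number, variable, line in flag_conditional_rules(sample):
        print(f"line {number}: condition on {variable}: {line}")
```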

This malicious behavior can also be accomplished by injecting JavaScript into the source code of your site. The JavaScript may be designed to hide its purpose so it may help to look for terms like “eval”, “decode”, and “escape”.
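A search for those terms can be sketched in a few lines of Python. The example below is a minimal, assumption-laden illustration: the function names, the file extensions it scans, and the plain substring matching are all choices made here, not part of any Google tool. Plenty of legitimate scripts also use these terms, so a hit is only a pointer to code worth reading.

```python
from pathlib import Path

# Terms the post suggests looking for in injected, obfuscated JavaScript.
SUSPICIOUS_TERMS = ("eval", "decode", "escape")


def find_suspicious_lines(source, terms=SUSPICIOUS_TERMS):
    """Return (line_number, term) pairs for each term found on each line."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        lowered = line.lower()
        for term in terms:
            if term in lowered:
                hits.append((number, term))
    return hits


def scan_tree(root, suffixes=(".js", ".php", ".html")):
    """Scan files under root and map each path with hits to its hit list."""
    report = {}
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix.lower() in suffixes:
            text = path.read_text(encoding="utf-8", errors="replace")
            hits = find_suspicious_lines(text)
            if hits:
                report[str(path)] = hits
    return report


if __name__ == "__main__":
    # Scan the current directory; point this at a copy of your site instead.
    for file_name, hits in scan_tree(".").items():
        for number, term in hits:
            print(f"{file_name}:{number}: contains '{term}'")
```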

Cleanup and Prevention

If your site has been compromised, it’s important to not only clean up the changes made to your site but to also address the vulnerability that allowed the compromise to occur. We have instructions for cleaning your site and preventing compromises, and your hosting provider and our Malware and Hacked sites forum are great resources if you need more specific advice.

Once you’ve cleaned up your site, you should submit a reconsideration request which, if successful, will remove the warning label in our search results.

As always, if you have any questions or feedback, please tell us in the Webmaster Help Forum.

Posted by Oliver Barrett, Search Quality Team

Manage Your Reputation

How much value do you place on your good reputation?

If we looked at it purely from a financial point of view, our reputations help us get work, make money, and be more influential. On a personal level, a good name is something of which you can be proud. It is something tangible that makes you feel good.

You’re Everywhere

As it becomes increasingly easy for people to make their feelings known and published far and wide, many businesses are implementing reputation management strategies to help protect their good name.

This area used to be the domain of big business, who employed teams of PR and legal specialists to nurture, defend and promote established brands. Unlike small business, which didn’t have to worry about what someone on the other side of the country might have said about them as it didn’t affect business in their locality, larger entities were exposed nationally, and often internationally. It was also difficult for an individual to spread their grievance, unless it was picked up by mainstream media.

These days, everything is instant and international. Those with a grievance can be heard far and wide, without the need to get media involved. We hear about problems with brands across the other side of the country, or the world, just as easily as we hear about them in our own regions, or market niches. If someone is getting hammered in the search industry, you and I probably both hear about it, at roughly the same time. And so will everyone else.

Media stories don’t even have to be true, of course. False information travels just as fast as, if not faster than, the truth. Given the potential, it’s a wonder reputation problems don’t occur more often than they do.

This is why reputation management is becoming increasingly important for smaller firms and individuals. No matter how good you are at what you do, it’s impossible to please everyone all the time, so it’s quite possible someone could damage your good name at some point.

Much of the reputation management area is obvious and common sense, but certainly worth taking time to consider, especially if you haven’t looked at reputation issues up until now. When people search on your name, do they find an accurate representation of who you are and what you’re about? Is the information outdated? Are you seen in the same places as your competition? How does their reputation compare to yours?

Also, some marketers offer reputation monitoring and management as an add-on service to clients, so it can be a potential new revenue stream for those offering consultancy services.

The Indelible Nature Of The Internet

In some respects, I’m glad the internet – as we know it – wasn’t around when I was at school. There were far too many regrettable nights that, these days, would be recorded from various angles on smartphones and uploaded to YouTube before anyone can say “that isn’t mine, officer!”

You’ve got to feel sorry for some of the kids today. Kids being kids, they sometimes do stupid things, but these days a record of stupidity is likely to hang around “forever”. Perhaps their grand-kids will get a laugh one day. Perhaps the recruiter won’t.

Something similar could happen to you, or your firm. One careless employee saying the wrong thing and the record could show up in search engines for a long time. If you’re building a brand, whether personal or related to a business, you need to look after it, nurture it, and defend it, if need be. We’ll look at a few practical ways to do so.

On the flip side, of course, the internet can help establish and spread your good reputation very quickly. We’ll also look at ways to push your good reputation.

Modern Media Is A Conversation

People talk.

These days, no matter how big a firm is, they can’t hide behind PR and receptionists. If they don’t want to join the conversation, so be it – it will go on all around them, regardless. If they aren’t part of it, then they risk the conversation being dictated by others.

So a big part of online reputation management is about getting involved in the conversation and framing it where possible, i.e. having the conversation on your terms.

Be Proactive

Most of us haven’t got time to constantly monitor everything that might be said about us or our brands. One of the most cost-effective ways to manage reputation is to get out in front of problems before they arise. If there are enough good things said about you, then the occasional critical voice won’t carry as much weight by comparison.

The first step is to audit your current position. Search on your name and/or brand. What do you see in the top ten? Do the results reflect what you’re about? Is there anything negative showing up? If so, can you respond to it by way of a comment section? This is the exact same information your customers will see, of course, when they look you up.

If you’re not seeing accurate content, you may need to update or publish more appropriate content on your own sites, and those sites that come up in the top ten, where possible. More aggressive SEO approaches involve flooding the SERPs with positive content in an attempt to push down any negative stories below the fold so they are less likely to be seen. This is probably not quite as effective as addressing the underlying issues that caused the negative press in the first place, unless the criticisms were malicious, in which case, game on.

Next, conduct the same set of searches on your competitors. How does their reputation compare? Are they being seen in places you aren’t? Are they getting positive press mentions that you could get, too? How does your reputation stack up, relatively speaking?

Listen

You can monitor mentions using services such as Google Alerts, Hootsuite, Tweetdeck, and various other tools. There’s another big list of tools here. Google runs “Me On The Web” as part of the Google Dashboard.

Monitor trends related to your industry. Get involved in fast breaking, popular trends and discussions. Be seen where potential customers would expect to see you. The more other people see you engaged on important issues, in a positive light, the more credibility you’re banking for the future. If you build up a high volume of “good stuff”, any occasional critical voice will likely get lost in the noise, rather than stand out. A lot of reputation management has to do with building positive PR ahead of any negatives that may arise later. You should be everywhere your customers expect to see you.

This is a common tactic used by authors selling on Amazon. They “encourage” good reviews, typically by handing out free review copies to friends, in order to stack the positive review side in their favor. The occasional negative review may hurt them, but not quite as much as if the number of negative reviews matched the number of positive reviews. Some of them overdo it, of course, as twenty 5-star reviews, and nothing else, looks somewhat suspicious. When it comes to PR, it’s best to be believable!

Engage

Create a policy for engagement, for yourself, and other people who work for you. Keep it simple, and principle based, as principles are easier to remember and apply. For example, a good principle is to post in haste only if what you are saying is positive. If something is negative, pause. Leave it for a few hours. If it still feels right, then post. It’s so easy to post in haste, and then regret it for years afterwards.

Seek feedback often. Ask people how you’re doing, especially if you suspect you’ve annoyed someone or let them down in some way. If you give people permission to vent where you control the environment it means they are less likely to let off steam somewhere else. It may also highlight potential trouble-spots in your process, that you can fix and thus avoid repeats in future. I’ve run sites where the sales process has occasionally broken down, and had customers complain. It happens. I make a point of letting them vent, giving them more than they originally ordered, and apologizing to them for the problems. Not only does going over-and-above expectations prevent negative press, it has often turned disgruntled customers into advocates. They’ve increased their business, and referred others. Pretty simple, right, but good customer service is all part of the reputation management process.

Figure out who the influential people are in your industry and try and get onside with them. In a crisis, they may well help you out, especially if they see you’re being hard done by. If influential names weigh in on your behalf, this can easily marginalize the person who is being critical.

Security

Secure your stuff. Check out this awful story on Wired:

In the space of one hour, my entire digital life was destroyed. First my Google account was taken over, then deleted. Next my Twitter account was compromised, and used as a platform to broadcast racist and homophobic messages. And worst of all, my AppleID account was broken into, and my hackers used it to remotely erase all of the data on my iPhone, iPad, and MacBook.

Explaining what happened and getting it published on Wired is a pretty good crisis management response, of course. When you look up “Mat Honan”, you find that article. Separate your social media business and personal profiles. Secure your mobile phone. Check that your privacy settings are correct across social media. Simple stuff that goes a long way to protecting your existing reputation.

What To Do If You Do Hit Trouble

We can’t please everyone, all the time.

A critical factor is speed. If you spot trouble, get into the conversation early. This can prevent the problem festering and gathering its own momentum. However, before you leap in, make sure you understand the issue. Ask “what do these people want to happen that is not currently happening?”

Also consider who is saying it. What’s their reach? If it’s just a ranter on noname.blogspot.com, or a troll attempt, it’s probably not worth your time, and engaging trolls is counter-productive. Someone influential, of course, requires kid glove treatment. One common tactic, especially if the situation is escalating beyond your control, is to try and take it offline and reach resolution that way. You can then go back to the online conversation once it has been resolved, rather than having the entire firefight a matter of indelible public record.

It’s illegal for people to defame you, so you could also consider legal action if the problem is bad enough. You could also consider engaging some PR help, particularly if the problem occurs in mainstream media. PR can be a bit hit and miss, but reputable PR professionals tend to have extensive networks of contacts, so may get you seen where it might be difficult for you to do so on your own. There are also dedicated reputation management companies, such as reputation.com, reputationchanger.com, and reputationmanagementagency.com who handle monitoring and public relations functions. NB: Included for illustration purposes. We have no relationship with these firms.

Practical examples of constructive responses to negative criticism can often be seen in the Amazon reviews.

For example, a writer can respond to any reviews made about their book. A good approach to negative statements is to thank the reviewer for taking the time to provide feedback, regardless of what they said, and address the issue raised in a calm, informative manner. Future customers will see this, of course, which provides yet another opportunity to sway their opinion. One great example I’ve seen was when the writer did all of the above AND offered the person providing the negative review an hour of free consulting so the reviewer could get the specific information he felt he was missing! One downside of this strategy, however, might be more copycat negative reviews aimed at getting the reviewer free consulting!

The same principle applies to any negative comment in other contexts. When a reader sees your reply, they get editorial balance that would otherwise be missing.

It’s obvious, yet important, stuff. If you’ve got examples of how you’ve handled reputation issues in the past, or your ideas on how best to manage reputation going forward, please add them to the comments to help others.

I’m sure they’ll remember you for it :)

Counterspin on Shopping Search: Shady Paid Inclusion

Bing caused a big stink today when they unveiled Scroogled, a site that highlights how Google Shopping has gone paid-inclusion only. A couple weeks ago Google announced that they would be taking their controversial business model global, in spite of it being “a mess.”

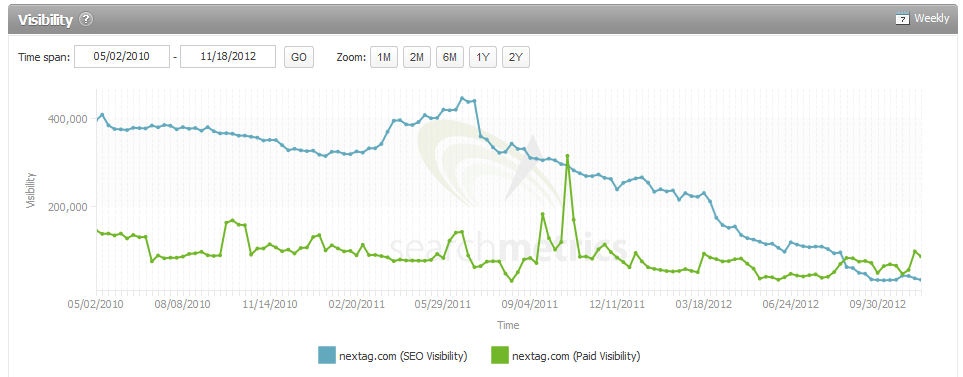

Nextag has long been critical of Google’s shifts on the shopping search front. Are their complaints legitimate, or are they just whiners?

Data, More Reliable Than Spin

Nothing beats data, so let’s start with that.

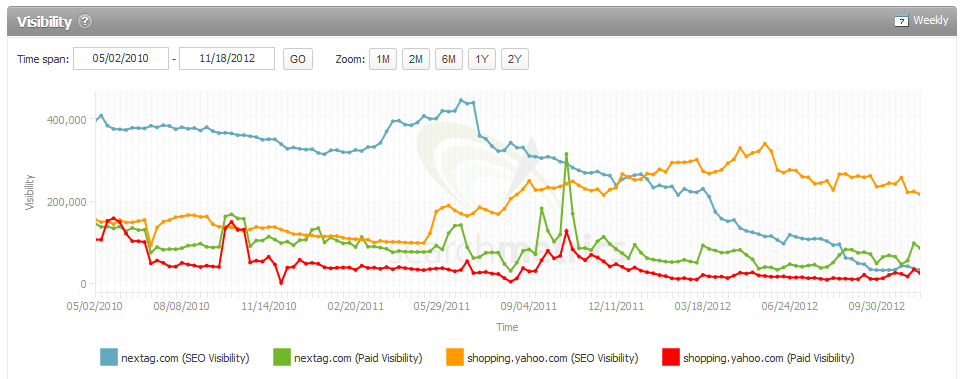

This is what Nextag’s search exposure has done over the past few years, according to SearchMetrics.

If Google did that to any large & politically connected company, you can bet regulators would have already taken action against Google, rather than currently negotiating with them.

What’s more telling is how some other sites in the shopping search vertical have performed.

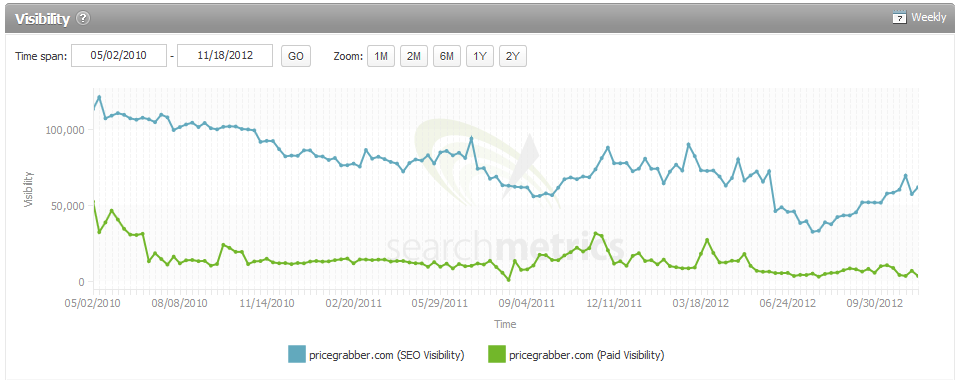

PriceGrabber, another player in the shopping search market, has also slowly drifted downward (though at a much slower rate).

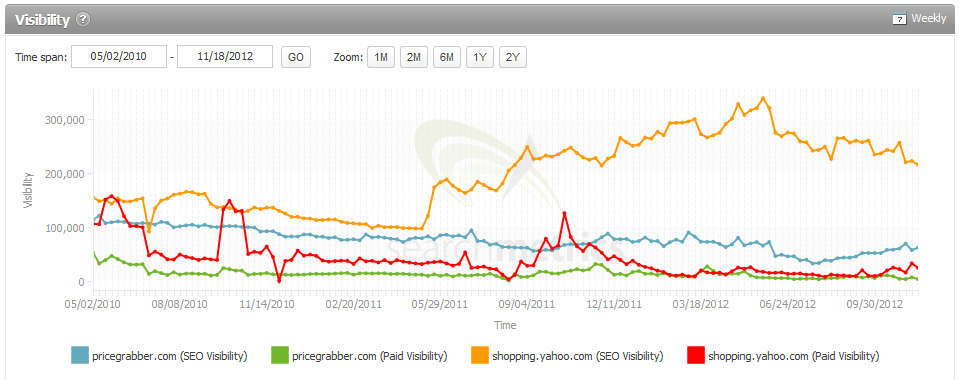

One of the few shopping search engines that has seen a big lift over this time period was Yahoo! Shopping.

What is interesting about that rise is that Yahoo! outsourced substantially all of their shopping search product to PriceGrabber.

A Self-Destructing Market Dynamic

The above creates an interesting market dynamic…

- the long established market leader can wither on the vine for being too focused on their niche market & not broadening out in ways that increase brand awareness

- a larger site with loads of usage data can outsource the vertical and win based on the bleed of usage data across services & the ability to cross promote the site

- the company investing in creating the architecture & baseline system that powers other sites continues to slide due to limited brand & a larger entity gets to displace the data source

- Google then directly enters the market, further displacing some of the vertical players

The above puts Nextag’s slide in perspective, but the problem is that they still have fixed costs to manage if they are going to maintain their editorial quality. Google can hand out badges for people willing to improve their product for free or give searchers a “Click any fact to locate it on the web. Click Wrong? to report a problem” but others who operated with such loose editorial standards would likely be labeled as a spammer of one stripe or another.

Scrape-N-Displace

Most businesses have to earn the right to have exposure. They have to compete in the ecosystem, build awareness & so on. But Google can come in from the top of the market with an inferior product, displace the competition, economically starve them & eventually create a competitive product over time through a combination of incremental editorial improvements and gutting the traffic & cash flow to competing sites.

“The difference between life and death is remarkably small. And it’s not until you face it directly that you realize your own mortality.” – Dustin Curtis

The above quote is every bit as much true for businesses as it is for people. Nothing more than a threat of a potential entry into a market can cut off the flow of investment & paralyze businesses in fear.

- If you have stuff behind a paywall or pre-roll ads you might have “poor user experience metrics” that get you hit by Panda.

- If you make your information semi-accessible to Googlebot you might get hit by Panda for having too much similar content.

- If you are not YouTube & you have a bunch of stolen content on your site you might get hit by a copyright penalty.

- If you leave your information fully accessible publicly you get to die by scrape-n-displace.

- If you are more clever about information presentation perhaps you get a hand penalty for cloaking.

None of those is a particularly desirable way to have your business die.

Editorial Integrity

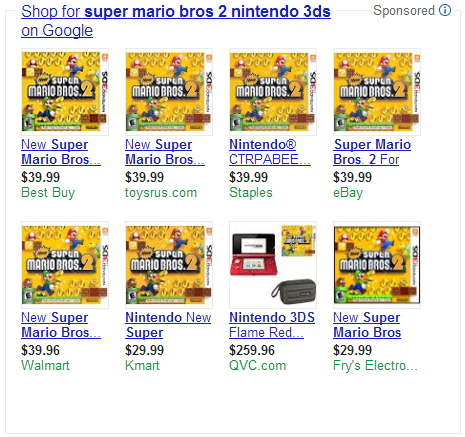

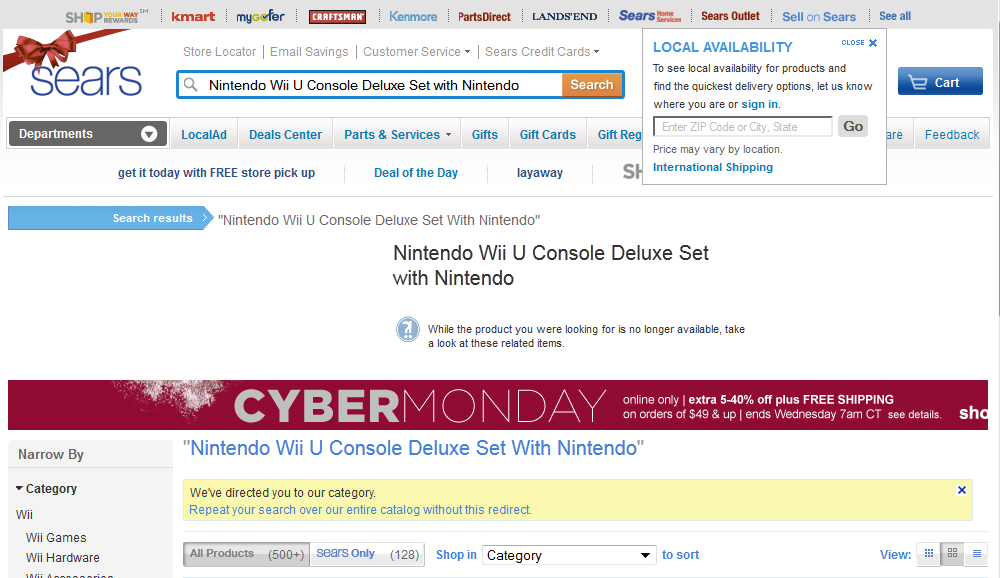

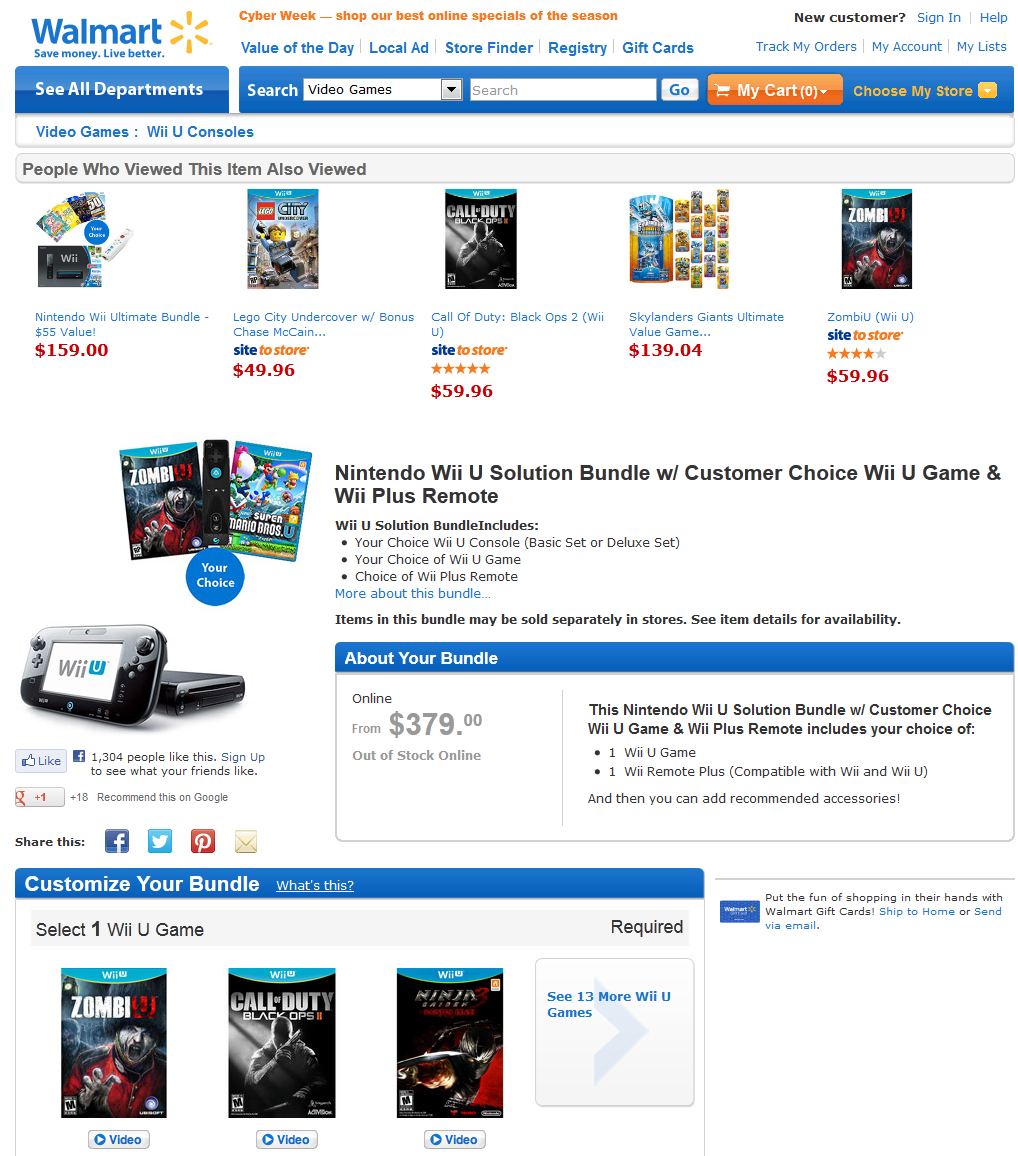

In addition to having a non-comprehensive database, Google Shopping also suffers from the problem of line extension (who buys video games from Staples?).

The bigger issue is that of general editorial integrity.

Are products in stock? Sometimes no.

It is also worth mentioning that some sites with “no product available” like Target or Toys R Us might also carry further Google AdSense ads.

Then there are also issues with things like ads that optimize for CTR which end up promoting things like software piracy or the academic versions of software (while lowering the perceived value of the software).

Over the past couple years Google has whacked loads of small ecommerce sites & the general justification is that they don’t add enough that is unique, and that they don’t deserve to rank as their inventory is unneeded duplication of Amazon & eBay. Many of these small businesses carry inventory and will be driven into insolvency by the sharp shifts in traffic. And while a small store is unneeded duplication, Google still allows syndicated press releases to rank great (and once again SEOs get blamed for Google being Google – see the quote-as-headline here).

Let’s presume Google’s anti-small business bias is legitimate & look at Google Shopping to see how well they performed in terms of providing a value add editorial function.

A couple days ago I was looking for a product that is somewhat hard to find due to seasonal shopping. It is often available at double or triple retail on sites like eBay, but Google Shopping helped me locate a smaller site that had it available at retail price. Good deal for me & maybe I was wrong about Google.

… then again …

The site they sent me to had the following characteristics:

- URL – not EMD & not a brand, broken English combination

- logo – looks like I designed it AND like I was in a rush when I did it

- about us page – no real information, no contact information (on an ecommerce site!!!), just some obscure stuff about “direct connection with China” & mention of business being 15 years old and having great success

- age – domain is barely a year old & privacy registered

- inbound links – none

- product price – lower than everywhere else

- product level page content – no reviews, thin scraped editorial, editorial repeats itself to fill up more space, 3 adsense blocks in the content area of the page

- no reviews, thin scraped editorial, editorial repeats itself to fill up more space, 3 adsense blocks in the content area of the page

- no reviews, thin scraped editorial, editorial repeats itself to fill up more space, 3 adsense blocks in the content area of the page

- no reviews, thin scraped editorial, editorial repeats itself to fill up more space, 3 adsense blocks in the content area of the page

- the above repetition is to point out the absurdity of the formatting of the “content” of said page

- site search – yet again the adsense feed, searching for the product landing page that was in Google Shopping I get no results (so outside of paid inclusion & front/center placement, Google doesn’t even feel this site is worth wasting the resources to index)

- checkout – requires account registration, includes captcha that never matches, hoping you will get frustrated & go back to earlier pages and click an ad

It actually took me a few minutes to figure it out, but the site was designed to look like a phishing site, with intent that perhaps you will click on an ad rather than trying to complete a purchase. The forced registration will eat your email & who knows what they will do with it, but you can never complete your purchase, making the site a complete waste of time.

Looking at the above spam site with some help of tools like NetComber it was apparent that this “merchant” also ran all sorts of scraper sites driven on scraping content from Yahoo! Answers & similar, with sites about Spanish + finance + health + shoes + hedge funds.

It is easy to make complaints about Nextag being a less than perfect user experience. But it is hard to argue that Google is any better. And when other companies have editorial costs that Google lacks (and the other companies would be labeled as spammers if they behaved like Google) over time many competing sites will die off due to the embedded cost structure advantages. Amazon has enough scale that people are willing to bypass Google’s click circus & go directly to Amazon, but most other ecommerce players don’t. The rest are largely forced to pay Google’s rising rents until they can no longer afford to, then they just disappear.

Bonus Prize: Are You Up to The Google Shopping Test?

The first person who successfully solves this captcha wins a free month membership to our site.

Giving Tablet Users the Full-Sized Web

Webmaster level: All

Since we announced Google’s recommendations for building smartphone-optimized websites, a common question we’ve heard from webmasters is how to best treat tablet devices. This is a similar question Android app developers face, and for that the Building Quality Tablet Apps guide is a great starting point.

Although we do not have specific recommendations for building search engine friendly tablet-optimized websites, there are some tips for building websites that serve smartphone and tablet users well.

When considering your site’s visitors using tablets, it’s important to think about both the devices and what users expect. Compared to smartphones, tablets have larger touch screens and are typically used on Wi-Fi connections. Tablets offer a browsing experience that can be as rich as any desktop or laptop machine, in a more mobile, lightweight, and generally more convenient package. This means that, unless you offer tablet-optimized content, users expect to see your desktop site rather than your site’s smartphone site.

Our recommendation for smartphone-optimized sites is to use responsive web design, which means you have one site to serve all devices. If your website uses responsive web design as recommended, be sure to test your website on a variety of tablets to make sure it serves them well too. Remember, just like for smartphones, there are a variety of device sizes and screen resolutions to test.

Another common configuration is to have separate sites for desktops and smartphones, and to redirect users to the relevant version. If you use this configuration, be careful not to inadvertently redirect tablet users to the smartphone-optimized site too.

Telling Android smartphones and tablets apart

For Android-based devices, it’s easy to distinguish between smartphones and tablets using the user-agent string supplied by browsers: Although both Android smartphones and tablets will include the word “Android” in the user-agent string, only the user-agent of smartphones will include the word “Mobile”.

In summary, any Android device that does not have the word “Mobile” in the user-agent is a tablet (or other large screen) device that is best served the desktop site.

For example, here’s the user-agent from Chrome on a Galaxy Nexus smartphone:

Mozilla/5.0 (Linux; Android 4.1.1; Galaxy Nexus Build/JRO03O) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Mobile Safari/535.19

Or from Firefox on the Galaxy Nexus:

Mozilla/5.0 (Android; Mobile; rv:16.0) Gecko/16.0 Firefox/16.0

Compare those to the user-agent from Chrome on Nexus 7:

Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03S) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19

Or from Firefox on Nexus 7:

Mozilla/5.0 (Android; Tablet; rv:16.0) Gecko/16.0 Firefox/16.0

Because the Galaxy Nexus’s user agent includes “Mobile” it should be served your smartphone-optimized website, while the Nexus 7 should receive the full site.
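As a rough illustration of the rule above, here is a minimal Python sketch that classifies a user-agent string. The function names `classify_android_device` and `site_to_serve` are hypothetical, and a plain substring check is only one way to apply the rule; real-world user-agent strings may need more careful handling.

```python
def classify_android_device(user_agent: str) -> str:
    """Return 'smartphone', 'tablet', or 'other' for a user-agent string."""
    if "Android" not in user_agent:
        # Not an Android device; this sketch does not try to classify it.
        return "other"
    # Rule from the text: Android with "Mobile" is a smartphone,
    # Android without "Mobile" is a tablet (or other large-screen device).
    return "smartphone" if "Mobile" in user_agent else "tablet"


def site_to_serve(user_agent: str) -> str:
    """Pick which site version to serve (hypothetical labels)."""
    if classify_android_device(user_agent) == "smartphone":
        return "smartphone site"
    # Tablets and everything else get the full desktop site.
    return "desktop site"
```

With the four user-agent strings quoted above, both Galaxy Nexus strings would be classified as smartphones and both Nexus 7 strings as tablets.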

We hope this helps you build better tablet-optimized websites. As always, please ask on our Webmaster Help forums if you have more questions.

Posted by Pierre Far, Webmaster Trends Analyst, and Scott Main, lead tech writer for developer.android.com

A new tool to disavow links

Today we’re introducing a tool that enables you to disavow links to your site. If you’ve been notified of a manual spam action based on “unnatural links” pointing to your site, this tool can help you address the issue. If you haven’t gotten this notification, this tool generally isn’t something you need to worry about.

First, a quick refresher. Links are one of the most well-known signals we use to order search results. By looking at the links between pages, we can get a sense of which pages are reputable and important, and thus more likely to be relevant to our users. This is the basis of PageRank, which is one of more than 200 signals we rely on to determine rankings. Since PageRank is so well-known, it’s also a target for spammers, and we fight linkspam constantly with algorithms and by taking manual action.

If you’ve ever been caught up in linkspam, you may have seen a message in Webmaster Tools about “unnatural links” pointing to your site. We send you this message when we see evidence of paid links, link exchanges, or other link schemes that violate our quality guidelines. If you get this message, we recommend that you remove from the web as many spammy or low-quality links to your site as possible. This is the best approach because it addresses the problem at the root. By removing the bad links directly, you’re helping to prevent Google (and other search engines) from taking action again in the future. You’re also helping to protect your site’s image, since people will no longer find spammy links pointing to your site on the web and jump to conclusions about your website or business.

If you’ve done as much as you can to remove the problematic links, and there are still some links you just can’t seem to get down, that’s a good time to visit our new Disavow links page. When you arrive, you’ll first select your site.

One great place to start looking for bad links is the “Links to Your Site” feature in Webmaster Tools. From the homepage, select the site you want, navigate to Traffic > Links to Your Site > Who links the most > More, then click one of the download buttons. This file lists pages that link to your site. If you click “Download latest links,” you’ll see dates as well. This can be a great place to start your investigation, but be sure you don’t upload the entire list of links to your site — you don’t want to disavow all your links!

We would reiterate that we built this tool for advanced webmasters only. We don’t recommend using this tool unless you are sure that you need to disavow some links to your site and you know exactly what you’re doing.

Q: Will most sites need to use this tool?

A: No. The vast, vast majority of sites do not need to use this tool in any way. If you’re not sure what the tool does or whether you need to use it, you probably shouldn’t use it.

Q: If I disavow links, what exactly does that do? Does Google definitely ignore them?

A: This tool allows you to indicate to Google which links you would like to disavow, and Google will typically ignore those links. Much like with rel=”canonical”, this is a strong suggestion rather than a directive—Google reserves the right to trust our own judgment for corner cases, for example—but we will typically use that indication from you when we assess links.

Q: How soon after I upload a file will the links be ignored?

A: We need to recrawl and reindex the URLs you disavowed before your disavowals go into effect, which can take multiple weeks.

Q: Can this tool be used if I’m worried about “negative SEO”?

A: The primary purpose of this tool is to help clean up if you’ve hired a bad SEO or made mistakes in your own link-building. If you know of bad link-building done on your behalf (e.g., paid posts or paid links that pass PageRank), we recommend that you contact the sites that link to you and try to get links taken off the public web first. You’re also helping to protect your site’s image, since people will no longer find spammy links and jump to conclusions about your website or business. If, despite your best efforts, you’re unable to get a few backlinks taken down, that’s a good time to use the Disavow Links tool.

In general, Google works hard to prevent other webmasters from being able to harm your ranking. However, if you’re worried that some backlinks might be affecting your site’s reputation, you can use the Disavow Links tool to indicate to Google that those links should be ignored. Again, we build our algorithms with an eye to preventing negative SEO, so the vast majority of webmasters don’t need to worry about negative SEO at all.

Q: I didn’t create many of the links I’m seeing. Do I still have to do the work to clean up these links?

A: Typically not. Google normally gives links appropriate weight, and under normal circumstances you don’t need to give Google any additional information about your links. A typical use case for this tool is if you’ve done link building that violates our quality guidelines, Google has sent you a warning about unnatural links, and despite your best efforts there are some links that you still can’t get taken down.

Q: I uploaded some good links. How can I undo uploading links by mistake?

A: To modify which links you would like to ignore, download the current file of disavowed links, change it to include only links you would like to ignore, and then re-upload the file. Please allow time for the new file to propagate through our crawling/indexing system, which can take several weeks.

Q: Should I create a links file as a preventative measure even if I haven’t gotten a notification about unnatural links to my site?

A: If your site was affected by the Penguin algorithm update and you believe it might be because you built spammy or low-quality links to your site, you may want to look at your site’s backlinks and disavow links that are the result of link schemes that violate Google’s guidelines.

Q: If I upload a file, do I still need to file a reconsideration request?

A: Yes, if you’ve received notice that you have a manual action on your site. The purpose of the Disavow Links tool is to tell Google which links you would like ignored. If you’ve received a message about a manual action on your site, you should clean things up as much as you can (which includes taking down any spammy links you have built on the web). Once you’ve gotten as many spammy links taken down from the web as possible, you can use the Disavow Links tool to indicate to Google which leftover links you weren’t able to take down. Wait for some time to let the disavowed links make their way into our system. Finally, submit a reconsideration request so the manual webspam team can check whether your site is now within Google’s quality guidelines, and if so, remove any manual actions from your site.

Q: Do I need to disavow links from example.com and example.co.uk if they’re the same company?

A: Yes. If you want to disavow links from multiple domains, you’ll need to add an entry for each domain.

Q: What about www.example.com vs. example.com (without the “www”)?

A: Technically these are different URLs. The disavow links feature tries to be granular. If content that you want to disavow occurs on multiple URLs on a site, you should disavow each URL that has the link that you want to disavow. You can always disavow an entire domain, of course.

Q: Can I disavow something.example.com to ignore only links from that subdomain?

A: For the most part, yes. For most well-known freehosts (e.g. wordpress.com, blogspot.com, tumblr.com, and many others), disavowing “domain:something.example.com” will disavow links only from that subdomain. If a freehost is very new or rare, we may interpret this as a request to disavow all links from the entire domain. But if you list a subdomain, most of the time we will be able to ignore links only from that subdomain.
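To make the last three answers concrete, here is a small Python sketch that assembles disavow entries. It assumes a plain-text file with one entry per line, which this post does not spell out; only the `domain:` prefix form is confirmed by the answer above. The function name `build_disavow_entries` and the example URLs are hypothetical.

```python
def build_disavow_entries(urls, domains):
    """Build a de-duplicated list of disavow entries.

    Each domain becomes its own 'domain:' entry (example.com and
    example.co.uk are separate), and each URL is kept exactly as given,
    because www and non-www URLs count as different URLs.
    """
    entries = []
    seen = set()
    for domain in domains:
        host = domain.strip().lower()
        if not host:
            continue  # skip blank input
        entry = "domain:" + host
        if entry not in seen:
            seen.add(entry)
            entries.append(entry)
    for url in urls:
        entry = url.strip()
        if entry and entry not in seen:
            seen.add(entry)
            entries.append(entry)
    return entries


# Assumed file layout: one entry per line.
lines = build_disavow_entries(
    urls=["http://www.example.com/page.html", "http://example.com/page.html"],
    domains=["example.com", "example.co.uk"],
)
file_body = "\n".join(lines) + "\n"
```

Review any generated list by hand before uploading it, since disavowing good links is easy to do by accident.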

Posted by Jonathan Simon, Webmaster Trends Analyst

Automatic Google Pagerank Update Monitor

Google Pagerank isn’t as useful a metric as it used to be, and on its own, has little to do with where you actually rank for a particular keyword. However, while Google themselves state it is an indication of the reputation of a page, it is worth having…

Make the web faster with mod_pagespeed, now out of Beta

If your page is on the web, speed matters. For developers and webmasters, making your page faster shouldn’t be a hassle, which is why we introduced mod_pagespeed in 2010. Since then the development team has been working to improve the functionality, quality and performance of this open-source Apache module that automatically optimizes web pages and their resources. Now, after almost two years and eighteen releases, we are announcing that we are taking off the Beta label.

We’re committed to working with the open-source community to continue evolving mod_pagespeed, including more, better and smarter optimizations and support for other web servers. Over 120,000 sites are already using mod_pagespeed to improve the performance of their web pages using the latest techniques and trends in optimization. The product is used worldwide by individual sites, and is also offered by hosting providers, such as DreamHost, Go Daddy and content delivery networks like EdgeCast. With the move out of beta we hope that even more sites will soon benefit from the web performance improvements offered through mod_pagespeed.

mod_pagespeed is a key part of our goal to help make the web faster for everyone. Users prefer faster sites and we have seen that faster pages lead to higher user engagement, conversions, and retention. In fact, page speed is one of the signals in search ranking and ad quality scores. Besides evangelizing for speed, we offer tools and technologies to help measure, quantify, and improve performance, such as Site Speed Reports in Google Analytics, PageSpeed Insights, and PageSpeed Optimization products. In fact, both mod_pagespeed and PageSpeed Service are based on our open-source PageSpeed Optimization Libraries project, and are important ways in which we help websites take advantage of the latest performance best practices.

To learn more about mod_pagespeed and how to incorporate it in your site, watch our recent Google Developers Live session or visit the mod_pagespeed product page.

Posted by Joshua Marantz and Ilya Grigorik, Google PageSpeed Team

Rich snippets guidelines

Webmaster level: All

Traditional, text-only, search result snippets aim to summarize the content of a page in our search results. Rich snippets (shown above) allow webmasters to help us provide even better summaries using structured data markup that they can add to their pages. Today we’re introducing a set of guidelines to help you implement high quality structured data markup for rich snippets.

Once you’ve correctly added structured data markup to your site, rich snippets are generated algorithmically based on that markup. If the markup on a page offers an accurate description of the page’s content, is up-to-date, and is visible and easily discoverable on your page and by users, our algorithms are more likely to decide to show a rich snippet in Google’s search results.

Alternatively, if the rich snippets markup on a page is spammy, misleading, or otherwise abusive, our algorithms are much more likely to ignore the markup and render a text-only snippet. Keep in mind that, while rich snippets are generated algorithmically, we do reserve the right to take manual action (e.g., disable rich snippets for a specific site) in cases where we see actions that hurt the experience for our users.

To illustrate these guidelines with some examples:

- If your page is about a band, make sure you mark up concerts being performed by that band, not by related bands or bands in the same town.

- If you sell products through your site, make sure reviews on each page are about that page’s product and not the store itself.

- If your site provides song lyrics, make sure reviews are about the quality of the lyrics, not the quality of the song itself.

In addition to the general rich snippets quality guidelines we’re publishing today, you’ll find usage guidelines for specific types of rich snippets in our Help Center. As always, if you have any questions or feedback, please tell us in the Webmaster Help Forum.

Posted by Jeremy Lubin, Consumer Experience Specialist, & Pierre Far, Webmaster Trends Analyst

Google Webmaster Guidelines updated

Webmaster level: All

Today we’re happy to announce an updated version of our Webmaster Quality Guidelines. Both our basic quality guidelines and many of our more specific articles (like those on link schemes or hidden text) have been reorganized and expanded to provide you with more information about how to create quality websites for both users and Google.

The main message of our quality guidelines hasn’t changed: Focus on the user. However, we’ve added more guidance and examples of behavior that you should avoid in order to keep your site in good standing with Google’s search results. We’ve also added a set of quality and technical guidelines for rich snippets, as structured markup is becoming increasingly popular.

We hope these updated guidelines will give you a better understanding of how to create and maintain Google-friendly websites.

Posted by Betty Huang & Eric Kuan, Google Search Quality Team

Keeping you informed of critical website issues

Webmaster level: All

Having a healthy and well-performing website is important, both to you as the webmaster and to your users. When we discover critical issues with a website, Webmaster Tools will now let you know by automatically sending an email with more information.

We’ll only notify you about issues that we think have significant impact on your site’s health or search performance and which have clear actions that you can take to address the issue. For example, we’ll email you if we detect malware on your site or see a significant increase in errors while crawling your site.

For most sites these kinds of issues will occur rarely. If your site does happen to have an issue, we cap the number of emails we send over a certain period of time to avoid flooding your inbox. If you don’t want to receive any email from Webmaster Tools you can change your email delivery preferences.

We hope that you find this change a useful way to stay up-to-date on critical and important issues regarding your site’s health. If you have any questions, please let us know via our Webmaster Help Forum.

Posted by John Mueller, Webmaster Trends Analyst, Google Zürich

Structured Data Testing Tool

Today we’re excited to share the launch of a shiny new version of the rich snippet testing tool, now called the structured data testing tool. The major improvements are:

- We’ve improved how we display rich snippets in the testing tool to better match how they appear in search results.

- The brand new visual design makes it clearer what structured data we can extract from the page, and how that may be shown in our search results.

- The tool is now available in languages other than English to help webmasters from around the world build structured-data-enabled websites.

Here’s what it looks like:

The new structured data testing tool works with all supported rich snippets and authorship markup, including applications, products, recipes, reviews, and others.

Try it yourself and, as always, if you have any questions or feedback, please tell us in the Webmaster Help Forum.

Written by Yong Zhu on behalf of the rich snippets testing tool team

Answering the top questions from government webmasters

Webmaster level: Beginner – Intermediate

Government sites, from city to state to federal agencies, are extremely important to Google Search. For one thing, governments have a lot of content — and government websites are often the canonical source of information that’s important to citizens. Around 20 percent of Google searches are for local information, and local governments are experts in their communities.

That’s why I’ve spoken at the National Association of Government Webmasters (NAGW) national conference for the past few years. It’s always interesting speaking to webmasters about search, but the people running government websites have particular concerns and questions. Since some questions come up frequently I thought I’d share this FAQ for government websites.

Question 1: How do I fix an incorrect phone number or address in search results or Google Maps?

Although managing their agency’s site is plenty of work, government webmasters are often called upon to fix problems found elsewhere on the web too. By far the most common question I’ve taken is about fixing addresses and phone numbers in search results. In this case, government site owners really can do it themselves, by claiming their Google+ Local listing. Incorrect or missing phone numbers, addresses, and other information can be fixed by claiming the listing.

Most locations in Google Maps have a Google+ Local listing — businesses, offices, parks, landmarks, etc. I like to use the San Francisco Main Library as an example: it has contact info, detailed information like the hours they’re open, user reviews and fun extras like photos. When we think users are searching for libraries in San Francisco, we may display a map and a listing so they can find the library as quickly as possible.

If you work for a government agency and want to claim a listing, we recommend using a shared Google Account with an email address at your .gov domain if possible. Usually, ownership of the page is confirmed via a phone call or post card.

Question 2: I’ve claimed the listing for our office, but I have 43 different city parks to claim in Google Maps, and none of them have phones or mailboxes. How do I claim them?

Use the bulk uploader! If you have 10 or more listings / addresses to claim at the same time, you can upload a specially-formatted spreadsheet. Go to www.google.com/places/, click the “Get started now” button, and then look for the “bulk upload” link.

If you run into any issues, use the Verification Troubleshooter.

Question 3: We’re moving from a .gov domain to a new .com domain. How should we move the site?

We have a Help Center article with more details, but the basic process involves the following steps:

- Make sure you have both the old and new domain verified in the same Webmaster Tools account.

- Use a 301 redirect on all pages to tell search engines your site has moved permanently.

- Don’t do a single redirect from all pages to your new home page — this gives a bad user experience.

- If there’s no 1:1 match between pages on your old site and your new site (recommended), try to redirect to a new page with similar content.

- If you can’t do redirects, consider cross-domain canonical links.

- Make sure to check if the new location is crawlable by Googlebot using the Fetch as Google feature in Webmaster Tools.

- Use the Change of Address tool in Webmaster Tools to notify Google of your site’s move.

- Have a look at the Links to Your Site in Webmaster Tools and inform the important sites that link to your content about your new location.

- We recommend not implementing other major changes at the same time, like large-scale content, URL structure, or navigational updates.

- To help Google pick up new URLs faster, use the Fetch as Google tool to ask Google to crawl your new site, and submit a Sitemap listing the URLs on your new site.

- To prevent confusion, it’s best to retain control of your old site’s domain and keep redirects in place for as long as possible — at least 180 days.
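The page-by-page redirect idea in the steps above can be sketched in Python as follows. This is only an illustration of the mapping logic, not server configuration: the host names, the `PAGE_OVERRIDES` table, and the function name `redirect_for` are all hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

OLD_HOST = "www.example.gov"  # hypothetical old domain
NEW_HOST = "www.example.com"  # hypothetical new domain

# Hypothetical overrides for old pages with no 1:1 match on the new site;
# each points at a new page with similar content.
PAGE_OVERRIDES = {
    "/parks/old-list.html": "/parks/",
}


def redirect_for(old_url):
    """Return (301, new_url) for a URL on the old host, or None otherwise."""
    parts = urlsplit(old_url)
    if parts.hostname != OLD_HOST:
        return None
    # Keep the same path by default so every page maps to its counterpart,
    # rather than sending everything to the new home page.
    new_path = PAGE_OVERRIDES.get(parts.path, parts.path)
    new_url = urlunsplit((parts.scheme, NEW_HOST, new_path, parts.query, ""))
    return 301, new_url
```

Keeping the path and query string intact is what makes this a per-page mapping; only pages listed in the override table are sent somewhere different.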

What if you’re moving just part of the site? This question came up too — for example, a city might move its “Tourism and Visitor Info” section to its own domain.

In that case, many of the same steps apply: verify both sites in Webmaster Tools, use 301 redirects, clean up old links, etc. You don’t need to use the Change of Address form in Webmaster Tools, though, since only part of your site is moving. If for some reason you’ll have some of the same content on both sites, you may want to include a cross-domain canonical link pointing to the preferred domain.
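As a rough illustration, a cross-domain canonical link is a link element in the head of the duplicate page that names the preferred URL. The small helper below (canonical_link is a made-up name, and the example URL is hypothetical) builds that tag and escapes the URL so it is safe to place in HTML.

```python
from html import escape


def canonical_link(preferred_url: str) -> str:
    """Build the <link rel="canonical"> tag for the <head> of a duplicate page."""
    # Escape &, <, > and quotes so the URL is a valid attribute value.
    return '<link rel="canonical" href="{}">'.format(escape(preferred_url, quote=True))


if __name__ == "__main__":
    # Hypothetical preferred URL on the new tourism domain.
    print(canonical_link("https://visit.example.com/events?month=5&year=2013"))
```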

Question 4: We’ve done a ton of work to create unique titles and descriptions for pages. How do we get Google to pick them up?

First off, that’s great! Better titles and descriptions help users decide to click through to get the information they need on your page. The government webmasters I’ve spoken with care a lot about the content and organization of their sites, and work hard to provide informative text for users.

Google’s generation of page titles and descriptions (or “snippets”) is completely automated and takes into account both the content of a page and references to it that appear on the web. Changes are picked up as we recrawl your site. But you can do two things to let us know about URLs that have changed:

- Submit an updated XML Sitemap so we know about all of the pages on your site.

- In Webmaster Tools, use the Fetch as Google feature on a URL you’ve updated. Then you can choose to submit it to the index. You can choose to submit all of the linked pages as well: if you’ve updated an entire section of your site, you might want to submit the main page or an index page for that section to let us know about a broad collection of URLs.
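If your site doesn’t already generate a Sitemap, here is a minimal sketch of building one with Python’s standard library. It follows the sitemaps.org 0.9 format with only the loc and lastmod fields; the function name build_sitemap and the example URLs and dates are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a Sitemap from (url, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # lastmod tells crawlers when the page last changed.
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages whose titles and descriptions were updated.
    pages = [
        ("https://www.example.com/parks/", "2013-01-15"),
        ("https://www.example.com/permits/", None),
    ]
    print(build_sitemap(pages))
```

Save the output as an XML file on your site and submit it in Webmaster Tools; a real file would normally also start with an XML declaration.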

Question 5: How do I get into the YouTube government partner program?

For this question, I have bad news, good news, and then even better news. The bad news is that the government partner program has been discontinued. But don’t worry, because most of the features of the program are now available to your regular YouTube account. For example, you can now upload videos longer than 10 minutes.

Did I say I had even better news? YouTube has added a lot of functionality useful for governments in the past year:

- You can now broadcast live streaming video to YouTube via Hangouts On Air (requires a Google+ account).

- You can link your YouTube account with your Webmaster Tools account, making it the “official channel” for your site.

- Automatic captions continue to get better and better, supporting more languages.

I hope this FAQ has been helpful, but I’m sure I haven’t covered everything government webmasters want to know. I highly recommend our Webmaster Academy, where you can learn all about making your site search-engine friendly. If you have a specific question, please feel free to add a question in the comments or visit our really helpful Webmaster Central Forum.

Posted by Jason Morrison, Search Quality Team

Site Errors Breakdown

Webmaster level: All

Today we’re announcing more detailed Site Error information in Webmaster Tools. This information is useful when looking for the source of your Site Errors. For example, if your site suffers from server connectivity problems, your server may simply be misconfigured; then again, it could also be completely unavailable! Since each Site Error (DNS, Server Connectivity, and Robots.txt Fetch) comprises several unique issues, we’ve broken down each category into more specific errors to provide you with a better analysis of your site’s health.

Site Errors will display statistics for each of your site-wide crawl errors from the past 90 days. In addition, it will show the failure rates for any category-specific errors that have been affecting your site.

If you’re not sure what a particular error means, you can read a short description of it by hovering over its entry in the legend. You can find more detailed information by following the “More info” link in the tooltip.

We hope that these changes will make Site Errors even more informative and helpful in keeping your site in tip-top shape. If you have any questions or suggestions, please let us know through the Webmaster Tools Help Forum.

Written by Cesar Cuenca and Tiffany Wang, Webmaster Tools Interns

Search Queries Alerts in Webmaster Tools

We know many of you check Webmaster Tools daily (thank you!), but not everybody has the time to monitor the health of their site 24/7. It can be time-consuming to analyze all the data and identify the most important issues. To make it a little bit easier, we’ve been incorporating alerts into Webmaster Tools. We process the data for your site and try to detect the events that could be most interesting for you. Recently we rolled out alerts for Crawl Errors, and today we’re introducing alerts for Search Queries data.

The Search Queries feature in Webmaster Tools shows, among other things, impressions and clicks for your top pages over time. For most sites, these numbers follow regular patterns, so when sudden spikes or drops occur, it can make sense to look into what caused them. Some changes are due to differing demand for your content; others may be due to technical issues that need to be resolved, such as broken redirects. For example, a steady stream of clicks which suddenly drops to zero is probably worth investigating.
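If you export your own daily click counts, you can run a similar sanity check yourself. The sketch below is not how Webmaster Tools computes its alerts; it is a simple assumption-laden example that flags any day whose clicks differ from the average of the previous week by more than a chosen fraction. The function name, the 7-day window, and the 50% threshold are all arbitrary choices.

```python
from statistics import mean


def sudden_changes(daily_clicks, window=7, threshold=0.5):
    """Return indexes of days that deviate sharply from the trailing average.

    A day is flagged when its clicks differ from the mean of the previous
    `window` days by more than `threshold` (as a fraction of that mean).
    """
    flagged = []
    for i in range(window, len(daily_clicks)):
        baseline = mean(daily_clicks[i - window:i])
        if baseline == 0:
            continue  # no meaningful baseline to compare against
        change = abs(daily_clicks[i] - baseline) / baseline
        if change > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # A steady stream of clicks that suddenly drops to zero.
    clicks = [100] * 8 + [0, 0]
    print(sudden_changes(clicks))
```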

The alerts look like this:

We’re still working on the sensitivity threshold of the messages and welcome your feedback in our help forums. We hope the new alerts will be useful. Don’t forget to sign up for email forwarding to receive them in your inbox.

Posted by Javier Tordable, Tech Lead, Webmaster Tools

Configuring URL Parameters in Webmaster Tools

Webmaster Level: Intermediate to Advanced

We recently filmed a video (with slides available) to provide more information about the URL Parameters feature in Webmaster Tools. The URL Parameters feature is designed for webmasters who want to help Google crawl their site more efficiently, and who manage a site with — you guessed it — URL parameters! To be eligible for this feature, the URL parameters must be configured in key/value pairs like item=swedish-fish or category=gummy-candy in the URL http://www.example.com/product.php?item=swedish-fish&category=gummy-candy.
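To see what “key/value pairs” means in practice, here is a short sketch using Python’s standard urllib.parse module to pull the parameters out of the example URL above. The helper name url_parameters is just for illustration.

```python
from urllib.parse import parse_qsl, urlsplit


def url_parameters(url):
    """Return the key/value pairs from a URL's query string as a dict."""
    return dict(parse_qsl(urlsplit(url).query))


if __name__ == "__main__":
    url = "http://www.example.com/product.php?item=swedish-fish&category=gummy-candy"
    print(url_parameters(url))
```

Running this kind of check over a sample of your URLs is one way to list which parameter names your site actually uses before you configure them.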

Guidance for common cases when configuring URL Parameters. Music in the background masks the ongoing pounding of my neighbor’s construction!

URL Parameter settings are powerful. By telling us how your parameters behave and the recommended action for Googlebot, you can improve your site’s crawl efficiency. On the other hand, if configured incorrectly, you may accidentally recommend that Google ignore important pages, resulting in those pages no longer being available in search results. (There’s an example provided in our improved Help Center article.) So please take care when adjusting URL Parameters settings, and be sure that the actions you recommend for Googlebot make sense across your entire site.

Written by Maile Ohye, Developer Programs Tech Lead