The Benefits Of Thinking Like Google

Shadows are the color of the sky.

It’s one of those truths that is difficult to see, until you look closely at what’s really there.

To see something as it really is, we should try to identify our own bias, and then let it go.

“Unnatural” Links

This article tries to make sense of Google’s latest moves regarding links.

It’s a reaction to Google’s update of their Link Schemes policy. Google’s policy states “Any links intended to manipulate PageRank or a site’s ranking in Google search results may be considered part of a link scheme and a violation of Google’s Webmaster Guidelines.” I wrote on this topic, too.

Those with a vested interest in the link building industry – which is pretty much all of us – might spot the problem.

Google’s negative emphasis, of late, has been about links. Their message is not new, just the emphasis. The new emphasis could pretty much be summarized thus: “any link you build for the purpose of manipulating rank is outside the guidelines.” Google have never encouraged activity that could manipulate rankings, which is precisely what people building links for SEO purposes attempt to do. Building links for the purposes of higher rank AND staying within Google’s guidelines will not be easy.

Some SEOs may kid themselves that they are link building “for the traffic”, but if that were the case, they’d have no problem insisting those links were scripted so they could monitor traffic statistics, or at the very least, no-followed, so there could be no confusion about intent.

How many do?

Think Like Google

Ralph Tegtmeier: In response to Eric’s assertion, “I applaud Google for being more and more transparent with their guidelines”, Ralph writes: “Man, Eric: isn’t the whole point of your piece that this is exactly what they’re NOT doing, becoming ‘more transparent’?”

Indeed.

In order to understand what Google is doing, it can be useful to downplay any SEO bias, i.e. what we may like to see from an SEO standpoint, and instead try to look at the world from Google’s point of view.

I ask myself “if I were Google, what would I do?”

Clearly I’m not Google, so these are just my guesses, but if I were Google, I’d see all SEO as a potential competitive threat to my click advertising business. The more effective the SEO, the more of a threat it is. SEOs can’t be eliminated, but they can be corralled and managed in order to reduce the level of competitive threat. Partly, this is achieved by algorithmic means. Partly, this is achieved using public relations. If I were Google, I would think SEOs are potentially useful if they could be encouraged to provide high quality content and make sites easier to crawl, as this suits my business case.

I’d want commercial webmasters paying me for click traffic. I’d want users to be happy with the results they are getting, so they keep using my search engine. I’d consider webmasters to be unpaid content providers.

Do I (Google) need content? Yes, I do. Do I need any content? No, I don’t. If anything, there is too much content, and a lot of it is junk. In fact, I’m getting more and more selective about the content I do show. So selective, in fact, that a lot of the content I show above the fold is controlled and “published”, in the broadest sense of the word, by me (Google) in the form of the Knowledge Graph.

It is useful to put ourselves in someone else’s position to understand their truth. If you do, you’ll soon realise that Google aren’t the webmaster’s friend if your aim, as a webmaster, is to do anything that “artificially” enhances your rank.

So why are so many SEOs listening to Google’s directives?

Rewind

A year or two ago, it would have been madness to suggest webmasters would pay to remove links, but that’s exactly what’s happening. Not only that, webmasters are doing Google link quality control. For free. They’re pointing out the links they see as being “bad” – links Google’s algorithms may have missed.

Check out this discussion. One exasperated SEO tells Google that she tries hard to get links removed, but doesn’t hear back from site owners. The few who do respond want money to take the links down.

It is understandable that site owners don’t spend much time removing links. First, from a site owner’s perspective, taking links down costs time and brings them no benefit, especially if they receive numerous requests. Secondly, taking down links may be perceived as an admission of guilt. Why would a webmaster admit their links are “bad”?

The answer to this problem, from Google’s John Mueller is telling.

A shrug of the shoulders.

It’s a non-problem. For Google. If you were Google, would you care if a site you may have relegated for ranking manipulation gets to rank again in future? Plenty more where they came from: there are thousands more sites just like it, and many of them are owned by people who don’t engage in ranking manipulation.

Does anyone really think their rankings are going to return once they’ve been flagged?

Jenny Halasz then hinted at the root of the problem. Why can’t Google simply not count the links they don’t like? Why make webmasters jump through arbitrary hoops? The question was side-stepped.

If you were Google, why would you make webmasters jump through hoops? Is it because you want to make webmasters’ lives easier? Well, that obviously isn’t the case. Removing links is a tedious, futile process. Google suggest using the disavow links tool, but the twist is you can’t just put up a list of links you want to disavow.

Say what?

No, you need to show you’ve made some effort to remove them.

Why?

If I were Google, I’d see this information supplied by webmasters as being potentially useful. They provide me with a list of links that the algorithm missed, or considered borderline, but the webmaster has reviewed and thinks look bad enough to affect their ranking. If the webmaster simply provided a list of links dumped from a link tool, it’s probably not telling Google much Google doesn’t already know. There’s been no manual link review.
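For readers who haven’t used the tool, the disavow file itself is just plain text. Below is a minimal Python sketch, assuming the format Google documents for the tool: one URL or `domain:` entry per line, with lines starting with `#` treated as comments that the tool ignores. The `LinkSource` class, the `build_disavow_file` helper, and every domain and note in it are hypothetical. Because comments are ignored, the outreach notes here only serve as the webmaster’s own record; any evidence of removal effort would have to be presented some other way.

```python
from dataclasses import dataclass, field


@dataclass
class LinkSource:
    """One backlink source flagged during a manual review (hypothetical data)."""
    target: str                 # a full URL, or a bare domain name
    whole_domain: bool = False  # True -> disavow every link from that domain
    outreach_notes: list[str] = field(default_factory=list)


def build_disavow_file(sources: list[LinkSource]) -> str:
    """Render reviewed link sources as disavow-file text.

    Assumed format: one entry per line, a 'domain:' prefix for whole
    domains, and '#' at the start of comment lines (ignored by the tool).
    """
    lines: list[str] = []
    for src in sources:
        # Keep a private record of removal attempts as comment lines.
        lines.extend(f"# {note}" for note in src.outreach_notes)
        lines.append(f"domain:{src.target}" if src.whole_domain else src.target)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    reviewed = [
        LinkSource("spammy-directory.example", whole_domain=True,
                   outreach_notes=["Emailed owner twice, no reply"]),
        LinkSource("http://blog.example/paid-post.html",
                   outreach_notes=["Owner asked for a fee to remove the link"]),
    ]
    print(build_disavow_file(reviewed), end="")
```

Running it prints two comment lines followed by two entries: `domain:spammy-directory.example` and the single blog URL.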

So, what webmasters are doing is helping Google by manually reviewing links and reporting bad links. How does this help webmasters?

It doesn’t.

It just increases the temperature of the water in the pot. Is the SEO frog just going to stay there, or is he going to jump?

A Better Use Of Your Time

Does anyone believe rankings are going to return to their previous positions after such an exercise? A lot of webmasters aren’t seeing changes. Will you?

Maybe.

But I think it’s the wrong question.

It’s the wrong question because it’s just another example of letting Google define the game. What are you going to do when Google define you right out of the game? If your service or strategy involves links right now, then in order to remain snow white, any links you place, for the purposes of achieving higher rank, are going to need to be no-followed in order to be clear about intent. Extreme? What’s going to be the emphasis in six months’ time? Next year? How do you know what you’re doing now is not going to be frowned upon, then need to be undone, next year?

A couple of years ago, it would have been unthinkable that webmasters would report and remove their own links, even paying for them to be removed, but that’s exactly what’s happening. So, what is next year’s unthinkable scenario?

You could re-examine the relationship and figure that what you do on your site is absolutely none of Google’s business. They can issue as many guidelines as they like, but they do not own your website, or the link graph, and therefore don’t have authority over you unless you allow it. Can they ban your site because you’re not compliant with their guidelines? Sure, they can. It’s their index. That is the risk. How do you choose to manage this risk?

It strikes me you can lose your rankings at any time whether you follow the current guidelines or not, especially when the goal-posts keep moving. So, the outcome of not following the guidelines, and of following the guidelines but not ranking well, is pretty much the same: no traffic. Do you have a plan to address the “no traffic from Google” risk, however that may come about?

Your plan might involve advertising on other sites that do rank well. It might involve, in part, a move to PPC. It might be to run multiple domains, some well within the guidelines, and some way outside them. Test, see what happens. It might involve beefing up other marketing channels. It might be to buy competitor sites. Your plan could be to jump through Google’s hoops if you do receive a penalty, see if your site returns, and if it does – great – until next time, that is.

What’s your long term “traffic from Google” strategy?

If all you do is “follow Google’s Guidelines”, I’d say that’s now a high risk SEO strategy.

Winning Strategies to Lose Money With Infographics

Google is getting a bit absurd with suggesting that any form of content creation that drives links should include rel=nofollow. Certainly some techniques may be abused, but if you follow the suggested advice, you are almost guaranteed to have a negative ROI on each investment – until your company goes under.

Some will describe such advice as taking a “sustainable” and “low-risk” approach, but such strategies are only “sustainable” and “low-risk” so long as ROI doesn’t matter & you are spending someone else’s money.

The advice on infographics in the above video suggests that embed code by default should include nofollow links.
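To make the distinction concrete, here is a minimal Python sketch of the kind of embed snippet an infographic publisher might hand out, with the nofollow default described in the video as a switch. The function name, markup, and example URLs are hypothetical; `rel="nofollow"` is the standard HTML link attribute value that search engines treat as a request not to pass ranking credit.

```python
from html import escape


def infographic_embed_code(image_url: str, page_url: str, title: str,
                           nofollow: bool = True) -> str:
    """Build an HTML snippet that embeds an infographic with a credit link.

    With nofollow=True (the default the advice calls for), both links carry
    rel="nofollow", asking search engines not to count them for ranking.
    """
    rel = ' rel="nofollow"' if nofollow else ""
    href = escape(page_url)  # escape &, <, > and quotes for use in attributes
    alt = escape(title)
    return (
        f'<a href="{href}"{rel}><img src="{escape(image_url)}" alt="{alt}"></a>\n'
        f'<p>Source: <a href="{href}"{rel}>{alt}</a></p>'
    )


if __name__ == "__main__":
    print(infographic_embed_code("https://example.com/chart.png",
                                 "https://example.com/research",
                                 "Hypothetical Industry Survey"))
```

Setting `nofollow=False` produces the followed version, the one that would let the credit links count toward the original source’s rankings.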

Companies can easily spend at least $2,000 to research, create, revise & promote an infographic. And something like 9 out of 10 infographics will go nowhere. That means you are spending about $20,000 for each successful viral infographic. And this presumes that you know what you are doing. Mix in a lack of experience, poor strategy, poor market fit, or poor timing and that cost only goes up from there.
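The per-success figure is simply total spend divided by the number of hits. A small Python sketch using the paragraph’s own numbers ($2,000 per infographic, roughly 1 in 10 succeeding); the second call uses a hypothetical 1-in-20 hit rate to show how quickly the cost climbs when experience, strategy, or timing is off. The function name is illustrative.

```python
def cost_per_success(cost_per_piece: int, attempts: int, successes: int) -> float:
    """Total spend divided by the number of pieces that actually took off."""
    if successes <= 0:
        raise ValueError("no successes: the cost per success is unbounded")
    return cost_per_piece * attempts / successes


# Figures from the paragraph above: $2,000 each, roughly 1 in 10 succeeds.
print(cost_per_success(2_000, attempts=10, successes=1))  # 20000.0
# Hypothetical worse case: 1 success in 20 attempts.
print(cost_per_success(2_000, attempts=20, successes=1))  # 40000.0
```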

If you run smaller & lesser known websites, quite often Google will rank a larger site that syndicates the infographic above the original source. They do that even when the links are followed. Mix in nofollow on the links and it is virtually guaranteed that you will get outranked by someone syndicating your infographic.

So if your own featured premium content, which you dropped 4 or 5 figures on, gets counted as duplicate content AND you don’t get links out of it, how exactly does the investment ever have any chance of paying back?

Sales?

Not a snowball’s chance in hell.

An infographic created around “the 10 best ways you can give me your money” won’t spread. And if it does spread, it will be people laughing at you.

I also find it a bit disingenuous to claim that people putting something that is 20,000 pixels large on their site are not actively vouching for it. If something was crap and people still felt like burning 20,000 pixels on syndicating it, surely they could add nofollow on their end to express their dissatisfaction and disgust with the piece.

Many dullards in the SEO industry give Google a free pass on any & all of their advice, as though it is always reasonable & should never be questioned. And each time it goes unquestioned, the ability to exist in the ecosystem as an independent player diminishes, as the entire industry moves toward being classified as some form of spam & whether you get hit depends far more on who you are than on what you do.

Does Google’s recent automated infographic generator give users embed codes with nofollow on the links? Not at all. Instead they give you the URL without nofollow & those URLs are canonicalized behind the scenes to flow the link equity into the associated core page.

No cost cut-n-paste mix-n-match = direct links. Expensive custom research & artwork = better use nofollow, just to be safe.

If Google actively adds arbitrary risks to some players while subsidizing others then they shift the behaviors of markets. And shift the markets they do!

Years ago Twitter allowed people who built their platform to receive credit links in their bio. Matt Cutts tipped off Ev Williams that the profile links should be nofollowed & that flow of link equity was blocked.

It was revealed in the WSJ that in 2009 Twitter’s internal metrics showed an 11% spammy Tweet rate & Twitter had a grand total of 2 “spam science” programmers on staff in 2012.

With smaller sites, they need to default everything to nofollow just in case anything could potentially be construed (or misconstrued) to have the intent to perhaps maybe sorta be aligned potentially with the intent to maybe sorta be something that could maybe have some risk of potentially maybe being spammy or maybe potentially have some small risk that it could potentially have the potential to impact rank in some search engine at some point in time, potentially.

A larger site can have over 10% of their site be spam (based on their own internal metrics) & set up their embed code so that the embeds directly link – and they can do so with zero risk.

@phillian Like all empires, ultimately Google will be the root of its own demise.— Cygnus SEO (@CygnusSEO) August 13, 2013

I just linked to Twitter twice in the above embed. If those links were directly to Cygnus it may have been presumed that either he or I are spammers, but put the content on Twitter with 143,199 Tweets in a second & those links are legit & clean. Meanwhile, fake Twitter accounts have grown to such a scale that even Twitter is now buying them to try to stop them.

Typically there is no presumed intent to spam so long as the links are going into a large site (sure there are a handful of token counter-examples shills can point at). By and large it is only when the links flow out to smaller players that they are spam. And when they do, they are presumed to be spam even if they point into featured content that cost thousands of dollars. You better use nofollow, just to play it safe!

That duality is what makes blind unquestioning adherence to Google scripture so unpalatable. A number of people are getting disgusted enough by it that they can’t help but comment on it: David Naylor, Martin Macdonald & many others DennisG highlighted.

Oh, and here’s an infographic for your pleasurings.

Google: Press Release Links

So, Google have updated their Webmaster Guidelines.

Here are a few common examples of unnatural links that violate our guidelines: … Links with optimized anchor text in articles or press releases distributed on other sites.

For example: There are many wedding rings on the market. If you want to have a wedding, you will have to pick the best ring. You will also need to buy flowers and a wedding dress.

In particular, they have focused on links with optimized anchor text in articles or press releases distributed on other sites. Google being Google, these rules are somewhat ambiguous. “Optimized anchor text”? The example they provide includes keywords in the anchor text, so keyword-rich anchor text counts as “optimized” and is therefore a violation of Google’s guidelines.

Ambiguously speaking, of course.

To put the press release change in context, Google’s guidelines state:

Any links intended to manipulate PageRank or a site’s ranking in Google search results may be considered part of a link scheme and a violation of Google’s Webmaster Guidelines. This includes any behavior that manipulates links to your site or outgoing links from your site

So, links gained, for SEO purposes – intended to manipulate ranking – are against Google Guidelines.

Google vs Webmasters

Here’s a chat…

In this chat, Google’s John Mueller says that, if the webmaster initiated it, then it isn’t a natural link. If you want to be on the safe side, John suggests using no-follow on links.

Google are being consistent, but what’s amusing is the complete disconnect on display from a few of the webmasters. Google have no problem with press releases, but if a webmaster wants to be on the safe side in terms of Google’s guidelines, the webmaster should no-follow the link.

Simple, right? If it really is a press release, and not an attempt to link build for SEO purposes, then why would a webmaster have any issue with adding a no-follow to a link?

He/she wouldn’t.

But because some webmasters appear to lack self-awareness about what it is they are actually doing, they persist with their line of questioning. I suspect what they really want to hear is “keyword links in press releases are okay.” Then, webmasters can continue to issue pretend press releases as a link building exercise.

They’re missing the point.

Am I Taking Google’s Side?

Not taking sides.

Just hoping to shine some light on a wider issue.

If webmasters continue to let themselves be defined by Google, they are going to get defined out of the game entirely. It should be an obvious truth – but sadly lacking in much SEO punditry – that Google is not on the webmaster’s side. Google is on Google’s side. Google often say they are on the user’s side, and there is certainly some truth in that.

However, when it comes to the webmaster, the webmaster is a dime-a-dozen content supplier who must be managed, weeded out, sorted and categorized. When it comes to the more “aggressive” webmasters, Google’s behaviour could be characterized as “keep your friends close, and your enemies closer”.

This is because some webmasters, namely SEOs, don’t just publish content for users, they compete with Google’s revenue stream. SEOs offer a competing service to click based advertising that provides exactly the same benefit as Google’s golden goose, namely qualified click traffic.

If SEOs get too good at what they do, then why would people pay Google so much money per click? They wouldn’t – they would pay it to SEOs, instead. So, if I were Google, I would see SEO as a business threat, and manage it – down – accordingly. In practice, I’d be trying to redefine SEO as “quality content provision”.

Why don’t Google simply ignore press release links? Easy enough to do. Why go this route of making it public? After all, Google are typically very secret about algorithmic topics, unless the topic is something they want you to hear. And why do they want you to hear this? An obvious guess would be that it is done to undermine link building, and SEOs.

Big missiles heading your way.

Guideline Followers

The problem in letting Google define the rules of engagement is they can define you out of the SEO game, if you let them.

If an SEO is not following the guidelines – guidelines that are always shifting – yet claims to be, then they may be opening themselves up to legal liability. In one recent example, a case is underway alleging lack of performance:

Last week, the legal marketing industry was aTwitter (and aFacebook and even aPlus) with news that law firm Seikaly & Stewart had filed a lawsuit against The Rainmaker Institute seeking a return of their $49,000 in SEO fees and punitive damages under civil RICO

…but it’s not unreasonable to expect that a somewhat easier route for litigants in the future might be “not complying with Google’s guidelines”, unless the SEO agency disclosed it.

SEO is not the easiest career choice, huh.

One group that is likely to be happy about this latest Google push is legitimate PR agencies, media-relations departments, and publicists. As a commenter on WMW pointed out:

I suspect that most legitimate PR agencies, media-relations departments, and publicists will be happy to comply with Google’s guidelines. Why? Because, if the term “press release” becomes a synonym for “SEO spam,” one of the important tools in their toolboxes will become useless.

Just as real advertisers don’t expect their ads to pass PageRank, real PR people don’t expect their press releases to pass PageRank. Public relations is about planting a message in the media, not about manipulating search results

However, I’m not sure that will mean press releases are seen as any more credible, as press releases never enjoyed a stellar reputation even before SEO, but it may thin the crowd somewhat, which increases an agency’s chances of getting their client seen.

Guidelines Honing In On Target

One resource referred to in the video above was this article, written by Amit Singhal, who is head of Google’s core ranking team. Note that it was written in 2011, so it’s nothing new. Here’s how Google say they determine quality:

we aren’t disclosing the actual ranking signals used in our algorithms because we don’t want folks to game our search results; but if you want to step into Google’s mindset, the questions below provide some guidance on how we’ve been looking at the issue:

- Would you trust the information presented in this article?

- Is this article written by an expert or enthusiast who knows the topic well, or is it more shallow in nature?

- Does the site have duplicate, overlapping, or redundant articles on the same or similar topics with slightly different keyword variations?

- Are the topics driven by genuine interests of readers of the site, or does the site generate content by attempting to guess what might rank well in search engines?

- Does the article provide original content or information, original reporting, original research, or original analysis?

- Does the page provide substantial value when compared to other pages in search results?

- How much quality control is done on content?

….and so on. Google’s rhetoric is almost always about “producing high quality content”, because this is what Google’s users want, and what Google’s users want, Google’s shareholders want.

It’s not a bad thing to want, of course. Who would want poor quality content? But as most of us know, producing high quality content is no guarantee of anything. Great for Google, great for users, but often not so good for publishers as the publisher carries all the risk.

Take a look at the Boston Globe, sold, along with a boatload of content, for 93% less than it was previously bought for. Quality content sure, but is it a profitable business? Emphasis on content without adequate marketing is not a sure-fire strategy. Bezos has just bought the Washington Post, of course, and we’re pretty sure that isn’t a content play, either.

High quality content often has a high upfront production cost attached to it. Given measly web advertising rates, the high possibility of invisibility, and the risk of content being scraped and ripped off, it is no wonder webmasters also push their high quality content in order to ensure it ranks. What other choice have they got?

To not do so is also risky.

Even eHow, well known for cheap factory line content, is moving toward subscription membership revenues.

The Somewhat Bigger Question

Google can move the goal-posts whenever they like. What you’re doing today might be frowned upon tomorrow. One day, your content may be made invisible, and there will be nothing you can do about it, other than start again.

Do you have a contingency plan for such an eventuality?

Johnon puts it well:

The only thing that matters is how much traffic you are getting from search engines today, and how prepared you are for when some (insert adjective here) Googler shuts off that flow of traffic.

To ask about the minutiae of Google’s policies and guidelines is to miss the point. The real question is how prepared are you when Google shuts off your flow of traffic because they’ve reset the goal posts?

Focusing on the minutiae of Google’s policies is, indeed, to miss the point.

This is a question of risk management. What happens if your main site, or your client’s site, runs foul of a Google policy change and gets trashed? Do you run multiple sites? Run one site with no SEO strategy at all, whilst you run other sites that push hard? Do you stay well within the guidelines and trust that will always be good enough? If you stay well within the guidelines, but don’t rank, isn’t that effectively the same as a ban i.e. you’re invisible? Do you treat search traffic as a bonus, rather than the main course?

Be careful about putting Google’s needs before your own. And manage your risk, on your own terms.

Google Keyword (Not Provided) Now Over 50%

Most Organic Search Data is Now Hidden

Over the couple of years since its launch, Google’s keyword (not provided) has received quite a bit of exposure, with people discussing all sorts of tips on estimating its impact & finding alternate sources of data (like competitive research tools & webmaster tools).

What hasn’t received anywhere near enough exposure (and should be discussed daily) is that the sole purpose of the change was anti-competitive abuse from the market monopoly in search.

The site which provided a count for (not provided) recently displayed over 40% of queries as (not provided), but that percentage didn’t include the large percent of mobile search users that were showing no referrals at all & were showing up as direct website visitors. On July 30, Google started showing referrals for many of those mobile searchers, using keyword (not provided).

According to research by RKG, mobile click prices are nearly 60% of desktop click prices, while mobile search click values are only 22% of desktop click prices. Until Google launched enhanced AdWords campaigns, they understated the size of mobile search by showing many mobile searchers as direct visitors. But now that AdWords advertisers can’t opt out of mobile ads, Google has every incentive to promote what a big growth channel mobile search is for their business.

Looking at the analytics data for some non-SEO websites over the past 4 days, I see Google referring an average of 86% of the 26,233 search visitors, with 13,413 being displayed as keyword (not provided).

Hiding The Value of SEO

Google is not only hiding half of their own keyword referral data, but they are hiding so much more than half that even when you mix in Bing and Yahoo! you still get over 50% of the total hidden.

Google’s 86% of the 26,233 searches is 22,560 searches.

Keyword (not provided) being shown for 13,413 is 59% of 22,560. That means Google is hiding at least 59% of the keyword data for organic search. While they are passing a significant share of mobile search referrers, there is still a decent chunk that is not accounted for in the change this past week.
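The percentages in the last few paragraphs can be re-derived in a few lines. This Python sketch simply plugs in the figures quoted above (26,233 search visits, an 86% Google share, 13,413 (not provided) visits); the function name is illustrative. The last line shows why over 50% of the total is hidden even after mixing in Bing and Yahoo!: the Google (not provided) visits alone exceed half of all search visits.

```python
def not_provided_shares(search_visits: int, google_share: float,
                        not_provided: int) -> tuple[int, float, float]:
    """Return Google visits, and the (not provided) share of Google and of all search."""
    google_visits = round(search_visits * google_share)
    return (google_visits,
            not_provided / google_visits,
            not_provided / search_visits)


google_visits, of_google, of_all = not_provided_shares(26_233, 0.86, 13_413)
print(google_visits)                          # 22560
print(f"{of_google:.0%} of Google visits")    # 59% of Google visits
print(f"{of_all:.0%} of all search visits")   # 51% of all search visits
```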

Not passing keywords is just another way for Google to increase the perceived risk & friction of SEO, while making SEO seem less necessary, which has been part of “the plan” for years now.

When one digs into keyword referral data & ad blocking, there is a bad odor emitting from the GooglePlex.

Buy AdWords ads and the data gets sent. Rank organically and most of the data is hidden.

Subsidizing Scammers Ripping People Off

A number of the low end “solutions” providers scamming small businesses looking for SEOs are taking advantage of the opportunity that keyword (not provided) offers them. A buddy of mine took over SEO for a site that had shown absolutely zero sales growth despite a year of 15% monthly increases in search traffic. Looking at the on-site changes, the prior SEOs did nothing over the time period. Looking at the backlinks, nothing there either.

So what happened?

Well, when keyword data isn’t shown, it is pretty easy for someone to run a clickbot to show keyword (not provided) Google visitors & claim that they were “doing SEO.”

And searchers looking for SEO will see those same scammers selling bogus solutions in AdWords. Since they are selling a non-product / non-service, their margins are pretty high. Endorsed by Google as the best, they must be good.

Google does prefer some types of SEO over others, but their preference isn’t cast along the black/white divide you imagine. It has nothing to do with spam or the integrity of their search results. Google simply prefers ineffective SEO over SEO that works. No question about it. They abhor any strategies that allow guys like you and me to walk into a business and offer a significantly better ROI than AdWords.

This is no different than the YouTube videos “recommended for you” that teach you how to make money on AdWords by promoting Clickbank products which are likely to get your account flagged and banned. Ooops.

Anti-competitive Funding Blocking Competing Ad Networks

John Andrews pointed to Google’s blocking (then funding) of AdBlock Plus as an example of their monopolistic inhibiting of innovation.

sponsoring Adblock is changing the market conditions. Adblock can use the money provided by Google to make sure any non-Google ad is blocked more efficiently. They can also advertise their addon better, provide better support, etc. Google sponsoring Adblock directly affects Adblock’s ability to block the adverts of other companies around the world. – RyanZAG

Turn AdBlock Plus on & search for credit cards on Google and get ads.

Do that same search over at Bing & get no ads.

How does a smaller search engine or a smaller ad network compete with Google on buying awareness, building a network AND paying the other kickback expenses Google forces into the marketplace?

They can’t.

Which is part of the reason a monopoly in search can be used to control the rest of the online ecosystem.

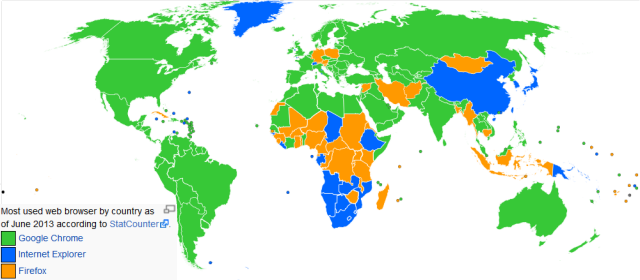

Buying Browser Marketshare

Already the #1 web browser, Google Chrome buys marketshare with shady one-click bundling in software security installs.

If you do that stuff in organic search or AdWords, you might be called a spammer employing deceptive business practices.

When Google does it, it’s “good for the user.”

Vampire Sucking The Lifeblood Out of SEO

Google tells Chrome users “not signed in to Chrome (You’re missing out – sign in).” Log in to Chrome & searchers don’t pass referral information. Google also promotes Firefox blocking the passage of keyword referral data in search, but when it comes to their own cookies being at risk, that is unacceptable: “Google is pulling out all the stops in its campaign to drive Chrome installs, which is understandable given Microsoft and Mozilla’s stance on third-party cookies, the lifeblood of Google’s display-ad business.”

What do we call an entity that considers something “its lifeblood” while sucking it out of others?

What Is Your SEO Strategy?

How do you determine your SEO strategy?

Actually, before you answer, let’s step back.

What Is SEO, Anyway?

“Search engine optimization” has always been an odd term as it’s somewhat misleading. After all, we’re not optimizing search engines.

SEO came about when webmasters optimized websites. Specifically, they optimized the source code of pages to appeal to search engines. The intent of SEO was to ensure websites appeared higher in search results than if the site was simply left to site designers and copywriters. Often, designers would inadvertently make sites uncrawlable, and therefore invisible in search engines.

But there was more to it than just enhancing crawlability.

SEOs examined the highest ranking page, looked at the source code, often copied it wholesale, added a few tweaks, then republished the page. In the days of Infoseek, this was all you needed to do to get an instant top ranking.

I know, because I used to do it!

At the time, I thought it was an amusing hacker trick. It also occurred to me that such positioning could be valuable. Of course, this rather obvious truth occurred to many other people, too. A similar game had been going on in the Yahoo Directory where people named sites “AAAA…whatever” because Yahoo listed sites in alphabetical order. People also used to obsessively track spiders, spotting fresh spiders (Hey Scooter!) as they appeared and….cough……guiding them through their websites in a favourable fashion.

When it comes to search engines, there’s always been gaming. The glittering prize awaits.

The new breed of search engines made things a bit more tricky. You couldn’t just focus on optimizing code in order to rank well. There was something else going on.

So, SEO was no longer just about optimizing the underlying page code, SEO was also about getting links. At that point, SEO jumped from being just a technical coding exercise to a marketing exercise. Webmasters had to reach out to other webmasters and convince them to link up.

A young upstart, Google, placed heavy emphasis on links, making use of a clever algorithm that sorted “good” links from, well, “evil” links. This helped make Google’s result set more relevant than other search engines. Amusingly enough, Google once claimed it wasn’t possible to spam Google.

Webmasters responded by spamming Google.

Or, should I say, Google likely categorized what many webmasters were doing as “spam”, at least internally, and may have regretted their earlier hubris. Webmasters sought links that looked like “good” links. Sometimes, they even earned them.

And Google has been pushing back ever since.

Building links predated both SEO and search engines, but once backlinks were counted in ranking scores, link building was blended into SEO. These days, most SEOs consider link building a natural part of SEO. But, as we’ve seen, it wasn’t always this way.

We sometimes get comments on this blog about how marketing is different from SEO. Well, it is, but if you look at the history of SEO, there has always been marketing elements involved. Getting external links could be characterized as PR, or relationship building, or marketing, but I doubt anyone would claim getting links is not SEO.

More recently, we’ve seen a massive change in Google. It’s a change that is likely being rolled out over a number of years. It’s a change that makes a lot of old school SEO a lot less effective in the same way introducing link analysis made meta-tag optimization a lot less effective.

My takeaways from Panda are that this is not an individual change or something with a magic bullet solution. Panda is clearly based on data about the user interacting with the SERP (Bounce, Pogo Sticking), time on site, page views, etc., but it is not something you can easily reduce to 1 number or a short set of recommendations. To address a site that has been Pandalized requires you to isolate the “best content” based on your user engagement and try to improve that.

Google is likely applying different algorithms to different sectors, so the SEO tactics used in one sector don’t work in another. They’re also looking at engagement metrics, in an attempt to figure out whether the user really wanted the result they clicked on. When you consider Google’s work on PPC landing pages, this development is obvious. It’s the same measure. If people click back too often, or too quickly, then the landing page quality score drops. This is likely happening in the SERPs, too.

So, just like link building once got rolled into SEO, engagement will be rolled into SEO. Some may see that as the death of SEO, and in some ways it is, just like when meta-tag optimization, and other code optimizations, were deprecated in favour of other, more useful relevancy metrics. In other ways, it’s just SEO changing, as it always has.

The objective remains the same.

Deciding On Strategy

So, how do you construct your SEO strategy? What will be your strategy going forward?

Some read Google’s Webmaster Guidelines. They’ll watch every Matt Cutts video. They follow it all to the letter. There’s nothing wrong with this approach.

Others read Google’s Guidelines. They’ll watch every Matt Cutts video. They read between the lines and do the complete opposite. Nothing wrong with that approach, either.

It depends on what strategy you’ve adopted.

One of the problems with letting Google define your game is that they can move the goalposts anytime they like. The linking that used to be acceptable, at least in practice, often no longer is. Thinking of firing off a press release? Well, think carefully before loading it with keywords:

This is one of the big changes that may have not been so clear for many webmasters. Google said, “links with optimized anchor text in articles or press releases distributed on other sites,” is an example of an unnatural link that violate their guidelines. The key are the examples given and the phrase “distributed on other sites.” If you are publishing a press release or an article on your site and distribute it through a wire or through an article site, you must make sure to nofollow the links if those links are “optimized anchor text.”

Do you now have to go back and unwind a lot of link building in order to stay in their good books? Or, perhaps you conclude that links in press releases must work a little too well, else Google wouldn’t be making a point of it. Or conclude that Google is running a cunning double-bluff hoping you’ll spend a lot more time doing things you think Google does or doesn’t like, but really Google doesn’t care about at all, as they’ve found a way to mitigate it.

Bulk guest posting was also flagged as a no-no in Google’s webmaster guidelines, along with keyword-rich anchors in article directories. Even how a site monetizes, for example by blocking the back button, can be considered “deceptive” and grounds for banning.

How about the simple strategy of finding the top-ranking sites, doing what they do, and adding a little more? Do you avoid saturated niches and aim for the low-hanging fruit? Do you try to guess all the metrics and make sure you cover every one? Do you churn and burn? Do you play the long game with one site? Is social media and marketing part of your game, or do you leave these aspects out of the SEO equation? Is your currency persuasion?

Think about your personal influence and the influence you can manage without dollars or gold or permission from Google. Think about how people throughout history have sought karma, invested in social credits, and injected good will into their communities, as a way to “prep” for disaster. Think about it.

We may be “search marketers” and “search engine optimizers” who work within the confines of an economy controlled (manipulated) by Google, but our currency is persuasion. Persuasion within a market niche transcends Google.

It would be interesting to hear the strategies you use, and whether you plan on using a different strategy going forward.

Authority Labs Review

There are quite a few rank tracking options on the market today and selecting one (or two) can be difficult. Some have lots of integrations, some have no integrations. Some are trustworthy, some are not.

Deciding on the feature set is tough enough bu…

Pandas And Loyalty

SEOs debate ranking metrics over and over, but if there’s one thing for sure, it’s that Google no longer works the same way it used to.

The fundamental shift in the past couple of years has been more emphasis on what could be characterized as engagement factors.

I became convinced that Panda is really the public face of a much deeper switch towards user engagement. While the Panda score is sitewide, the engagement “penalty” or weighting effect also occurs at the individual page level. The pages or content areas that were hurt less by Panda seem to be the ones that were not also being hurt by the engagement issue.

Inbound links to a page still count, as inbound links are engagement factors. How about a keyword in the title tag? On-page text? They are certainly basic requirements, but of low importance when it comes to determining ranking. This is because the web is not short of content, so there is always likely to be on-topic content to serve against a query. Instead, Google refines its results in order to deliver the most relevant content.

Google does so by checking a range of metrics to see what people really think about the content Google is serving, and the oldest form of this check is an inbound link, which is a form of vote by users. Engagement metrics are just a logical extension of the same idea.

Brands appear to have an advantage, not because they fit into an arbitrary category marked “brand,” but because of signals that define them as being more relevant i.e. a brand keyword search likely results in a high number of click-thrus, and few click-backs. This factor, when combined with other metrics, such as their name in the backlink, helps define relevance.

Social signals are also playing a part, and likely measured in the same way as brands. If enough people talk about something, associate terms with it, and point to it, and users don’t click-back in sufficient number, then it’s plausible that activity results in higher relevance scores.

We don’t know for sure, of course. We can only speculate based on limited black-box testing, which will always be incomplete. However, even if some SEOs don’t accept the ranking boost that comes from engagement metrics, there’s still a sound business reason to pay attention to the main difference between brand and non-brand sites.

Loyalty

Investing In The Return

Typically, internet marketers place a lot of emphasis, and spend, on getting a new visitor to a site. They may also place emphasis on converting the buyer, using conversion optimization and other persuasion techniques.

But how much effort are they investing to ensure the visitor comes back?

Some may say ensuring the visitor comes back isn’t SEO, but in a post-Panda environment, SEO is about a lot more than the first click. As you build up brand searches, bookmarking, and word-of-mouth metrics, you’ll likely create the type of signals Google favours.

Focusing on the returning visitor also makes sense from a business point of view. Selling to existing customers – whether you’re selling a physical thing or a point of view – is cheaper than selling to a new customer.

Acquiring new customers is expensive (five to ten times the cost of retaining an existing one), and the average spend of a repeat customer is a whopping 67 percent more than a new one.

So, customer loyalty pays off on a number of levels.

Techniques To Foster Loyalty

Return purchasers, repeat purchasers and repeat visitors can often be missed in analytics, or their importance not well understood. According to the Q2 2012 Adobe analysis, return and repeat purchasers made up only 8% of site visitors, yet they generated a disproportionately high 41% of site sales. What’s more, return and repeat purchasers had higher average order values and conversion rates than shoppers with no previous purchase history.

One obvious technique, if you’re selling products, is to use loyalty programs. Offer points, discounts and other monetary rewards. One drawback of this approach is that giving rewards and pricing discounts is essentially purchasing loyalty. Customers will only be “loyal” so long as they think they’re getting a bargain, so this approach works best if you’re in a position to be price competitive. Contrast this with the deeper, emotional loyalty to a brand achieved by the likes of Apple, Google and Coke.

Fostering deeper loyalty, then, is about finding out what really matters to people, hopefully something other than price.

Take a look at Zappos. What makes customers loyal to Zappos? Customers may get better prices elsewhere, but Zappos is mostly about service. Zappos is about ease of use. Zappos is about lowering the risk of purchase by offering free returns. Zappos have identified and provided what their market really wants – high service levels and reasonable pricing – so people keep coming back.

Does anyone think the engagement metrics of Zappos would be overlooked by Google? If Zappos were not seen as relevant by Google, then there would be something badly wrong – with Google. Zappos have high brand awareness in the shoe sector, built on solving a genuine problem for visitors. They offer high service levels, which keeps people coming back, and keeps customers talking about them.

Sure, they’re a well-funded, outlier internet success, but the metrics will still apply to all verticals. The brands who engage customers the most, and continue to do so, are, by definition, most relevant.

Another thing to consider, especially if you’re a small operator competing against big players, is closely related to service. Try going over-the-top in your attentiveness to customers. Paul Graham, of Y Combinator, talks about how start-ups should go well beyond what big companies do, and the payback is increased loyalty:

But perhaps the biggest thing preventing founders from realizing how attentive they could be to their users is that they’ve never experienced such attention themselves. Their standards for customer service have been set by the companies they’ve been customers of, which are mostly big ones. Tim Cook doesn’t send you a hand-written note after you buy a laptop. He can’t. But you can. That’s one advantage of being small: you can provide a level of service no big company can.

That strategy syncs with Seth Godin’s Purple Cow notion of “being remarkable”, i.e. do something different – good different – so people remark upon it. These days, and in the context of SEO, that translates into social media mentions and links, and brand searches, all of which will help keep the Google Gods smiling, too.

The feedback loop of high engagement will also help you refine your relevance:

Over-engaging with early users is not just a permissible technique for getting growth rolling. For most successful startups it’s a necessary part of the feedback loop that makes the product good. Making a better mousetrap is not an atomic operation. Even if you start the way most successful startups have, by building something you yourself need, the first thing you build is never quite right…..

Gamification

Gamification has got a lot of press in the last few years as a means of fostering higher levels of engagement and return visits.

The concept is called gamification – that is, “implementing design concepts from games, loyalty programs, and behavioral economics, to drive user engagement”. M2 Research expects that US companies alone will be spending $3b per year on gamification technologies and services before the end of the decade.

People have natural desires to be competitive, to achieve, to gain status, closure and feel altruistic. Incorporating game features helps fulfil these desires.

And games aren’t just for kids. According to The Gamification Revolution, by Zichermann and Linder – a great read on gamification strategy, BTW – the average “gamer” in the US is a 43-year-old female. Gaming is one of the few channels where levels of attention are increasing. Contrast this with content-based advertising, which is often rendered invisible by repetition.

This is not to say everything must be turned into a game. Rather, pay attention to the desires that games fulfil, and try to incorporate those aspects into your site, where appropriate. Central to the idea of gamification is orienting around the deep desires of a visitor for some form of reward and status.

The user may want to buy product X, but if they can feel a sense of achievement in doing so, they’ll be engaging at a deeper level, which could then lead to brand loyalty.

eBay, a pure web e-commerce play dealing in stuff, has a “chief engagement officer”, someone whose job it is to tweak eBay so it becomes more game-like. This, in turn, drives customer engagement and loyalty. If your selling history becomes a marker of achievement and status, then how likely are you to start anew with a competitor?

This is one of the reasons eBay has remained so entrenched.

Gamification has also been used as a tool for customer engagement, and for encouraging desirable website usage behaviour. Additionally, gamification is readily applicable to increasing engagement on sites built on social network services. For example, in August 2010, one site, DevHub, announced that they have increased the number of users who completed their online tasks from 10% to 80% after adding gamification elements. On the programming question-and-answer site Stack Overflow, users receive points and/or badges for performing a variety of actions, including spreading links to questions and answers via Facebook and Twitter. A large number of different badges are available, and when a user’s reputation points exceed various thresholds, he or she gains additional privileges, including at the higher end, the privilege of helping to moderate the site.

Gamification, in terms of the web, is relatively new. It didn’t even appear in Google Trends until 2010. But it’s not just some buzzword: it has practical application, and it can help improve ranking by boosting engagement metrics through loyalty and referrals. Loyalty marketing guru Frederick Reichheld has claimed a strong link between customer loyalty marketing and customer referral.

Obviously, this approach is highly user-centric. Google orient around this principle, too. “Focus on the user and all else will follow.”

Google has always had the mantra of ‘focus on the user and all else will follow,’ so the company puts a significant amount of effort into researching its users. In fact, Au estimates that 30 to 40 per cent of her 200-strong worldwide user experience team is comprised of user researchers.

Google fosters return visits and loyalty by giving the user what they want, and they use a lot of testing to ensure that happens. Websites that focus on keywords, but don’t give the user what they want, either due to a lack of focus, lack of depth, or by using deliberate bait-and-switch, are going against Google’s defining principles and will likely ultimately lose the SEO game.

The focus on the needs and desires of the user, from before their first click through to their return visits, should be stronger than ever.

Attention

According to Microsoft research, the average new visitor gives your site 10 seconds or less. Personally, I think ten seconds sounds somewhat generous! If a visitor makes it past 30 seconds, you’re lucky to get two minutes of their attention, in total. What does this do to your engagement metrics if Google is counting click backs, and clicks to other pages in the same domain?

And these metrics are even worse for mobile.
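We can’t see Google’s click-back data, but you can approximate something similar from your own analytics. Below is a minimal Python sketch that computes a “quick exit” rate, assuming you can export each session as a (duration in seconds, pages viewed) pair. The `quick_exit_rate` function, the session format and the 10-second default threshold (borrowed from the Microsoft figure) are hypothetical, and this is not how Google measures anything; it is just a rough proxy for your own site.

```python
from typing import Iterable, List, Tuple

# Hypothetical session record: (duration_seconds, pages_viewed), exported from
# your own analytics. This is a proxy only; Google's click-back data is not visible.
Session = Tuple[float, int]


def quick_exit_rate(sessions: Iterable[Session], threshold_seconds: float = 10.0) -> float:
    """Share of sessions that viewed a single page and lasted under the threshold."""
    session_list: List[Session] = list(sessions)
    if not session_list:
        return 0.0
    quick = sum(
        1
        for duration, pages in session_list
        if pages == 1 and duration < threshold_seconds
    )
    return quick / len(session_list)


# Five made-up sessions: two are single-page visits shorter than 10 seconds.
sample = [(4, 1), (8, 1), (45, 3), (12, 1), (200, 5)]
rate = quick_exit_rate(sample)
```

Some analytics packages can’t record time on a single-page visit without extra event tracking, so check how your tool measures duration before reading too much into the number.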

There’s been a lot of diversification in terms of platforms, and many users are stuck in gamified silo environments, like Facebook, so it’s getting harder and harder to attract people out of their comfort zone and to your brand.

So it’s no longer just about building brand; we also need to think about more ways to foster ongoing engagement and attention. We’ve seen that people are spending a lot of attention on games. In so doing, they have been conditioned to expect heightened rewards, stimulation and feedback in return for that attention.

Do you reward visitors for their attention?

If not, think about ways you can build reward and status for visitors into your site.

Sites like 99 Designs use a game to solicit engagement from suppliers as a point of differentiation for buyers. Challenges, such as the “win the design” competition, deliver dozens of solutions at no extra cost to the user. The winners also receive status, which is itself a form of “payment” for their efforts. We could argue that this type of gamification is weighted heavily against the supplier, but there’s no doubting the heightened level of engagement and attraction for the buyer. Not only do they get multiple web design ideas for the price of one, they get to be the judge in a design version of the X-Factor.

Summary

Hopefully, this article has provided some food for thought. If we were going to measure the success of loyalty and engagement campaigns, we might look at recency, i.e. how long ago users last visited; frequency, i.e. how often they visit in a given period; and duration, i.e. how long they stay when they do come. We could then map these metrics back against rankings, and look for patterns.
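To make recency, frequency and duration concrete, here is a minimal Python sketch that computes all three per visitor from a visit log. The `Visit` record, the `rfd_metrics` function, the 30-day window and the sample data are hypothetical; in practice you’d pull visits from whatever analytics tool you use.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


@dataclass
class Visit:
    """Hypothetical visit record exported from analytics."""
    visitor_id: str
    start: datetime
    duration_seconds: float


def rfd_metrics(
    visits: List[Visit], now: datetime, window_days: int = 30
) -> Dict[str, Tuple[int, int, float]]:
    """Map each visitor to (recency_days, frequency, avg_duration_seconds).

    recency:   whole days since the visitor's most recent visit
    frequency: number of visits within the last `window_days`
    duration:  mean visit length in seconds within that window (0.0 if none)
    """
    window_start = now - timedelta(days=window_days)
    by_visitor: Dict[str, List[Visit]] = defaultdict(list)
    for visit in visits:
        by_visitor[visit.visitor_id].append(visit)

    result: Dict[str, Tuple[int, int, float]] = {}
    for visitor_id, history in by_visitor.items():
        last_seen = max(v.start for v in history)
        in_window = [v for v in history if v.start >= window_start]
        avg_duration = (
            sum(v.duration_seconds for v in in_window) / len(in_window)
            if in_window
            else 0.0
        )
        result[visitor_id] = ((now - last_seen).days, len(in_window), avg_duration)
    return result


# Made-up data: visitor "a" is recent and regular, "b" hasn't been back in months.
now = datetime(2013, 1, 31)
visits = [
    Visit("a", datetime(2013, 1, 30), 120),
    Visit("a", datetime(2013, 1, 10), 60),
    Visit("b", datetime(2012, 12, 1), 30),
]
metrics = rfd_metrics(visits, now)
```

Pairing these numbers with rankings is left out, since how you’d join the two depends on the rank-tracking data you have.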

But even if we’re overestimating the effect of engagement on rankings, it still makes good sense from a business point of view. It costs a lot to get the first visit, but a whole lot less to keep happy visitors coming back, particularly on brand searches.

Think about ways to reward visitors for doing so.

Building And Selling An SEO Business

There’s an in-depth discussion going on in the members area about how to sell an SEO business. There will surely be readers of the blog interested in the topic, too, so I thought I’d look at the more general issues of selling a business – SEO, or otherwise. Specifically, how to structure a business so it can be sold.

Service-Based Businesses

Service-based businesses are attractive because they’re easy to establish.

Who can sell a service? The answer is simple–anyone and everyone. Everyone is qualified because each of us has skills, knowledge or experience that other people are willing to pay for in the form of a service; or they’re willing to pay you to teach them your specific skill or knowledge. Selling services knows no boundaries–anyone with a need or desire to earn extra money, work from home, or start and operate a full-time business can sell a service, regardless of age, business experience, education or current financial resources.

The downside of service-based businesses is that they’re easy to establish, so any service area that’s worth any money soon gets flooded with competition. The ease with which competitors can enter service-based markets is one reason service-based businesses can be more difficult to sell for a reasonable price.

Selling A Consultancy

Some businesses are more difficult to sell than others. Agency businesses, such as SEO consultancies, can be especially problematic if they’re oriented around highly customized services.

In Built To Sell: Creating a Business That Can Thrive Without You, John Warrillow outlines the reasons why, and what can be done about it. The book is an allegory about the troubles the founder of a design agency experiences when, after eight years, he is fed up with the demands of the business and decides to sell, only to find it’s essentially worthless. His business creates logos, does SEO, web design, and brochures, so many of his trials and tribulations will sound familiar to readers of SEOBook.com.

Smart businesspeople believe that you should build a company to be sold even if you have no intention of cashing out or stepping back anytime soon.

There are 23 million businesses in the US, yet only a few hundred thousand sell each year. Is this simply because the owners want to hold onto them? Yes, in some cases. But mainly it’s because a lot of them can’t be sold due to structural issues. They might be worth something to the seller, but they’re not worth much to anyone else.

If You Were To Buy A Business, Would You Buy Yours?

If you put yourself in the shoes of a buyer, what would you be looking for in an SEO-related business? What are the traps?

We might start by looking at turnover. Let’s say turnover looks good. We may look at the customer list. Let’s say the customer list looks good, too. There are forward contracts. Typically, business owners place a lot of value on goodwill: the established reputation of a business, regarded as a quantifiable asset.

Frequently, goodwill is overvalued and here’s why:

It is fleeting.

A company may have happy customers, happy staff, and people may say good things about them, but that might all change next week. Let’s say Update Zebra, or whatever black-and-white exotic animal is heading our way next, is rolled out next month and trashes all the good SEO work built up over years. Is everyone still happy? Clients still happy? Staff still happy? Were there performance guarantees in place that will no longer be met? The most difficult thing about the SEO business is that critical aspects of delivery are beyond the SEO’s control.

Goodwill is so subjective and ephemeral that many investors deduct it completely when valuing a company. This is not to say a good name and reputation has no tangible value, but the ephemeral nature perhaps illustrates why buyers may place less value on goodwill than sellers. If you think most of your business value lies in goodwill, then you may have trouble selling for the price you desire.

….the only aspect of goodwill that can unequivocally offer comfort to an investor is the going-concern value of a company. This represents things such as the value of assets in place, institutional knowledge, reputational value not already captured by trade names, and superior location. All these attributes can lead to sources of competitive advantage and sustainable results; and/or they can give an entity the ability to develop hot products, as well as to achieve above-average earnings.

If a buyer discounts most or all of the goodwill, then what is left? There is staff. But staff can leave. There are forward contracts. How long do these contracts last? What are they worth? Will they roll over? Can they be cancelled or exited? A lot of the value of an agency business will lie in those forward contracts. What if the customers really like the founder on a personal level, and that is why they do business with him or her? A service business that is dependent on a small group of clients, who demand personal attention of the founder, and where the business competes with a lot of other players offering similar services is, in the words of John Warrillow, “virtually worthless”.

But there are changes that can be made to make it valuable.

Thinking Of Service Provision In Terms Of Product

Warrillow argues that a business can be made more valuable if it creates a standard service offering. Package services into a consistent, repeatable process that staff can follow without depending on you. The service should be something that clients need on a regular basis, so revenue is recurring.

His key point is to think like a product company, rather than a service company.

Good service companies have some unique approaches and talented people. But as long as they customize their approach to solving client problems, there is no scale to the business and its operations are contingent on people. When people are the main assets of the business – and they can come and go every night – the business is not worth very much.

That’s not to say a service business can’t be sold for good money. However, Warrillow points out that they’re typically purchased on less than ideal terms, often involving earn-outs. An earn-out is when the owner gets some money up front, but to get the full price, they need to hit earnings targets, and that may involve staying on for years. In that time, anything can happen, and the people buying the company may make those targets difficult or impossible to achieve. This doesn’t necessarily happen through malice – although sometimes it does – but can arise out of conflicting incentives.

There are other stories of entrepreneurs going through the change from service to products, although the process may not be quite as straightforward as the character in the book experiences:

So I’m sure there’s a lot of entrepreneurs out there that want to make the switch from consultancy to selling products. Belgian entrepreneur Inge Geerdens did exactly that: she pivoted successfully from providing services to selling a product…….A product is entirely different. You have costs that you can’t cut. In a service company, you can downsize everyone if you want, and run it at basically zero cost. It’s impossible to do that with a product. There’s hosting, development, upgrades, bug fixes, support: those are costs that you can’t flatten in any way. Your developers need new PC’s a lot sooner than consultants!

Nonetheless, the book offers seventeen tips on how to adjust a service based business to make it more saleable, and there are a lot more great ideas in it. Hopefully, outlining these tips will encourage you to buy the book – I’m not on commission, honest, but it’s a great read for anyone starting or running a business with the intention to sell it one day.

Let’s look at these tips in the context of SEO-related businesses.

1. Specialize

It’s difficult for small firms to be generalists.

Large firms can offer many services simply by having many specialists on the payroll. If a small business tries to do likewise, it ends up with staff wearing many hats. Someone who is a generalist is unlikely to be as proficient as a specialist, and this makes it more difficult to establish a point of difference and outperform the competition.

In terms of SEO, it’s already a pretty specialized area. The businesses that might be more difficult to sell in this market sector are the businesses offering multiple service lines including SEO, web design, brochures, etc, unless they have some local advantage that can’t easily be replicated.

However, positioning as a generalist can have its advantages, especially if the ecosystem changes:

Despite the corporate world’s insistence on specialization, the workers most likely to come out on top are generalists—but not just because of their innate ability to adapt to new workplaces, job descriptions or cultural shifts. Instead, according to writer Carter Phipps, author of 2012’s Evolutionaries, generalists will thrive in a culture where it’s becoming increasingly valuable to know “a little bit about a lot.” Meaning that where you fall on the spectrum of specialist to generalist could be one of the most important aspects of your personality—

This is perhaps more true of individual workers than entities.

2. Make Sure No One Client Makes Up More Than 15% Of Your Revenue

If a business is too reliant on one client, then risk is increased. If the business loses the client, then a big chunk of the business value walks out the door.

Even though we usually land an annual contract, once that runs out, the client can cut us loose without any of the messiness involved in firing employees — that is, no severance pay, no paying unemployment benefits, no risk of being sued for discrimination or harassment or any of the other three million reasons why an ex-employee sues an ex-employer.
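The 15% rule is simple arithmetic: divide each client’s revenue by total revenue and flag anyone over the line. A minimal Python sketch, assuming you have revenue per client for the same period; the `over_concentrated` function, client names and amounts are made up for illustration.

```python
from typing import Dict, List


def over_concentrated(revenue_by_client: Dict[str, float], max_share: float = 0.15) -> List[str]:
    """Return (sorted) clients whose share of total revenue exceeds max_share."""
    total = sum(revenue_by_client.values())
    if total <= 0:
        raise ValueError("total revenue must be positive")
    return sorted(
        client
        for client, revenue in revenue_by_client.items()
        if revenue / total > max_share
    )


# Hypothetical annual revenue: Acme is 40% of the total, everyone else 12%.
revenue = {
    "Acme": 40000, "Beta": 12000, "Gamma": 12000,
    "Delta": 12000, "Echo": 12000, "Foxtrot": 12000,
}
flagged = over_concentrated(revenue)
```

Here a buyer would see that losing Acme alone would take 40% of revenue out the door, which is exactly the risk the rule is meant to surface.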

3. Owning A Process Makes It Easier To Pitch And Puts You In Control

It is more difficult and time-consuming to sell highly configured solutions than packaged services. Highly configured services are also harder to scale, as this usually involves adding highly skilled, and therefore expensive, staff.

In SEO, it can be difficult to implement packaged, repeatable processes. Another way of looking at it might be to focus on adaptive processes, as used in Agile:

Reliable processes focus on outputs, not inputs. Using a reliable process, team members figure out ways to consistently achieve a given goal even though the inputs vary dramatically. Because of the input variations, the team may not use the same processes or practices from one project, or even one iteration, to the next. Reliability is results driven. Repeatability is input driven.

4. Don’t Become Synonymous With Your Company

Yahoo lived on without its founders. As will Google and Microsoft. The founders created “machines” that will “go” whether the founders are there or not.

Often, small consulting businesses are built around the founder, and this can make selling the company more difficult than need be. If customers want the founder handling or overseeing their account, then a buyer is going to wonder how much of the customer list will be left after the founder exits. It can even happen to big companies, like Apple, although their worry is perhaps more about the ability of successors to lead innovation.

If you never returned to your business, could it keep running?

Test yourself by asking these questions. If you can answer yes to all of them, you are well prepared:

- Do you have a strategy in place should you, or a key staff member, be unable to return to work for a long period, or never?

- Is this strategy documented and has it been communicated effectively to the business?

- Do you have a process in place that ensures qualified and appropriately trained people are able to take over competently when the current generation of managers and key people retire or move on?

- Has this strategy been documented and communicated to the key people involved?

- Do you have a ‘vision’ for your business? Does it link easily to the ‘values’ of the business and the behaviours of the people within the business?

- Has your ‘vision’ been well articulated and communicated to the people in the business?

- Are you able to demonstrate that your business plans point to a clearly defined, viable future?

- Have these plans been clearly articulated, documented and communicated to the key people within your organisation?

5. Avoid The Cash Suck

Essentially, try to get payment up-front. This is a lot easier to do for products than services. Alternatively, use progress billing. Either way, you need to be cash-flow positive.

Poor cashflow is the silent killer of many businesses, and poor, lumpy cashflow looks especially bad when a business is being packaged up for sale. It’s difficult to make accurate forward revenue predictions when looking at sporadic cashflow.
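To illustrate why lumpy cashflow is hard to forecast, here is a minimal Python sketch that compares two hypothetical businesses with the same annual revenue. The monthly figures are invented for illustration. The coefficient of variation (standard deviation divided by the mean) is used here as one simple measure of lumpiness, and a buyer's analyst may well use others.

```python
from statistics import mean, pstdev

def coefficient_of_variation(monthly_revenue):
    """Spread of monthly revenue relative to its average (0 means perfectly steady)."""
    return pstdev(monthly_revenue) / mean(monthly_revenue)

# Hypothetical figures: both businesses bill 120,000 over twelve months.
steady = [10_000] * 12
lumpy = [0, 0, 40_000, 0, 5_000, 0, 0, 50_000, 0, 0, 25_000, 0]

for name, series in [("steady", steady), ("lumpy", lumpy)]:
    print(f"{name}: total={sum(series)}, cv={coefficient_of_variation(series):.2f}")
```

Both businesses have identical totals, but the lumpy one varies by more than its own average from month to month. That leaves a buyer with little basis for predicting next month's revenue.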

6. Don’t Be Afraid To Say No To Projects

It can be difficult to turn down work, but if the work doesn’t fit into your existing processes, then you need to find extra resources to do it. Above all else, it’s a distraction from your core function, which will also likely be your competitive advantage.

This point is also highlighted well in The Pumpkin Plan:

Never, ever let distractions – often labelled as new opportunities – take hold. Weed them out fast.

7. Take Time To Figure Out How Many Pipeline Prospects Will Likely Lead To Sales

What’s your conversion rate? This helps a buyer determine the market potential. They want to know if they can expect the same rate of sales when they take it over.
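As a rough illustration, the Python sketch below computes a historical pipeline-to-sale conversion rate and applies it to the current pipeline. The function names and figures are hypothetical. The sketch also assumes past conversion rates are a fair guide to future ones, which is exactly what a buyer will want evidence for.

```python
def conversion_rate(prospects: int, closed_sales: int) -> float:
    """Fraction of pipeline prospects that historically became sales."""
    if prospects <= 0:
        raise ValueError("need at least one prospect to compute a rate")
    return closed_sales / prospects

def expected_sales(current_pipeline: int, rate: float) -> float:
    """Naive forecast: assumes the historical rate holds for the current pipeline."""
    return current_pipeline * rate

# Hypothetical history: 200 prospects over the past year, 30 became customers.
rate = conversion_rate(prospects=200, closed_sales=30)
forecast = expected_sales(current_pipeline=40, rate=rate)
print(f"conversion rate: {rate:.1%}, expected sales from 40 prospects: {forecast:.1f}")
```

Keeping these records over several years, rather than reconstructing them at sale time, makes the figure far more credible to a buyer.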

8. Two Sales Reps Are Always Better Than One

The reasoning is that salespeople are naturally competitive, so they will compete against each other, which benefits the business.

Most of us would agree that salespeople are competitive by nature. This is obvious and necessary. After all, these are the people we put on the front lines to win the day and bring back revenue-producing opportunities for the company. They are assessed on their sales performance via metrics and measurements, and they’re incentivized with compensation and perks. Many organizations even have annual sales drives or competitions to quantify the level of performance and measure who is the best.

9. Hire People Who Are Good At Selling Products, Not Services

If you’ve gone to the trouble of systematizing your services to turn them into a product, then you don’t want salespeople agreeing to meet a customer’s demands by bending the product to fit. Either the product meets their demands, or it doesn’t. I have known some service-oriented salespeople to sell solutions that the company doesn’t even offer, reasoning that the sale is the important thing, and the “back office” will work it out somehow!

Part of the rationale is that product-based salespeople will filter out clients who want something else, and focus on those who are best served by the product and likely to want more of it in future.

10. Ignore Your Profit And Loss Statement In The Year You Make A Switch To The Standardized Offering

It will likely show losses due to restructuring around a repeatable process or product. In any case, the future buyer is not buying the previous service business, they’re buying the new product business, and it is on these figures alone, going forward, that the business will be judged.

11. You Need At Least Two Years’ Financial Statements Reflecting Your Standardized Model

See above.

12. Build A Management Team And Offer A Long Term Incentive Plan That Rewards Their Loyalty

Just like a buyer doesn’t want to see a business dependent on the founder, a buyer doesn’t want a management team abandoning ship after they’ve bought a company, either, unless the buyer is happy putting their own management in place.

13. Find An Adviser For Whom You Will Be Neither Their Largest Nor Smallest Client. Ensure They Know The Industry

Warrillow advises using a boutique mergers and acquisitions firm, unless your business is worth well under $5 million, in which case a broker is likely to handle the sale.

Between 1995 and 2006 about a quarter of merging firms hired boutique banks as their advisors on mergers and acquisitions (M&A). Boutique advisors, often specialized by industry, are generally smaller and more independent than full-service banks. This paper investigates firms’ choice between boutique and full-service advisors and the impact of advisor choice on deal outcomes. We find that both acquirers and targets are more likely to choose boutique advisors in complex deals, suggesting that boutique advisors are chosen for their skill and expertise.

14. Avoid An Advisor Who Offers To Broker A Discussion With A Single Client. You Need To Ensure (Buyer) Competition

Sometimes, advisors are scouts for favoured clients. This can create a conflict of interest as the advisor may be trying to limit the bidding competition as a favor to the buyer, or because they’re earning higher margins from that one client for introducing deals.

15. Think Big. Write A Three-Year Business Plan That Paints A Picture Of What Is Possible For Your Business

Think in terms of what the business could be, not just what is possible within your current capabilities. For example, if the business is regional, what are the possibilities if it were scaled to every state? Or the world?

The buyer may have resources to leverage that you do not, such as established agencies in different markets. What happens if they sell your product to all their existing customers? Suddenly the scope of the business is increased, and the possible value is highlighted. Imagine what it would be like if you had the networks that were possible, as opposed to those you have at present.

16. If You Want A Sellable, Product Oriented Business, You Need To Use The Language Of One

“Clients” become “customers”, “firm” becomes “business”. It’s not just a change of positioning, it’s also a change of mindset and rhetoric, which in turn helps frame the company in the right light for the buyer.

17. Don’t Issue Stock Options To Retain Key Employees After Acquisition. Instead, Use A Simple Bonus.

Stock options can be complicated, although they are pretty common in the tech world. Warrillow’s argument against stock options is that they can complicate the sale process, as it’s reasonable that all stockholders get some say in the terms of the sale. This probably isn’t such an issue for larger businesses, as buyers would expect it.

Instead, Warrillow recommends a stay bonus, which is a cash reward for key staff if you sell the company. There should also be bonuses beyond the transition in order to incentivise them to stay.

Conclusion

There are a lot of good tips and ideas in Warrillow’s book, and I’ve really only scratched the surface with this summary. These tips require context to get the most out of them, but hopefully they’ve provided a good starting point.

Have you bought or sold an SEO business? It would be great to hear your experience of doing so. Do you agree with some of these tips, or disagree? Please feel free to add to the comments!

Scaling Your SEO Business in 2013 and Beyond

Is “it” over? No.

For SEO practitioners, it’s been quite a bumpy ride over the past few years. Costs have gone up, the broader economy has continued to go south, and margins may have gotten a bit tighter.

Algorithms have gotten more wild, more comple…

Buying A Business

As Google makes life more difficult for SEOs, pure-play SEO business models, such as affiliate and AdSense, can start to lose their shine. Google can remove you from AdSense without warning, and the affiliate model has always had hooks.

One problem with the affiliate and AdSense models has always been that it is difficult to lock in and build value with them. If the customer is “owned” by someone else, then much of the value of the affiliate/AdSense middle-man lies in the SERP placement. When it comes time to sell, apart from possible type-in domain value, how much intrinsic value does such a site have? Rankings are by no means assured.

So, if these areas are no longer earning you what they once did, it makes sense to explore other options, including vertical integration. Valuable online marketing skills can be readily bolted onto an existing business, preferably one operating in an area that hasn’t taken full advantage of search marketing in the past.

Even if you plan on building a business as opposed to buying, looking at businesses for sale in the market you intend to build can supply you with great information. You can gauge potential income, level of competition, and undertaking a thorough business analysis can help you discover the hidden traps before you experience them yourself. If there are a lot of businesses for sale in the market you’re looking to enter, and their figures aren’t that flash, then that’s obviously a warning sign.

Such analysis can also help you formulate your own exit strategy. What would make the business you’re building look attractive to a buyer further down the track? It can be useful to envision the criteria for a business you’d like to buy, and then analyse backwards to give you ideas on how to get there.

In this article, we’ll take the 3,000 ft view and look at the main considerations and the type of advice you’ll need. We’ll also take a look at the specifics of buying an existing SEO business.

Build Or Buy?

There are pros and cons to either option, and a lot depends on your current circumstances.

You might be an existing owner-operator who wants to scale up, or perhaps add another revenue stream. Can you get there faster and more profitably by taking over a competitor, rather than scaling up your own business?

If you’re an employee thinking of striking out on your own and becoming your own boss, can you afford the time it takes to build revenue from scratch, or would you prefer instant cashflow?

The Advantages Of Building From Scratch

Starting your own business is low cost. Many online businesses cost next to nothing to start. Register the business. Open a bank account. Fill out a few forms and get a business card. You’re in business.

You don’t need to pay for existing assets or a customer base, and you won’t get stuck with any of the negatives an existing business may have built up, like poor contracts, bad debts and a tainted reputation. You can design the business specifically for the market opportunity you’ve spotted. It’s yours. It will reflect you and no one else, at least to start with. The decisions are yours. You don’t have to honor existing contracts, or deal with clients or suppliers you had no part in signing up in the first place.

In short, you don’t have legacy issues.

What’s not to like?

There is more risk. You don’t yet know if your business will work, so it’s going to take time and money to find out. There are no guarantees. It can be difficult to get funding, as banks like to see a trading history before they’ll lend. It can be very difficult to get the right employees, especially early on, as highly skilled employees don’t tend to favor uncertain startups unless they’re getting an equity share. You have to build a structure from scratch. Is the structure appropriate? How will you know? You need to make a myriad of decisions, from advertising, to accommodation, to wages, to pricing, with little to go on apart from well-meaning advice and a series of hunches and experiments. Getting the numbers right typically takes a lot of trial and error, usually error. You have no cashflow. You have no customers. No systems. No location.

Not that the downsides should stop anyone from starting their own business. If it were easy, everyone would do it, but ask anyone who has started a business, and they’ll likely tell you that sure, it’s hard, but also fun, and they wouldn’t go back to being an employee.

There is another option.

Buy It

On the plus side, you have cash flow from day one. The killer of any business is a lack of cash flow. You can have customers signed up. People may be saying great things about you. You may have a great idea, and other people see that it is, indeed, a great idea.

But if the cash flow doesn’t turn up on time, the lights go out.

If you buy an existing business with sound cashflow, you not only keep the lights on, you’re also more likely to raise finance. In many cases, the seller can finance you. If that’s the case, then for a small deposit you get the cashflow you need, based on the total business value, from day one.

You’ve got a structure in place. If the business is profitable and running well, then you don’t need to experiment to find out what works. You know your costs, how much you need to spend, and how much to allocate to which areas. You can then optimize it. You have customers, likely assistance from the vendor, and the knowledge from existing suppliers and employees. There is a reduced risk of failure. Of course, you pay a price for such benefits.

To buy a business, you need money. What’s more, you’re betting that money on someone else’s idea, not your own, and it can be difficult to spot the traps. You can, of course, reshape and respin the business in your own image, but you may get stuck with a structure that wasn’t built to your specifications. You might not like some of the legacy issues, including suppliers, existing contracts or employees.

If you decide buying a business is the right thing for you, then you’ll need good advice.

Advice

According to a survey conducted by businessforsale.com, businesses can take an average of nine months to sell:

- 28% of brokers said within 6 months

- 31% of brokers said within 9 months

- 21% of brokers said within 12 months

- 10.5% of brokers said that more than 12 months was required to sell a business