Publisher Blocking: How the Web Was Lost

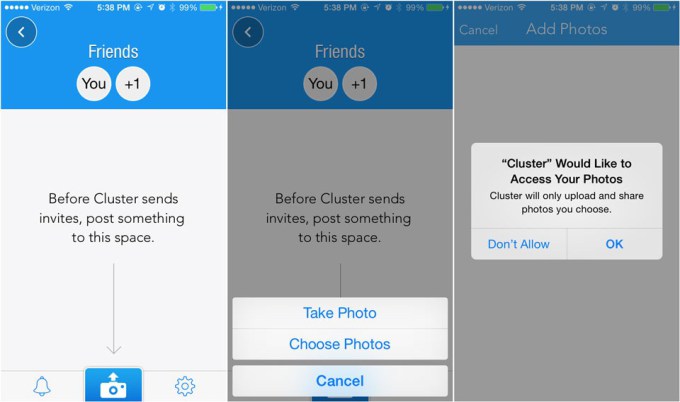

Streaming Apps

Google recently announced app streaming, which lets them showcase & deep link into apps in the search results even if users do not have those apps installed. Rather than users installing the app, Google runs the app on a computer in their cloud & then shows users a video of the app. Click targets, ads, etc. remain the same.

In writing about the new feature, Danny Sullivan wrote a section on “How The Web Could Have Been Lost”

Imagine if, in order to use the web, you had to download an app for each website you wanted to visit. To find news from the New York Times, you had to install an app that let you access the site through your web browser. To purchase from Amazon, you first needed to install an Amazon app for your browser. To share on Facebook, installation of the Facebook app for your browser would be required. That would be a nightmare.

…

The web put an end to this. More specifically, the web browser did. The web browser became a universal app that let anyone open anything on the web.

To meaningfully participate on those sorts of sites you still need an account. You are not going to be able to buy on Amazon without registration. Any popular social network which allows third party IDs to take the place of first party IDs will quickly become a den of spam until they close that loophole.

In short, you still have to register with sites to get real value out of them if you are doing much beyond reading an article. Without registration it is hard for them to personalize your experience & recommend relevant content.

Desktop Friendly Design

App indexing & deep linking of apps is a step in the opposite direction of the open web. It is supporting proprietary non-web channels which don’t link out. Further, if you thought keyword (not provided) heavily obfuscated user data, how much will data be obfuscated if the user isn’t even using your site or app, but rather is interacting via a Google cloud computer?

- Who visited your app? Not sure. It was a Google cloud computer.

- Where were they located? Not sure. It was a Google cloud computer.

- Did they have problems using your app? Not sure. It was a Google cloud computer.

- What did they look at? Can you retarget them? Not sure. It was a Google cloud computer.

Is an app maker too lazy to create a web equivalent version of their content? If so, let them be at a strategic disadvantage to everyone who put in the extra effort to publish their content online.

If Google has their remote quality raters consider a site as not meeting users’ needs because they don’t publish a “mobile friendly” version of their site, how can one consider a publisher who creates “app only” content as an entity which is trying hard to meet end user needs?

We know Google hates app install interstitials (unless they are sold by Google), thus the only reason Google would have for wanting to promote these sorts of services would be to justify owning, controlling & monetizing the user experience.

App-solutely Not The Answer

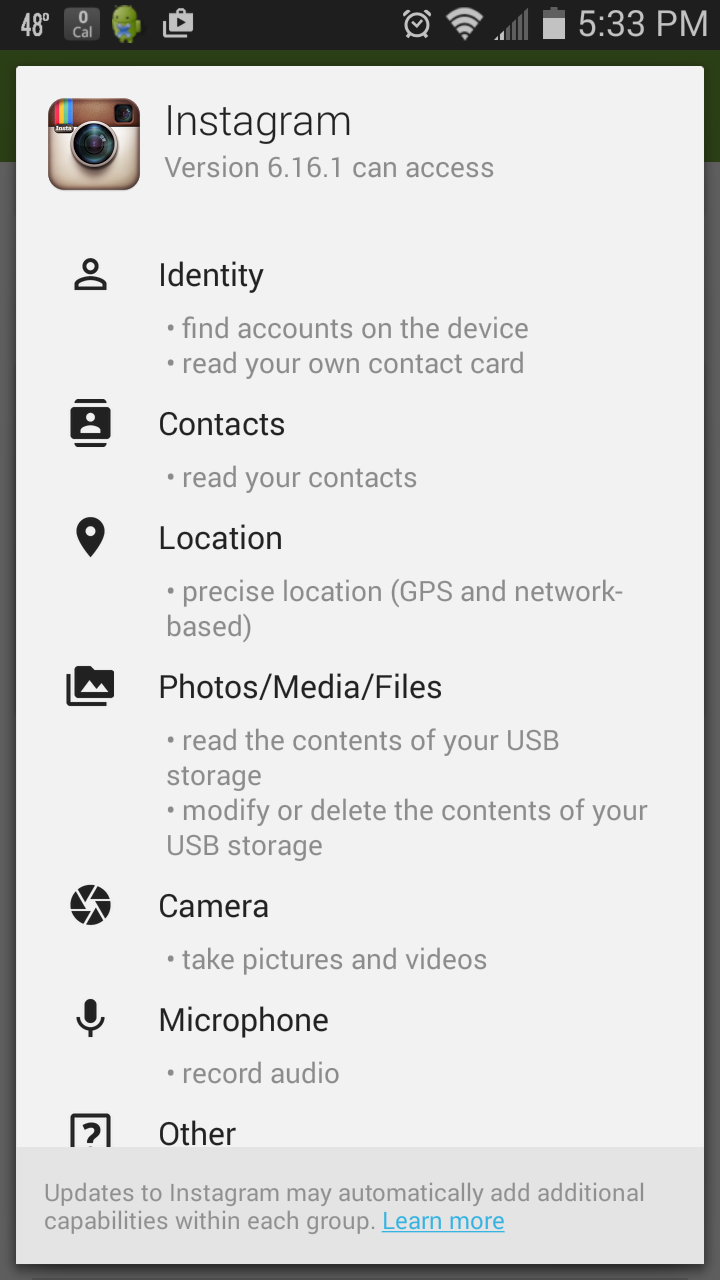

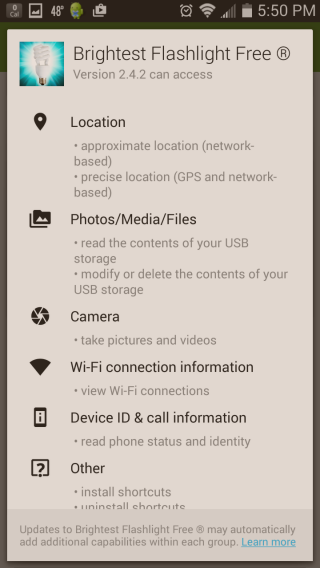

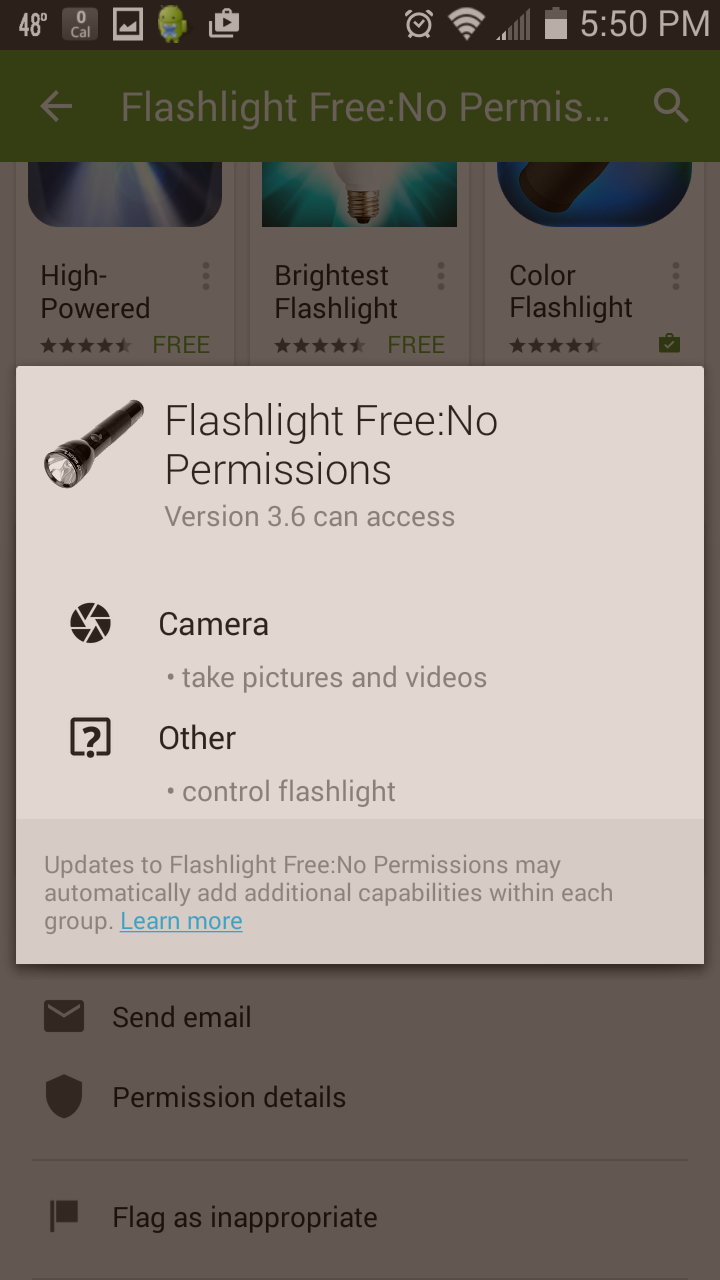

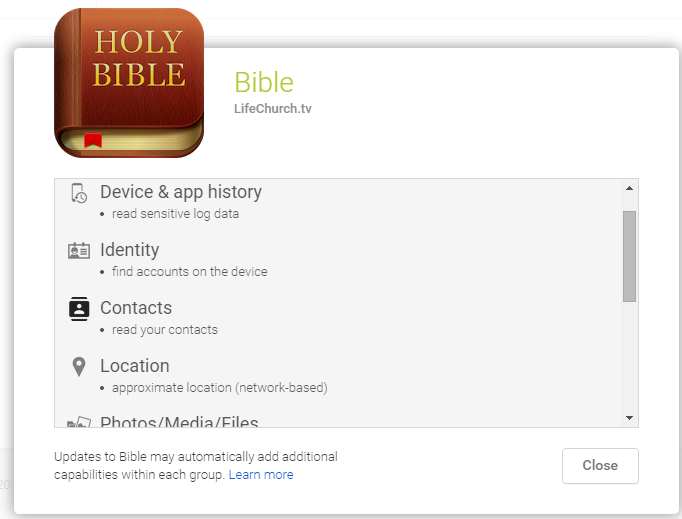

Apps are sold as a way to lower channel risk & gain direct access to users, but the companies owning the app stores are firmly in control.

- Google is being investigated by regulators in multiple markets over their Android bundling contracts. And while bundling is a core feature of the OS, others who bundle are given the boot.

- Google Now on Tap embeds Google search in third party apps.

- The low pricepoints for consumer apps in app stores make it hard for businesses to justify selling B2B apps for a high enough price to offset the smaller addressable audience.

- It has become harder to sell consumer apps as the app stores have saturated with competition.

2008 I’ll sell apps for $2.99 & make millions

2010 At $0.99 I’ll make $1000s

2012 Ads might cover my rent

2014 Kickstart my app

2015 Hire me

— Nick Lockwood (@nicklockwood) August 3, 2015

- Exceptionally popular apps are disabled for interfering with business models of the platforms. Apps and extensions can be disabled at any time, even after the fact, due to violating guidelines or rule changes that turn what was once fine into a guideline violation. In some cases when they are disabled it is done with no option to re-enable.

- The Amazon app on iOS allows you to buy physical goods, but good luck buying an ebook in it. Even Amazon was removed from Google’s Play store after allowing digital purchases. Apple TV doesn’t support Amazon Prime Video. In turn, Amazon has stopped selling some streaming items from Apple and Google.

Everyone wants to “own” the user, but none of the platforms bother to ask if the user wants to be owned:

We’re rapidly moving from an internet where computers are ‘peers’ (equals) to one where there are consumers and ‘data owners’, silos of end user data that work as hard as they can to stop you from communicating with other, similar silos.

…

If the current trend persists we’re heading straight for AOL 2.0, only now with a slick user interface, a couple more features and more users.

You’ve Got AOL

The AOL analogy is widely used:

Katz of Gogobot says that “SEO is a dying field” as Google uses its “monopoly” power to turn the field of search into Google’s own walled garden like AOL did in the age of dial-up modems.

Almost 4 years ago a Google engineer described SEO as a bug. He suggested one shouldn’t be able to rank highly without paying.

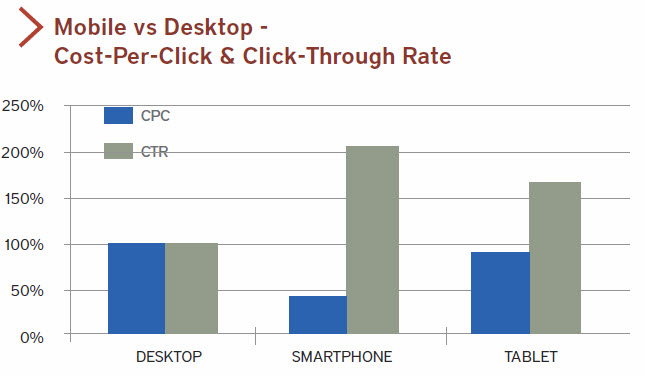

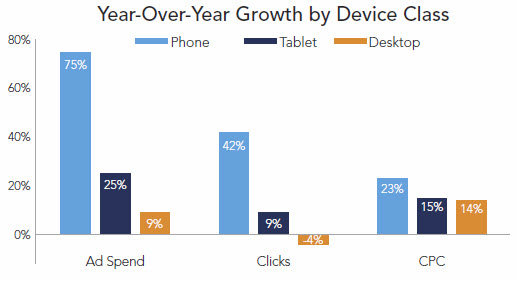

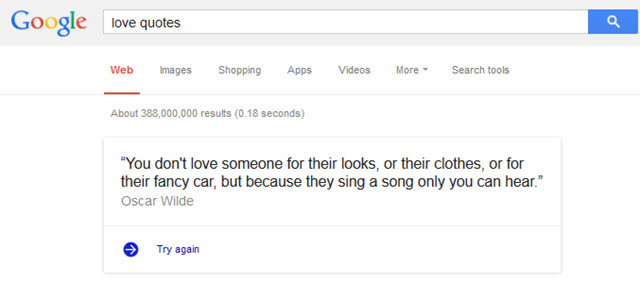

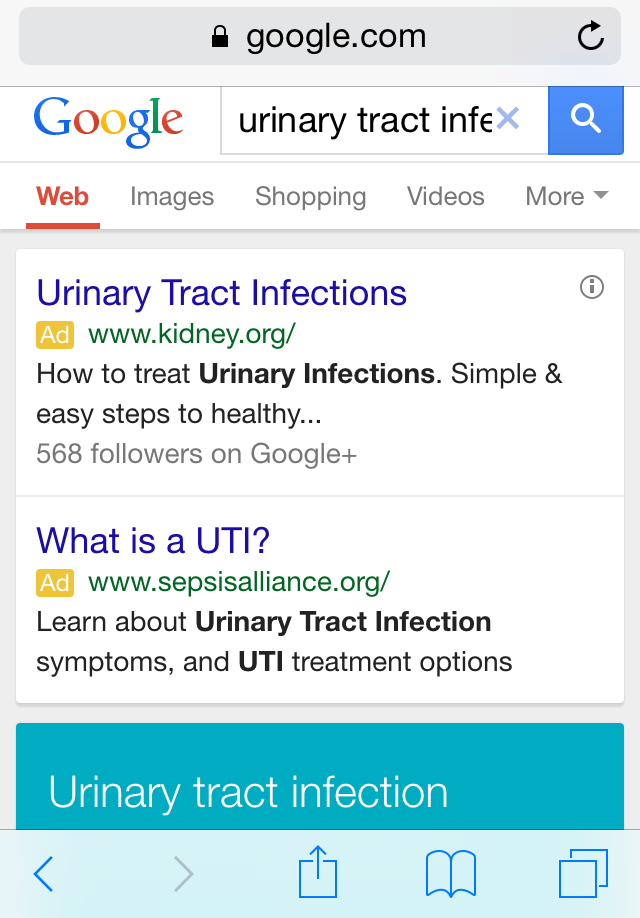

It looks like he was right. Google’s aggressive ad placement on mobile SERPs “has broken the will of users who would have clicked on an organic link if they could find one at the top of the page but are instead just clicking ads because they don’t want to scroll down.”

In the years since then we’ve learned Google’s “algorithm” has concurrent ranking signals & other forms of home cooking which guarantee success for Google’s vertical search offerings. The “reasonable” barrier to entry which applies to third parties does not apply to any new Google offerings.

And “bugs” keep appearing in those “algorithms,” which deliver a steady stream of harm to competing businesses.

From Indy to Brand

The waves of algorithm updates have in effect increased the barrier to entry, along with the cost needed to maintain rankings. The stresses and financial impacts this puts on small businesses make many of them not worth running. Look no further than MetaFilter’s founder seeing a psychologist, then quitting because he couldn’t handle the process.

When Google engineers are not focused on “breaking spirits” they emphasize the importance of happiness.

The ecosystem instability has made smaller sites effectively disappear while delivering a bland and soulless result set which is heavy on brand:

there’s no reason why the internet couldn’t keep on its present course for years to come. Under those circumstances, it would shed most of the features that make it popular with today’s avant-garde, and become one more centralized, regulated, vacuous mass medium, packed to the bursting point with corporate advertising and lowest-common-denominator content, with dissenting voices and alternative culture shut out or shoved into corners where nobody ever looks. That’s the normal trajectory of an information technology in today’s industrial civilization, after all; it’s what happened with radio and television in their day, as the gaudy and grandiose claims of the early years gave way to the crass commercial realities of the mature forms of each medium.

If you participate on the web daily, the change washes over you slowly, and the cumulative effects can be imperceptible. But if you were locked in an Iranian jail for years the change is hard to miss.

These sorts of problems not only impact search, but have an impact on all the major tech channels.

iPhone autocorrect inserted “showgirl” for “shows” and “POV” for “PPC”. This crowd sourcing of autocorrect is not welcomed.— john andrews (@searchsleuth998) November 10, 2015

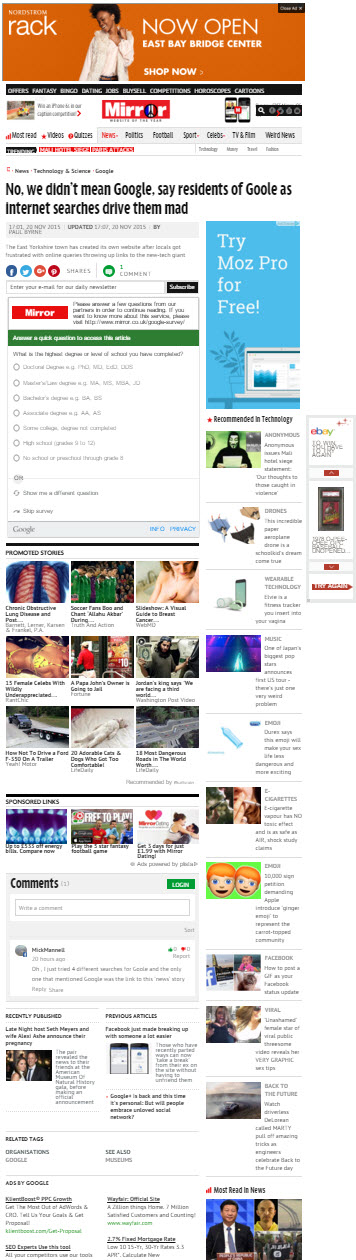

If you live in Goole, these issues strike close to home.

And there are almost no counter-forces to the well established trend:

Eventually they might even symbolically close their websites, finishing the job they started when they all stopped paying attention to what their front pages looked like. Then, they will do a whole lot of what they already do, according to the demands of their new venues. They will report news and tell stories and post garbage and make mistakes. They will be given new metrics that are both more shallow and more urgent than ever before; they will adapt to them, all the while avoiding, as is tradition, honest discussions about the relationship between success and quality and self-respect.

…

If in five years I’m just watching NFL-endorsed ESPN clips through a syndication deal with a messaging app, and Vice is just an age-skewed Viacom with better audience data, and I’m looking up the same trivia on Genius instead of Wikipedia, and “publications” are just content agencies that solve temporary optimization issues for much larger platforms, what will have been point of the last twenty years of creating things for the web?

A Deal With the Devil

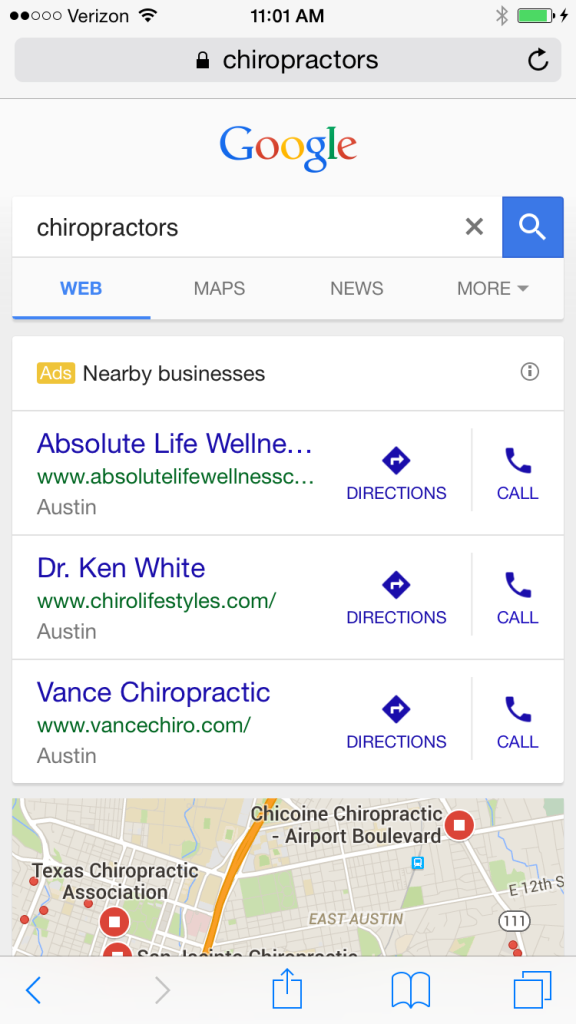

As ad blocking has grown more pervasive, some publishers believe the solution to the problem is through gaining distribution through the channels which are exempt from the impacts of ad blocking. However those channels have no incentive to offer exceptional payouts. They make more by showing fewer ads within featured content from partners (where they must share ad revenues) and showing more ads elsewhere (where they keep all the ad revenues).

So far publishers have been underwhelmed with both Facebook Instant Articles and Apple News. The former for stringent ad restrictions, and the latter for providing limited user data. Google Now is also increasing the number of news stories they show. And next year Google will roll out their accelerated mobile pages offering.

The problem is if you don’t control the publishing you don’t control the monetization and you don’t control the data flow.

Your website helps make the knowledge graph (and other forms of vertical search) possible. But you are paid nothing when your content appears in the knowledge graph. And the knowledge graph now has a number of ad units embedded in it.

A decade ago, when Google pushed autolink to automatically insert links in publisher’s content, webmasters had enough leverage to “just say no.” But now? Not so much. Google considers in-text ad networks spam & embeds their own search in third party apps. As the terms of deals change, and what is considered “best for users” changes, content creators quietly accept, or quit.

Many video sites lost their rich snippets, while YouTube got larger snippets in the search results. Google pays YouTube content creators a far lower revenue share than even the default AdSense agreement offers. And those creators have restrictions which prevent them from using some forms of monetization while forcing them to accept other types of bundling.

The most recent leaked Google rater documents suggested the justification for featured answers was to make mobile search quick, but if that were the extent of it then it still doesn’t explain why they also appear on desktop search results. It also doesn’t explain why the publisher credit links were originally a light gray.

With Google everything comes down to speed, speed, speed. But then they offer interstitial ad units, lock content behind surveys, and transform the user intent behind queries in a way that leads them astray.

As Google obfuscates more data & increasingly redirects and monetizes user intent, they promise to offer advertisers better integration of online to offline conversion data.

At the same time, as Google “speeds up” your site for you, they may break it with GoogleWebLight.

If you don’t host & control the user experience you are at the whim of (at best, morally agnostic) self-serving platforms which couldn’t care less if any individual publication dies.

It’s White Hat or Bust…

What was that old white hat SEO adage? I forget the precise wording, but I think it went something like…

Don’t buy links, it is too risky & too uncertain. Guarantee strong returns like Google does, by investing directly into undermining the political process by hiring lobbyists, heavy political donations, skirting political donation rules, regularly setting policy, inserting your agents in government, and sponsoring bogus “academic research” without disclosing the payments.

Focus on the user. Put them first. Right behind money.

Ad Network Ménage à Trois: Bing, Yahoo!, Google

Yahoo! Tests Google Again

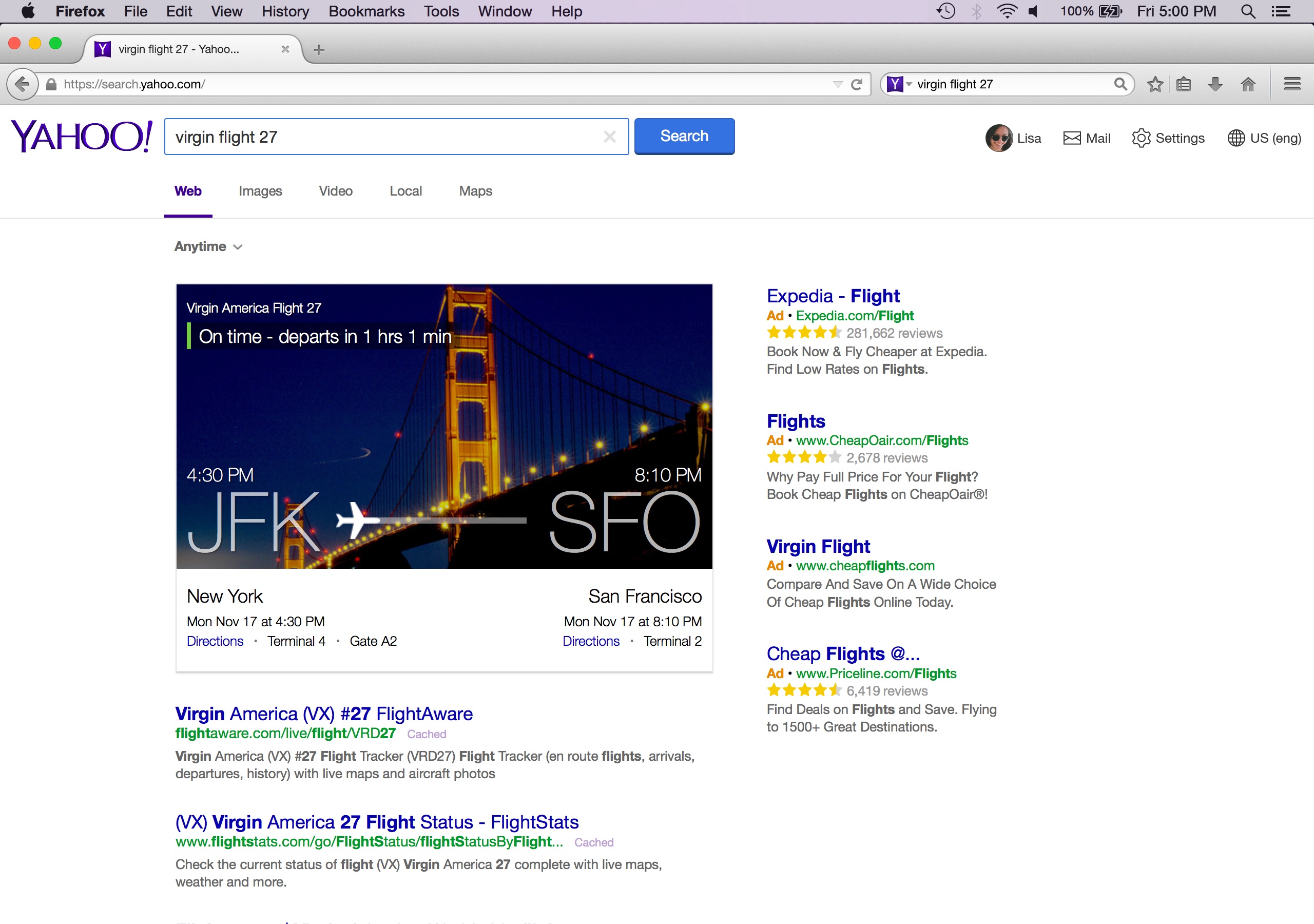

Back in July we noticed Yahoo! was testing Google-powered search results. From that post…

When Yahoo! recently renewed their search deal with Microsoft, Yahoo! was once again allowed to sell their own desktop search ads & they are only required to give 51% of the search volume to Bing. There has been significant speculation as to what Yahoo! would do with the carve out. Would they build their own search technology? Would they outsource to Google to increase search ad revenues? It appears they are doing a bit of everything – some Bing ads, some Yahoo! ads, some Google ads.

The Growth of Gemini

Since then Gemini has grown significantly:

Yahoo has moved quickly to bring search ad traffic under Gemini for advertisers that have adopted the platform. For some perspective, in September 2015, Yahoo.com produced a little over 50 percent of the clicks that took place across the Bing Ads and Gemini platforms. For advertisers adopting Gemini, Gemini produced 22 percent of combined Bing and Gemini clicks. Given the device breakdown of Yahoo’s traffic, this amounts to about two-thirds of the traffic it is able to control under the renegotiated agreement.

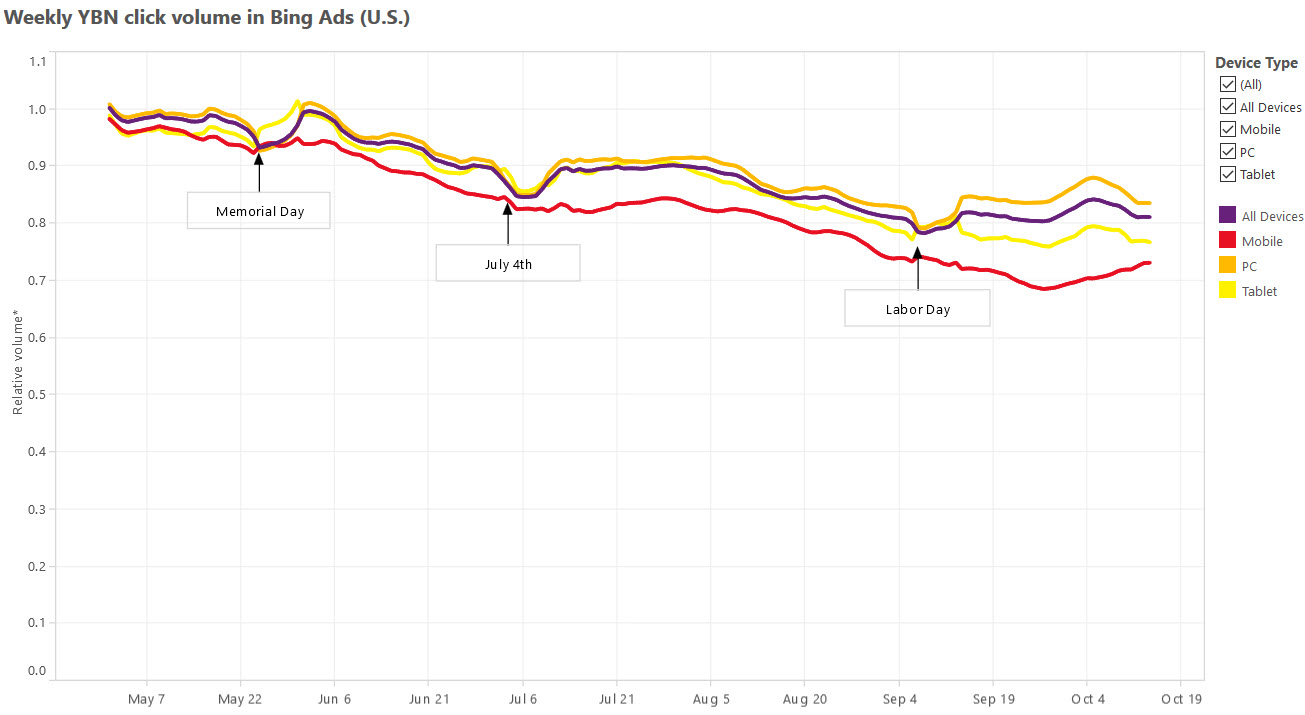

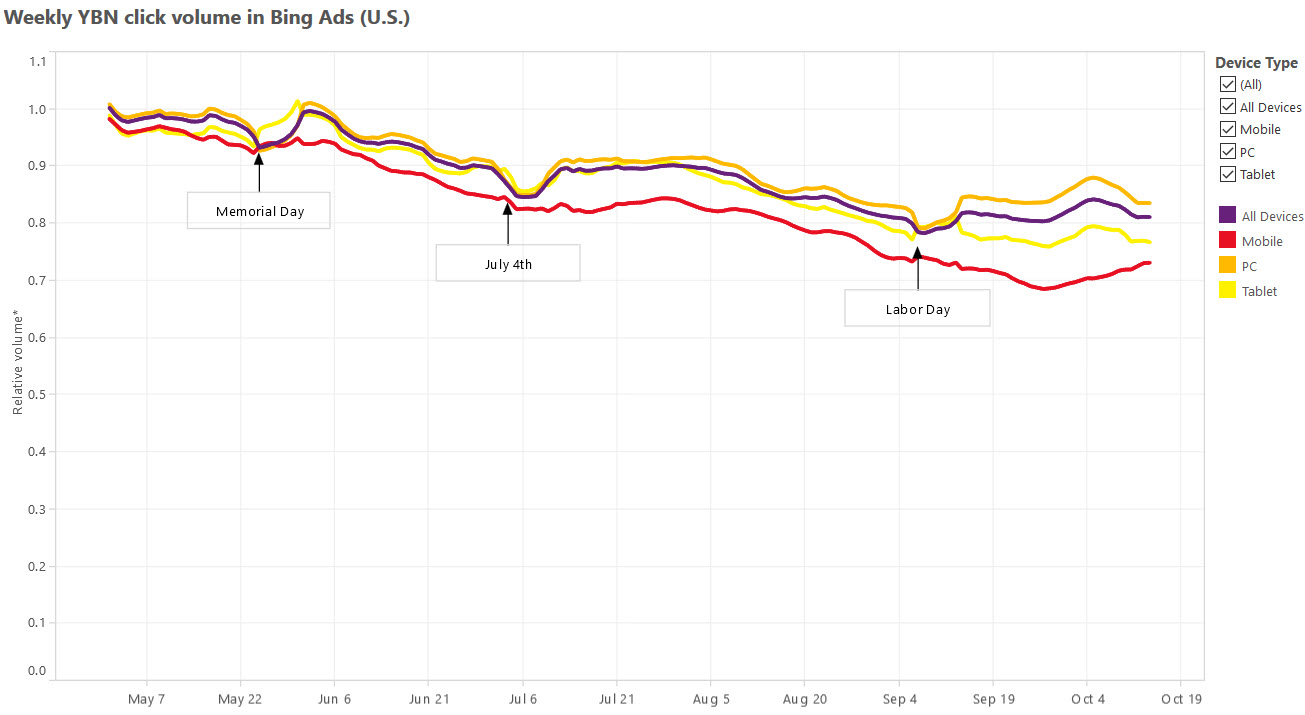

That growth has come at the expense of Bing ad clicks, which have fallen significantly:

Shared Scale to Compete

Years ago Microsoft was partnered into the Yahoo!/Overture ad network to compete against Google. The idea was the companies together would have better scale to compete against Google in search & ads. Greater scale would lead to a more efficient marketplace, which would lead to better ad matching, higher advertiser bids, etc. This didn’t work as well as anticipated. Originally under-monetization was blamed on poor ad matching. Yahoo! Panama was a major rewrite of their ad system which was supposed to fix the problem, but it didn’t.

Even if issues like bid jamming were fixed & ad matching was more relevant, it still didn’t fix issues with lower ad depth in emerging markets & arbitrage lowering the value of expensive keywords in the United States.

Understanding the Value of Search Clicks

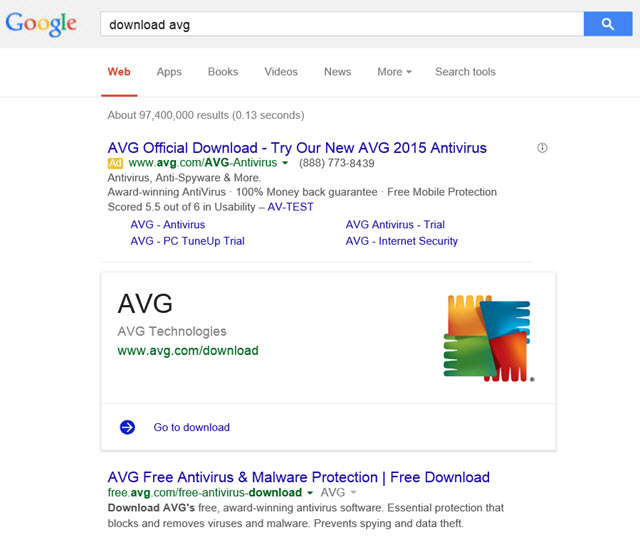

When a person types a keyword into a search box they are expressing significant intent. When a person clicks a link to land on a page they may still have significant interest, but generally there is at least some level of fall off. If I search for a keyword the value of my click is $x, but if I click a link in a “top searches” box, the value of that click may perhaps be only 5% or 10% of the value of a hand-typed search. There is less intent.

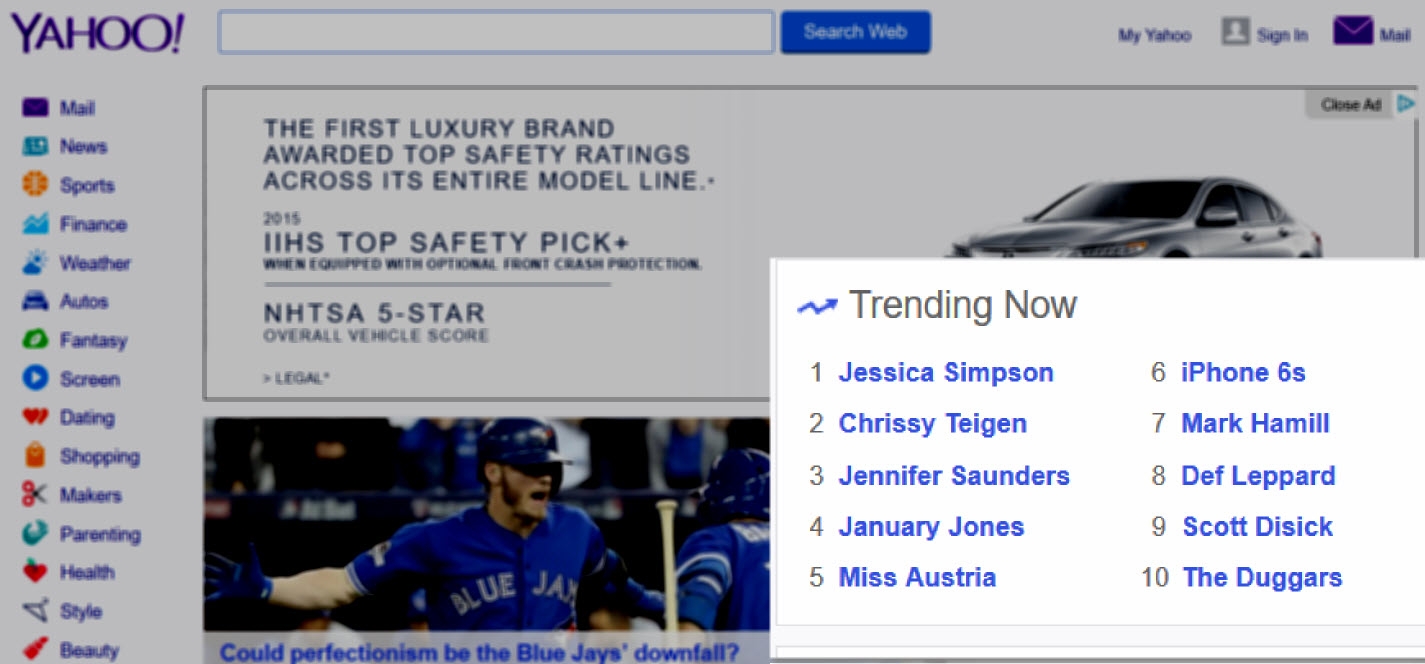

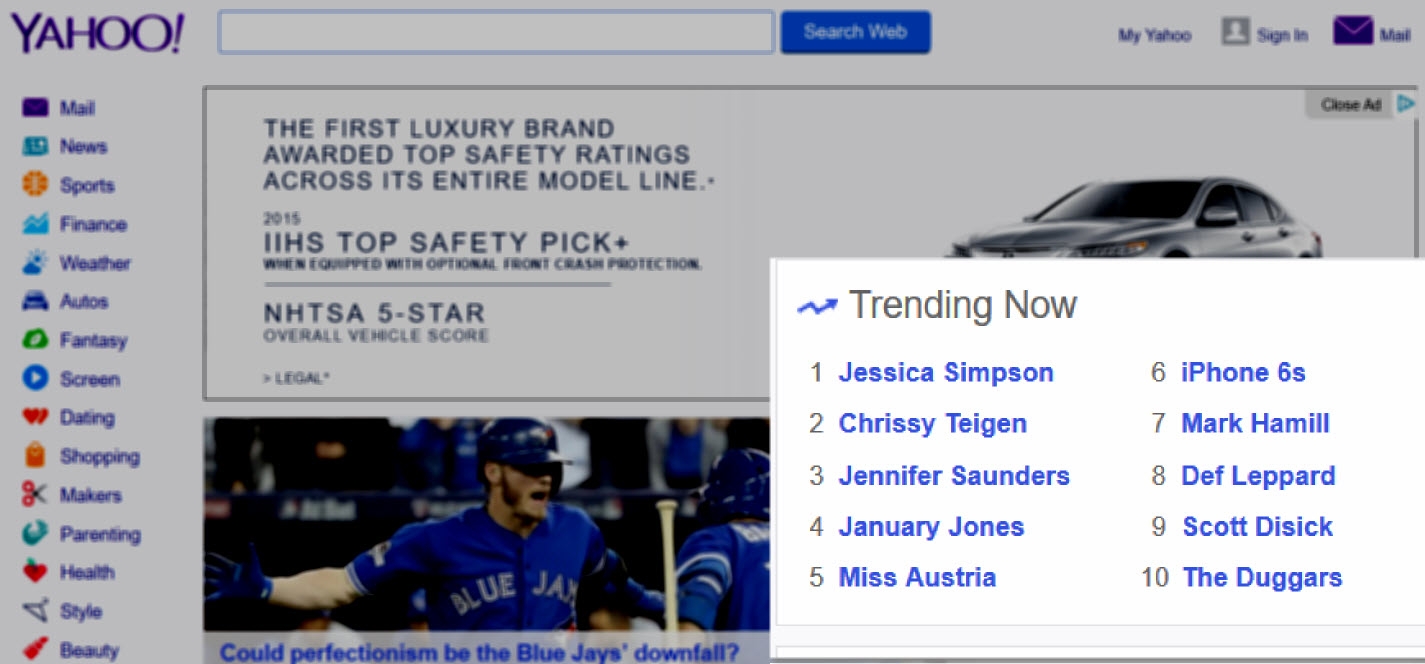

Here is a picture of the sort of “trending now” box which appears on the Yahoo! homepage.

Typically those sorts of searches include a bunch of female celebrities, but then in any such box there will be one or two money terms added, like [lower blood pressure] or [iPhone 6s]. People who search for those terms might have $5 or $10 of intent, but people who click those links might only have a quarter or 50 cents of intent.

That difference in value can utterly screw an advertiser who gets their high-value keyword featured while they are sleeping or not actively monitoring & managing their ad campaign.
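To make the arithmetic concrete, here is a minimal Python sketch of how the average value of a click falls once a keyword is featured in a “trending now” box. The click counts are hypothetical and the per-click values are simply picked from the ranges mentioned above ($5 for a typed search, 50 cents for a box click); none of these are measured figures.

```python
def blended_click_value(typed_clicks: int, typed_value: float,
                        box_clicks: int, box_value: float) -> float:
    """Average value per click when typed searches and box clicks are mixed."""
    total_clicks = typed_clicks + box_clicks
    if total_clicks == 0:
        return 0.0
    total_value = typed_clicks * typed_value + box_clicks * box_value
    return total_value / total_clicks


# Hypothetical numbers: 1,000 typed searches worth $5.00 each on a normal day,
# plus 9,000 "trending now" clicks worth $0.50 each on a featured day.
normal_day = blended_click_value(1_000, 5.00, 0, 0.50)
featured_day = blended_click_value(1_000, 5.00, 9_000, 0.50)

print(f"normal day:   ${normal_day:.2f} average value per click")
print(f"featured day: ${featured_day:.2f} average value per click")
```

Under these made-up inputs the average click goes from being worth $5.00 to $0.95, so an advertiser whose bid was set against typed-search value is overpaying for most of the featured-day clicks.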

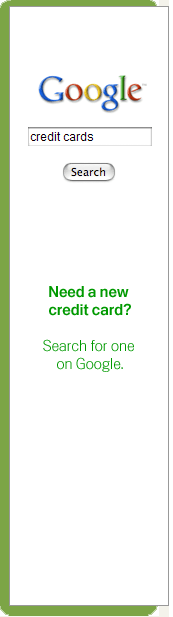

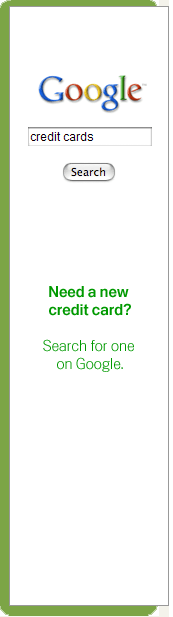

For what it is worth, even Google tested some of these sorts of “search” traffic generation approaches during the last recession. On the Google AdSense network Google was buying banner ads telling people to search for [credit cards] & if they clicked on those banner ads they ended up on a search result page for [credit cards].

To this day many companies run contextual ads that drive search volume, but the difference between today & the Yahoo! which failed to monetize search is there is (at least currently) a greater focus on traffic quality.

Under-performance Due to Shady Traffic Partners

Yahoo! continued to under-perform in large part because Yahoo! had a lot of “search” partners with many lower quality traffic sources mixed in their traffic stream & they didn’t even allow advertisers to opt out of the partner network until after Yahoo! decided to exit the search market. As bad as the above sounds, it is actually worse, as some larger partners had access to advertiser information in a way that allowed them to aggressively arbitrage away the value of high advertiser bids wherever and whenever an advertiser overbid.

So you would bid thinking you were buying primarily search traffic based on the user intent of a person searching for something, but you might have been getting various layers of arbitrage of lower quality traffic, traffic from domain lander pages, or even some mix of robotic traffic from clickbots. Those $30 search ad clicks are a sure money loser if it is a clickbot software program doing the click.

And not only were some of Yahoo!’s partners driving down the value of clicks on Yahoo! itself, but Yahoo! was paying some of the larger partners in the high 80s to low 90s percent of revenue. Here is a (made up) example chart for illustration purposes, where the (made up) partner is getting a 90% TAC:

| Scenario | Advertiser Bid | Y! Search Clicks | Partner Clicks | Total Clicks | Total Revs | TAC | Rev after TAC |
| --- | --- | --- | --- | --- | --- | --- | --- |
| No Partners | $30 | 3,000 | 0 | 3,000 | $90,000 | $0 | $90,000 |
| Bit of Arb | $25 | 3,000 | 1,000 | 4,000 | $100,000 | $22,500 | $77,500 |
| Heavy Arb | $10 | 3,000 | 6,000 | 9,000 | $90,000 | $54,000 | $36,000 |
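The figures in the made-up chart can be reproduced with a short Python sketch. It assumes, as the chart does, that every click in a scenario is billed at the same average bid and that only partner-sourced clicks incur the 90% traffic acquisition cost. The `Scenario` class and `revenue_after_tac` function are illustrative names, not anything Yahoo! published.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    bid: float           # average price paid per click, in dollars
    search_clicks: int   # clicks on Yahoo!'s own search pages
    partner_clicks: int  # clicks from syndication partners


def revenue_after_tac(s: Scenario, partner_share: float = 0.90) -> dict:
    """Return total clicks, gross revenue, TAC and net revenue for a scenario."""
    total_clicks = s.search_clicks + s.partner_clicks
    gross = s.bid * total_clicks
    # Only partner-sourced clicks incur traffic acquisition cost (TAC).
    tac = s.bid * s.partner_clicks * partner_share
    return {"clicks": total_clicks, "gross": gross, "tac": tac, "net": gross - tac}


scenarios = [
    Scenario("No Partners", 30.0, 3_000, 0),
    Scenario("Bit of Arb", 25.0, 3_000, 1_000),
    Scenario("Heavy Arb", 10.0, 3_000, 6_000),
]

for s in scenarios:
    r = revenue_after_tac(s)
    print(f"{s.name:<12} gross=${r['gross']:>9,.0f} "
          f"tac=${r['tac']:>8,.0f} net=${r['net']:>8,.0f}")
```

The point the sketch makes is the same as the chart: gross revenue in the heavy arbitrage row matches the no-partner row, yet what is left after TAC is well under half, because the extra clicks both depress the bid and carry a 90% payout.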

Why did Yahoo! allow the above sort of behavior to go on? It is hard to believe they were completely unaware of what was going on, particularly when it was so obvious to outside observers. More likely it was that they were rapidly losing search share & wanted the topline revenue growth to make their quarterly number. By the time they realized what damage they had already done to their ecosystem, they were already too far down the path to correct it & were afraid to do anything which significantly hit revenues.

The rapid rise and fall of a large Yahoo! search partner named Geosign was detailed by the Canadian Financial Post, in an article which is now offline, but available via the Internet Archive Wayback Machine:

Companies fail all the time. Sometimes with little warning. But companies that are highly profitable and only weeks removed from a record-setting venture capital investment? Not so much. Yet in Geosign’s case, the cuts that began last May continued through the summer. Late last year, fewer than 100 employees remained. Today, Geosign itself no longer exists, its still-functioning website an empty reminder of its former promise. And while the national business media has, until now, overlooked the story – surprising, given the size of the investment and the fact that Google played a direct role in the outcome – within Canada’s technology and venture-capital communities, the $160-million investment is known as the deal “that didn’t go well.” When the collapse happened, even jaded industry watchers accustomed to financial debacles in the tech sector were stunned. “I’ve seen a lot of meltdowns,” says Duncan Stewart, a technology and investment analyst in Toronto. “But something happening like this, over just a few weeks, that’s unprecedented in my experience.”

Other traffic sources like domain parking have also sharply declined, due to a variety of factors like: web browsers replacing address bars with multi-purpose search boxes, shift of consumer internet traffic to mobile devices (which increases reliance on search over direct navigation & apps replace some segment of direct navigation), increased smart pricing, lower revenue sharing percentages, and Yahoo! no longer being able to offer a competitive bid against Google.

When Yahoo! shifted their search ads to Microsoft, Microsoft allowed advertisers to opt out of the partner network. Microsoft also clamped down on some of the lower quality traffic sources with smart pricing, which hit some of the arbitrage businesses hard & even forced Yahoo! to seek refunds from some of their partners for delivering low quality traffic.

Shared Scale to Compete

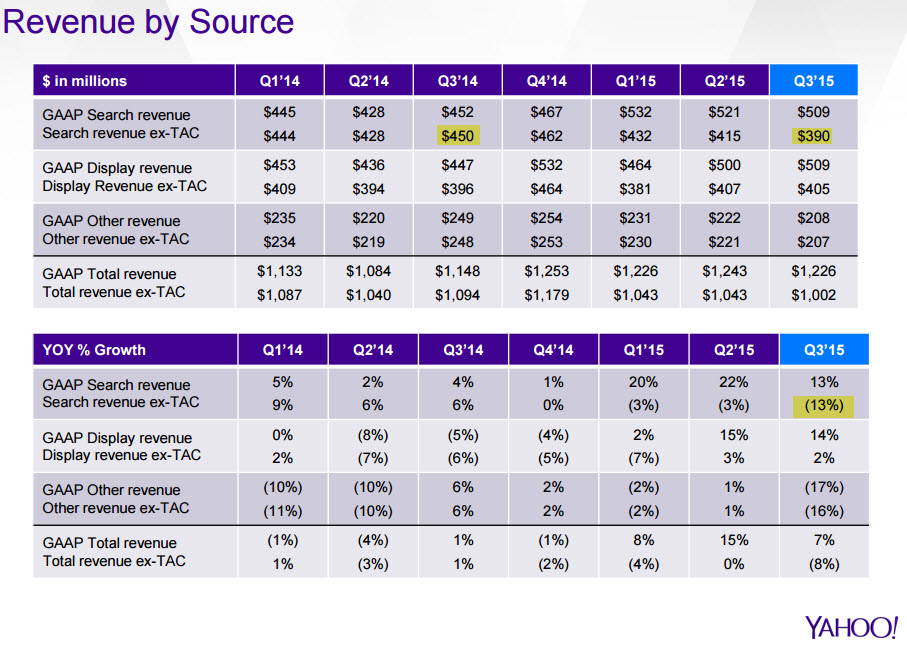

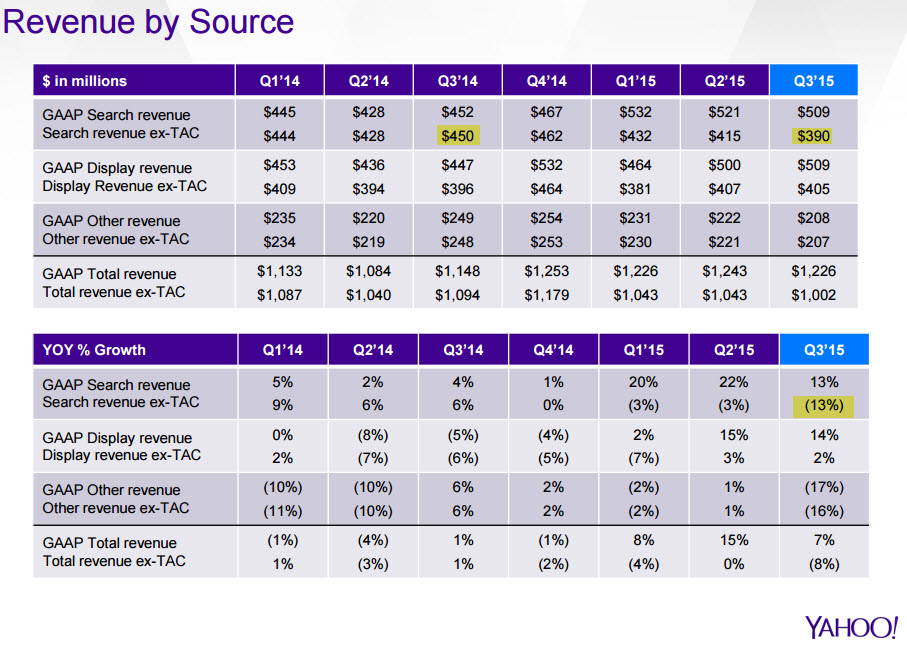

Microsoft launched their own algorithmic search results on Live Search & their own Microsoft adCenter search ads. Microsoft continued to lose share in search at least until they gave their search engine a memorable name in Bing. The Yahoo! Bing ad network seemed to be gaining momentum when Yahoo! signed a deal with Mozilla to become the default search provider for Firefox, but it appears Yahoo! overpaid for the deal as Yahoo! search revenues ex-TAC were off $60 million YoY in the most recent quarter.

In spite of using an ad-heavy search interface Yahoo! has not grown search ad revenues as quickly as the search market has grown. Yahoo! has continually lost marketshare for years (up until the Mozilla Firefox deal). And even as Microsoft has followed Google in broadening their ad matching, a lot of the other “search” traffic partners Yahoo! once relied on to make their numbers are no longer in the marketplace to augment their data.

The Bing / Yahoo! network search traffic is now much cleaner than the Yahoo! “search” traffic quality of many years ago, but Yahoo! hasn’t replaced some of the old search partners which have died off.

Shared Scale No Longer Important?

Yahoo! increasing the share of their ad clicks which are powered by Gemini lowers the network efficiency of the Yahoo!/Bing ad network. All the talk of “synergy” driving value sort of goes up in smoke when Yahoo! shifts a significant share of their ad clicks away from the original network.

Yahoo! announced a new search deal with Google. Here’s the Tweet version…

$YHOO has signed a 3 year partnership with Google to bolster our search capabilities. This is in addition to our relationship with Microsoft— Yahoo Inc. (@YahooInc) October 20, 2015

…the underlying ethos…

“If you love something, set it free; if it comes back it’s yours, if it doesn’t, it never was.”

…and the long version…

On October 19, 2015, Yahoo! Inc., a Delaware corporation (“Yahoo”), and Google Inc., a Delaware corporation (“Google”), entered into a Google Services Agreement (the “Services Agreement”). The Services Agreement is effective as of October 1, 2015 and expires on December 31, 2018. Pursuant to the Services Agreement, Google will provide Yahoo with search advertisements through Google’s AdSense for Search service (“AFS”), web algorithmic search services through Google’s Websearch Service, and image search services. The results provided by Google for these services will be available to Yahoo for display on both desktop and mobile platforms. Yahoo may use Google’s services on Yahoo’s owned and operated properties (“Yahoo Properties”) and on certain syndication partner properties (“Affiliate Sites”) in the United States (U.S.), Canada, Hong Kong, Taiwan, Singapore, Thailand, Vietnam, Philippines, Indonesia, Malaysia, India, Middle East, Africa, Mexico, Argentina, Brazil, Colombia, Chile, Venezuela, Peru, Australia and New Zealand.

Under the Services Agreement, Yahoo has discretion to select which search queries to send to Google and is not obligated to send any minimum number of search queries. The Services Agreement is non-exclusive and expressly permits Yahoo to use any other search advertising services, including its own service, the services of Microsoft Corporation or other third parties.

Google will pay Yahoo a percentage of the gross revenues from AFS ads displayed on Yahoo Properties or Affiliate Sites. The percentage will vary depending on whether the ads are displayed on U.S. desktop sites, non-U.S. desktop sites or on the tablet or mobile phone versions of the Yahoo Properties or its Affiliate Sites. Yahoo will pay Google fees for requests for image search results or web algorithmic search results.

Either party may terminate the Services Agreement (1) upon a material breach subject to certain limitations; (2) in the event of a change in control (as defined in the Services Agreement); (3) after first discussing with the other party in good faith its concerns and potential alternatives to termination (a) in its entirety or in the U.S. only, if it reasonably anticipates litigation or a regulatory proceeding brought by any U.S. federal or state agency to enjoin the parties from consummating, implementing or otherwise performing the Services Agreement, (b) in part, in a country other than the U.S., if either party reasonably anticipates litigation or a regulatory proceeding or reasonably anticipates that the continued performance under the Services Agreement in such country would have a material adverse impact on any ongoing antitrust proceeding in such country, (c) in its entirety if either party reasonably anticipates a filing by the European Commission to enjoin it from performing the Services Agreement or that continued performance of the Services Agreement would have a material adverse impact on any ongoing antitrust proceeding involving either party in Europe or India, or (d) in its entirety, on 60 days notice if the other party’s exercise of these termination rights in this clause (3) has collectively and materially diminished the economic value of the Services Agreement. Each party agrees to defend or settle any lawsuits or similar actions related to the Services Agreement unless doing so is not commercially reasonable (taking all factors into account, including without limitation effects on a party’s brand or business outside of the scope of the Services Agreement).

In addition, Google may suspend Yahoo’s use of services upon certain events and may terminate the Services Agreement if such events are not cured. Yahoo may terminate the Services Agreement if Google breaches certain service level and server latency specified in the Services Agreement.

In connection with the Services Agreement, Yahoo and Google have agreed to certain procedures with the Antitrust Division of the United States Department of Justice (the “DOJ”) to facilitate review of the Services Agreement by the DOJ, including delaying the implementation of the Services Agreement in the U.S. in order to provide the DOJ with a reasonable period of review.

Where Are We Headed?

Danny Sullivan mentioned the 51% of search share Yahoo! is required to deliver to Bing applies only to desktop traffic & Yahoo! has no such limit on mobile searches. In theory this could mean Yahoo! could quickly become a Google shop, with Microsoft as a backfill partner.

When asked about the future of Gemini on today’s investor conference call Marissa Mayer stated she expected Gemini to continue scaling more on mobile. She also stated she felt the Google deal would help Yahoo! refine their ad mix & give them additional opportunities in international markets. Yahoo! is increasingly reliant on the US & is unable to bid to win marketshare in foreign markets.

(Myopic) Learning Systems

Marissa Mayer sounded both insightful and myopic on today’s conference call. She mentioned how as they scale up Gemini the cost of that is reflected in foregone revenues from optimizing their learning systems and improving their ad relevancy. On its face, that sort of comment sounds totally reasonable.

An unsophisticated or utterly ignorant market participant might even cheer it on, without realizing the additional complexity, management cost & risk they are promoting.

Where the myopic quick win view falls flat is on the other side of the market.

Sure a large web platform can use big data to optimize their performance and squeeze out additional pennies of yield, but for an advertiser these blended networks can be a real struggle. How do they budget for any given network when a single company is arbitrarily mixing between 3 parallel networks? A small shift in Google AdWords ad spend might not be hard to manage, but what happens if an advertiser suddenly gets a bunch of [trending topic] search ad clicks? Or maybe they get a huge slug of mobile clicks which don’t work very well for their business. Do they disable the associated keyword in Yahoo! Gemini? Or Bing Ads? Or Google AdWords? All 3?

Do they find that pausing their ads in one network quickly leads to the second (or third) network carrying their ads across?

Even if you can track and manage it on a granular basis, the additional management time is non-trivial. One of the fundamental keys to a solid online advertising strategy is to have granular control so you can quickly alter distribution. But if you turn your ads off in one network only to find that leads your ads from the second network to get carried across that creates a bit of chaos. The more networks there are in parallel that bleed together the blurrier things get.

This sort of “overlap = bad” mindset is precisely why search engines suggest creating tight ad campaigns and ad groups. But you lose that control when things arbitrarily shift about.

To appreciate how expensive those sorts of costs can be, consider what has happened with programmatic ads:

Platforms that facilitate automated sales for media companies typically take 10% to 20% of the revenue that passes through their hands, according to the IAB report. Networks that service programmatic buys typically mark up inventory, citing the value that they add, by 30% to 50%. And then there are the essential data-management platforms, which take 10% to 15% of a buy, industry executives said.
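To get a feel for how those layers compound, here is a rough Python sketch. The way the fees stack is an assumption made for illustration and is not taken from the IAB report: the data-management platform takes its cut of the advertiser’s spend first, the network’s markup is applied on top of the underlying inventory cost, and the sales platform then takes its percentage of what passes through to the media company. The function name `publisher_share` is hypothetical.

```python
def publisher_share(dmp_fee: float, network_markup: float, platform_fee: float) -> float:
    """Fraction of each advertiser dollar that reaches the media company.

    Assumed stacking order (illustrative only):
      1. the data-management platform takes dmp_fee of the buy,
      2. the network marks inventory up by network_markup,
      3. the sales platform keeps platform_fee of what passes through.
    """
    after_dmp = 1.0 - dmp_fee
    # A markup of m means the advertiser pays (1 + m) times the inventory cost.
    inventory_cost = after_dmp / (1.0 + network_markup)
    return inventory_cost * (1.0 - platform_fee)


# Low end and high end of the ranges quoted above.
low_fees = publisher_share(dmp_fee=0.10, network_markup=0.30, platform_fee=0.10)
high_fees = publisher_share(dmp_fee=0.15, network_markup=0.50, platform_fee=0.20)

print(f"low-fee case:  {low_fees:.0%} of spend reaches the publisher")
print(f"high-fee case: {high_fees:.0%} of spend reaches the publisher")
```

Under this assumed stacking, somewhere between roughly 45 and 62 cents of each advertiser dollar reaches the publisher. A different ordering of the fees would shift the exact figures, but not the conclusion that intermediaries absorb a large share of the spend.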

If you are managing a client budget for paid search, how do you determine a pre-approved budget for each network when the traffic mix & quality might rapidly oscillate across the networks?

Don’t take my word for it though, read the Yahoo! Ads Twitter account

“Consumers and advertisers are overwhelmed by choice. Our industry needs solutions that eliminate fragmentation” @andrew_snyder #videonomics— YahooAds (@YahooAds) October 21, 2015

When Yahoo! tries to manage their yield they will not only be choosing among 3 parallel networks on their end, but they will also have individual advertisers making a wide variety of changes on the other end. And some of those advertisers will not only be influenced by the ad networks, but also the organic rankings which come with the ads.

If one search engine is ranking you well in the organic search results for an important keyword and another is not, then you should bid more aggressively on your ads on the search engine which is ranking your site, because by voting with your budget you may well be voting on which underlying relevancy algorithm is chosen to deliver the associated organic search results accompanying the ads.

That last point was important & I haven’t seen it mentioned anywhere yet, so it is worth repeating: your PPC ad bids may determine which search relevancy algorithm drives Yahoo! Search organic results.
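Nobody outside Yahoo! knows how they actually route queries, so treat the following as a toy sketch of the idea rather than a description of their system. It assumes the portal picks whichever network has the highest expected ad yield for a query and that the organic results come along with the winning network. Every name and number here (`NetworkOffer`, `pick_network`, the CPCs, click-through rates & revenue shares) is made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class NetworkOffer:
    name: str
    expected_cpc: float   # made-up expected cost per click, driven by advertiser bids
    expected_ctr: float   # made-up expected ad click-through rate
    revenue_share: float  # made-up share of ad revenue the portal keeps

    def expected_yield(self) -> float:
        # Expected portal revenue per search sent to this network.
        return self.expected_cpc * self.expected_ctr * self.revenue_share


def pick_network(offers):
    """Hypothetical router: send the query to the highest-yielding network."""
    return max(offers, key=lambda offer: offer.expected_yield())


offers = [
    NetworkOffer("Bing Ads", expected_cpc=1.00, expected_ctr=0.04, revenue_share=0.90),
    NetworkOffer("Gemini", expected_cpc=0.80, expected_ctr=0.04, revenue_share=1.00),
    NetworkOffer("AdWords", expected_cpc=1.50, expected_ctr=0.04, revenue_share=0.70),
]
print(pick_network(offers).name)

# Advertisers who rank well organically on Bing raise their Bing Ads bids.
after_bid_change = [
    NetworkOffer("Bing Ads", expected_cpc=1.25, expected_ctr=0.04, revenue_share=0.90),
    offers[1],
    offers[2],
]
print(pick_network(after_bid_change).name)
```

In this toy model a 25% increase in Bing Ads bids is enough to flip which network (and, by the assumption above, which organic result set) wins the query.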

Time to Quit Digging & Drop The Shovel

The other (BIG) issue is that as they give Google more search marketshare they give Google more granular data, which in turn means they

- make buying on their own network less worthy of the management cost & complexity

- make Google more of a “must buy”

- will never close the monetization gap with Google

Even today Google announced a new tool for offering advertisers granular localized search data. Search partners won’t directly benefit from those tools.

The old problem with Yahoo! was they were heavily reliant on search partners who drove down the traffic value. The future problem may well be that if the marginally profitable Bing leaves the search market, Google will drive down the amount of revenue they share with Yahoo!.

If the Yahoo! Google search deal gets approved, Bing might shift back to losing money unless Microsoft buys Yahoo! after the Alibaba share spin out.

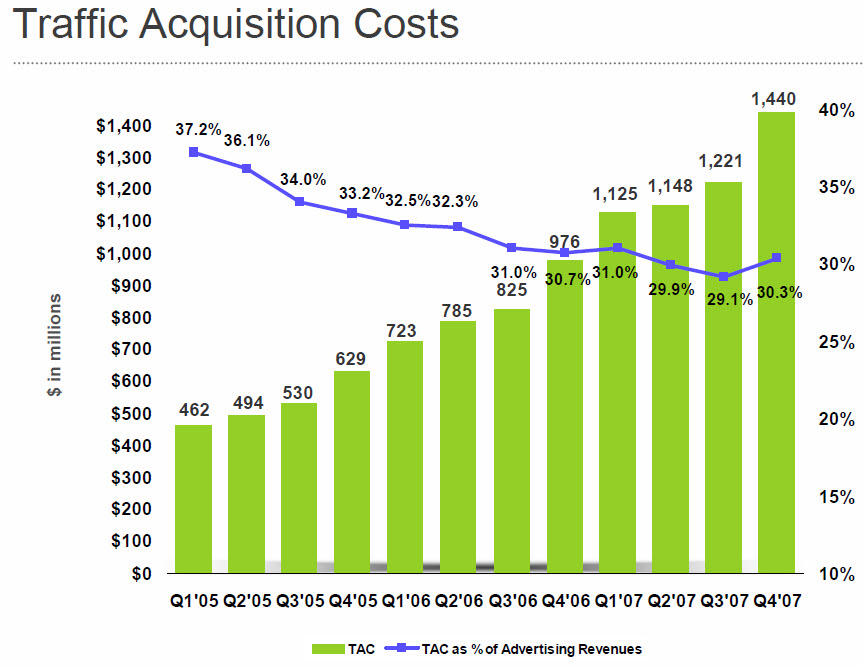

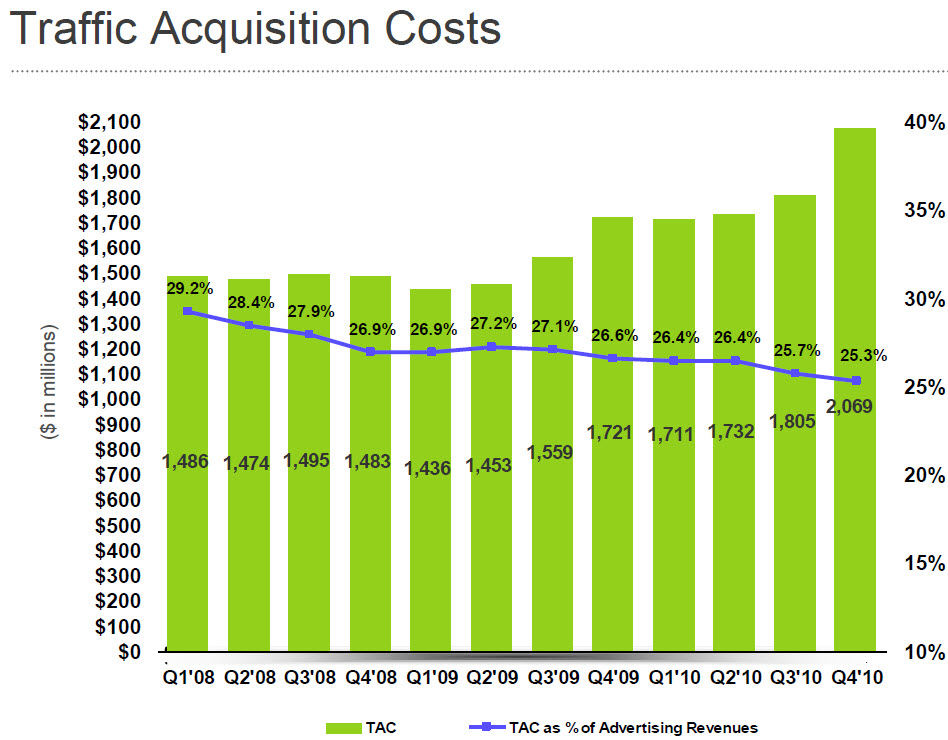

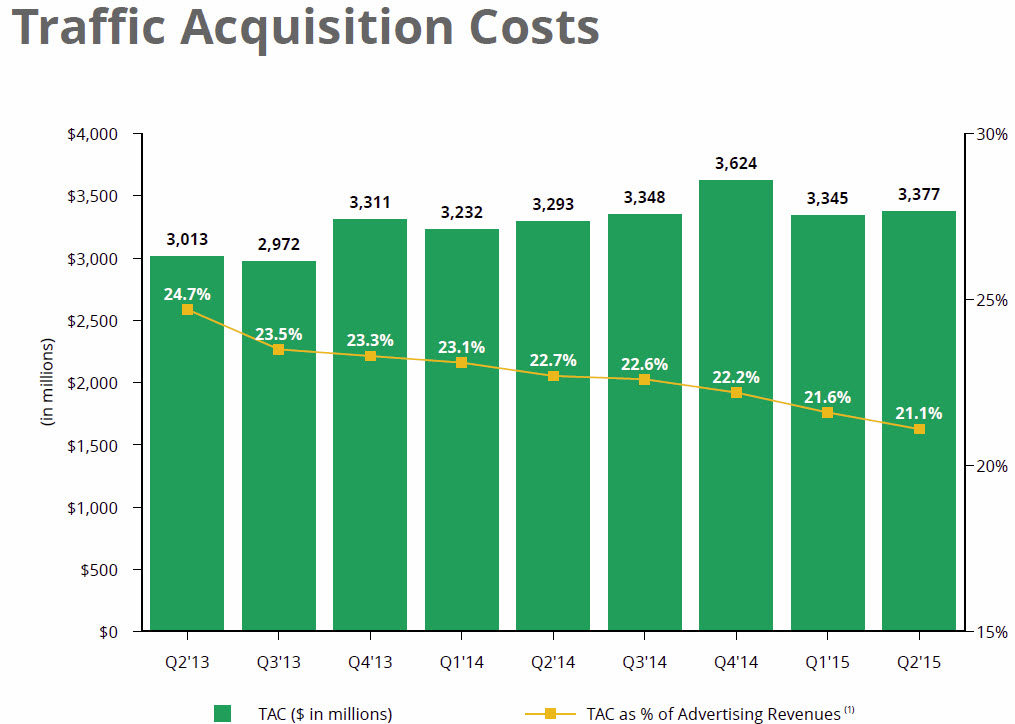

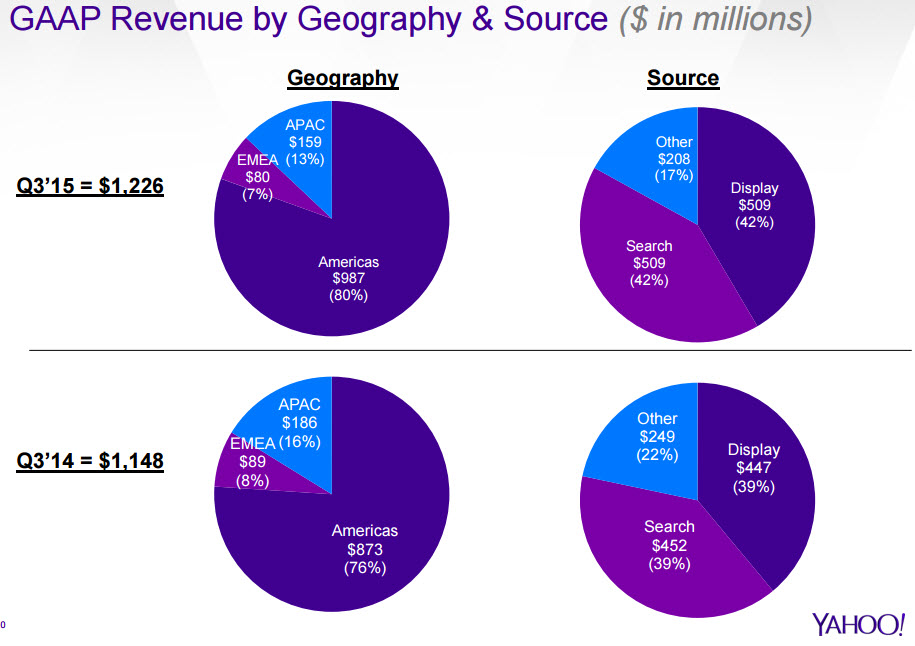

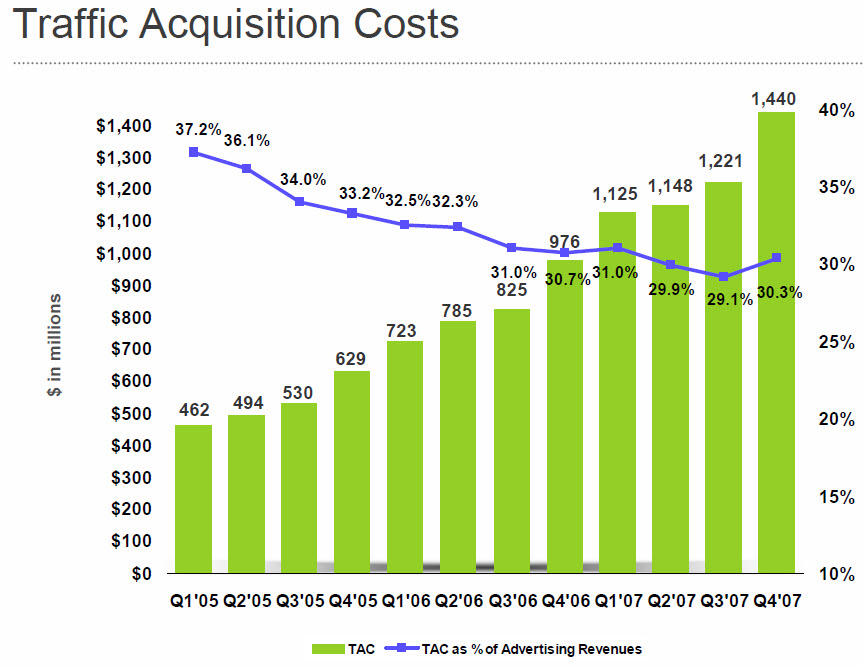

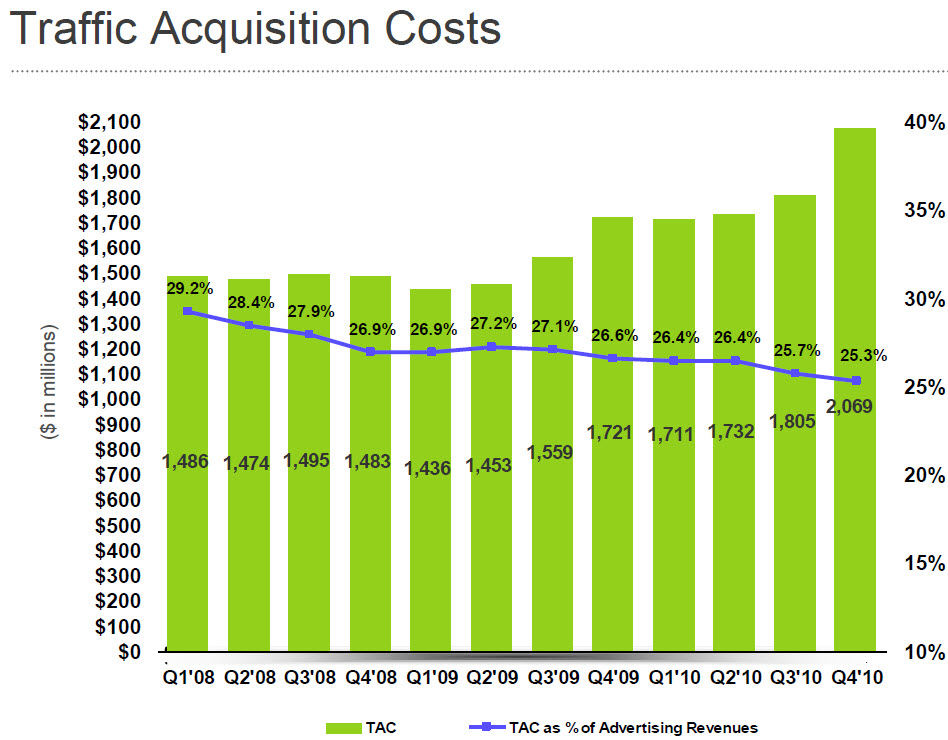

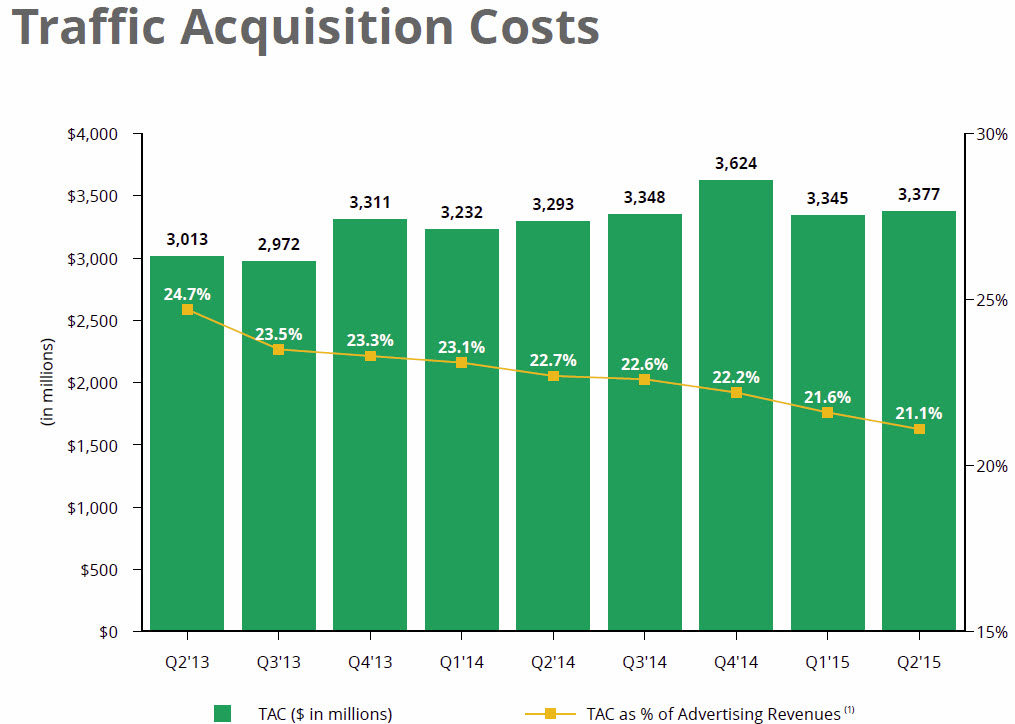

Ever track how Google’s TAC has shifted over the past decade?

It has only been a decade so far, but MAYBE THIS TIME IS DIFFERENT.

Ad Network Ménage à Trois: Bing, Yahoo!, Google

Yahoo! Tests Google Again

Back in July we noticed Yahoo! was testing Google-powered search results. From that post…

When Yahoo! recently renewed their search deal with Microsoft, Yahoo! was once again allowed to sell their own desktop search ads & they are only required to give 51% of the search volume to Bing. There has been significant speculation as to what Yahoo! would do with the carve out. Would they build their own search technology? Would they outsource to Google to increase search ad revenues? It appears they are doing a bit of everything – some Bing ads, some Yahoo! ads, some Google ads.

The Growth of Gemini

Since then Gemini has grown significantly:

Yahoo has moved quickly to bring search ad traffic under Gemini for advertisers that have adopted the platform. For some perspective, in September 2015, Yahoo.com produced a little over 50 percent of the clicks that took place across the Bing Ads and Gemini platforms. For advertisers adopting Gemini, Gemini produced 22 percent of combined Bing and Gemini clicks. Given the device breakdown of Yahoo’s traffic, this amounts to about two-thirds of the traffic it is able to control under the renegotiated agreement.

That growth has come at the expense of Bing ad clicks, which have fallen significantly:

Shared Scale to Compete

Years ago Microsoft partnered with the Yahoo!/Overture ad network to compete against Google. The idea was the companies together would have better scale to compete against Google in search & ads. Greater scale would lead to a more efficient marketplace, which would lead to better ad matching, higher advertiser bids, etc. This didn’t work as well as anticipated. Originally under-monetization was blamed on poor ad matching. Yahoo! Panama was a major rewrite of their ad system which was supposed to fix the problem, but it didn’t.

Even if issues like bid jamming were fixed & ad matching was more relevant, it still didn’t fix issues with lower ad depth in emerging markets & arbitrage lowering the value of expensive keywords in the United States.

Understanding the Value of Search Clicks

When a person types a keyword into a search box they are expressing significant intent. When a person clicks a link to land on a page they may still have significant interest, but generally there is at least some level of fall off. If I search for a keyword the value of my click is $x, but if I click a link in a “top searches” box, the value of that click may be only 5% or 10% of the value of a hand-typed search. There is less intent.

Here is a picture of the sort of “trending now” box which appears on the Yahoo! homepage.

Typically those sorts of searches include a bunch of female celebrities, but then in any such box there will be one or two money terms added, like [lower blood pressure] or [iPhone 6s]. People who search for those terms might have $5 or $10 of intent, but people who click those links might only have a quarter or 50 cents of intent.

That difference in value can utterly screw an advertiser who gets their high-value keyword featured while they are sleeping or not actively monitoring & managing their ad campaign.
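A quick back-of-the-envelope sketch shows how fast that adds up. The $5 and 50 cent click values come from the example above. The click counts are made up, and I am assuming (as a simplification) that the advertiser pays their full $5 bid on every click regardless of where it came from.

```python
def blended_click_value(typed_clicks, typed_value, box_clicks, box_value):
    """Average value per click across hand-typed searches and "trending" box clicks."""
    total_clicks = typed_clicks + box_clicks
    total_value = typed_clicks * typed_value + box_clicks * box_value
    return total_value / total_clicks


BID = 5.00  # assumed price paid per click, set from the value of a typed search

# Normal day: 100 hand-typed searches, nothing from the trending box (made-up volume).
normal_day_value = blended_click_value(100, 5.00, 0, 0.50)

# Featured day: the keyword lands in the trending box & 900 low-intent clicks pile on.
featured_day_value = blended_click_value(100, 5.00, 900, 0.50)

featured_day_spend = (100 + 900) * BID
featured_day_return = (100 + 900) * featured_day_value

print(f"normal day value per click:   ${normal_day_value:.2f}")
print(f"featured day value per click: ${featured_day_value:.2f}")
print(f"featured day spend ${featured_day_spend:,.0f} vs value received ${featured_day_return:,.0f}")
```

With these made-up volumes the advertiser is still paying search prices while the average click is worth less than a fifth of the bid.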

For what it is worth, even Google tested some of these sorts of “search” traffic generation approaches during the last recession. On the Google AdSense network Google was buying banner ads telling people to search for [credit cards] & if they clicked on those banner ads they ended up on a search result page for [credit cards].

To this day many companies run contextual ads that drive search volume, but the difference between today & the Yahoo! which failed to monetize search is that there is (at least currently) a greater focus on traffic quality.

Under-performance Due to Shady Traffic Partners

Yahoo! continued to under-perform in large part because Yahoo! had a lot of “search” partners with many lower quality traffic sources mixed in their traffic stream & they didn’t even allow advertisers to opt out of the partner network until after Yahoo! decided to exit the search market. As bad as the above sounds, it is actually worse, as some larger partners had access to advertiser information in a way that allowed them to aggressively arbitrage away the value of high advertiser bids wherever and whenever an advertiser overbid.

So you would bid thinking you were buying primarily search traffic based on the user intent of a person searching for something, but you might have been getting various layers of arbitrage of lower quality traffic, traffic from domain lander pages, or even some mix of robotic traffic from clickbots. Those $30 search ad clicks are a sure money loser if a clickbot is the one doing the clicking.

And not only were some of Yahoo!’s partners driving down the value of clicks on Yahoo! itself, but Yahoo! was paying some of the larger partners a revenue share in the high 80s to low 90s percent. Here is a (made up) example chart for illustration purposes, where the (made up) partner is getting a 90% TAC (traffic acquisition cost, the share of ad revenue paid out to the partner):

| Scenario | Advertiser Bid | Y! Search Clicks | Partner Clicks | Total Clicks | Total Revs | TAC | Rev after TAC |
| --- | --- | --- | --- | --- | --- | --- | --- |
| No Partners | $30 | 3,000 | 0 | 3,000 | $90,000 | $0 | $90,000 |
| Bit of Arb | $25 | 3,000 | 1,000 | 4,000 | $100,000 | $22,500 | $77,500 |
| Heavy Arb | $10 | 3,000 | 6,000 | 9,000 | $90,000 | $54,000 | $36,000 |
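The arithmetic behind the made-up chart above can be reproduced with a few lines of Python. This is only a sketch of my illustration, not real Yahoo! data. It assumes every click is billed at the advertiser's bid and that the partner keeps 90% of the revenue from its own clicks. The function name `revenue_after_tac` is hypothetical.

```python
def revenue_after_tac(bid, yahoo_clicks, partner_clicks, partner_tac_rate=0.90):
    """Return (total clicks, total revenue, TAC, revenue after TAC) for one scenario.

    Assumes every click is billed at the advertiser's bid and that TAC is
    only paid on clicks which came from the partner.
    """
    total_clicks = yahoo_clicks + partner_clicks
    total_revenue = bid * total_clicks
    tac = bid * partner_clicks * partner_tac_rate
    return total_clicks, total_revenue, tac, total_revenue - tac


# (advertiser bid, Y! search clicks, partner clicks) from the made-up chart
scenarios = {
    "No Partners": (30, 3000, 0),
    "Bit of Arb": (25, 3000, 1000),
    "Heavy Arb": (10, 3000, 6000),
}

results = {name: revenue_after_tac(*inputs) for name, inputs in scenarios.items()}

for name, (clicks, revenue, tac, net) in results.items():
    print(f"{name:<12} clicks={clicks:>5,} revs=${revenue:>9,.0f} TAC=${tac:>8,.0f} net=${net:>8,.0f}")
```

Topline revenue barely moves between the first & last rows, yet what is left after TAC falls by more than half as the advertiser lowers their bid to compensate for the junk clicks.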

Why did Yahoo! allow the above sort of behavior to go on? It is hard to believe they were completely unaware of what was going on, particularly when it was so obvious to outside observers. More likely it was that they were rapidly losing search share & wanted the topline revenue growth to make their quarterly number. By the time they realized what damage they had already done to their ecosystem, they were already too far down the path to correct it & were afraid to do anything which significantly hit revenues.

The rapid rise and fall of a large Yahoo! search partner named Geosign was detailed by the Canadian Financial Post, in an article which is now offline, but available via the Internet Archive Wayback Machine:

Companies fail all the time. Sometimes with little warning. But companies that are highly profitable and only weeks removed from a record-setting venture capital investment? Not so much. Yet in Geosign’s case, the cuts that began last May continued through the summer. Late last year, fewer than 100 employees remained. Today, Geosign itself no longer exists, its still-functioning website an empty reminder of its former promise. And while the national business media has, until now, overlooked the story – surprising, given the size of the investment and the fact that Google played a direct role in the outcome – within Canada’s technology and venture-capital communities, the $160-million investment is known as the deal “that didn’t go well.” When the collapse happened, even jaded industry watchers accustomed to financial debacles in the tech sector were stunned. “I’ve seen a lot of meltdowns,” says Duncan Stewart, a technology and investment analyst in Toronto. “But something happening like this, over just a few weeks, that’s unprecedented in my experience.”

Other traffic sources like domain parking have also sharply declined, due to a variety of factors: web browsers replacing address bars with multi-purpose search boxes, the shift of consumer internet traffic to mobile devices (which increases reliance on search over direct navigation, while apps replace some segment of direct navigation), increased smart pricing, lower revenue sharing percentages, and Yahoo! no longer being able to offer a competitive bid against Google.

When Yahoo! shifted their search ads to Microsoft, Microsoft allowed advertisers to opt out of the partner network. Microsoft also clamped down on some of the lower quality traffic sources with smart pricing, which hit some of the arbitrage businesses hard & even forced Yahoo! to seek refunds from some of their partners for delivering low quality traffic.

Shared Scale to Compete

Microsoft launched their own algorithmic search results on Live Search & their own Microsoft adCenter search ads. Microsoft continued to lose share in search at least until they gave their search engine a memorable name in Bing. The Yahoo! Bing ad network seemed to be gaining momentum when Yahoo! signed a deal with Mozilla to become the default search provider for Firefox, but it appears Yahoo! overpaid for the deal as Yahoo! search revenues ex-TAC were off $60 million YoY in the most recent quarter.

In spite of using an ad-heavy search interface Yahoo! has not grown search ad revenues as quickly as the search market has grown. Yahoo! has continually lost marketshare for years (up until the Mozilla Firefox deal). And even as Microsoft has followed Google in broadening their ad matching, a lot of the other “search” traffic partners Yahoo! once relied on to make their numbers are no longer in the marketplace to augment their data.

The Bing / Yahoo! network search traffic is now much cleaner than the Yahoo! “search” traffic quality of many years ago, but Yahoo! hasn’t replaced some of the old search partners which have died off.

Shared Scale No Longer Important?

Yahoo! increasing the share of their ad clicks which are powered by Gemini lowers the network efficiency of the Yahoo!/Bing ad network. All the talk of “synergy” driving value sort of goes up in smoke when Yahoo! shifts a significant share of their ad clicks away from the original network.

Yahoo! announced a new search deal with Google. Here’s the Tweet version…

$YHOO has signed a 3 year partnership with Google to bolster our search capabilities. This is in addition to our relationship with Microsoft— Yahoo Inc. (@YahooInc) October 20, 2015

…the underlying ethos…

“If you love something, set it free; if it comes back it’s yours, if it doesn’t, it never was.”

…and the long version…

On October 19, 2015, Yahoo! Inc., a Delaware corporation (“Yahoo”), and Google Inc., a Delaware corporation (“Google”), entered into a Google Services Agreement (the “Services Agreement”). The Services Agreement is effective as of October 1, 2015 and expires on December 31, 2018. Pursuant to the Services Agreement, Google will provide Yahoo with search advertisements through Google’s AdSense for Search service (“AFS”), web algorithmic search services through Google’s Websearch Service, and image search services. The results provided by Google for these services will be available to Yahoo for display on both desktop and mobile platforms. Yahoo may use Google’s services on Yahoo’s owned and operated properties (“Yahoo Properties”) and on certain syndication partner properties (“Affiliate Sites”) in the United States (U.S.), Canada, Hong Kong, Taiwan, Singapore, Thailand, Vietnam, Philippines, Indonesia, Malaysia, India, Middle East, Africa, Mexico, Argentina, Brazil, Colombia, Chile, Venezuela, Peru, Australia and New Zealand.

Under the Services Agreement, Yahoo has discretion to select which search queries to send to Google and is not obligated to send any minimum number of search queries. The Services Agreement is non-exclusive and expressly permits Yahoo to use any other search advertising services, including its own service, the services of Microsoft Corporation or other third parties.

Google will pay Yahoo a percentage of the gross revenues from AFS ads displayed on Yahoo Properties or Affiliate Sites. The percentage will vary depending on whether the ads are displayed on U.S. desktop sites, non-U.S. desktop sites or on the tablet or mobile phone versions of the Yahoo Properties or its Affiliate Sites. Yahoo will pay Google fees for requests for image search results or web algorithmic search results.

Either party may terminate the Services Agreement (1) upon a material breach subject to certain limitations; (2) in the event of a change in control (as defined in the Services Agreement); (3) after first discussing with the other party in good faith its concerns and potential alternatives to termination (a) in its entirety or in the U.S. only, if it reasonably anticipates litigation or a regulatory proceeding brought by any U.S. federal or state agency to enjoin the parties from consummating, implementing or otherwise performing the Services Agreement, (b) in part, in a country other than the U.S., if either party reasonably anticipates litigation or a regulatory proceeding or reasonably anticipates that the continued performance under the Services Agreement in such country would have a material adverse impact on any ongoing antitrust proceeding in such country, (c) in its entirety if either party reasonably anticipates a filing by the European Commission to enjoin it from performing the Services Agreement or that continued performance of the Services Agreement would have a material adverse impact on any ongoing antitrust proceeding involving either party in Europe or India, or (d) in its entirety, on 60 days notice if the other party’s exercise of these termination rights in this clause (3) has collectively and materially diminished the economic value of the Services Agreement. Each party agrees to defend or settle any lawsuits or similar actions related to the Services Agreement unless doing so is not commercially reasonable (taking all factors into account, including without limitation effects on a party’s brand or business outside of the scope of the Services Agreement).

In addition, Google may suspend Yahoo’s use of services upon certain events and may terminate the Services Agreement if such events are not cured. Yahoo may terminate the Services Agreement if Google breaches certain service level and server latency specified in the Services Agreement.

In connection with the Services Agreement, Yahoo and Google have agreed to certain procedures with the Antitrust Division of the United States Department of Justice (the “DOJ”) to facilitate review of the Services Agreement by the DOJ, including delaying the implementation of the Services Agreement in the U.S. in order to provide the DOJ with a reasonable period of review.

Where Are We Headed?

Danny Sullivan mentioned that the 51% of search share Yahoo! is required to deliver to Bing applies only to desktop traffic & Yahoo! has no such limit on mobile searches. In theory this could mean Yahoo! could quickly become a Google shop, with Microsoft as a backfill partner.

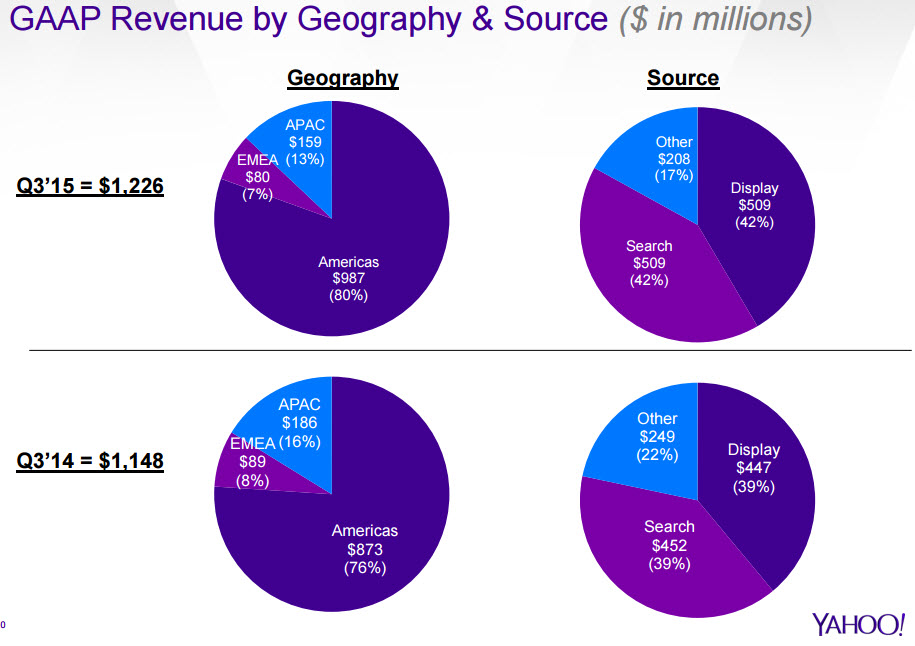

When asked about the future of Gemini on today’s investor conference call Marissa Mayer stated she expected Gemini to continue scaling more on mobile. She also stated she felt the Google deal would help Yahoo! refine their ad mix & give them additional opportunities in international markets. Yahoo! is increasingly reliant on the US & is unable to bid to win marketshare in foreign markets.

(Myopic) Learning Systems

Marissa Mayer sounded both insightful and myopic on today’s conference call. She mentioned how as they scale up Gemini the cost of that is reflected in foregone revenues from optimizing their learning systems and improving their ad relevancy. On its face, that sort of comment sounds totally reasonable.

An unsophisticated or utterly ignorant market participant might even cheer it on, without realizing the additional complexity, management cost & risk they are promoting.

Where the myopic quick win view falls flat is on the other side of the market.

Sure a large web platform can use big data to optimize their performance and squeeze out additional pennies of yield, but for an advertiser these blended networks can be a real struggle. How do they budget for any given network when a single company is arbitrarily mixing between 3 parallel networks? A small shift in Google AdWords ad spend might not be hard to manage, but what happens if an advertiser suddenly gets a bunch of [trending topic] search ad clicks? Or maybe they get a huge slug of mobile clicks which don’t work very well for their business. Do they disable the associated keyword in Yahoo! Gemini? Or Bing Ads? Or Google AdWords? All 3?

Do they find that pausing their ads in one network quickly leads to the second (or third) network carrying their ads across?


Virtual Real Estate Virtually Disappears

Back in 2009 Google executives were scared of not being able to retain talent with stock options after Google’s stock price cratered with the rest of the market & Google’s ad revenue growth rate slid to zero. That led them to reprice employee stock options. That is as close as Google has come to a “near death” experience since their IPO. They’ve consistently grown & become more dominant.

In November of 2009 I cringed when I saw the future of SEO in Google SERPs where the organic results were outright displaced & even some of the featured map listings had their phone numbers removed.

Investing in Search

In 2012 a Googler named Jon Rockway was more candid than Googlers are typically known for being: “SEO isn’t good for users or the Internet at large. … It’s a bug that you could rank highly in Google without buying ads, and Google is trying to fix the bug.”

It isn’t surprising Google greatly devalued keyword domain names & hit sites like eHow hard. And it isn’t surprising Demand Media is laying off staff and is rumored to be exploring selling their sites. If deleting millions of articles from eHow doesn’t drive a recovery, how much money can they lose on the rehab project before they should just let it go?

“If you want to stop spam, the most straight forward way to do it is to deny people money because they care about the money and that should be their end goal. But if you really want to stop spam, it is a little bit mean, but what you want to do, is break their spirits.” – Matt Cutts

Through a constant ex-post-facto redefinition of “what is spam” to include most anything which is profitable, predictable & accessible, Google engineers work hard to “deny people money.”

Over time SEO became harder & less predictable. The exception was Google investments like Thumbtack, in which case others’ headwind became your tailwind & a list of techniques declared off limits became a strategy guidebook.

Communications got worse, Google stopped even pretending to help the ecosystem, and they went so far as claiming that even asking for a link was spam. All the while, as they were curbing third party investment into the ecosystem (“deny them money”), they worked on PR for their various investments & renamed the company from Google to Alphabet so they could expand their scope of investments.

“We also like that it means alpha‑bet (Alpha is investment return above benchmark), which we strive for!” – Larry Page

From Do/Know/Go to Scrape/Displace/Monetize

It takes a lot of effort & most people are probably too lazy to do it, but if you look at the arc of Google’s patents related to search quality, many of the early ones revolved around links. Then many focused on engagement related signals. Chrome & Android changed the pool of signals Google had access to. Things like Project Fi, Google Fiber, Nest, and Google’s new OnHub router give them more of that juicy user data. Many of their recently approved patents revolve around expanding the knowledge graph so that they may outright displace the idea of having a neutral third party result set for an increasing share of the overall search pie.

Searchers can instead get bits of “knowledge” dressed in various flavors of ads.

This sort of displacement is having a significant impact on a variety of sites. But for most it is a slow bleed rather than an overnight sudden shift. In that sort of environment, even volunteer run sites will eventually atrophy. They will have fewer new users, and as some of the senior people leave, eventually fewer will rise through the ranks. Or perhaps a greater share of the overall ranks will be driven by money.

Jimmy Wales stated: “It is also false that ‘Wikipedia thrives on clicks,’ at least as compared to ad-revenue driven sites… The relationship between ‘clicks’ and the things we care about: community health and encyclopedia quality is not nothing, but it’s not as direct as some think.”

Most likely the relationship *is* quite direct, but there is a lagging impact. Today’s major editors didn’t join the site yesterday & it takes time to rise through the ranks.

As the big sites become more closed off the independent voices are pushed aside or outright disappear.

If Google works hard enough at prioritizing “deny people money” as a primary goal, then they will eventually get an index quality that reflects that lack of payment. Plenty of good looking & well-formatted content, but a mix of content which:

- is monetized indirectly & in ways which are not clearly disclosed

- has interstitial ads and slideshows where the ads look like the “next” button & the “next” button is colored the same color as the site’s background

- is done as “me too” micro-reporting with no incremental analysis

- is algorithmically generated

Celebrating Search “Innovation”

There has been a general pattern in search innovation. Google introduces a new feature, pitches it as being the next big thing, gets people to adopt it, collects data on the impact of the feature, clamps down on selectively allowing it, perhaps removes the feature outright from organic search results, and then permanently adds the feature to their ad units.

This sort of pattern has happened so many times it is hard to count.

Google puts faces in search results for authorship & to promote Google+, Google realizes Google+ is a total loser & disconnects it, new ad units for local services show faces in the search results. What was distracting noise was removed, then it was re-introduced as part of an ad unit.

I’m confused didn’t Google pull authorship cuz faces in the SERPS was a bad experience for the users? pic.twitter.com/mI2NdyGzd7— Michael Gray (@graywolf) July 31, 2015

The same sort of deal exists elsewhere. Google acquires YouTube, launches universal search, offers video snippets, increases size of video snippets. Then video snippets get removed from most listings “because noise.” YouTube gets enlarged video snippets. And, after removing the “noise” of video stills in the search results Google is exploring testing video ads in the search results.

Some sites which bundle software got penalized in organic search and are not even allowed to buy AdWords ads. At the extreme, sites which bundled no software, but simply didn’t link to an End User License Agreement (EULA) from the download page, were penalized. Which leads to uncomfortable conversations like this one:

Google Support: I looked through this, and it seemed that one of the issues was a lack of an End User Agreement (EULA)

Simtec: An EULA is displayed by the setup program before installing starts. Also, the end user license agreements are linked to from here http://www.httpwatch.com/buy/orderingfaq.aspx#licensetypes

Google Support: Hmm, They do want it on the download page itself

Simtec: How come there isn’t one here? google.co.uk/chrome/browser/desktop/

Google Support: LOL

Simtec: No really?

Google Support: That’s a great question

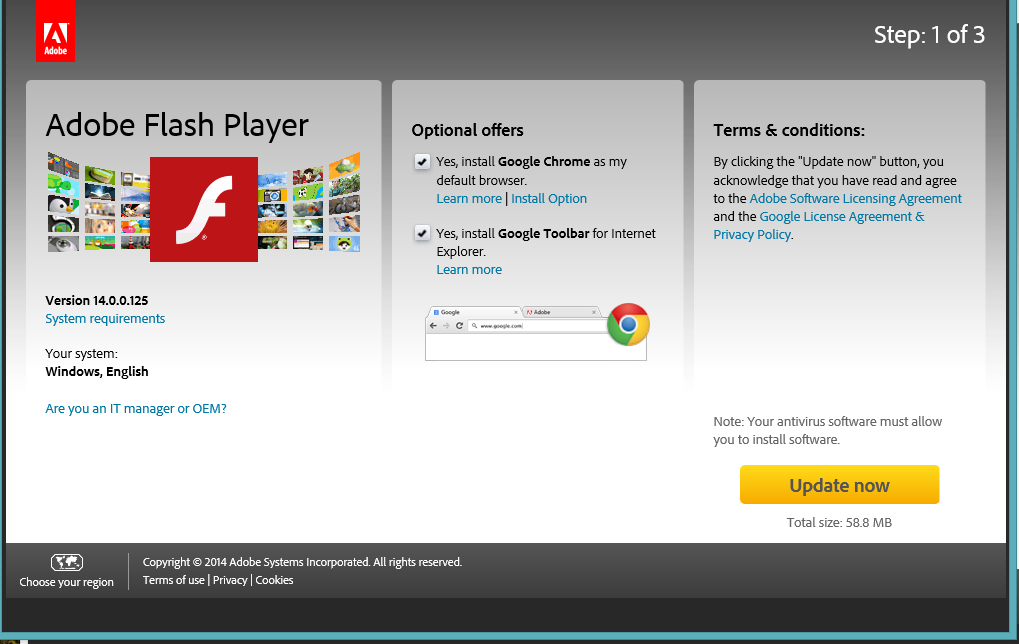

Of course, it goes without saying that much of the Google Chrome install base came from negative option software bundling on Adobe Flash security updates.

Google claimed helpful hotel affiliate sites should be rated as spam, then they put their own affiliate ads in hotel search results & even recommended hotel searches in the knowledge graph on city name searches.

Google created a penalty for sites which have an ad heavy interface. Many of Google’s search results are nothing but ads for the entire first screen.

Google search engineers have recently started complaining about interstitial ads & suggested they might create a “relevancy” signal based on users not liking those. At the same time, an increasing number of YouTube videos have unskippable pre-roll ads. And the volume of YouTube ad views is so large that it is heavily driving down Google’s aggregate ad click price. On top of this, Google also offers a survey tool which publishers can lock content behind, requiring users to answer a question before they can see the full article they just saw ranking in the search results.

“Everything is possible, but nothing is real.” – Living Colour

Blue Ocean Opportunity

Amid the growing ecosystem instability & increasing hypocrisy, there have perhaps been only a couple “blue ocean” areas left in organic search: local search & brand.

And it appears Google might be well on their way in trying to take those away.

For years brand has been the solution to almost any SEO problem.

I wonder how many SEOs working for big brands have done absolutely nothing of value since 2012 yet still look like geniuses to executives.— Ross Hudgens (@RossHudgens) August 7, 2015

But Google has been increasing the cost of owning a brand. They are testing other ad formats to drive branded search clicks through more expensive ad formats like PLAs & they have been drastically increasing brand CPCs on text ads. And while that second topic has recently gained broader awareness, it has been a trend for years now: “Over the last 12 months, Brand CPCs on Google have increased 80%” – George Michie, July 30, 2013.

There are other subtle ways Google has attacked brand, including:

- penalties on many of the affiliates of those brands

- launching their own vertical search ad offerings in key big-money verticals

- investing billions in “disruptive” start ups which are exempt from the algorithmic risks other players must deal with

- allowing competitors to target competing brands not only within the search results, but also as custom affinity audiences

- linking to competing businesses in the knowledge graph

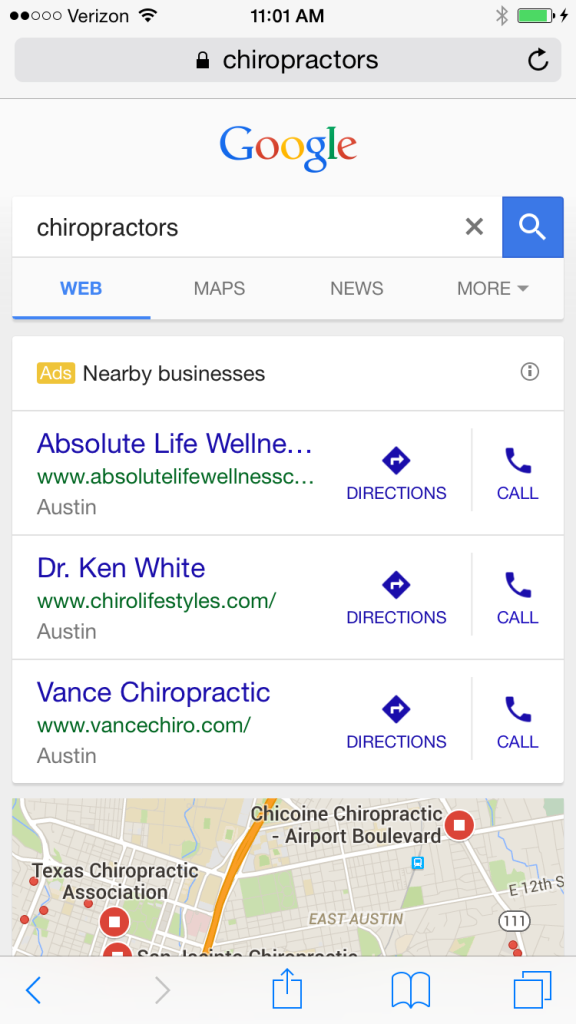

Google has recently dialed up monetization of local search quite aggressively as well. I’ve long highlighted how mobile search results are ad heavy & have grown increasingly so over time. Google has recently announced call only ad formats, a buy button for mobile ads, local service provider ads, appointment scheduling in the SERPs, direct hotel booking, etc.

And, in addition to all the above new ad formats, recently it was noticed Google is now showing 3 ads on mobile devices even for terms without much commercial intent, like [craft beer].

I like how this new-ish search box takes up a ton of space and puts all these ads right in the prime viewing area pic.twitter.com/CcYdW118gf— Jared McKiernan (@jaredmckiernan) August 18, 2015

Now that the mobile search interface is literally nothing but ads above the fold, early data shows a significant increase in mobile ad clicks. Of course it doesn’t matter if there are 2 or 3 ads, if Google shows ad extensions on SERPs with only 2 ads to ensure they drive the organic results “out of sight, out of mind.”

Earlier this month it was also noticed Google replaced 7-pack local results with 3-pack local results for many more search queries, even on desktop search results. On some of these results they only show a call button, on others they show links to sites. It is a stark contrast to the vast array of arbitrary (and even automated) ad extensions in AdWords.

Why would they determine users want to see links to the websites & the phone numbers, then decide overnight users don’t want those?

Why would Google determine for many years that 7 is a good number of results to show, and then overnight shift to showing 3?

If Google listed 7 ads in a row people might notice the absurdity of it and complain. But if Google only shows 3 results, then they can quickly convert it into an ad unit with little blowback.

You don’t have to be a country music fan to know the Austin SEO limits in a search result where the local results are now payola.

Try not to hurt your back while looking down for the organic search results!

Here are two tips to ensure any SEO success isn’t ethereal: don’t be nearby, and don’t be a business. :D

Virtual Real Estate Virtually Disappears

Back in 2009 Google executives were scared of not being able to retain talent with stock options after Google’s stock price cratered with the rest of the market & Google’s ad revenue growth rate slid to zero. That led them to reprice employee stock options. That is as close as Google has come to a “near death” experience since their IPO. They’ve consistently grown & become more dominant.

In November of 2009 I cringed when I saw the future of SEO in Google SERPs where the organic results were outright displaced & even some of the featured map listings had their phone numbers removed.

Investing in Search

In 2012 a Googler named Jon Rockway was more candid than Googlers are typically known for being: “SEO isn’t good for users or the Internet at large. … It’s a bug that you could rank highly in Google without buying ads, and Google is trying to fix the bug.”

It isn’t surprising Google greatly devalued keyword domain names & hit sites like eHow hard. And it isn’t surprising Demand Media is laying off staff and is rumored to be exploring selling their sites. If deleting millions of articles from eHow doesn’t drive a recovery, how much money can they lose on the rehab project before they should just let it go?

“If you want to stop spam, the most straight forward way to do it is to deny people money because they care about the money and that should be their end goal. But if you really want to stop spam, it is a little bit mean, but what you want to do, is break their spirits.” – Matt Cutts

Through a constant ex-post-facto redefinition of “what is spam” to include most anything which is profitable, predictable & accessible, Google engineers work hard to “deny people money.”

Over time SEO became harder & less predictable. The exception was Google investments like Thumbtack, where others’ headwind became your tailwind & a list of techniques declared off limits became a strategy guidebook.

Communications got worse, Google stopped even pretending to help the ecosystem, and they went so far as claiming that even asking for a link was spam. All the while, as they were curbing third party investment into the ecosystem (“deny them money”), they worked on PR for their various investments & renamed the company from Google to Alphabet so they could expand their scope of investments.

“We also like that it means alpha‑bet (Alpha is investment return above benchmark), which we strive for!” – Larry Page

From Do/Know/Go to Scrape/Displace/Monetize

It takes a lot of effort & most people are probably too lazy to do it, but if you look at the arc of Google’s patents related to search quality, many of the early ones revolved around links. Then many focused on engagement related signals. Chrome & Android changed the pool of signals Google had access to. Things like Project Fi, Google Fiber, Nest, and Google’s new OnHub router give them more of that juicy user data. Many of their recently approved patents revolve around expanding the knowledge graph so that they may outright displace the idea of having a neutral third party result set for an increasing share of the overall search pie.

Searchers can instead get bits of “knowledge” dressed in various flavors of ads.

This sort of displacement is having a significant impact on a variety of sites. But for most it is a slow bleed rather than an overnight sudden shift. In that sort of environment, even volunteer run sites will eventually atrophy. They will have fewer new users, and as some of the senior people leave, eventually fewer will rise through the ranks. Or perhaps a greater share of the overall ranks will be driven by money.

Jimmy Wales stated: “It is also false that ‘Wikipedia thrives on clicks,’ at least as compared to ad-revenue driven sites… The relationship between ‘clicks’ and the things we care about: community health and encyclopedia quality is not nothing, but it’s not as direct as some think.”

Most likely the relationship *is* quite direct, but there is a lagging impact. Today’s major editors didn’t join the site yesterday; they took time to rise through the ranks.

As the big sites become more closed off the independent voices are pushed aside or outright disappear.

If Google works hard enough at prioritizing “deny people money” as a primary goal, then they will eventually get an index quality that reflects that lack of payment. Plenty of good looking & well-formatted content, but a mix of content which:

- is monetized indirectly & in ways which are not clearly disclosed

- has interstitial ads and slideshows where the ads look like the “next” button & the “next” button is colored the same color as the site’s background

- is done as “me too” micro-reporting with no incremental analysis

- is algorithmically generated

Celebrating Search “Innovation”

There has been a general pattern in search innovation. Google introduces a new feature, pitches it as being the next big thing, gets people to adopt it, collects data on the impact of the feature, clamps down on selectively allowing it, perhaps removes the feature outright from organic search results, and then permanently adds the feature to their ad units.

This sort of pattern has happened so many times it is hard to count.

Google puts faces in search results for authorship & to promote Google+, Google realizes Google+ is a total loser & disconnects it, new ad units for local services show faces in the search results. What was distracting noise was removed, then it was re-introduced as part of an ad unit.

I’m confused didn’t Google pull authorship cuz faces in the SERPS was a bad experience for the users? pic.twitter.com/mI2NdyGzd7— Michael Gray (@graywolf) July 31, 2015

The same sort of deal exists elsewhere. Google acquires YouTube, launches universal search, offers video snippets, increases size of video snippets. Then video snippets get removed from most listings “because noise.” YouTube gets enlarged video snippets. And, after removing the “noise” of video stills in the search results Google is exploring testing video ads in the search results.

Some sites which bundle software got penalized in organic search and are not even allowed to buy AdWords ads. At the extreme, sites which bundled no software, but simply didn’t link to an End User License Agreement (EULA) from the download page, were penalized. Which leads to uncomfortable conversations like this one:

Google Support: I looked through this, and it seemed that one of the issues was a lack of an End User Agreement (EULA)

Simtec: An EULA is displayed by the setup program before installing starts. Also, the end user license agreements are linked to from here http://www.httpwatch.com/buy/orderingfaq.aspx#licensetypes

Google Support: Hmm, They do want it on the download page itself

Simtec: How come there isn’t one here? google.co.uk/chrome/browser/desktop/

Google Support: LOL

Simtec: No really?

Google Support: That’s a great question

Of course, it goes without saying that much of the Google Chrome install base came from negative option software bundling on Adobe Flash security updates.

Google claimed helpful hotel affiliate sites should be rated as spam, then they put their own affiliate ads in hotel search results & even recommended hotel searches in the knowledge graph on city name searches.

Google created a penalty for sites which have an ad heavy interface. Many of Google’s search results are nothing but ads for the entire first screen.

Google search engineers have recently started complaining about interstitial ads & suggested they might create a “relevancy” signal based on users not liking those. At the same time, an increasing number of YouTube videos have unskippable pre-roll ads. And the volume of YouTube ad views is so large that it is heavily driving down Google’s aggregate ad click price. On top of this, Google also offers a survey tool which publishers can lock content behind, requiring users to answer a question before they can see the full article they just saw ranking in the search results.

“Everything is possible, but nothing is real.” – Living Colour

Blue Ocean Opportunity

Amid the growing ecosystem instability & increasing hypocrisy, there have perhaps been only a couple of “blue ocean” areas left in organic search: local search & brand.

And it appears Google might be well on their way to taking those away.

For years brand has been the solution to almost any SEO problem.

I wonder how many SEOs working for big brands have done absolutely nothing of value since 2012 yet still look like geniuses to executives.— Ross Hudgens (@RossHudgens) August 7, 2015

But Google has been increasing the cost of owning a brand. They are testing ways to drive branded search clicks through more expensive ad formats like PLAs & they have been drastically increasing brand CPCs on text ads. And while that second topic has recently gained broader awareness, it has been a trend for years now: “Over the last 12 months, Brand CPCs on Google have increased 80%” – George Michie, July 30, 2013.

There are other subtle ways Google has attacked brand, including:

- penalties on many of the affiliates of those brands

- launching their own vertical search ad offerings in key big-money verticals

- investing billions in “disruptive” start ups which are exempt from the algorithmic risks other players must deal with

- allowing competitors to target competing brands not only within the search results, but also as custom affinity audiences

- linking to competing businesses in the knowledge graph

Google has recently dialed up monetization of local search quite aggressively as well. I’ve long highlighted how mobile search results are ad heavy & have grown increasingly so over time. Google has recently announced call only ad formats, a buy button for mobile ads, local service provider ads, appointment scheduling in the SERPs, direct hotel booking, etc.

And, in addition to all the above new ad formats, recently it was noticed Google is now showing 3 ads on mobile devices even for terms without much commercial intent, like [craft beer].

I like how this new-ish search box takes up a ton of space and puts all these ads right in the prime viewing area pic.twitter.com/CcYdW118gf— Jared McKiernan (@jaredmckiernan) August 18, 2015

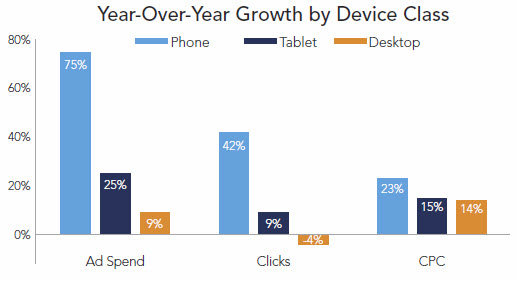

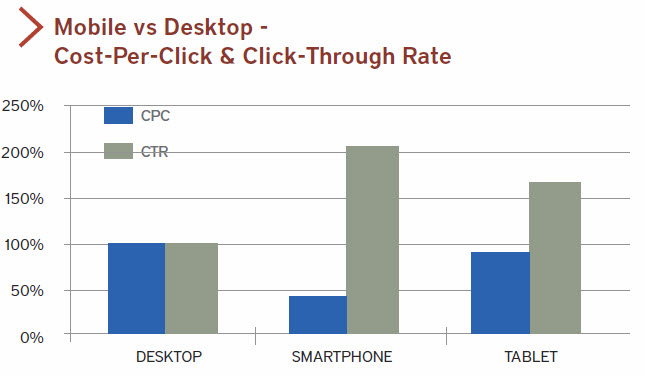

Now that the mobile search interface is literally nothing but ads above the fold, early data shows a significant increase in mobile ad clicks. Of course, it doesn’t matter whether there are 2 or 3 ads if Google shows ad extensions on SERPs with only 2 ads to ensure the organic results are pushed “out of sight, out of mind.”

Earlier this month it was also noticed Google replaced 7-pack local results with 3-pack local results for many more search queries, even on desktop search results. On some of these results they only show a call button, on others they show links to sites. It is a stark contrast to the vast array of arbitrary (and even automated) ad extensions in AdWords.

Why would they determine users want to see links to the websites & the phone numbers, then decide overnight users don’t want those?

Why would Google determine for many years that 7 is a good number of results to show, and then overnight shift to showing 3?

If Google listed 7 ads in a row people might notice the absurdity of it and complain. But if Google only shows 3 results, then they can quickly convert it into an ad unit with little blowback.

You don’t have to be a country music fan to know the Austin SEO limits in a search result where the local results are now payola.

Try not to hurt your back while looking down for the organic search results!

Here are two tips to ensure any SEO success isn’t ethereal: don’t be nearby, and don’t be a business. :D

Yahoo! Search Testing Google Search Results

Search PandaMonium

A couple days ago Microsoft announced a deal with AOL to have AOL sell Microsoft display ads & for Bing to power AOL’s organic search results and paid search ads for a decade starting in January.

The search landscape is still und…

Web Design Resources for Non-Designers

Most of you are too busy monitoring Google’s latest algorithm updates, examining web analytics, and building links and content to stay up to date on the design world.

Usually, creative people who excel at design aren’t very good at the left-brain thinking required to succeed in the highly-technical search engine optimization industry. Likewise, very few people with the analytical mindset required for search engine optimization would do well in the free-spirited design industry.

Unfortunately, in the real world, you’re often expected to do exactly that. And while most people understand that it would be ludicrous to expect their doctor to also troubleshoot their plumbing, they don’t seem to understand why they shouldn’t expect the person responsible for their SEO to also handle their design needs from time to time.

So from time to time you’re forced to design things for your clients. Or sometimes, you just need to whip up something for yourself instead of trying to find someone who can deliver what you need on Fiverr.

Since you probably won’t start sporting a black turtleneck and talking about crop marks, press checks, or CMYK colors anytime soon, it seems silly to shell out thousands of dollars on software you’ll only use occasionally. So I’ve compiled a list of design resources for non-designers.