Building a Legal Moat for Your SEO Castle

About a month ago, a year-old case of an SEO firm being sued by its client resurfaced via a tweet from Matt Cutts.

I’d like to add something to this conversation that will help you, as a service provider, avoid that really, really scary situation.

First, some quick background, if I may. I can’t share specifics, but I’ve been a party, on both sides, to actual litigation pertaining to SEO contracts (not services rendered, just contractual issues with a third party).

I’ve been both the plaintiff and the defendant in cases involving contractual disputes and legal obligations, so I, much to my dismay, speak from experience.

Suffice it to say, I’m not a lawyer. Don’t act on any of this advice without talking it over with your counsel so they can tailor it to your specific needs and state law.

Basic Protections

There are essentially 3 ways to legally protect yourself and/or your company objectively. I say objectively because anyone can sue you for anything, and “service” is a subjective term, as are “results,” unless they are specifically spelled out in your contract.

Objectively speaking, the law gives you 3 broad arenas for protective measures:

- Contracts

- Entity Selection

- Insurance

Contracts

Get a real lawyer. Do not use internet “templates,” and do not modify any piece of the contract yourself. Make sure your attorney completely understands what you do; a good lawyer will listen to you. Heck, mine now knows who Matt Cutts is, where the Webmaster Guidelines are located, and what “anchor text” is :)

Your contracts need to cover the following scenarios:

- Client services

- Vendor relationships

- Employee/Contractor relationships

For standard client agreements you’ll want to cover some basic areas:

- Names of the legal entities partaking in the agreement

- Duties and nature of services

- Term and termination (who can cancel and when, what the ramifications are, etc.)

- Fees

- No exclusive duty (a clause that says you can work with other clients and such)

- Disclaimer, Limitation of Liability

- Confidentiality

- Notices (what is considered legal notice? a letter? certified mail? email?)

- Governing law

- Attorney’s fees (if you need to enforce the contract make sure you can also collect fees)

- Relationship of Parties (spell out the relationship: independent entities? partners? joint ventures? Spell out exactly what you are and what you are not)

- Scope of Work

- Signatures (sign in your capacity within your entity: member, president, CEO, etc.)

A couple of core areas of the contract deserve some extra discussion:

For governing law, go with your home state if possible. Ideally, I try to get an arbitration clause in there instead of going to court, so that if there is a dispute it goes to a much less expensive form of resolution.

However, you can make an argument that if your contract names your home state as the governing law and your language is strong, you are better off doing that instead of arbitration, where one person makes the decision and there is little or no right of appeal.

For limitation of liability, go broad. Really broad. You want to spell out that organic search (or just about any service) is not guaranteed to produce results, that no promises were made, and that Google does not fully publish its algorithm, so you can’t be held liable for XYZ that happens.

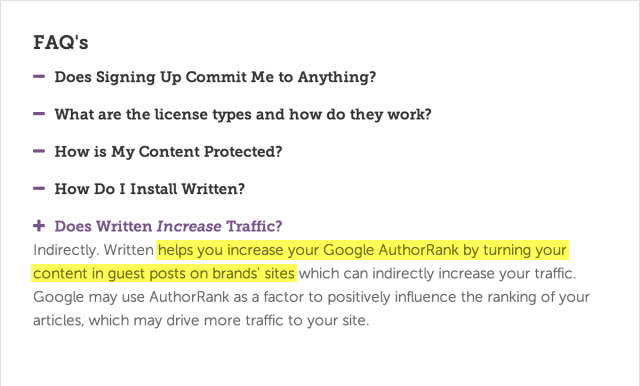

Also, if your client asks you to do things that go against the Webmaster Guidelines and you decide to do them, you NEED to get that documented. Have them email it to you, record the call, something. Here is the liability clause in my contract:

Client agrees and acknowledges that the internet is an organic, constantly shifting entity, and that Client’s ranking and/or performance in a search engine may change for many reasons and be affected by many factors, including but not limited to any actual or alleged non-compliance by Provider to guidelines set forth by Google related to search engine optimization.

Client agrees that no representation, express or implied, and no warranty or guaranty is provided by Provider with respect to the services to be provided by Provider under this Agreement. Provider’s services may be in the form of rendering consultation which Client may or may not choose to act on. To the maximum extent permitted by law, Client agrees to limit the liability of Provider and its officers, owners, agents, and employees to the sum of Provider’s fees actually received from Client.

This limitation will apply regardless of the cause of action or legal theory pled or asserted. In no event shall Provider be liable for any special, incidental, indirect, or consequential damages arising from or related to this Agreement or the Project. Client agrees, as a material inducement for Provider to enter into this Agreement that the success and/or profitability of Client’s business depends on a variety of factors and conditions beyond the control of Provider and the scope of this Agreement. Provider makes no representations or warranties of any kind regarding the success and/or profitability of Client’s business, or lack thereof, and Provider will not be liable in any manner respecting the same.

Client agrees to indemnify and hold harmless Provider and its officers, owners, agents, and employees from and against any damages, claims, awards, and reasonable legal fees and costs arising from or related to any services provided by Provider, excepting only those directly arising from Provider’s gross negligence or willful misconduct.

For vendor and independent contractor agreements, you’ll want most of the aforementioned clauses (especially relationship of parties). For employee agreements, get with your lawyer, because state laws differ quite a bit and a lot of us use remote workers in different states. Vendor and contractor agreements also need a few more things:

- Non-Competition and non-interference

- Non-Solicitation and non-contact

These clauses essentially prohibit the pursuit of your clientele and employees by a vendor/contractor for a specified period of time.

Legal Entity

Don’t be a sole proprietor, ever. If you’re a smaller shop, you might consider being a single-member LLC (just you), a multi-member LLC (you and other owners), or an S Corp. If you’re a larger operation, you might want to incorporate and go Inc.

The benefits of the LLC set up are:

- Your personal assets are generally untouchable (provided you are not commingling funds in a bank account)

- Very easy to administer compared to other options

- Your liability is limited to company assets (pro tip: clear out your business bank account each month, minus some operating margin, and move it to personal savings)

Benefits of an S Corp are:

- Same protections as LLC

- You save a fair amount on self-employment taxes (more below)

With the S Corp there’s more paperwork and more filings, but if you are earning a fair bit of money it may be worth it to you. Here’s a good article breaking this all down, and an excerpt:

“If you operate your business as a sole proprietorship or partnership/LLC, you will pay roughly 15.3% in self-employment taxes on your $100,000 of profits. The calculations get a little tricky if you want to be really super-precise but you can think about self-employment tax as roughly a 15% tax. So 15% on $100,000 equals $15,000. Roughly.”

“With an S corporation, you split your business profits into two categories: “shareholder wages” and “distributive share.” Only the “shareholder wages” get subjected to the 15.3% tax. The leftover “distributive share” is not subject to 15.3% tax.”

Be careful here (and I’m not a CPA, so don’t do anything without consulting your accountant) not to be absurd with your wages, because the IRS expects S Corp shareholder-employees to pay themselves reasonable compensation. So if your net income is $1 million, don’t take $25k in wages and $975k as a distribution.
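To make the quoted rule of thumb concrete, here is a rough Python sketch comparing the two structures. It is deliberately simplified and rests on stated assumptions. It applies a flat 15.3% to whatever amount is taxed. It ignores the 92.35% net-earnings adjustment, the Social Security wage base cap, and income tax. The $40,000 salary is a hypothetical figure, not a judgment of what counts as reasonable compensation.

```python
# Rough comparison of self-employment tax (sole proprietor / default LLC)
# versus payroll tax on S Corp shareholder wages.
# Assumptions: flat 15.3% rate, no 92.35% adjustment, no Social Security
# wage base cap, income tax ignored. Not tax advice.

SE_TAX_RATE = 0.153  # combined Social Security + Medicare rate from the quote


def llc_self_employment_tax(profit: float) -> float:
    """All profit is treated as subject to the 15.3% tax."""
    return profit * SE_TAX_RATE


def s_corp_payroll_tax(wages: float) -> float:
    """Only shareholder wages are taxed at 15.3%; the distributive share is not."""
    return wages * SE_TAX_RATE


if __name__ == "__main__":
    profit = 100_000
    wages = 40_000  # hypothetical salary; your CPA decides what is reasonable
    distribution = profit - wages

    llc_tax = llc_self_employment_tax(profit)
    s_corp_tax = s_corp_payroll_tax(wages)

    print(f"LLC self-employment tax: ${llc_tax:,.0f}")
    print(f"S Corp payroll tax: ${s_corp_tax:,.0f} "
          f"(on ${wages:,} wages, ${distribution:,} distributed)")
    print(f"Rough difference: ${llc_tax - s_corp_tax:,.0f}")
```

Under these assumptions it prints $15,300, $6,120 and $9,180. The same arithmetic shows why an absurdly low salary is tempting and risky: $25k of wages on $1 million of profit would put only about $3,825 through the 15.3% tax.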

Some final thoughts on entities:

- Most of you will probably fall into the LLC/S Corp category; get with your attorney and accountant

- Keep everything separate (credit cards, bank accounts, etc.), because if you don’t, your personal assets might be at risk through “piercing the corporate veil”

Insurance

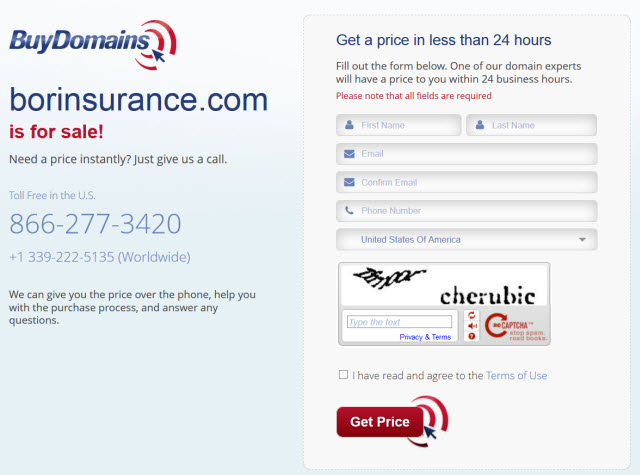

As you would imagine, insurance policies are few and far between for our industry. You can get general liability for your office, workers comp for your employees, disability for yourself, and so on. However, what you might want to look into is a professional liability policy.

You’ll probably end up looking at a miscellaneous professional liability policy, like the one offered by Travelers (marketing consultant?). You’ll likely have to educate your agent on your business practices to ensure proper coverage.

This might be worth it for the legal defense clause alone, meaning the insurer will pay for a lawyer to defend you. The proper entity classification might protect your assets, but paying lawyers to defend even frivolous lawsuits is expensive.

Record Keeping

This is a bit outside the “contract” topic, but good record keeping is essential. If you use a project management and/or CRM system, make sure you can export your data when you need it.

Many online CRM and project management applications have limited export capabilities, especially when it comes to exporting comments and notes on things like tasks and records. Most have an API that a developer can use to write a custom export for you. I’d look into this as well.
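As an illustration of what such a custom export might look like, here is a minimal Python sketch. It assumes a hypothetical `fetch_page(page_number)` function, which your developer would write against your tool's actual API. That function is assumed to return one page of note records as dictionaries, and an empty list once there are no more pages. The field names are hypothetical too. Real CRMs differ in pagination style, rate limits and authentication.

```python
import csv
from typing import Callable, Dict, Iterator, List, TextIO

# Hypothetical: fetch_page(page_number) wraps your CRM / project management
# API and returns one page of note records, or an empty list when done.
FetchPage = Callable[[int], List[Dict]]


def iter_records(fetch_page: FetchPage) -> Iterator[Dict]:
    """Walk numbered pages (starting at 1) until an empty page comes back."""
    page = 1
    while True:
        batch = fetch_page(page)
        if not batch:
            return
        yield from batch
        page += 1


def export_to_csv(fetch_page: FetchPage, out: TextIO, fields: List[str]) -> int:
    """Write every record to CSV, keeping only `fields`; return the row count."""
    writer = csv.DictWriter(out, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for record in iter_records(fetch_page):
        writer.writerow(record)  # missing fields are written as empty strings
        count += 1
    return count
```

When writing to a real file, open it with `newline=""` so the `csv` module controls line endings. Running the export on a schedule means a current copy of your notes exists before you ever need it.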

Final Thoughts

Get with your attorney and CPA to get your specific situations up to legal snuff if you haven’t already. Don’t act on my advice as I’m not a lawyer nor a CPA. Contracts and agreements are not fun to negotiate and can be even harder when you work with people you generally trust.

However, when it comes to business dealings and contracts I would save my trust for my lawyer :)

Have We Reached Peak Advertising?

The internet runs on advertising. Google is funded almost entirely by advertising. Facebook, likewise. Digital marketing spend continues to rise:

Internet advertising revenues in the United States totaled $12.1 billion in the fourth quarter of 2013, an increase of 14% from the 2013 third-quarter total of $10.6 billion and an increase of 17% from the 2012 fourth-quarter total of $10.3 billion. 2013 full year internet advertising revenues totaled $42.78 billion, up 17% from the $36.57 billion reported in 2012.

Search advertising spend comes out on top, but that’s starting to change:

Search accounted for 41% of Q4 2013 revenues, down from 44% in Q4 2012, as mobile devices have shifted search-related revenues away from the desktop computer. Search revenues totaled $5.0 billion in Q4 2013, up 10% from Q4 2012, when Search totaled $4.6 billion.

The growth area for digital advertising lies in mobile:

Mobile revenues totaled 19% of Q4 2013 revenues, or $2.3 billion, up 92% from the $1.2 billion (11% of total) reported in Q4 2012
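The quoted growth percentages follow from the dollar figures. A quick Python check, using only the numbers quoted above in billions of US dollars, reproduces them after rounding.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# (new, old) revenue pairs in billions of US dollars, from the quotes above
comparisons = {
    "Q4 2013 vs Q3 2013": (12.1, 10.6),
    "Q4 2013 vs Q4 2012": (12.1, 10.3),
    "Full year 2013 vs 2012": (42.78, 36.57),
    "Mobile Q4 2013 vs Q4 2012": (2.3, 1.2),
}

for label, (new, old) in comparisons.items():
    print(f"{label}: {pct_change(new, old):+.0f}%")

# Mobile's share of total Q4 2013 internet ad revenue
print(f"Mobile share of Q4 2013: {2.3 / 12.1 * 100:.0f}%")
```

This prints +14%, +17%, +17% and +92% for the four comparisons, and 19% for mobile's share, matching the quoted report.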

Prominent venture capitalist Mary Meeker recently produced an analysis that also highlights this trend.

So, internet advertising is growing, but internet adoption is slowing down. Meanwhile, mobile and tablet adoption is increasing fast, yet advertising spend on these mediums is comparatively low. That looks like a nice opportunity for mobile; however, mobile advertising is proving hard to crack. Not many people click on paid links on mobile, and many mobile ad clicks are accidental, which drives down advertiser bids.

This is not just a problem for mobile. There may be a problem with advertising in general. It’s about trust, and lack thereof. This situation also presents a great opportunity for selling SEO.

But first, a little background….

People Know More

Advertising’s golden age was in the 1950s and 1960s.

Most consumers were information poor. At least, they were information poor when it came to getting timely information. This information asymmetry played into the hands of the advertising industry. The advertising agency provided the information that helped match the problems people had with a solution. Of course, they were framing the problem in a way that benefited the advertiser. If there wasn’t a problem, they made one up.

Today, the internet puts real time information about everything in the hands of the consumer. It is easy for people to compare offers, so the basis for advertising – which is essentially biased information provision – is being eroded. Most people see advertising as an intrusion. Just because an advertiser can get in front of a consumer at “the right time” does not necessarily mean people will buy what the advertiser has to offer with great frequency.

Your mobile phone pings. “You’re passing Gordon’s Steak House… Come in and enjoy our Mega Feast!” You can compare that offer against a wide range of offers, and you can do so in real time. More likely than not, you’ll just resent the intrusion. After all, you may be a happy regular at Susan’s Sushi.

“Knowing things” is not exclusive. Being able to “know things” is a click away. If information is freely available, then people are less likely to opt for whatever is pushed at them by advertisers at that moment. If it’s easy to research, people will do so.

This raises a problem when it comes to the economics of content creation. If advertising becomes less effective for the advertiser, then the advertiser is going to reduce spend, or shift spend elsewhere. If that happens, what becomes of the predominant web content model, which is based on advertising?

Free Content Driven By Ads May Be An Unsustainable Model

We’re seeing it in broadcast television, and we’ll see it on the web.

Television is dying and being replaced by the Netflix model. There is a lot of content. There are not enough advertisers paying top dollar as the audience is now highly fragmented. As a result, a lot of broadcast television advertising can be ineffective. However, as we’ve seen with Netflix and Spotify, people are prepared to pay directly for the content they consume in the form of a monthly fee.

The long term trend for advertising engagement on the web is not favourable.

The very first banner advertisement appeared in 1994. The clickthrough rate of that banner ad was a staggering 44%. It had novelty value, certainly. The first banner ad also existed in an environment where there wasn’t much information. The web was almost entirely about navigation.

Today, there is no shortage of content. The average Facebook advertisement clickthrough rate is around 0.04%. Advertisers get rather excited if they manage to squeeze 2% or 3% clickthrough rates out of Facebook ads.

Digital advertising is no longer novel, so the clickthrough rate has plummeted. Not only do people feel that the advertising isn’t relevant to them, they have learned to ignore advertising even when the ad speaks directly to their needs. Even at those exciting 2-3% rates, 97-98% of people will not click on the ad.

And why should they? Information isn’t hard to come by. So what is the advertiser providing the prospective customer?

Even brand engagement is plummeting on Facebook as the novelty wears off and Facebook changes its policies:

According to a new report from Simply Measured, the total engagement for the top 10 most-followed brands on Facebook has declined 40 percent year-over-year—even as brands have increased the amount of content they’re posting by 20.1 percent.

Is Advertising Already Failing?

Our industry runs on advertising. Much of web publishing runs on advertising.

However, Eric Clemons makes the point that the traditional method of advertising was always bound to fail, mainly because after the novelty wears off, it’s all about interruption, and nobody likes to be interrupted.

But wait! Isn’t the advantage of search that it isn’t interruption advertising? In search, the user requests something. Clemons feels that search results can still be a form of misdirection:

Misdirection, or sending customers to web locations other than the ones for which they are searching. This is Google’s business model. Monetization of misdirection frequently takes the form of charging companies for keywords and threatening to divert their customers to a competitor if they fail to pay adequately for keywords that the customer is likely to use in searches for the companies’ products; that is, misdirection works best when it is threatened rather than actually imposed, and when companies actually do pay the fees demanded for their keywords. Misdirection most frequently takes the form of diverting customers to companies that they do not wish to find, simply because the customer’s preferred company underbid.

He who pays becomes “relevant”:

it is not scalable; it is not possible for every website to earn its revenue from sponsored search and ultimately at least some of them will need to find an alternative revenue model.

The companies that appear high on PPC are the companies who pay. Not every company can be on top, because not every company can pay top dollar. So, what the user sees is not necessarily what the user wants, but the company that has paid the most – along with their quality score – to be there.

But nowadays, the metrics of this channel have changed dramatically, making it impossible or nearly impossible for small and mid-sized business to turn a profit using AdWords. In fact, most small businesses can’t break even using AdWords. This goes for many large businesses as well, but they don’t care. And that is the key difference, and precisely why small brands using AdWords nowadays are being bludgeoned out of existence.

Similarly, the organic search results are often dominated by large companies and entities. This is a direct effect, or a side-effect, of the algorithms. Big entities create a favourable footprint of awareness, engagement and links as a result of PR, existing momentum, brand recognition, and advertising campaigns. It’s a lot harder for small companies to dominate lucrative competitive niches as they can’t create those same footprints.

Certainly when it comes to PPC, the search visitor may be presented with various big player links at the expense of smaller players. Google, like every other advertising-driven medium, is beholden to its big advertisers. Jakob Nielsen noted in 1998:

Ultimately, those who pay for something control it. Currently, most websites that don’t sell things are funded by advertising. Thus, they will be controlled by advertisers and will become less and less useful to the users.

If Interruption Advertising Is Failing, Is Advertising Scalable?

Being informed has changed customer behaviour.

The problem is not the medium, the problem is the message, and the fact that it is not trusted, not wanted, and not needed.

People don’t trust ads. There is a vast literature to support this. Is it all wrong?

People don’t want ads. Again, there is a vast literature to support this. Think about your own behavior: your own channel surfing and fast forwarding, and the timing of when you leave the TV to get a snack. Is it during the content or the commercials?

People don’t need ads. There is a vast amount of trusted content on the net. Again, there is literature on this. But think about how you form your opinion of a product, from online ads or online reviews?

There is no shortage of places to put ads. Competition among them will be brutal. Prices will be driven lower and lower, for everyone but Google.

If the advertising is not scalable, then a lot of content based on advertising will die. Advertising may not be able to support the net:

Now reality is reasserting itself once more, with familiar results. The number of companies that can be sustained by revenues from internet advertising turns out to be much smaller than many people thought, and Silicon Valley seems to be entering another “nuclear winter”

A lot of AdSense publishers are being kicked out of the program. Many are terminated without explanation. Google appears to be systematically culling the publisher herd. Why? Shouldn’t web publishing, supported by advertising, be growing?

The continuing plunge in AdSense is in sharp contrast to robust 20% revenue growth in 2012, which outpaced AdWords’ growth of 19%… There are serious issues with online advertising affecting the entire industry. Google has reported declining value from clicks on its ads. And the shift to mobile ads is accelerating the decline, because it produces a fraction of the revenue of desktop ads.

Matt Sanchez, CEO of San Francisco-based ad network Say Media, recently warned that “Mobile Is Killing Media.”

Digital publishing is headed off a cliff … There’s a five fold gap between mobile revenue and desktop revenue… What makes that gap even starker is how quickly it’s happening… On the industry’s current course, that’s a recipe for disaster.

Prices tumble when consumers have near-perfect, real-time information. Travel. Consumer goods. Anything generic that can be readily compared is experiencing falling prices and shrinking margins. Sales growth in many consumer categories is coming from the premium offerings. For example, beer consumption is falling across the board except in one area: boutique, specialist brews. That market sector is growing as customers become a lot more aware of options that are not just good enough, but great. Boutique breweries offer a more personal relationship, and they offer something the customer perceives as being great, not just “good enough”.

Mass marketing is expensive. Most of the money spent on it is wasted. Products and services that are “just good enough” will be beaten by products and services that are a precise fit for consumers’ needs. Good enough is no longer good enough; products and services need to be great and precisely targeted, unless you’ve got advertising money to burn.

How Do We Get To These Consumers If They No Longer Trust Paid Advertising?

Consumers will go to information suppliers they trust. There is always demand for a trusted source.

Trip Advisor is a great travel sales channel. It’s a high trust layer over a commodity product. People don’t trust Trip Advisor, per se, they trust the process. Customers talk to each other about the merits, or otherwise, of holiday destinations. It’s transparent. It isn’t interruptive, misleading, or distracting. Consumers seek it out.

Trust models will be one way around the advertising problem. This suits SEOs. If you provide trusted information, especially in a transparent, high-trust form, like Trip Advisor, you will likely win out over those using more direct sales methods. Consumers are getting a lot better at tuning those out.

The trick is to remove the negative experience of advertising by not appearing to be advertising at all. Long term, it’s about developing relationships built on trust, not on interruption and misdirection. It’s a good idea to think about advertising as a relationship process, as opposed to the direct marketing model on which the web is built – which is all about capturing the customer just before point of sale.

Rand Fishkin explained the web purchase process well in this presentation. The process whereby someone becomes a customer, particularly on the web, isn’t all about the late stages of the transaction. We have to think of it in terms of a slow burning relationship developed over time. The consumer comes to us at the end of an information comparison process. Really, it’s an exercise in establishing consumer trust.

Amazon doesn’t rely on advertising. Amazon is a trusted destination. If someone wants to buy something, they often just go direct to Amazon. Amazon’s strategy involves what it calls “the flywheel”, whereby the more things people buy from Amazon, the more they’ll buy from Amazon in future. Amazon builds up a relationship rather than relying on a lot of advertising. Amazon cuts out the middle man and sells direct to customers.

Going viral with content, as BuzzFeed does, may be one answer, but it’s likely temporary. It, too, suffers from a trust problem, and the novelty will wear off:

Saying “I’m going to make this ad go viral” ignores the fact that the vast majority of viral content is ridiculously stupid. The second strategy, then, is the high-volume approach, same as it ever was. When communications systems wither, more and more of what’s left is the advertising dust. Junk mail at your house, in your email; crappy banner ads on MySpace. Platforms make advertising cheaper and cheaper in a scramble to make up revenue through volume.

It’s not just about supplying content. It could be said newspapers are suffering because bundled news is just another form of interruption and misdirection, mainly because it isn’t specifically targeted:

Following The New York Times on Twitter is just like paging through a print newspaper. Each tweet is about something completely unrelated to the tweets before it. And this is the opposite of why people usually follow people and brands online. It’s not surprising that The New York Times have a huge problem with engagement. They have nothing that people can connect and engage with

Eventually, the social networks will likely suffer from a trust problem, if they don’t already. Their reliance on advertising makes them spies. There is a growing awareness of data privacy and users are unlikely to tolerate invasions of privacy, especially if they are offered an alternative. Or perhaps the answer is to give users a cut themselves. Lady Gaga might be onto something.

Friends “selling” (recommending) to friends is a high trust environment.

A Good Approach To SEO Involves Building Consumer Trust

The SERP is low trust. PPC is low trust. A search keyword plus a site that is littered with ads is low trust. So, one good long term strategy is to move from low to high trust advertising.

A high trust environment doesn’t really look like advertising. It could be characterised as a transparent platform. Amazon and Trip Advisor are good examples. They are honest about what they are, and they provide the good along with the bad. It could be something like Wikipedia. Or an advisory site. There are many examples, but it’s fair to say we know it when we see it.

A search on a keyword that finds a specific, relevant site that isn’t an obvious advertisement is high trust. The first visit is the start of a relationship. This is not the time to bombard visitors with your needs. Instead, give the visitor something they can trust. Trip Advisor even spells it out: “Find hotels travelers trust”.

Tesla understands the trust relationship. Recently, they made their patents open-source, which, apart from anything else, is a great form of reputation marketing. It’s clear Tesla is more interested in long term relationships and goodwill than pushing their latest model on you at a special price. Their transparency is endearing.

First, you earn trust. Then you sell them something later. If you don’t earn their trust, then you’re just like any other advertiser. People will compare you. People will seek out information. You’re one of many options, unless you have formed a prior relationship. SEO is a brilliant channel to develop a relationship based on trust. If you’re selling SEO to clients, think about discussing the trust building potential – and value proposition – of SEO with them.

It’s a nice side benefit of SEO. And it’s a hedge against the problems associated with other forms of advertising.

The Rigged Search Game

SEO was all about being clever. Still is, really. However, SEO used to reward the clever, too. The little guy could take on the big guys and munch their lunch by outsmarting them.

It was such an appealing idea.

The promise of the internet was that the old power structures would be swept aside, the playing field would be made level again, and those who played the smartest game would prosper.

Sadly, this promise didn’t last long.

Power

The names may have changed, but traditional power structures were soon reasserted. The old gatekeepers were replaced with the new gatekeepers. The new gatekeepers, like Google, grew fat, rich and powerful. They controlled the game and the game was, once again, rigged in favor of those with the most power. That’s not a Google-specific criticism, it’s just the way commerce works. You get big, you move markets simply by being big and present. In search, we see the power imbalance as a side-effect, namely the way big players are treated in the SERPs compared to small players.

SEO for big, established companies, in terms of strategy, is simple. Make sure the site is crawlable. Run PR campaigns that frequently mention the name of the big, established company – which PR campaigns do anyway – and ensure those mentions include a backlink. Talk to a lot of friendly reporters. Publish content, do so often, and make sure the important content is somewhere near the top.

That’s it.

The market reputation of the entity does most of the grunt work when it comes to ranking. So long as their ship is pointed in the right direction, they’re golden.

The main aim of the SEO who works for a big, established entity is to stop the big, established entity doing something stupid. So long as the SEO can prevent the entity doing stupid things – often a difficult task, granted – the big, established entity will likely dominate their niche simply by virtue of established market power.

That didn’t use to be the case.

When SEO started, and for a number of years after, the little guy could dominate niches by being the most relevant. The little guy could become the most relevant by carefully deconstructing the algorithm and giving the search engine what the search engine wanted. If the search engines weren’t careful, they were in very real danger of getting exactly what they asked for!

That temporary inversion of the traditional power structure made SEO a lot of fun. You did some clever stuff. You rose to the top. You collected the rewards. I think it’s grown less fun now because being clever isn’t enough. SEO works, but not quite as well as it used to for small players as the cost/reward equation favors big players.

Do A Lot Of Clever SEO Stuff, Get Nowhere

These days, a glass ceiling exists. SEOBook members can read a detailed post by Aaron outlining the glass ceiling here.

Here’s how it often plays out…

About 8 months ago we launched one of the most viral pieces of content that we have ever done (particularly for a small site that doesn’t have a huge following) … it was done so well that it was organically referenced/hardcoded into Wikipedia. In addition it was cited on news sites, dozens and dozens of blogs (likely north of 100), a number of colleges, etc. It got like a couple hundred unique linking domains….which effectively doubled the unique linking domains that linked into the parent site. What impact did that have on rankings? Nada.

For link building to work well, the right signals need to exist, too. There need to be high levels of reach and engagement. Big companies tend to have high levels of reach and engagement due to their market position and wider PR and advertising campaigns. This creates search keyword volume, keyword associations, engagement, and frequent mentions in important places, and all this is difficult to compete with if you have a small budget. The exception appears to be in relatively new niches, and in regional markets, where the underlying data concerning engagement, reach and interest is unlikely to be particularly deep and rich.

Yet.

So, the little guy is often fighting a losing battle when it comes to search. Even if they choose a new, fast growing niche, as soon as that niche becomes lucrative enough to attract big players, traditional power will reassert itself. The only long term option for the little guy is to become a big guy, or get bought up by one, or go work for one.

Slavery

Abraham Lincoln thought wage labour was a stage workers pass through, typically in their 20s or early 30s. Eventually, they become self-employed and keep all the profits of their labour.

Adam Smith maintained that markets only work as intended if everyone has enough to participate. Everyone must also have sufficient control over their own means of production. Adam Smith, father of modern capitalism, was not a big fan of corporate capitalism:

Merchants and master manufacturers are . . . the two classes of people who commonly employ the largest capitals, and who by their wealth draw to themselves the greatest share of the public consideration.

A side-effect of big players is they can distort markets. They have more purchasing power and that purchasing power sends a signal about what’s important. To big companies. The result is less diversity.

It’s self-evident that power changes the search game. The search results become more about whoever is the most powerful. It seems ironic that Google started as an upstart outsider. The search results are difficult to conquer if you’re an upstart outsider, but pretty easy to do if you’re already a major player. AdWords, quality score being equal, favours those with deep pockets.

What’s happening is the little guy is getting squeezed out of this landscape and many of them will become slaves.

Huh? “Slaves”? By Aristotle’s and Lincoln’s definition, quite possibly:

If we want to have markets, we have to give everybody an equal chance to get into them, or else they don’t work as a means of social liberation; they operate as a means of enslavement.

Enslavement in the sense that the people with enough power, who can get the market to work on their behalf…

Right — bribing politicians to set up the system so that they accumulate more, and other people end up spending all their time working for them. The difference between selling yourself into slavery and renting yourself into slavery in the ancient world was basically none at all, you know. If Aristotle were here, he’d think most people in a country like England or America were slaves.

What’s happening in search is a microcosm of what is happening elsewhere in society. Markets are dominated and distorted by corporations at the direct expense of the small players. Yes, it’s nothing new, but it hasn’t always been this way in search.

So what is my point?

My point is that if you’re not getting the same business benefits from search as you used to, and the game seems that much harder, then it’s not because you’re not clever. It’s because the game is rigged.

Of course, small companies can prosper. You’ll find many examples of them in the SERPs. But their path to getting there via the search channel is now much longer and doesn’t pay as well as it used to. This means fewer SEOs will be hired by small companies because the cost of effective SEO is rising fast whilst the rewards are shrinking. Meanwhile, the big companies are increasing their digital budgets.

Knowing all this, the small operator can change their approach. The small operator has one advantage. They can be nimble, flexible, and change direction quickly. So, looking forward over the not-too-distant horizon, we either need a plan to take advantage of fast emerging markets before the big guys enter them, or we need a plan to scale, or we need to fight differently, such as taking brand/USP centric approaches.

Or go work for one of the big guys.

As a “slave”. Dude :) (Just kidding)

What’s In A Name?

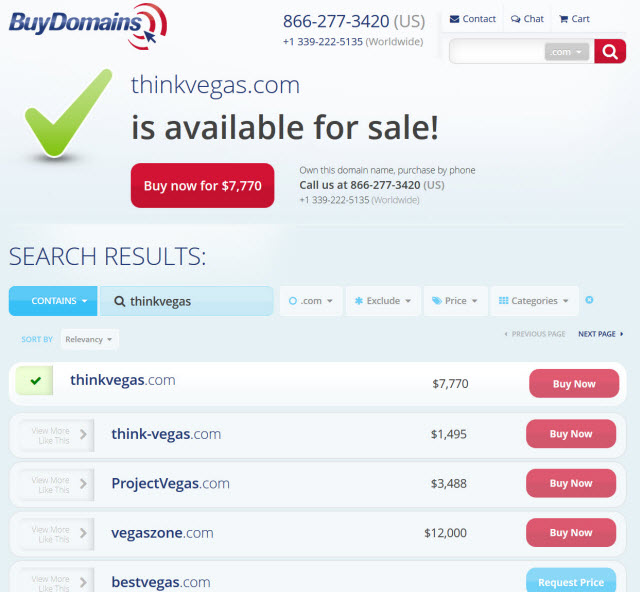

Many SEOs love keyword-loaded domain names. The theory is that domains that feature a keyword will result in a boost in ranking. It’s still a contentious topic:

I’ve seen bloggers, webmasters and search aficionados argue the case around the death of EMDs time and time again, despite the evidence staring them in the face: EMDs are still all over the place. What’s more, do a simple bulk backlink analysis via Majestic, and you will find tons which rank in the top 10 while surrounded by far more authoritative domains.

Whatever the truth about the ranking value of EMDs, most would agree that finding the right language for describing and profiling our business is important.

Terminology Changes

Consider the term “startup”.

This term, which describes a new small business, feels like it has been around forever. Not so. Conduct a search on the time period 1995-1998 and you won’t find results for start-up:

It’s a word that has grown up with the web and sounds sexier than just “business”. Just like the word “consultant” or “boutique” sounds better than “mom and pop” or “1 person business”. (You must remember of course when “sanitation engineer” replaced “trash man”.) I just did a search to see the use of the word startup from the period 1995 to 1998 and came up with zilch in terms of relation to business.

Startup does sound sexier than “mom n pop” or “one person business”, or “a few stoner mates avoiding getting a job”. A pitch to a VC that described the business as a “mom n pop” may not be taken seriously, whereas calling it a startup will.

If we want to be taken seriously by our audience, then finding the audience’s language is important.

SEO or Digital Marketing Or…..?

Has SEO become a dirty word? Has it always been a dirty word?

SEOs don’t tend to see it that way, even if they are aware of the negative connotations. They see SEO as a description of what they do. It’s always been a bit of a misnomer, as we don’t optimize search engines, but for whatever reason, it stuck.

The term SEO is often associated with spam. The ever-amiable Matt Cutts’ videos could be accompanied by a stern, animated wagging finger and a “tut tut tut” subtext. The search engines frown on a lot when it comes to SEO. SEO is permanent frown territory. Contrast this with PPC. PPC does not have that negative connotation. There is no reputation issue in saying you’re a PPC provider.

Over the years, the propaganda exercise behind the “SEO questionable/PPC credible” narrative has been pretty effective. The spammer label, borrowed from the world of email spam, is one SEOs have never managed to shrug off. The search engines have even managed to get SEOs to use the term “spammer” as a point of differentiation. “Spam is what the other SEOs do. Not me, of course.” This just goes to show how effective the propaganda has been. Once SEOs used spam to describe their own industry, the fate of the term SEO was sealed. After all, you seldom hear doctors, lawyers and retailers defining what they do against the bad actors in their sector.

As traffic acquisition gets broader, encompassing PR and social media, new titles like Digital Marketer have emerged. These terms have the advantage of not being weighed down by historical baggage. I’m not suggesting people should name themselves one thing or the other. Rather, consider these terms in a strategic sense. What terms best describe who you are and what you do, and cast you in the best possible light to those you wish to serve, at this point in time?

The language moves.

Generic Name Or Brandable?

Keyword loaded names, like business.com, are both valuable and costly. The downside of such names, besides being costly, is they severely limit branding opportunities. The better search engines get, and the more people use social media and other referral channels, the less these generic names will matter.

What matters most in crowded markets is being memorable.

A memorable, unique name is a valuable search commodity. If that name is always associated with you and no one else, then you’ll always be found in the search results. SEMRush, MajesticSEO, and Moz are unlikely to be confused with other companies. “Search Engine Tools”, not so much.

Will the generic name become less valuable because generic names are perhaps only useful at the start of an industry? How mature is your industry? How can you best get differentiation in a crowded market through language alone?

The Strategy Behind Naming

Here are a few points to consider.

1. Start Early

Names are often an afterthought. People construct business plans. They think about how their website looks. They think about their target market. They don’t yet have a name. Try starting with a name and designing everything else around it. The name can set the tone of every other decision you make.

2. Positioning

In mature markets, differentiation is strategically important. Is your proposed name similar to your competitors’ names? Is it unique enough? If you’re in at the start of a new industry, would a generic, keyword-loaded name work best? Is it time for a name change because you’ve got lost in the crowd? Has your business focus changed?

Does your name go beyond mere description and create an emotional connection with your audience? Names that take on their own meaning, like Amazon, are more likely to grow with the business, rather than have the business outgrow the name. Imagine if Amazon.com had called itself Books.com.

3. What Are You All About?

Are you a high-touch consultative company? Or a product based, functional company? Are you on the cutting edge? Or are you catering to a market who like things just the way they are?

Writing down a short paragraph about how you see yourself, how the customers see you, and your position in the market, will help you come up with suitable names. Better yet, write a story.

4. Descriptive Vs Differentiation

Descriptive can be safe. “Internet Search Engine” or “Web Crawler”. There’s no confusing what those businesses do. Compare them with the name Google. Google gives you no idea what the company does, but it’s more iconic, quirky and memorable. There’s no doubt it has grown with the company and become a natural part of their identity in ways that “Internet Search Engine” never could.

Sometimes, mixing descriptions to create something quirky works well. Airbnb is a good example. The juxtaposition of those two words creates something new, whilst at the same time having a ring of the familiar. It also helps to know whether the domain name is available, and whether the name can be trademarked. The more generic the name, the harder it is to trademark, and the less likely the domain name is to be available.

5. Does Your Name Travel Well?

Hopefully, your name isn’t a swear word in another culture, and doesn’t carry negative connotations. Here are a few comical examples where it went wrong:

Nokia’s new smartphone translates in Spanish slang to prostitute, which is unfortunate, but at least the cell phone giant is in good company. The name of international car manufacturer Peugeot translates in southern China to Biao zhi, which means the same thing.

This is not such an issue if your market is local, but if you plan to expand into other markets in future, then it pays to consider this angle.

6. There’s No Right Answer

There is probably no universally good name. At least, when you first come up with a name, you can be assured some people will hate it, some will be indifferent, and some will like it – no matter what name you choose.

This is why it’s important to ground the subjective name-choosing process in something concrete, like your business strategy, or positioning in the market. Your name could have come before the business plan. Or it could reflect it. You then test your name with people who will likely buy your product or service. It doesn’t matter what your Mom or your friends think of the name, it’s what you think of the name and what your potential customers think of the name that counts.

7. Diluting Your Name

Does each service line and product in your company need a distinctive name? Maybe, but the risk is that it could dilute the brand. Consider Virgin. They put the exact same name on completely different service lines. That same brand name carries the values and spirit of Virgin to whatever new enterprise they undertake. This also reduces the potential for customer confusion.

Creating a different name for some of your offerings might be a good idea if, say, you’re predominantly a service-based company, yet you also have one product that you may spin off at some point in future. You may want to clearly differentiate the product from the service so as not to dilute the focus of the service side. Again, this is where strategy comes in. If you’re clear about what your company does, and your position in the market, then it becomes easier to decide how to name new aspects of your business. Or whether you should give them a name at all.

8. Is Your Name Still Relevant?

Brands evolve. They can appear outdated if the market moves on. On the other hand, they can build equity through longevity. It seems especially difficult to change internet company names as the inbound linking might be compromised as a result. Transferring the equity of a brand is typically expensive and difficult. All the more reason to place sufficient importance on naming to begin with.

9. More Than A Name

The branding process is more than just a name and identity. It’s the language of your company. It’s the language of your customers. It becomes a keyword on which people search. Your customers have got to remember it. You, and your employees, need to be proud of it. It sets you apart.

The language is important. And strategic.

Pandas, Penguins, and Popsicles

Are you still working through your newsfeed of SEO material on the 101 ways to get out of Panda 4.0 written by people that have never actually practiced SEO on their own sites? Aaron and I had concluded that what was rolling through was Panda before it was announced that it was Panda, but I’m not going to walk here on my treadmill and knock out yet another post on the things you should be doing if you were gut punched by that negative a priori algorithm (hat tip to Terry, another fine SEObook member, for pointing out to me those public discussions that showed the philosophical evolutionary shift towards the default assumption that sites likely deserve to be punished). I’d say 90% of those posts are thinly veiled sales pitches; I should know since I sell infographics to support my nachos habit. Speaking of infographics, there’s already a great one that covers recovery strategies that still work right here.

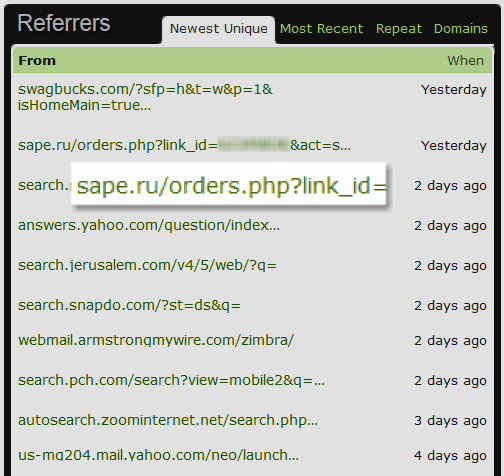

Should I write about Penguin? Analysis of that beast consumed the better part of 2 years of my waking time. Nope. Again, I think it has already been adequately covered in a previous blog post. There’s nothing particularly new to report there either, since the next update may be completely different, might be just another refresh that doesn’t take into account those slapped in the 1.0 incarnation of the update, or may actually be the Penguin everyone hopes it is, taking into account the countless hours agencies have spent disavowing links and spamming me with fake legal threats should I not remove links they themselves placed. I wouldn’t hold your breath on that last one. Outside of crowdsourcing pain for future manual penalties, I don’t expect much relief on that front.

Instead, I think I’m going to talk about popsicles. That seems like the kind of tripe that an SEO blog might discuss. I bet I can make it work though. I’m a fat dude in the Phoenix area and we already had our first 100F day, so I’m thinking of frozen treats. Strap in.

Search tactics, and I’d even go so far as to say certain strategies, are like popsicles. When they are brand new they are cool and refreshing, but once exposed to the public heat they fade…fast. Really fast. Into a goopy, sticky mess, as users of ALN and BMR can probably tell you.

Bear with me.

If you have a tactic that works, why would you expose it to the public? Nothing good can come of that. Sure, you have a tactic that works 100% but since I’m a loyal subscriber you’re willing to share it with me for $297. Seems legit. I’m not saying all services/products pitched this way are inherently ‘bad’, I’m just saying you aren’t going to get a magic bullet, let alone one hand-wrapped and delivered by filling out a single Wufoo form…sans report.

Would you share with a really close friend? I suppose, but even still the popsicle isn’t going to last as long since it is now being consumed at an accelerated rate. There’s the thought of germs, contamination, and other nasty thoughts that’d prevent me from going down that route. Cue the “Two SEOs, one popsicle” reaction videos. No. There are two ways to make the best use out of that popsicle.

- Practitioner: eat it quietly, savor it, make it last.

- Strategist w/ resources: figure out the recipe and mass produce it as quickly as possible, knowing that after enough public heat is on, the popsicles will start melting before they can be eaten, and no one likes that weird, warm orange sticky stuff that tastes like a glucose tolerance test.

There’s another caveat to the two above scenarios. Even if you’re a strategist with deep resources, unless you’re willing to test on your own sites, you’re just effectively selling smoke on an unproven tactic.

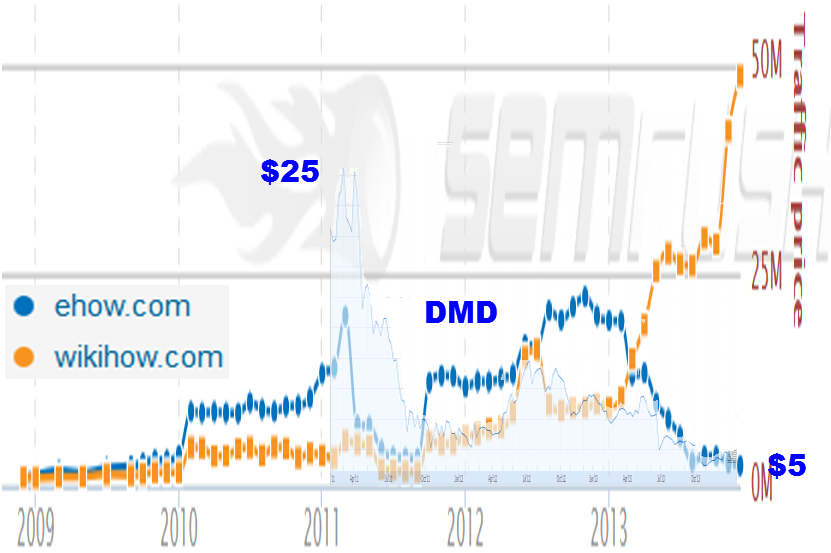

So there you have it, tactics are like popsicles. Disappointed? Good. I’ve been doing SEO since 1997, so here’s a secret: try to create engaging content, supported by authoritative off-page signals. There’s an ebb and flow to this of course, but it can be translated across the full black/white spectrum. Markov content in a free WordPress theme can be engaging when it is cloaked with actionable imagery, with a certain percentage of back buttons disabled, or when you make the advertising more compelling than the content (just ask eHow). Similarly, well-researched interactive infographics can engage the user on the other side of the spectrum…they’re just more expensive. Comment spam and parasitic hosting on “authority” sites can tap into those authority signals on the dark side, as can a thorough native campaign across a bunch of relevant sites backed by a PR campaign, TV commercials, and radio spots on the light side. Budget and objectives are the only difference.

Go enjoy a popsicle everyone. Summer is here; I expect a lot more heat from Google, so you might need one.

About the author: Joe Sinkwitz is the Chief Revenue Officer at CopyPress. He {Tweets / posts / comments / shares his thoughts} on navigating the evolving SEO landscape on Twitter here.

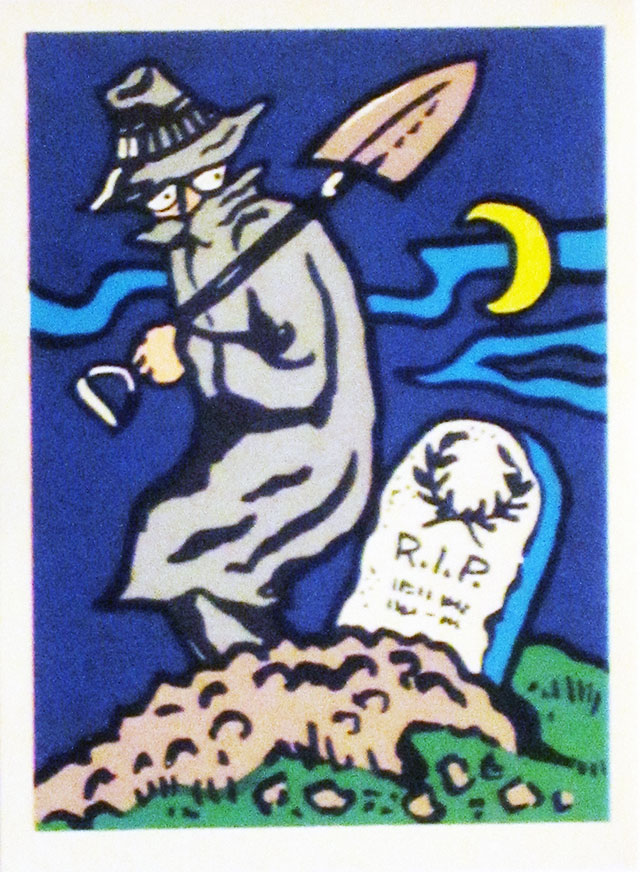

Please Remove My Link. Or Else.

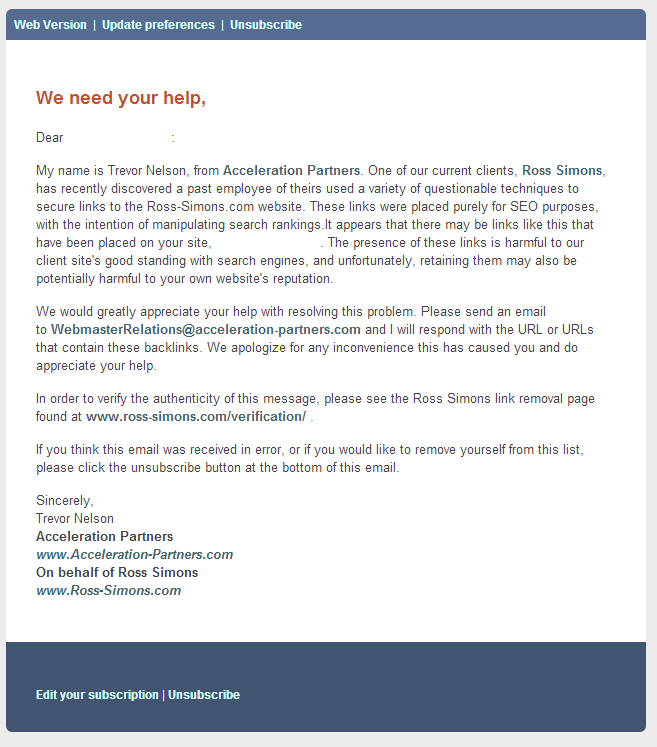

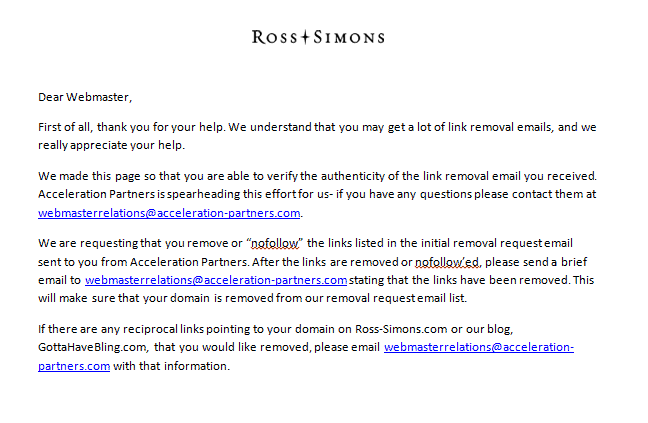

Getting links removed is a tedious business.

It’s just as tedious for the site owner who must remove the links. Google’s annoying practice of “suggesting” webmasters jump through hoops in order to physically remove links that the webmaster suspects are bad, rather than Google simply ignoring the links that they’ve internally flagged, is causing frustration.

Is it meant as punishment? If so, it’s doing nothing to endear Google to webmasters. Is it a smokescreen, i.e. Google doesn’t know which links are bad, but having webmasters declare them helps it build up a more comprehensive database? A bit of both? It might also be adding costs to SEO in order to put SEO out of reach of small companies. Perhaps it’s a red herring to make people think links are more important than they actually are.

Hard to be sure.

Collateral Damage

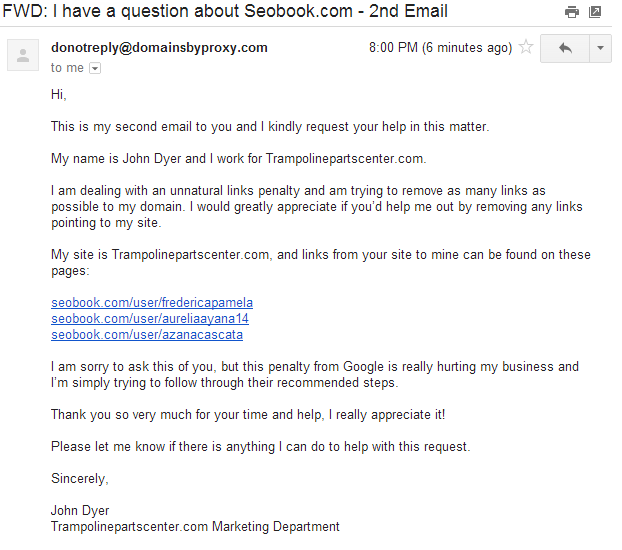

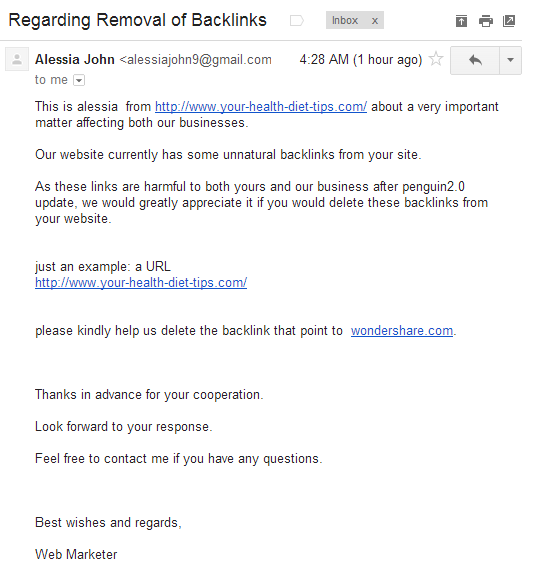

SEOs are accustomed to search engines being coy, punitive and oblique. SEOs accept it as part of the game. However, it becomes rather interesting when webmasters who are not connected to SEO get caught up in the collateral damage:

I received an interesting email the other day from a company we linked to from one of our websites. In short, the email was a request to remove links from our site to their site. We linked to this company on our own accord, with no prior solicitation, because we felt it would be useful to our site visitors, which is generally why people link to things on the Internet.

And check out the subsequent discussion on Hacker News. Matt Cutts’ first post is somewhat disingenuous:

Situation #1 is by far the most common. If a site gets dinged for linkspam and works to clean up their links, a lot of them send out a bunch of link removal requests on their own prerogative

Webmasters who receive the notification are encouraged by Google to clean up their backlinks, because if they don’t, then their rankings suffer.

But, essentially from our point of view when it comes to unnatural links to your website we want to see that you’ve taken significant steps to actually remove it from the web but if there are some links that you can’t remove yourself or there are some that require payment to be removed then having those in the disavow file is fine as well.

(Emphasis mine)

So, of course webmasters who have received a notification from Google are going to contact websites to get links removed. Google have stated they want to see that the webmaster has gone to considerable effort to remove them, rather than simply use the disavow tool.

The inevitable result is that a webmaster who links to anyone who has received a bad links notification may receive the latest form of email spam known as the “please remove my link” email. For some webmasters, this email has become more common than the “someone has left you millions in a Nigerian bank account” gambit, and is just as persistent and annoying.

From The Webmaster’s Perspective

Webmasters could justifiably add the phrase “please remove my link” and the word “disavow” to their spam filters.

Let’s assume this webmaster isn’t a bad neighbourhood and is simply caught in the cross-fire. The SEO assumes, perhaps incorrectly, the link is bad and requests a take-down. From the webmaster’s perspective, they incur a time cost dealing with the link removal requests. A lone request might take a few minutes to physically remove – but hang on a minute – how does the webmaster know this request is coming from the site owner and not from some dishonest competitor? Ownership takes time to verify. And why would the webmaster want to take down this link, anyway? Presumably, they put it up because they deemed it useful to their audience. Or, perhaps some bot put the link there – perhaps as a forum or blog comment link – against the webmaster’s wishes – and now, to add insult to injury, the SEO wants the webmaster to spend his time taking it down!

Even so, this might be okay if it’s only one link. It doesn’t take long to remove. But, for webmasters who own large sites, it quickly becomes a chore. For large sites with thousands of outbound links built up over years, removal requests can pile up. That’s when the spam filter kicks in.

Then come the veiled threats. “Thanks for linking to us. This is no reflection on you, but if you don’t remove my link I’ll be forced to disavow you and your site will look bad in Google. I don’t want to do this, but I may have to.”

What a guy.

How does the webmaster know the SEO won’t do that anyway? Isn’t that exactly what some SEO conference speakers have been telling other SEOs to do regardless of whether the webmaster takes the link down or not?

So, for a webmaster caught in the cross-fire, there’s not much incentive to remove links, especially if s/he’s read Matt’s suggestion:

higherpurpose, nowhere in the original article did it say that Google said the link was bad. This was a request from a random site (we don’t know which one, since the post dropped that detail), and the op can certainly ignore the link removal request.

In some cases Google does specify links:

We’ve reviewed the links to your site and we still believe that some of them are outside our quality guidelines.

Sample URLs:

ask.metafilter.com/194610/get-me-and-my-stuff-from-point-a-to-point-b-possibly-via-point-c

Please correct or remove all inorganic links, not limited to the samples provided above. This may involve contacting webmasters of the sites with the inorganic links on them.

And they make errors when they specify those links. They’ve flagged DMOZ & other similar links: “Every time I investigate these “unnatural link” claims, I find a comment by a longtime member of MetaFilter in good standing trying to help someone out, usually trying to identify something on Ask MetaFilter.”

Changing Behaviour

Then the webmaster starts thinking.

“Hmmm…maybe linking out will hurt me! Google might penalize me or, even worse, I’ll get flooded with more and more “please remove my link” spam in future.”

So what happens?

The webmaster becomes very wary about linking out. David Naylor mentioned an increasing number of sites adopting a “no linking” policy. Perhaps the webmaster no-follows everything as a precaution. Far from being the life-giving veins of the web, links are seen as potentially malignant. If all outbound links are made no-follow, perhaps the chance of being banned and flooded with “please remove my link” spam is reduced. Then again, even nofollowed links are getting removal requests.

As more webmasters start to see links as problematic, fewer legitimate sites receive links. Meanwhile, the blackhat, who sees their sites occasionally getting burned as a cost of doing business, will likely see their sites rise, as theirs will be the sites getting all the links, served up from their curated link networks.

A commenter notes:

The Google webspam team seems to prefer psychology over technology to solve the problem, especially recently. Nearly everything that’s come out of Matt Cutts’ mouth in the last 18 months or so has been a scare tactic.

IMO all this does is further encourage the development of “churn and burn” websites from blackhats who have “being penalized” in their business plan. So why should I risk all the time and effort it takes to generate quality web content when it could all come crashing down because an imperfect and overzealous algorithm thinks it’s spam? Or worse, some intern or non-Google employee doing a manual review wrongly decides the site violates webmaster guidelines?

And what’s the point of providing great content when some competitor can just take you out with a dedicated negative SEO campaign, or if Google hits you with a false positive? If most of your traffic comes from Google, then the risk of the web publishing model increases.

Is Google broken? Or is your site broken? That’s the question any webmaster asks when she sees her Google click-throughs drop dramatically. It’s a question that Matt Haughey, founder of legendary Internet forum MetaFilter, has been asking himself for the last year and a half, as declining ad revenues have forced the long-running site to lay off several of its staff.

Then again, Google may just not want what MetaFilter has to offer anymore.

(In)Unintended Consequences

Could this be anti-competitive practice from Google? Are the sites getting hit with penalties predominantly commercial sites? It would be interesting to see how many of them are non-commercial. If most are commercial, is this a way to encourage commercial sites to use AdWords as it becomes harder and harder to get a link by organic means? If all it did was raise the cost of doing SEO, it would still be doing its job.

I have no idea, but you could see why people might ask that question.

Let’s say it’s benevolent and Google is simply working towards better results. The unintended consequence is that webmasters will think twice about linking out. And if that happens, then their linking behaviour will start to become more exclusive. When links become harder to get and more problematic, PPC and social media are going to look that much more attractive.

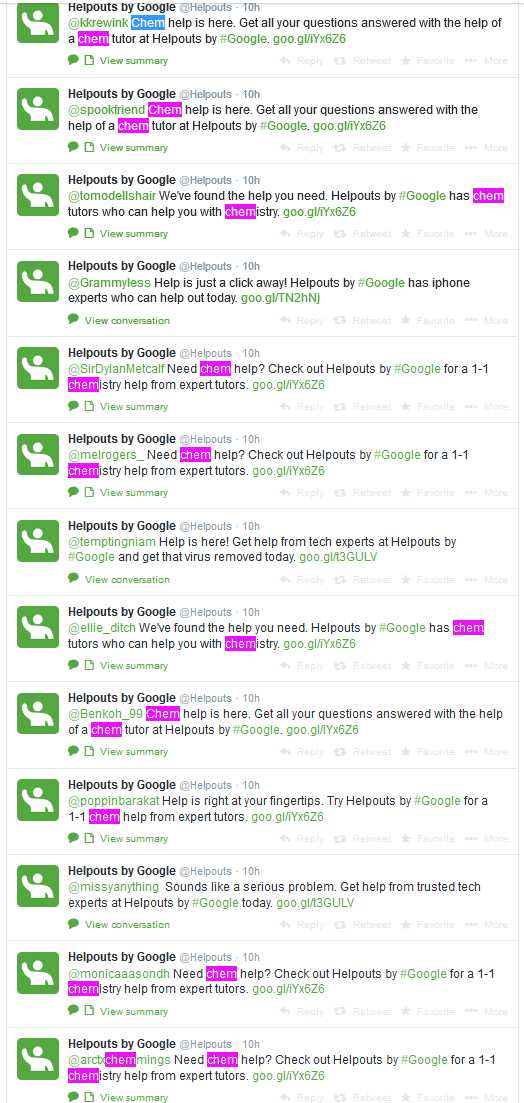

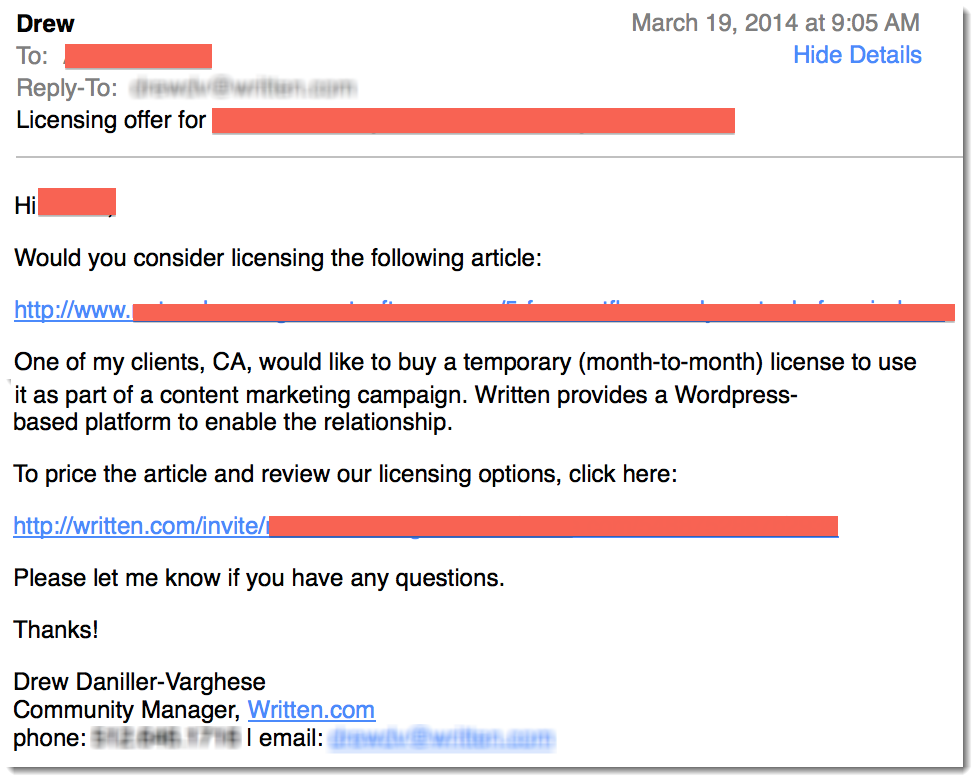

Google Helpouts Twitter Spam (Beta)

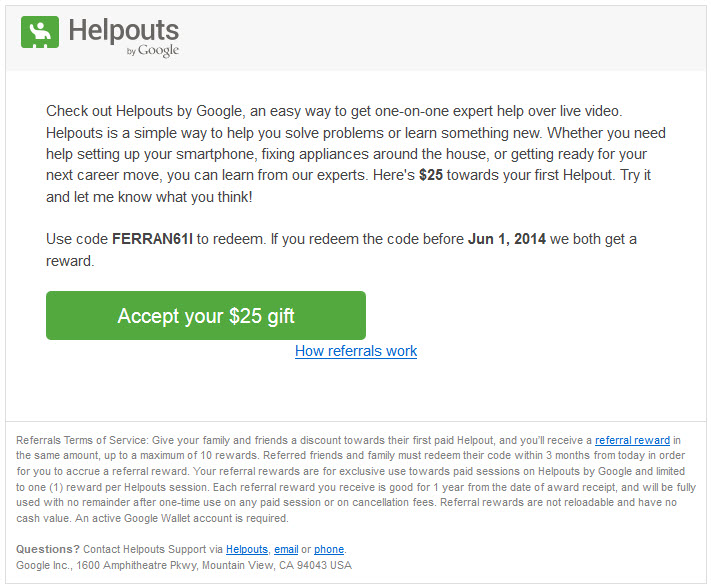

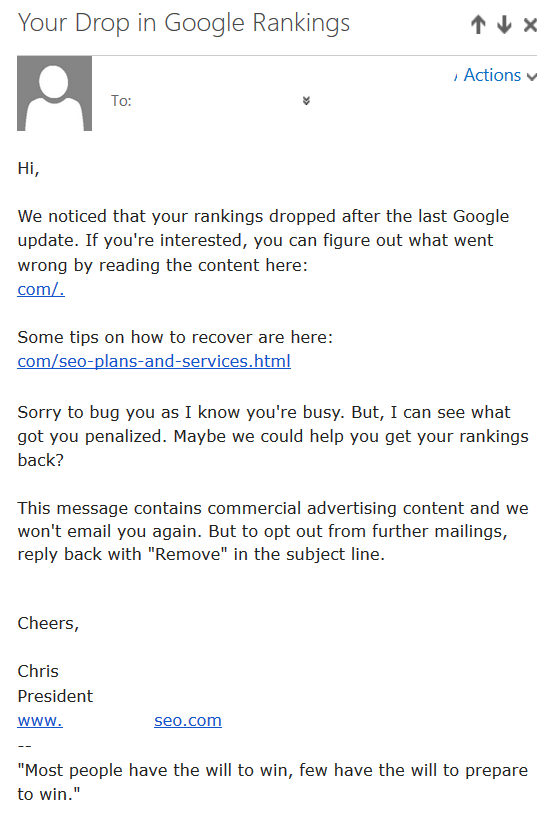

Google is desperate to promote Helpouts. I first realized this when I saw the following spam message in my email inbox.

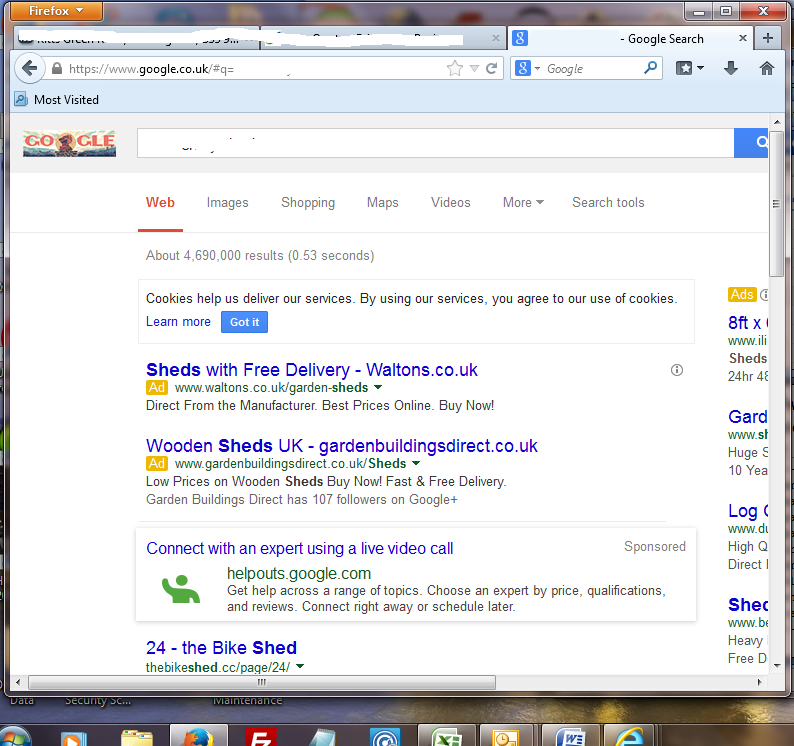

Shortly after, a friend sent me a screenshot of a onebox promoting Helpouts in the SERPs.

That’s Google’s monopoly and those are Google’s services. It is not like they are:

- being anti-competitive

- paying others to spam other websites

Let’s slow down though. Maybe I am getting ahead of myself:

Google has its own remote technology support service similar to Mr. Gupta’s called Google Helpouts. Mr. Gupta’s complaint alleges Google may have been blocking his advertisements so Google Helpouts could get more customers.

Oh, and that first message looked like it could have been an affiliate link. Was it?

Hmm

Let me see

What do we have here?

Google Helpouts connects you to a variety of experts–from doctors, parenting experts, tutors, personal trainers, and more–over live video call. The Google Helpouts Ambassador Program is a unique opportunity to spread the word about Helpouts, earn money, and influence a new Google product–all on your own schedule.

As an Ambassador, you will:

- Earn extra income–receive $25 for each friend you refer who takes their first paid Helpout, up to $1,000 per month for the first 4 months.

- Give direct feedback and help shape a new Google product

- Join a community of innovative Ambassadors around the country

- Receive a Helpouts gift and the chance to win prizes

We all know HELPFUL hotel affiliate websites are spam, but maybe Google HELPouts affiliate marketing isn’t spam.

After all, Google did promise to teach people how to do their affiliate marketing professionally: “We will provide you with an Ambassador Toolkit with tips and suggestions on creative ways you can spread the word. You are encouraged to get creative, be innovative, and utilize different networks (i.e. social media, word of mouth, groups & associations, blogs, etc.) to help you.”

Of course the best way to lead is by example.

And lead they do.

They are highly inclusive in their approach.

@homosexualwentz Help is here! Use the code IFOUNDHELP for $20 off a 1-on-1 session w/ a trusted tech expert today: http://t.co/OtiePzjOIS— Helpouts by Google (@Helpouts) May 12, 2014

Check out this awesome Twitter usage

They’ve made more Tweets in the last few months than I’ve made in 7 years. There are 1,440 minutes in a day, so it is quite an achievement to make over 800 Tweets in a day.

@Helpouts did google helpouts really just reply to a tweet of mine?— Emma Klinger (@Emma_Klinger) May 15, 2014

You and many many many many thousands of others, Emma.

Some minutes they are making 2 or 3 Tweets.

And with that sort of engagement & the Google brand name, surely they have built a strong following.

Uh, nope.

They are following over 500 people and have about 4,000 followers. And the 4,000 number is generous, as some of them are people who sell on that platform or are affiliates pushing it.

Let’s take a look at the zero moment of truth:

@Helpouts I really don’t want to pay for support.— Krishna M. Sadasivam (@pcweenies) May 14, 2014

Thanks for your unsolicited commercial message, but I am not interested.

@baileyboo612 @Helpouts what the heck??— logan™ (@logangaspard) May 13, 2014

You’re confusing me. Some context would help.

@Helpouts Hi, who can help me? , do u have an email for talk there?— Pamela López (@karlismkt) May 15, 2014

No email support, but support “sessions”? What is this?

@Helpouts $1.00 a minute? No thanks…— (@Owennn_Marsh) May 14, 2014

Oh, I get it now. Is this a spam bot promoting phone sex?

RT “@Helpouts: Help is just a click away! Helpouts by #Google has Apple tech experts who can help out today.” … No, just no— Johnny Blanchard (@JonnBlanchard) May 15, 2014

Ah, so it isn’t phone sex, but you can help with iPhones. Um, did we forget that whole Steve Jobs thermonuclear war bit? And why is Google offering support for Apple products when Larry Page stated the whole idea of customer support was ridiculous?

@Helpouts MAN I’VE ALREADY TRIED GOOGLE— mya ☪ (@gleebegay) May 15, 2014

OK, so maybe this is more of the same.

@Helpouts hahaha I’m not going to pay money for things that probably won’t even help me.— Nate Morse (@nt4343) May 16, 2014

Cynical, aren’t we?

@Helpouts no, that’s too expensive— Mahalah (@Mahalah_faye) May 16, 2014

And cheap?

@Helpouts im not paying— ononnah❄ (@ononnahh) May 15, 2014

Really cheap. :(

@Helpouts fu I’m not paying for help— Sam (@ttihweimmas) May 14, 2014

And angry?

@Helpouts Chill out!— Atsuro Kihara (@atsuro_enbot) May 15, 2014

And testy?

@Helpouts then why the fuck arent you fixing my mentions if youre such “trusted tech experts”— bianca // KIAN 4/6?? (@lawlorfflower) May 13, 2014

And rude?

@Helpouts no— Abby Williams (@abbywilliams96) May 15, 2014

And curt?

@Helpouts no— spongebobs weave (@googlingcraic) May 15, 2014

Didn’t you already say that???

@Helpouts fuck you— Samuel (@SamuelJones69) May 14, 2014

Didn’t you already say that???

@Helpouts what the heck— hey cal (@holycalum) May 14, 2014

It seems we are having issues communicating here.

@Helpouts ok I fucks with it. Next time try not to spy on my tweets lol— E (@Easy_Eli) May 14, 2014

I’m not sure it is fair to call it spying a half day late.

That was 11 hours ago @Helpouts pic.twitter.com/jRVV7rgtdW— 28 (@5SOSmaryam) May 14, 2014

Better late than never.

@Helpouts I fixed it already maybe? Thank you tho automatic message— Megar (@AH_Megan6) May 15, 2014

Even if automated.

Good catch Megar, as Google has a creepy patent on automating social spam.

@Helpouts Sorry, but I’ll just ask my friends because they are free and I need to do the homework right now.— Emma Raye Mosier (@EmmaRayeMosier) May 15, 2014

Who are your real Google+ friends? Have they all got the bends? Is Google really sinking this low?

@Helpouts Classmates over corporations, Google. I’m not interested.

BTW please don’t censor the European internet.— Hash (@saikorhythm) May 15, 2014

Every journey of a thousand miles begins with a single step.

@Helpouts This is hilarious. If I ever need it I know you guys have this, thanks!— Gabriella (@Gaby441) May 14, 2014

Humorous or sad…depending on your view.

@Helpouts thanks! is there also a Helpout on how to shameless self promote yourself through twitter? just wondering— Etta Grover (@ettafetacheese) May 14, 2014

There’s no wrong way to eat a Reese’s.

Google has THOUSANDS of opportunities available for you to learn how to spam Twitter.

As @Helpouts repeatedly Tweets: “Use the code IFOUNDHELP for $20 off” :D

++++++++

All the above Tweets were from the last few days.

The same sort of anti-social aggro spamming campaign has been going on far longer.

@Helpouts fuck off— Dagger Anderson (@daggeranderson) May 8, 2014

When Twitter users said “no thank you”…

@Helpouts THIS SHIT AINT FREE— mariam / 20 (@psychoticamila) May 8, 2014

…Google quickly responded like a Marmaris rug salesman

@Helpouts leave me a lone— mariam / 20 (@psychoticamila) May 9, 2014

Google has a magic chemistry for being able to…

We need to fight spam messages (with MOAR spam messages).

@shelleyamybeth Lets try to get rid of all those Spam messages. Check out #Helpouts by Google for Wordpress help. http://t.co/eTTOMnpIhF— Helpouts by Google (@Helpouts) March 25, 2014

In a recent YouTube video Matt Cutts said: “We got less spam and so it looks like people don’t like the new algorithms as much.” Based on that, perhaps we can presume Helpouts is engaging in a guerrilla marketing campaign to improve user satisfaction with the algorithms.

Or maybe Google is spamming Twitter so they can justify banning Twitter.

Or maybe this is Google’s example of how we should market websites which don’t have the luxury of hard-coding at the top of the search results.

Or maybe Google wasn’t responsible for any of this & once again it was “a contractor.”

What’s Wrong With A/B Testing

A/B testing is an internet marketing standard. In order to optimize response rates, you compare one page against another. You run with the page that gives you the best response rates.
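Comparing one page against another means splitting traffic between them. A minimal sketch, assuming each visitor carries a stable identifier (for example from a first-party cookie; the `visitor-…` IDs below are hypothetical): hashing the ID gives each visitor a consistent page, so returning visitors don't flip between versions while overall traffic still splits roughly evenly.

```python
import hashlib


def assign_variant(visitor_id: str, variants=("A", "B")) -> str:
    """Deterministically assign a visitor to one of the variants.

    Hashing the (hypothetical) visitor ID means the same visitor always
    sees the same page, while visitors overall split roughly evenly.
    """
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Usage: split 10,000 hypothetical visitors between pages A and B.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```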

But anyone who has tried A/B testing will know that whilst it sounds simple in concept, it can be problematic in execution. For example, it can be difficult to determine if what you’re seeing is a tangible difference in customer behaviour or simply a result of chance. Is A/B testing an appropriate choice in all cases? Or is it best suited to specific applications? Does A/B testing obscure what customers really want?

In this article, we’ll look at some of the gotchas for those new to A/B testing.

1. Insufficient Sample Size

You set up a test. You’ve got one page featuring call to action A and one page featuring call to action B. You enable your PPC campaign and leave it running for a day.

When you stop the test, you find that call-to-action A converted at twice the rate of call-to-action B. So call-to-action A is the winner, and we should run with it and eliminate option B.

But this would be a mistake.

The sample size may be insufficient. If we only tested one hundred clicks, we might see a large difference in results between the two pages that doesn’t show up once we get to 1,000 clicks. In fact, the result may even be reversed!
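A common way to check whether a difference like this could just be chance is a two-proportion z-test. A minimal sketch; the conversion counts are hypothetical (4 vs. 2 conversions from 100 clicks each, i.e. A converting at twice B’s rate):

```python
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). Uses the pooled standard error; this normal
    approximation gets rough when conversion counts are very small.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (p_a - p_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: A converts 4 of 100 clicks, B converts 2 of 100.
z, p = two_proportion_z_test(4, 100, 2, 100)
print(f"100 clicks each:   z={z:.2f}, p={p:.2f}")

# The same rates, if they held over 1,000 clicks per page.
z, p = two_proportion_z_test(40, 1000, 20, 1000)
print(f"1,000 clicks each: z={z:.2f}, p={p:.3f}")
```

With 100 clicks per page, p comes out around 0.41, so a gap this size is entirely plausible as chance. If the same rates held over 1,000 clicks per page, p would be about 0.009.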

So, how do we determine a sample size large enough for the result to be statistically significant? This excellent article explains the maths. However, there are various online sample size calculators that will do the calculations for you, including Evan’s. Most A/B testing tools include sample size calculators, but it’s a good idea to understand what they’re calculating, and how, to ensure the accuracy of your tests.

In short, make sure you’ve tested enough of the audience to determine a trend.
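For a sense of what those calculators compute, here is a minimal sketch of the standard normal-approximation formula for the visitors needed per variant, assuming a two-sided test at 5% significance with 80% power (common defaults, not requirements). The baseline and target conversion rates are hypothetical:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_base, p_target, alpha=0.05, power=0.8):
    """Visitors needed in EACH variant to detect a change from p_base to
    p_target with a two-sided two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha=0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for power=0.8
    p_bar = (p_base + p_target) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_base * (1 - p_base) + p_target * (1 - p_target))
    ) ** 2
    return math.ceil(numerator / (p_target - p_base) ** 2)


# Hypothetical: baseline converts at 2%, we want to detect a lift to 4%.
print(sample_size_per_variant(0.02, 0.04))
# A smaller lift, 2% to 2.5%, needs far more traffic.
print(sample_size_per_variant(0.02, 0.025))
```

Detecting a lift from 2% to 4% needs a little over 1,100 visitors per page; detecting 2% to 2.5% needs roughly 13,800. The smaller the difference you care about, the more traffic you need.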

2. Collateral Damage

We might want to test a call-to-action metric, such as the number of people who click the link on a landing page. We find that a lot more people click the link when we use the term “find out more” than when we use the term “buy now”.

Great, right?

But what if the conversion rate for those who actually make a purchase falls as a result? We achieved higher click-thrus on one landing page at the expense of actual sales.

This is why it’s important to be clear about the end goal when designing and executing tests. It’s also important to look at the process as a whole, especially when we’re chopping the process up into bits for testing purposes. Does a change in one place affect something else further down the line?

In this example, you might A/B test the landing page whilst keeping an eye on your total customer numbers, deeming the change effective only if customer numbers also rise. If your aim was only to increase click-thrus, say to boost quality scores, then the change was effective.
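A minimal sketch of that rule, assuming you can attribute both link clicks and purchases to the page version each visitor saw. The `VariantResult` class, the `change_is_effective` function and all the numbers are hypothetical, for illustration only:

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int
    clicks: int     # clicks on the call-to-action link
    purchases: int  # completed sales further down the funnel

    @property
    def click_rate(self) -> float:
        return self.clicks / self.visitors

    @property
    def purchase_rate(self) -> float:
        # Purchases per visitor (not per click), so it reflects the whole funnel.
        return self.purchases / self.visitors


def change_is_effective(control: VariantResult, variant: VariantResult, goal: str) -> bool:
    """Judge the variant against the end goal, not just the metric that moved."""
    if goal == "clicks":
        return variant.click_rate > control.click_rate
    if goal == "sales":
        return variant.purchase_rate > control.purchase_rate
    raise ValueError(f"unknown goal: {goal!r}")


# Hypothetical results for the "buy now" vs "find out more" wording.
buy_now = VariantResult("buy now", visitors=1000, clicks=80, purchases=20)
find_out_more = VariantResult("find out more", visitors=1000, clicks=150, purchases=12)

print(change_is_effective(buy_now, find_out_more, goal="clicks"))  # True
print(change_is_effective(buy_now, find_out_more, goal="sales"))   # False
```

Here the “find out more” wording wins on clicks but loses on sales. The comparison ignores chance; in practice you’d also check that each difference is statistically significant before acting on it.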

3. What, Not Why

In the example above, we know the “what”. We changed the wording of a call-to-action link, and we achieved higher click-thrus, although we’re still in the dark as to why. We’re also in the dark as to why the change of wording resulted in fewer sales.

Was it because we attracted more people who were information seekers? Were buyers confused about the nature of the site? Did visitors think they couldn’t buy from us? Were they price shoppers who wanted to compare price information up front?

We don’t really know.

But that’s good, so long as we keep asking questions. These types of questions lead to more ideas for A/B tests. By turning testing into an ongoing process, supported by asking more and hopefully better questions, we’re more likely to discover a whole range of “whys”.

4. Small Might Be A Problem

If you’re a small company competing directly with big companies, you may already be on the back foot when it comes to A/B testing.

It’s clear that its very modularity can cause problems. But what about in cases where the number of tests that can be run at once is low? While A/B testing makes sense on big websites where you can run hundreds of tests per day and have hundreds of thousands of hits, only a few offers can be tested at one time in cases like direct mail. The variance that these tests reveal is often so low that any meaningful statistical analysis is impossible.

Put simply, you might not have the traffic to generate statistically significant results. There’s no easy way around this problem, but the answer may lie in getting tricky with the maths.

Experimental design massively and deliberately increases the amount of variance in direct marketing campaigns. It lets marketers project the impact of many variables by testing just a few of them. Mathematical formulas use a subset of combinations of variables to represent the complexity of all the original variables. That allows the marketing organization to more quickly adjust messages and offers and, based on the responses, to improve marketing effectiveness and the company’s overall economics
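One common experimental-design technique of this kind is the fractional factorial design. The quoted passage doesn’t say which method it has in mind, so treat this as an illustrative sketch: with three elements of a mailing that each have two versions (the factor names are hypothetical), a half-fraction design tests 4 combinations instead of all 8.

```python
import math
from itertools import product


def half_fraction_design(factors):
    """2^(k-1) fractional factorial design for k two-level factors.

    Levels are coded -1/+1. The first k-1 factors take every combination;
    the last factor's level is the product of the others, so only half of
    the 2^k possible combinations are run.
    """
    *base, last = factors
    runs = []
    for levels in product((-1, 1), repeat=len(base)):
        run = dict(zip(base, levels))
        run[last] = math.prod(levels)
        runs.append(run)
    return runs


# Hypothetical direct-mail factors, each with two versions (-1 / +1).
design = half_fraction_design(["headline", "offer", "envelope"])
for run in design:
    print(run)
```

The saving has a cost: in this 4-run design, each element’s effect is confounded with the interaction of the other two (for example, the envelope effect can’t be separated from a headline × offer interaction), so it works best when those interactions are expected to be small.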

Another option: if you’re certain a bigger competitor is running A/B tests and achieving good results, “steal” their landing page*. Take their ideas for landing pages and test them against your existing pages. *Of course, you can’t really steal their landing page, but you can be “influenced by” their approach.

What your competitors do is often a good starting point for your own tests. Try taking their approach and refining it.

5. Might There Be A Better Way?

Are there alternatives to A/B testing?

Some swear by the multi-armed bandit methodology:

The multi-armed bandit problem takes its terminology from a casino. You are faced with a wall of slot machines, each with its own lever. You suspect that some slot machines pay out more frequently than others. How can you learn which machine is the best, and get the most coins in the fewest trials?

Like many techniques in machine learning, the simplest strategy is hard to beat. More complicated techniques are worth considering, but they may eke out only a few hundredths of a percentage point of performance.

Then again…

What multi-armed bandit algorithm does is that it aggressively (and greedily) optimizes for currently best performing variation, so the actual worse performing versions end up receiving very little traffic (mostly in the explorative 10% phase). This little traffic means when you try to calculate statistical significance, there’s still a lot of uncertainty whether the variation is “really” worse performing or the current worse performance is due to random chance. So, in a multi-armed bandit algorithm, it takes a lot more traffic to declare statistical significance as compared to simple randomization of A/B testing. (But, of course, in a multi-armed bandit campaign, the average conversion rate is higher).
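The “explorative 10% phase” described above corresponds to an epsilon-greedy strategy: send about 10% of visitors to a random variation and the rest to whichever variation currently converts best. A minimal simulation, assuming two page variations whose conversion rates (2% and 10%) are hypothetical and used only to generate simulated visitors:

```python
import random


def epsilon_greedy(true_rates, trials, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over page variations.

    true_rates are hypothetical conversion probabilities used only to
    simulate visitors; the algorithm itself only sees pulls and wins.
    """
    rng = random.Random(seed)
    n = len(true_rates)
    pulls = [0] * n  # visitors sent to each variation
    wins = [0] * n   # conversions recorded for each variation

    def observed_rate(i):
        # Untried variations count as 1.0 so each gets tried at least once.
        return wins[i] / pulls[i] if pulls[i] else 1.0

    for _ in range(trials):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                  # explore (~10% of traffic)
        else:
            arm = max(range(n), key=observed_rate)  # exploit the current leader
        pulls[arm] += 1
        if rng.random() < true_rates[arm]:          # simulated visitor converts
            wins[arm] += 1
    return pulls, wins


pulls, wins = epsilon_greedy([0.02, 0.10], trials=20_000)
print("visitors per variation:", pulls)
print("conversions per variation:", wins)
```

Most visitors end up on the stronger variation, which is the bandit’s appeal. The weaker variation mostly receives exploration traffic, which is why, as noted above, it takes longer to say with confidence that it really is worse.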

Multivariate testing may be suitable if you’re testing a combination of variables, as opposed to just one, e.g.:

- Product Image: Big vs. Medium vs. Small

- Price Text Style: Bold vs. Normal

- Price Text Color: Blue vs. Black vs. Red

That gives 3 × 2 × 3 = 18 different versions to test.
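Enumerating the combinations makes the traffic problem concrete. A short sketch using the three elements listed above; the 1,000 visitors per version is a hypothetical figure, not a recommendation:

```python
from itertools import product

image_sizes = ["Big", "Medium", "Small"]
price_styles = ["Bold", "Normal"]
price_colors = ["Blue", "Black", "Red"]

# Every combination of the three page elements is a separate version.
versions = list(product(image_sizes, price_styles, price_colors))
print(len(versions))  # 18

# Hypothetical: if each version needs 1,000 visitors for a reliable read,
# the full test needs 18 times that.
visitors_per_version = 1_000
print(len(versions) * visitors_per_version)  # 18000
```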

The problem with multivariate tests is they can get complicated pretty quickly and require a lot of traffic to produce statistically significant results. One advantage of multivariate testing over A/B testing is that it can tell you which part of the page is most influential. Was it a graphic? A headline? A video? If you’re testing a page using an A/B test, you won’t know. Multivariate testing will tell you which page sections influence the conversion rate and which don’t.
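One simple way to see which page section matters is a main-effects comparison: average the conversion rate for each level of each element across all versions, then see which element’s levels differ most. A minimal sketch, assuming a full set of 18 versions with comparable traffic; the conversion rates below are made up so that only image size matters:

```python
from collections import defaultdict
from itertools import product
from statistics import mean

FACTORS = ("image_size", "price_style", "price_color")


def main_effects(results):
    """Average conversion rate for each level of each factor.

    results maps (image_size, price_style, price_color) -> conversion rate.
    Each level's average is taken over all versions containing that level,
    which assumes a full factorial with comparable traffic per version.
    """
    effects = {}
    for i, factor in enumerate(FACTORS):
        by_level = defaultdict(list)
        for combo, rate in results.items():
            by_level[combo[i]].append(rate)
        effects[factor] = {level: mean(rates) for level, rates in by_level.items()}
    return effects


def most_influential(effects):
    """Factor whose best and worst levels differ the most."""
    return max(effects, key=lambda f: max(effects[f].values()) - min(effects[f].values()))


# Hypothetical rates in which only the image size matters.
results = {
    combo: 0.05 if combo[0] == "Big" else 0.03
    for combo in product(["Big", "Medium", "Small"], ["Bold", "Normal"], ["Blue", "Black", "Red"])
}
effects = main_effects(results)
print(most_influential(effects))  # image_size
```

With real data the differences will be noisier, so each comparison still needs enough traffic to be statistically meaningful.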

6. Methodology Is Only One Part Of The Puzzle

So is A/B testing worthwhile? Are the alternatives better?

The methodology we choose will only be as good as the test design. If tests are poorly designed, then the maths, the tests, the data and the software tools won’t be much use.

To construct good tests, you should first take a high-level view:

Start the test by first asking yourself a question. Something on the lines of, “Why is the engagement rate of my site lower than that of the competitors…..Collect information about your product from customers before setting up any big test. If you plan to test your tagline, run a quick survey among your customers asking how they would define your product.

Secondly, consider the limits of testing. Testing can be a bit of a heartless exercise. It’s cold. We can’t really test how memorable and how liked one design is over the other, and typically have to go by instinct on some questions. Sometimes, certain designs just work for our audience, and other designs don’t. How do we test if we’re winning not just business, but also hearts and minds?

Does it mean we really understand our customers if they click this version over that one? We might see how they react to an offer, but that doesn’t mean we understand their desires and needs. If we’re getting click-backs most of the time, then it’s pretty clear we don’t understand the visitors. Changing a graphic here, and wording there, isn’t going to help if the underlying offer is not what potential customers want. No amount of testing ad copy will sell a pink train.

The understanding of customers is gained in part by tests, and in part by direct experience with customers and the market we’re in. Understanding comes from empathy. From asking questions. From listening to, and understanding, the answers. From knowing what’s good, and bad, about your competitors. From providing options. From open communication channels. From reassuring people. You’re probably armed with this information already, and that information is highly useful when it comes to constructing effective tests.

Do you really need A/B testing? Used well, it can markedly improve and hone offers, but it isn’t a magic bullet. Understanding your audience is the most important thing. Google, a company that uses testing extensively, seems to be most vulnerable when it comes to areas that require a more intuitive understanding of people. Google Glass is a prime example of failing to understand social context. Apple, on the other hand, was driven more by an intuitive approach. Jobs: “We built [the Mac] for ourselves. We were the group of people who were going to judge whether it was great or not. We weren’t going to go out and do market research.”

A/B testing can work wonders, just so long as it isn’t used as a substitute for understanding people.

Learn Local Search Marketing

Last October Vedran Tomic wrote a guide for local SEO which has since become one of the more popular pages on our site, so we decided to follow up with a Q&A on some of the latest changes in local search.

Q: Google appears to have settled their monop…