Help Google Search know the best date for your web page

Sometimes, Google shows dates next to listings in its search results. In this post, we’ll answer some commonly-asked questions webmasters have about how these dates are determined and provide some best practices to help improve their accuracy.

How dates are determined

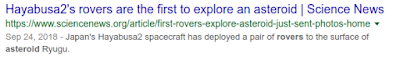

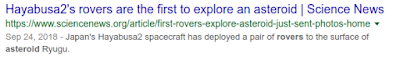

Google shows the date of a page when its automated systems determine that it would be relevant to do so, such as for pages that can be time-sensitive, including news content:

Google determines a date using a variety of factors, including but not limited to: any prominent date listed on the page itself or dates provided by the publisher through structured markup.

Google doesn’t depend on one single factor because all of them can be prone to issues. Publishers may not always provide a clear visible date. Sometimes, structured data may be lacking or may not be adjusted to the correct time zone. That’s why our systems look at several factors to come up with what we consider to be our best estimate of when a page was published or significantly updated.

How to specify a date on a page

To help Google to pick the right date, site owners and publishers should:

- Show a clear date: Show a visible date prominently on the page.

- Use structured data: Use the datePublished and dateModified schema with the correct time zone designator for AMP or non-AMP pages. When using structured data, make sure to use the ISO 8601 format for dates.
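As a rough illustration of the structured data recommendation above, here is a minimal Python sketch that builds Article markup with datePublished and dateModified in ISO 8601 format, including a time zone designator. The helper name `article_json_ld`, the headline, and the example dates and offsets are assumptions made for this sketch, not values from Google's documentation.

```python
import json
from datetime import datetime, timedelta, timezone


def article_json_ld(headline, published, modified):
    """Return a JSON-LD string for an Article with ISO 8601 dates."""
    for value in (published, modified):
        # A naive datetime would serialize without a time zone designator.
        if value.tzinfo is None:
            raise ValueError("datetime must be time zone aware")
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)


# Hypothetical example values: UTC+1 in winter, UTC+2 once daylight
# saving time applies.
published = datetime(2019, 3, 11, 9, 30, tzinfo=timezone(timedelta(hours=1)))
modified = datetime(2019, 4, 2, 14, 0, tzinfo=timezone(timedelta(hours=2)))
markup = article_json_ld("Example headline", published, modified)
print(markup)
```

The resulting string would typically be placed inside a script element of type application/ld+json on the page, alongside the visible date.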

Guidelines specific to Google News

Google News requires clearly showing both the date and the time that content was published or updated. Structured data alone is not enough, though it is recommended to use in addition to a visible date and time. Date and time should be positioned between the headline and the article text. For more guidance, also see our help page about article dates.

If an article has been substantially changed, it can make sense to give it a fresh date and time. However, don’t artificially freshen a story without adding significant information or some other compelling reason for the freshening. Also, do not create a very slightly updated story from one previously published, then delete the old story and redirect to the new one. That’s against our article URLs guidelines.

More best practices for dates on web pages

In addition to the most important requirements listed above, here are additional best practices to help Google determine the best date to consider showing for a web page:

- Show when a page has been updated: If you update a page significantly, also update the visible date (and time, if you display that). If desired, you can show two dates: when a page was originally published and when it was updated. Just do so in a way that’s visually clear to your readers. If showing both dates, it’s also highly recommended to use datePublished and dateModified for AMP or non-AMP pages to make it easier for algorithms to recognize.

- Use the right time zone: If specifying a time, make sure to provide the correct time zone, taking into account daylight saving time as appropriate.

- Be consistent in usage: Within a page, make sure to use exactly the same date (and, potentially, time) in structured data as well as in the visible part of the page. Make sure to use the same time zone if you specify one on the page.

- Don’t use future dates or dates related to what a page is about: Always use a date for when a page itself was published or updated, not a date linked to something like an event that the page is writing about, especially for events or other subjects that happen in the future (you may use Event markup separately, if appropriate).

- Follow Google’s structured data guidelines: While Google doesn’t guarantee that a date (or structured data in general) specified on a page will be used, following our structured data guidelines does help our algorithms to have it available in a machine-readable way.

- Troubleshoot by minimizing other dates on the page: If you’ve followed the best practices above and find incorrect dates are being selected, consider if you can remove or minimize other dates that may appear on the page, such as those that might be next to related stories.
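Some of the practices above lend themselves to an automated check before publishing. The following is a minimal Python sketch, assuming the dates are available as ISO 8601 strings; the function name `check_page_dates` and its messages are invented for illustration and do not mirror any Google tool.

```python
from datetime import datetime, timezone


def check_page_dates(date_published, date_modified=None, now=None):
    """Return a list of problems found in the given date strings."""
    now = now or datetime.now(timezone.utc)
    problems = []
    parsed = {}
    for name, raw in (("datePublished", date_published),
                      ("dateModified", date_modified)):
        if raw is None:
            continue
        try:
            # Note: older Python versions accept only a subset of ISO 8601.
            value = datetime.fromisoformat(raw)
        except ValueError:
            problems.append(f"{name} could not be parsed: {raw!r}")
            continue
        if value.tzinfo is None:
            problems.append(f"{name} has no time zone designator")
            continue
        if value > now:
            problems.append(f"{name} is in the future")
        parsed[name] = value
    if len(parsed) == 2 and parsed["dateModified"] < parsed["datePublished"]:
        problems.append("dateModified is earlier than datePublished")
    return problems


print(check_page_dates("2019-03-11T09:30:00", "2019-04-02T14:00:00+02:00"))
```

A check like this only covers the structured data. Whether the visible date on the page matches it still needs to be verified separately.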

We hope these guidelines help to make it easier to specify the right date on your website’s pages! For questions or comments on this, or other structured data topics, feel free to drop by our webmaster help forums.

Posted by John Mueller, Developer Advocate, Zurich

Collaboration and user management in the new Search Console

As part of our reinvention of Search Console, we have been rethinking the models of facilitating cooperation and accountability for our users. We decided to redesign the product around cooperative team usage and transparency of action history. The new Search Console will gradually provide better history tracking to show who performed which significant property-affecting modifications, such as changing a setting, validating an issue or submitting a new sitemap. In that spirit we also plan to enable all users to see critical site messages.

New features

- User management is now an integral part of Search Console.

- The new Search Console enables you to share a read-only view of many reports, including Index coverage, AMP, and Mobile Usability. Learn more.

- A new user management interface that enables all users to see and, if appropriate, manage user roles for all property users.

New Role definition

- In order to provide a simpler permission model, we are planning to limit the “restricted” user role to read-only status. While being able to see all information, read-only users will no longer be able to perform any state-changing actions, including starting a fix validation or sharing an issue.

Best practices

As a reminder, here are some best practices for managing user permissions in Search Console:

- Grant users only the permission level that they need to do their work. See the permissions descriptions.

- If you need to share an issue details report, click the Share link on that page.

- Revoke permissions from users who no longer work on a property.

- When removing a previous verified owner, be sure to remove all verification tokens for that user.

- Regularly audit and update the user permissions using the Users & Permissions page in new Search Console.

User feedback

As part of our Beta exploration, we released visibility of the user management interface to all user roles. Some users reached out to request more time to prepare for the updated user management model, including the ability of restricted and full users to easily see a list of other collaborators on the site. We’ve taken that feedback and will hold off on that part of the launch. Stay tuned for more updates relating to collaboration tools and changes on our permission models.

As always, we love to hear feedback from our users. Feel free to use the feedback form within Search Console, and we welcome your discussions in our help forums as well!

Posted by John Mueller, Google Switzerland

Our goal: helping webmasters and content creators

- Google Web Fundamentals: Provides technical guidance on building a modern website that takes advantage of open web standards.

- Google Search developer documentation: Describes how Google crawls and indexes a website. Includes authoritative guidance on building a site that is optimized for Google Search.

- Search Console Help Center: Provides detailed information on how to use and take advantage of Search Console, the best way for a website owner to understand how Google sees their site.

- The Search Engine Optimization (SEO) Starter Guide: Provides a complete overview of the basics of SEO according to our recommended best practices.

- Google webmaster guidelines: Describes policies and practices that may lead to a site being removed entirely from the Google index or otherwise affected by an algorithmic or manual spam action that can negatively affect their Search appearance.

- Google Webmasters YouTube Channel

Distrust of the Symantec PKI: Immediate action needed by site operators

We previously announced plans to deprecate Chrome’s trust in the Symantec certificate authority (including Symantec-owned brands like Thawte, VeriSign, Equifax, GeoTrust, and RapidSSL). This post outlines how site operators can determine if they’re affected by this deprecation, and if so, what needs to be done and by when. Failure to replace these certificates will result in site breakage in upcoming versions of major browsers, including Chrome.

Chrome 66

If your site is using a SSL/TLS certificate from Symantec that was issued before June 1, 2016, it will stop functioning in Chrome 66, which could already be impacting your users.

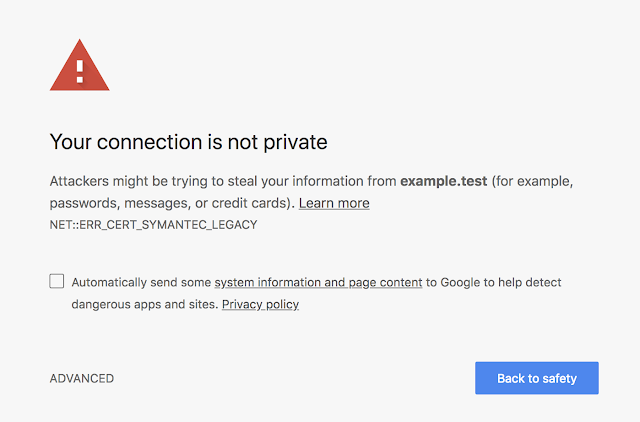

If you are uncertain about whether your site is using such a certificate, you can preview these changes in Chrome Canary to see if your site is affected. If connecting to your site displays a certificate error or a warning in DevTools as shown below, you’ll need to replace your certificate. You can get a new certificate from any trusted CA, including Digicert, which recently acquired Symantec’s CA business.

|

| An example of a certificate error that Chrome 66 users might see if you are using a Legacy Symantec SSL/TLS certificate that was issued before June 1, 2016. |

|

Chrome 66 has already been released to the Canary and Dev channels, meaning affected sites are already impacting users of these Chrome channels. If affected sites do not replace their certificates by March 15, 2018, Chrome Beta users will begin experiencing the failures as well. You are strongly encouraged to replace your certificate as soon as possible if your site is currently showing an error in Chrome Canary.

Chrome 70

Starting in Chrome 70, all remaining Symantec SSL/TLS certificates will stop working, resulting in a certificate error like the one shown above. To check if your certificate will be affected, visit your site in Chrome today and open up DevTools. You’ll see a message in the console telling you if you need to replace your certificate.

The DevTools message you will see if you need to replace your certificate before Chrome 70.

If you see this message in DevTools, you’ll want to replace your certificate as soon as possible. If the certificates are not replaced, users will begin seeing certificate errors on your site as early as July 20, 2018. The first Chrome 70 Beta release will be around September 13, 2018.

Expected Chrome Release Timeline

The table below shows the First Canary, First Beta and Stable Release for Chrome 66 and 70. The first impact from a given release will coincide with the First Canary, reaching a steadily widening audience as the release hits Beta and then ultimately Stable. Site operators are strongly encouraged to make the necessary changes to their sites before the First Canary release for Chrome 66 and 70, and no later than the corresponding Beta release dates.

| Release | First Canary | First Beta | Stable Release |
| --- | --- | --- | --- |
| Chrome 66 | January 20, 2018 | ~ March 15, 2018 | ~ April 17, 2018 |
| Chrome 70 | ~ July 20, 2018 | ~ September 13, 2018 | ~ October 16, 2018 |

For information about the release timeline for a particular version of Chrome, you can also refer to the Chromium Development Calendar which will be updated should release schedules change.

In order to address the needs of certain enterprise users, Chrome will also implement an Enterprise Policy that allows disabling the Legacy Symantec PKI distrust starting with Chrome 66. As of January 1, 2019, this policy will no longer be available and the Legacy Symantec PKI will be distrusted for all users.

Special Mention: Chrome 65

As noted in the previous announcement, SSL/TLS certificates from the Legacy Symantec PKI issued after December 1, 2017 are no longer trusted. This should not affect most site operators, as it requires entering into a special agreement with DigiCert to obtain such certificates. Accessing a site serving such a certificate will fail and the request will be blocked as of Chrome 65. To avoid such errors, ensure that such certificates are only served to legacy devices and not to browsers such as Chrome.
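To make the date cutoffs in this post easier to reason about, here is a small Python sketch that maps a certificate's issuer and issuance date to the first Chrome version expected to reject it. It is only a reading of the dates stated above: matching on brand names in the issuer text is a simplification assumed for this example, the function name `first_distrusting_chrome` is hypothetical, and the authoritative check remains the one Chrome itself performs (visible in DevTools).

```python
from datetime import date

# Brand names listed in this post. Matching on issuer text is a
# simplification assumed for this sketch.
LEGACY_BRANDS = ("symantec", "thawte", "verisign", "equifax",
                 "geotrust", "rapidssl")


def first_distrusting_chrome(issuer_name, issued_on):
    """Return the first Chrome version expected to reject the certificate,
    or None if the issuer does not look like a Legacy Symantec brand."""
    if not any(brand in issuer_name.lower() for brand in LEGACY_BRANDS):
        return None
    if issued_on > date(2017, 12, 1):
        return 65  # issued after December 1, 2017
    if issued_on < date(2016, 6, 1):
        return 66  # issued before June 1, 2016
    return 70      # all remaining Legacy Symantec certificates


print(first_distrusting_chrome("GeoTrust Global CA", date(2015, 1, 1)))
```

How certificates issued exactly on a cutoff date are treated is not spelled out in this post, so the boundary comparisons here are an assumption.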

Posted by Devon O’Brien, Ryan Sleevi, Emily Stark, Chrome security team

Engaging users through high quality AMP pages

To improve our users’ experience with AMP results, we are making changes to how we enforce our policy on content parity with AMP. Starting Feb 1, 2018, the policy requires that the AMP page content be comparable to the (original) canonical page content…

#NoHacked: A year in review

We wanted to share with you a summary of our 2016 work as we continue our #NoHacked campaign. Let’s start with some trends on hacked sites from the past year.

State of Website Security in 2016

First off, some unfortunate news. We’ve seen an increase in the number of hacked sites by approximately 32% in 2016 compared to 2015. We don’t expect this trend to slow down. As hackers get more aggressive and more sites become outdated, hackers will continue to capitalize by infecting more sites.

On the bright side, 84% of webmasters who apply for reconsideration are successful in cleaning their sites. However, 61% of webmasters who were hacked never received a notification from Google that their site was infected because their sites weren’t verified in Search Console. Remember to register for Search Console if you own or manage a site. It’s the primary channel that Google uses to communicate site health alerts.

More Help for Hacked Webmasters

We’ve been listening to your feedback to better understand how we can help webmasters with security issues. One of the top requests was for easier-to-understand documentation about hacked sites. As a result, we’ve been hard at work to make our documentation more useful.

First, we created new documentation to give webmasters more context when their site has been compromised. Here is a list of the new help documentation:

- Top ways websites get hacked by spammers

- Glossary for Hacked Sites

- FAQs for Hacked Sites

- How do I know if my site is hacked?

Next, we created cleanup guides for sites affected by known hacks. We’ve noticed that sites often get affected in similar ways when hacked. By investigating the similarities, we were able to create cleanup guides for specific known types of hack. Below is a short description of each of the guides we created:

Gibberish Hack: The gibberish hack automatically creates many pages with nonsensical sentences filled with keywords on the target site. Hackers do this so the hacked pages show up in Google Search. Then, when people try to visit these pages, they’ll be redirected to an unrelated page, like a porn site. Learn more on how to fix this type of hack.

Japanese Keywords Hack: The Japanese keywords hack typically creates new pages with Japanese text on the target site in randomly generated directory names. These pages are monetized using affiliate links to stores selling fake brand merchandise and then shown in Google Search. Sometimes the accounts of the hackers get added in Search Console as site owners. Learn more on how to fix this type of hack.

Cloaked Keywords Hack: The cloaked keywords and link hack automatically creates many pages with nonsensical sentences, links, and images. These pages sometimes contain basic template elements from the original site, so at first glance, the pages might look like normal parts of the target site until you read the content. In this type of attack, hackers usually use cloaking techniques to hide the malicious content and make the injected page appear as part of the original site or a 404 error page. Learn more on how to fix this type of hack.
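Because cloaking means different visitors are served different content, one rough way to look for it is to request the same URL with two different User-Agent headers and compare the responses. The Python sketch below is only an illustration under that assumption: the helper names and the 0.5 threshold are made up for this example, and cloaking can also key on other signals such as IP address, so a matching result does not prove a page is clean.

```python
import difflib
import urllib.request

# User-Agent strings used for the comparison (assumptions for this sketch).
BROWSER_UA = "Mozilla/5.0 (X11; Linux x86_64)"
CRAWLER_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch_as(url, user_agent, timeout=10):
    """Fetch a URL with the given User-Agent and return the body as text."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(html_a, html_b):
    """Return a similarity ratio between 0.0 and 1.0 for two documents."""
    return difflib.SequenceMatcher(None, html_a, html_b).ratio()


def looks_cloaked(html_for_browser, html_for_crawler, threshold=0.5):
    """Flag a page when the two responses differ more than the threshold."""
    return similarity(html_for_browser, html_for_crawler) < threshold


# Example usage (performs network requests, so it is left commented out):
# url = "https://example.com/some-page"
# print(looks_cloaked(fetch_as(url, BROWSER_UA), fetch_as(url, CRAWLER_UA)))
```

Pages with legitimately dynamic content can differ between requests too, so a flagged page is a prompt for manual review rather than a verdict.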

Prevention is Key

As always, it’s best to take a preventative approach and secure your site rather than dealing with the aftermath. Remember, a chain is only as strong as its weakest link. You can read more about how to identify vulnerabilities on your site in our hacked help guide. We also recommend staying up-to-date on releases and announcements from your Content Management System (CMS) providers and software/hardware vendors.

Looking Forward

Hacking behavior is constantly evolving, and research allows us to stay up to date on and combat the latest trends. You can learn about our latest research publications in the information security research site. Highlighted below are a few studies specific to website compromises:

- Cloak of Visibility: Detecting When Machines Browse a Different Web

- Investigating Commercial Pay-Per-Install and the Distribution of Unwanted Software

- Users Really Do Plug in USB Drives They Find

- Ad Injection at Scale: Assessing Deceptive Advertisement Modifications

If you have feedback or specific questions about compromised sites, the Webmaster Help Forums have an active group of Googlers and technical contributors that can address your questions and provide additional technical support.

Posted by Wafa Alnasayan, Trust & Safety Analyst and Eric Kuan, Webmaster Relations

Protect your site from user generated spam

As a website owner, you might have come across some auto-generated content in comments sections or forum threads. When such content is created on your pages, not only does it disrupt those visiting your site, but it also shows some content that you may not want to be associated with your site to Google and other search engines.

In this blog post, we will give you tips to help you deal with this type of spam on your site and forum.

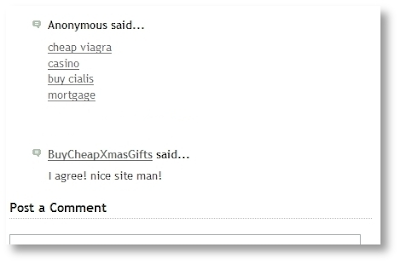

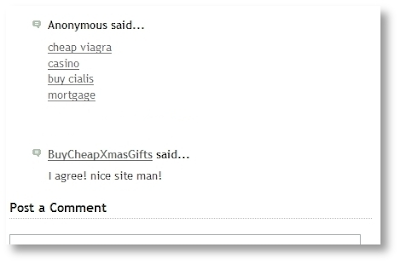

Some spammers abuse sites owned by others by posting deceiving content and links, in an attempt to get more traffic to their sites. Here are a few examples:

Comments and forum threads can be a really good source of information and an efficient way of engaging a site’s users in discussions. This valuable content should not be buried by auto-generated keywords and links placed there by spammers.

There are many ways of securing your site’s forums and comment threads and making them unattractive to spammers:

- Keep your forum software updated and patched. Take the time to keep your software up-to-date and pay special attention to important security updates. Spammers take advantage of security issues in older versions of blogs, bulletin boards, and other content management systems.

- Add a CAPTCHA. CAPTCHAs require users to confirm that they are a human being and not an automated script. One way to do this is to use a service like reCAPTCHA, Securimage, or Jcaptcha.

- Block suspicious behavior. Many forums allow you to set time limits between posts, and you can often find plugins to look for excessive traffic from individual IP addresses or proxies and other activity more common to bots than human beings. For example, phpBB, Simple Machines, myBB, and many other forum platforms enable such configurations.

- Check your forum’s top posters on a daily basis. If a user joined recently and has an excessive amount of posts, then you probably should review their profile and make sure that their posts and threads are not spammy.

- Consider disabling some types of comments. For example, it’s a good practice to close some very old forum threads that are unlikely to get legitimate replies. If you plan on not monitoring your forum going forward and users are no longer interacting with it, turning off posting completely may prevent spammers from abusing it.

- Make good use of moderation capabilities. Consider enabling features in moderation that require users to have a certain reputation before links can be posted or where comments with links require moderation. If possible, change your settings so that you disallow anonymous posting and make posts from new users require approval before they’re publicly visible. Moderators, together with your friends/colleagues and some other trusted users, can help you review and approve posts while spreading the workload. Keep an eye on your forum’s new users by looking at their posts and activities on your forum.

- Consider blacklisting obviously spammy terms. Block obviously inappropriate comments with a blacklist of spammy terms (e.g. illegal streaming or pharma-related terms). Add inappropriate and off-topic terms that are only used by spammers; learn from the spam posts that you often see on your forum or other forums. Built-in features or plugins can delete or mark comments as spam for you.

- Use the “nofollow” attribute for links in the comment field. This will deter spammers from targeting your site. By default, many blogging sites (such as Blogger) automatically add this attribute to any posted comments.

- Use automated systems to defend your site. Comprehensive systems like Akismet, which has plugins for many blog and forum systems, are easy to install and do most of the work for you.
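Several of the tips above (a time limit between posts, holding links from new users for moderation, a blacklist of spammy terms, and the “nofollow” attribute) can be combined in a few lines of code if your platform does not already provide them. The Python sketch below is a simplified illustration: the term list, the thresholds, and the function names are all hypothetical, and the regular expression handles only simple anchor tags, so an established plugin or a proper HTML parser is usually the better choice in production.

```python
import re

# Hypothetical settings for this sketch; tune them from the spam you see.
BLOCKED_TERMS = {"free pills", "watch movies free"}
MIN_SECONDS_BETWEEN_POSTS = 30
MIN_POSTS_BEFORE_LINKS = 5

LINK_PATTERN = re.compile(r"https?://", re.IGNORECASE)
# Matches the start of an anchor tag that has no rel attribute yet.
ANCHOR_PATTERN = re.compile(r"<a\s+(?![^>]*\brel=)", re.IGNORECASE)


def add_nofollow(comment_html):
    """Add rel="nofollow" to anchor tags that have no rel attribute."""
    return ANCHOR_PATTERN.sub('<a rel="nofollow" ', comment_html)


def moderate(comment_text, author_post_count, seconds_since_last_post):
    """Return 'reject', 'hold', or 'publish' for a new comment."""
    lowered = comment_text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "reject"  # contains a blacklisted term
    if seconds_since_last_post < MIN_SECONDS_BETWEEN_POSTS:
        return "hold"    # posting faster than the time limit allows
    if author_post_count < MIN_POSTS_BEFORE_LINKS and LINK_PATTERN.search(comment_text):
        return "hold"    # new user posting a link: send to moderation
    return "publish"


print(moderate("See http://example.com", 1, 3600))
print(add_nofollow('<a href="http://example.com">x</a>'))
```

Comments marked "hold" would go to the moderation queue described above rather than being deleted, so legitimate users are not lost to false positives.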

For detailed information about these topics, check out our Help Center document on User Generated Spam and comment spam. You can also visit our Webmaster Central Help Forum if you need any help.

Posted by Anouar Bendahou, Search Quality Strategist, Google Ireland

Enhancing property sets to cover more reports in Search Console

Since initially announcing property sets earlier this year, one of the most popular requests has been to expand this functionality to more sections of Search Console. Thanks to your feedback, we’re now expanding property sets to more features! Propert…

Building Indexable Progressive Web Apps

Progressive Web Apps (PWAs) are taking advantage of new technologies to bring the best of mobile sites and native applications to users — and they’re one of the most exciting new ideas on the web. But to truly have an impact, it’s important that they’re indexable and linkable. Every recommendation presented in this article is an existing best practice for indexability — regardless of whether you’re building a Progressive Web App or a simple static website. Nonetheless, we have collated these best practices to provide a checklist to guide you:

Make Your Content Crawlable

Why? Historically, websites would always generate or render their HTML on the server, which is the simplest way to ensure your content is directly linkable. Web applications popularised the concept of client-side rendering, in which content is updated dynamically on the page as the user navigates, without requiring the page to be reloaded.

The modern approach is hybrid rendering, in which server-side rendering is used when a user navigates directly to a URL and client-side rendering is used after the initial page load for subsequent navigation and asynchronous requests.

Our server-side PWA sample demonstrates pure server-side rendering, while our hybrid PWA sample demonstrates the combined approach.

If you are unfamiliar with server-side and client-side rendering terminology, check out these articles on the web here and here.

Best Practice:

Use server-side or hybrid rendering so users receive the content in the initial payload of their web request.

Always ensure your URLs are independently accessible:

https://www.example.com/product/25/

The above should deep link to that particular resource.

If you can’t support server-side or hybrid rendering for your Progressive Web App and you decide to use client-side rendering, we recommend using the Google Search Console “Fetch as Google” tool to verify that your content successfully renders for our search crawler.

Don’t:

Don’t redirect users accessing deep links back to your web app’s homepage.

Additionally, serving an error page to users instead of deep linking should also be avoided.
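The deep-linking advice above can be sketched as a small server-side routing function. This is only an illustration under stated assumptions: the PRODUCTS catalogue, renderPage and handleRequest are hypothetical names, and a real application would plug this logic into its own server framework.

```javascript
// Hypothetical in-memory catalogue standing in for a real data source.
const PRODUCTS = { '25': { name: 'Example product' } };

// Build a complete HTML document so the content is in the initial payload.
function renderPage(title, body) {
  return '<!doctype html><html><head><title>' + title + '</title></head>' +
    '<body><main>' + body + '</main></body></html>';
}

// Resolve a request path to a response.
// Deep links are answered with their own content; they are never
// redirected to the homepage. A 404 is reserved for resources that
// genuinely do not exist.
function handleRequest(path) {
  if (path === '/') {
    return { status: 200, body: renderPage('Home', '<h1>Home</h1>') };
  }
  const match = /^\/product\/(\d+)\/$/.exec(path);
  if (match && Object.prototype.hasOwnProperty.call(PRODUCTS, match[1])) {
    const product = PRODUCTS[match[1]];
    return {
      status: 200,
      body: renderPage(product.name, '<h1>' + product.name + '</h1>')
    };
  }
  return { status: 404, body: renderPage('Not found', '<h1>Not found</h1>') };
}
```

With hybrid rendering, the same route would be handled by client-side code after the first page load, while a direct visit to /product/25/ still receives the full HTML from the server.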

Provide Clean URLs

Why? Fragment identifiers (#user/24601/ or #!user/24601/) were an effective workaround that let browsers fetch new content from a server via AJAX without reloading the page. This design is known as client-side rendering.

However, the fragment identifier syntax isn’t compatible with some web tools, frameworks and protocols such as Facebook’s Open Graph protocol.

The History API enables us to update the URL without fragment identifiers while still fetching resources asynchronously and therefore avoiding page reloads, giving us the best of both worlds. The AJAX crawling scheme (with its #! / escaped-fragment URLs) made sense at the time, but is no longer recommended.

Our hybrid PWA and client-side PWA samples demonstrate the History API.

Best Practice:

Provide clean URLs without fragment identifiers (# or #!) such as:

https://www.example.com/product/25/

If using client-side or hybrid rendering, be sure to support browser navigation with the History API.

Avoid:

Using the #! URL structure to drive unique URLs is discouraged:

https://www.example.com/#!product/25/

It was introduced as a workaround before the advent of the History API. It is considered a separate pattern from the purely # URL structure.

Don’t:

Using the # URL structure without the accompanying ! symbol is unsupported:

https://www.example.com/#product/25/

This URL structure is already a concept in the web and relates to deep linking into content on a particular page.
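For sites that still have legacy #! links in circulation, one possible approach is to map them to clean URLs on the client. The sketch below is an assumption-laden illustration, not a prescribed migration path: toCleanUrl is a hypothetical helper, and it assumes the text after #! is a plain path with no query string.

```javascript
// Hypothetical helper: map a legacy "#!" URL to its clean equivalent.
// Ordinary "#section" fragments are left untouched.
function toCleanUrl(href) {
  const url = new URL(href);
  if (url.hash.startsWith('#!')) {
    const path = url.hash.slice(2); // text after "#!"
    url.hash = '';
    url.pathname = path.startsWith('/') ? path : '/' + path;
  }
  return url.toString();
}

// In a browser, swap the address bar to the clean URL without reloading.
// The guard keeps this block inert outside a browser environment.
if (typeof window !== 'undefined' && window.history) {
  const clean = toCleanUrl(window.location.href);
  if (clean !== window.location.href) {
    window.history.replaceState(null, '', clean);
  }
}
```

The server still needs to answer the clean URL directly, as described in the crawlability section, for this to help with indexing.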

Specify Canonical URLs

Why? The best way to eliminate confusion for indexing when the same content is available under multiple URLs (be it on the same or different domains) is to mark one page as the canonical, and have all other pages that duplicate that content refer to it.

Best Practice:

Include the following tag across all pages mirroring a particular piece of content:

<link rel="canonical" href="https://www.example.com/your-url/" />

If you are supporting Accelerated Mobile Pages, be sure to correctly use its counterpart rel="amphtml" instruction as well.

Avoid:

Avoid purposely duplicating content across multiple URLs without using the rel="canonical" link element.

For example, the rel="canonical" link element can reduce ambiguity for URLs with tracking parameters.

Don’t:

Avoid creating conflicting canonical references between your pages.
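To make the tracking-parameter case concrete, here is a minimal sketch that derives one canonical URL from several variants and emits the link element. The list of parameter names in TRACKING_PARAMS is an assumption (common analytics parameters), and canonicalUrl and canonicalLinkTag are hypothetical helpers.

```javascript
// Assumed list of tracking parameters; adjust to your own analytics setup.
const TRACKING_PARAMS = [
  'utm_source', 'utm_medium', 'utm_campaign', 'utm_term', 'utm_content'
];

// Strip tracking parameters and fragments so every variant of a page
// resolves to the same canonical URL.
function canonicalUrl(href) {
  const url = new URL(href);
  for (const name of TRACKING_PARAMS) {
    url.searchParams.delete(name);
  }
  url.hash = '';
  return url.toString();
}

// Emit the link element for the page's <head>.
function canonicalLinkTag(href) {
  const safe = canonicalUrl(href).replace(/&/g, '&amp;').replace(/"/g, '&quot;');
  return '<link rel="canonical" href="' + safe + '" />';
}
```

Generating the tag from a single function like this is one way to reduce the risk of conflicting canonical references between pages.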

Design for Multiple Devices

Why? It’s important that all your users get the best experience possible when viewing your website, regardless of their device.

Make your site responsive in its design — fonts, margins, paddings, buttons and general design of your site should scale dynamically based on screen resolutions and device viewports.

Small images scaled up for desktop or tablet devices give a poor experience. Conversely, super high resolution images take a long time to download on mobile phones and may impact mobile scroll performance.

Read more UX for PWAs here.

Best Practice:

Use the “srcset” attribute to fetch different resolution images for different density screens to avoid downloading images larger than the device’s screen is capable of displaying.

Scale your font size and line height to ensure your text is legible no matter the size of the device. Similarly, ensure the padding and margins of elements also scale sensibly.

Test various screen resolutions using Chrome Developer Tools’ Device Mode feature and the Mobile-Friendly Test tool.

Don’t:

Don’t show different content to users than you show to Google. If you use redirects or user agent detection (a.k.a. browser sniffing or dynamic serving) to alter the design of your site for different devices it’s important that the content itself remains the same.

Use the Search Console “Fetch as Google” tool to verify the content fetched by Google matches the content a user sees.

For usability reasons, avoid using fixed-size fonts.
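As an illustration of the “srcset” recommendation in this section, the following sketch builds an img element with pixel-density descriptors. The helper name buildImgTag and the image paths in the usage note are hypothetical.

```javascript
// Escape a value for use inside a double-quoted HTML attribute.
function escapeAttr(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;');
}

// sources: array of { url, density }.
// The lowest-density image doubles as the fallback src.
function buildImgTag(alt, sources) {
  const sorted = sources.slice().sort(function (a, b) {
    return a.density - b.density;
  });
  const srcset = sorted.map(function (s) {
    return s.url + ' ' + s.density + 'x';
  }).join(', ');
  return '<img src="' + escapeAttr(sorted[0].url) + '"' +
    ' srcset="' + escapeAttr(srcset) + '"' +
    ' alt="' + escapeAttr(alt) + '">';
}
```

For example, passing /img/product-1x.jpg at density 1 and /img/product-2x.jpg at density 2 lets the browser pick the file that matches the screen, so low-density devices do not download the larger image.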

Develop Iteratively

Why? One of the safest paths to take when adding features to a web application is to make changes iteratively. If you add features one at a time you can observe the impact of each individual change.

Alternatively many developers prefer to view their progressive web application as an opportunity to overhaul their mobile site in one fell swoop — developing the new web app in an isolated environment and swapping it with their existing mobile site once ready.

When developing features iteratively try to break the changes into separate pieces. For example, if you intend to move from server-side rendering to hybrid rendering then tackle that as a single iteration — rather than in combination with other features.

Both approaches have their own pros and cons. Iterating reduces the complexity of dealing with search indexability as the transition is continuous. However, iterating might result in a slower development process and potentially a less innovative overhaul if development is not starting from scratch.

In either case, the most sensitive areas to keep an eye on are your canonical URLs and your site’s robots.txt configuration.

Best Practice:

Iterate on your website incrementally by adding new features piece by piece.

For example, if you don’t support HTTPS yet, then start by migrating to a secure site.

Avoid:

If you’ve developed your progressive web app in an isolated environment, then avoid launching it without checking that the rel-canonical links and robots.txt are set up appropriately.

Ensure your rel-canonical links point to the real site and that your robots.txt configuration allows crawlers to crawl your new site.

Don’t:

It’s logical to prevent crawlers from indexing your in-development site before launch but don’t forget to unblock crawlers from accessing your new site when you launch.
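A pre-launch check for a leftover crawl block could look something like the sketch below. It is deliberately simplified and rests on assumptions: blocksAllCrawlers is a hypothetical helper that only looks for a "Disallow: /" rule in a group addressed to "User-agent: *", and it ignores Allow rules, wildcards and crawler-specific groups.

```javascript
// Simplified check: does this robots.txt text block every crawler
// from the whole site? Not a full robots.txt parser.
function blocksAllCrawlers(robotsTxt) {
  let appliesToAll = false;  // current group includes "User-agent: *"
  let inAgentLines = false;  // still reading consecutive User-agent lines
  for (const raw of robotsTxt.split(/\r?\n/)) {
    const line = raw.replace(/#.*$/, '').trim(); // drop comments
    if (!line) continue;
    const idx = line.indexOf(':');
    if (idx === -1) continue;
    const field = line.slice(0, idx).trim().toLowerCase();
    const value = line.slice(idx + 1).trim();
    if (field === 'user-agent') {
      if (!inAgentLines) appliesToAll = false; // a new group starts
      if (value === '*') appliesToAll = true;
      inAgentLines = true;
    } else {
      inAgentLines = false;
      if (field === 'disallow' && value === '/' && appliesToAll) {
        return true;
      }
    }
  }
  return false;
}
```

A check like this could run as part of a launch checklist against the production robots.txt, alongside a manual review in Search Console.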

Use Progressive Enhancement

Why? Wherever possible it’s important to detect browser features before using them. Feature detection is also better than testing for browsers that you believe support a given feature.

A common bad practice in the past was to enable or disable features by testing which browser the user had. However, as browsers are constantly evolving with features this technique is strongly discouraged.

Service Worker is a relatively new technology and it’s important to not break compatibility in the pursuit of progress — it’s a perfect example of when to use progressive enhancement.

Best Practice:

Before registering a Service Worker check for the availability of its API:

if ('serviceWorker' in navigator) {
  // ...
}

Use a per-API detection method for all your website’s features.

Don’t:

Never use the browser’s user agent to enable or disable features in your web app. Always check whether the feature’s API is available and gracefully degrade if unavailable.

Avoid updating or launching your site without testing across multiple browsers! Check your site analytics to learn which browsers are most popular among your user base.
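Putting the feature-detection advice together, a registration helper might look like the following sketch. The function name registerServiceWorker and the script path /sw.js are hypothetical; the navigator object is passed in as a parameter purely so the logic can be exercised outside a browser.

```javascript
// Attempt registration only when the Service Worker API is present.
// `nav` is normally the global `navigator`.
function registerServiceWorker(nav, scriptUrl) {
  if (!nav || !('serviceWorker' in nav)) {
    return false; // degrade gracefully: the site works without it
  }
  nav.serviceWorker.register(scriptUrl).catch(function (err) {
    console.error('Service Worker registration failed:', err);
  });
  return true;
}

// Browser usage; '/sw.js' is an assumed path for illustration.
if (typeof navigator !== 'undefined') {
  registerServiceWorker(navigator, '/sw.js');
}
```

Note that nothing here inspects the user agent string: the only question asked is whether the API exists.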

Test with Search Console

Why? It’s important to understand how Google Search views your site’s content. You can use Search Console to fetch individual URLs from your site and see how Google Search views them using the “Crawl > Fetch as Google” feature. Search Console will process your JavaScript and render the page when that option is selected; otherwise only the raw HTML response is shown.

Google Search Console also analyses the content on your page in a variety of ways including detecting the presence of Structured Data, Rich Cards, Sitelinks & Accelerated Mobile Pages.

Best Practice:

Monitor your site using Search Console and explore its features including “Fetch as Google”.

Provide a Sitemap via Search Console “Crawl > Sitemaps”. It can be an effective way to ensure Google Search is aware of all your site’s pages.
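If your application already knows its list of clean URLs, a basic XML Sitemap can be generated from it. The sketch below assumes hypothetical helpers escapeXml and buildSitemap and includes only the required loc element for each URL.

```javascript
// Escape text for use inside an XML element.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build a minimal Sitemap document from an array of absolute URLs.
function buildSitemap(urls) {
  const entries = urls.map(function (u) {
    return '  <url><loc>' + escapeXml(u) + '</loc></url>';
  }).join('\n');
  return '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    entries + '\n</urlset>\n';
}
```

The resulting file can then be submitted through the Sitemaps feature mentioned above.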

Annotate with Schema.org structured data

Why? Schema.org structured data is a flexible vocabulary for summarizing the most important parts of your page as machine-processable data. This can be as general as simply saying that a page is a NewsArticle, or as specific as detailing the location, band name, venue and ticket vendor for a touring band, or summarizing the ingredients and steps for a recipe.

The use of this metadata may not make sense for every page on your web application but it’s recommended where it’s sensible. Google extracts it after the page is rendered.

There are a variety of data types including “NewsArticle”, “Recipe” & “Product” to name a few. Explore all the supported data types here.

Best Practice:

Verify that your Schema.org metadata is correct using Google’s Structured Data Testing Tool.

Check that the data you provided is appearing and there are no errors present.

Don’t:

Avoid using a data type that doesn’t match your page’s actual content. For example, don’t use “Recipe” for a T-shirt you’re selling; use “Product” instead.
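To show what matching the data type to the content can look like, here is a minimal sketch that emits JSON-LD for a product page. The helper productJsonLd is hypothetical, the sample values in the usage note are invented for illustration, and only a couple of Product properties are included.

```javascript
// Build a JSON-LD script element describing a product.
// "<" is escaped so user-supplied text cannot close the script element early.
function productJsonLd(product) {
  const data = {
    '@context': 'https://schema.org',
    '@type': 'Product',
    name: product.name,
    description: product.description
  };
  const json = JSON.stringify(data).replace(/</g, '\\u003c');
  return '<script type="application/ld+json">' + json + '</script>';
}
```

Calling productJsonLd({ name: 'Example T-shirt', description: 'A plain cotton T-shirt.' }) yields markup that can be placed in the page and then checked with the Structured Data Testing Tool.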

Annotate with Open Graph & Twitter Cards

Why? In addition to the Schema.org metadata it can be helpful to add support for Facebook’s Open Graph protocol and Twitter rich cards as well.

These metadata formats improve the user experience when your content is shared on their corresponding social networks.

If your existing site or web application utilises these formats it’s important to ensure they are included in your progressive web application as well for optimal virality.

Best Practice:

Test your Open Graph markup with the Facebook Object Debugger Tool.

Familiarise yourself with Twitter’s metadata format.

Don’t:

Don’t forget to include these formats if your existing site supports them.
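One way to avoid dropping these formats during a rebuild is to generate them from the same page data in both the old and the new site. The following sketch assumes a hypothetical socialMetaTags helper and a page object with title, url and image fields; it emits the basic Open Graph properties plus a Twitter card type.

```javascript
// Escape a value for use inside a double-quoted HTML attribute.
function escapeAttr(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;');
}

// Emit Open Graph and Twitter card meta elements for a page.
function socialMetaTags(page) {
  const tags = [
    ['property', 'og:title', page.title],
    ['property', 'og:type', 'website'],
    ['property', 'og:url', page.url],
    ['property', 'og:image', page.image],
    ['name', 'twitter:card', 'summary_large_image']
  ];
  return tags.map(function (t) {
    return '<meta ' + t[0] + '="' + t[1] + '" content="' + escapeAttr(t[2]) + '">';
  }).join('\n');
}
```

The output belongs in the page's head and should be present in the server-rendered HTML, since sharing tools typically read the initial response.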

Test with Multiple Browsers

Why? Clearly, from a user perspective it’s important that a website behaves the same across all browsers. While the experience might adapt for different screen sizes, we all expect a mobile site to work the same on similarly sized devices, whether it’s an iPhone or an Android mobile phone.

While the web can be perceived as fragmented due to the number of browsers in use around the world, this variety and competition is part of what makes the web such an innovative platform. Thankfully, web standards have never been more mature than they are now, and modern tools enable developers to build rich, cross-browser compatible websites with confidence.

Best Practice:

Use cross browser testing tools such as BrowserStack.com, Browserling.com or BrowserShots.org to ensure your PWA is cross browser compatible.

Measure Page Load Performance

Why? The faster a website loads for a user the better their user experience will be. Optimizing for page speed is already a well known focus in web development but sometimes when developing a new version of a site the necessary optimizations are not considered a high priority.

When developing a progressive web application we recommend measuring the performance of your page load speed and optimizing before launching the site for the best results.

Best Practice:

Use tools such as PageSpeed Insights and WebPageTest to measure the page load performance of your site. While Googlebot has a bit more patience in rendering, research has shown that 40% of consumers will leave a page that takes longer than three seconds to load.

Read more about our web page performance recommendations and the critical rendering path here.

Don’t:

Avoid leaving optimization as a post-launch step. If your website’s content loads quickly before migrating to a new progressive web application then it’s important to not regress in your optimizations.
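Alongside the tools mentioned above, you can take a rough in-page measurement with the browser's Navigation Timing data. The sketch below is a minimal illustration: summarizeNavigation is a hypothetical helper, and the three-second threshold simply reuses the figure quoted in this section.

```javascript
const SLOW_THRESHOLD_MS = 3000; // three-second figure quoted in the text

// Summarize a navigation timing entry (or any object with the same fields).
function summarizeNavigation(entry) {
  const loadTimeMs = entry.loadEventEnd - entry.startTime;
  return { loadTimeMs: loadTimeMs, slow: loadTimeMs > SLOW_THRESHOLD_MS };
}

// Browser usage: read the navigation entry once the page has loaded.
// The guard keeps this block inert outside a browser environment.
if (typeof window !== 'undefined' && window.performance) {
  window.addEventListener('load', function () {
    // loadEventEnd is only filled in after the load handlers finish,
    // so defer the read by one task.
    setTimeout(function () {
      const entries = window.performance.getEntriesByType('navigation');
      if (entries.length > 0) {
        console.log(summarizeNavigation(entries[0]));
      }
    }, 0);
  });
}
```

Comparing this figure before and after the migration is one way to notice a regression early.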

We hope that the above checklist is useful and provides the right guidance to help you develop your Progressive Web Applications with indexability in mind.

As you get started, be sure to check out our Progressive Web App indexability samples that demonstrate server-side, client-side and hybrid rendering. As always, if you have any questions, please reach out on our Webmaster Forums.

Posted by Tom Greenaway, Developer Advocate

Best practices for bloggers reviewing free products they receive from companies

As a form of online marketing, some companies today will send bloggers free products to review or give away in return for a mention in a blog post. Whether you’re the company supplying the product or the blogger writing the post, below are a few best practices to ensure that this content is both useful to users and compliant with Google Webmaster Guidelines.

- Use the nofollow tag where appropriate

Links that pass PageRank in exchange for goods or services are against Google guidelines on link schemes. Companies sometimes urge bloggers to link back to:

- the company’s site

- the company’s social media accounts

- an online merchant’s page that sells the product

- a review service’s page featuring reviews of the product

- the company’s mobile app on an app store

Bloggers should use the nofollow tag on all such links because these links didn’t come about organically (i.e., the links wouldn’t exist if the company hadn’t offered to provide a free good or service in exchange for a link). Companies, or the marketing firms they’re working with, can do their part by reminding bloggers to use nofollow on these links.
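In HTML, nofollow is set through the rel attribute of a link, as in rel="nofollow". A blogger could check a draft post for links that are missing it with something like the sketch below. It uses Python's standard html.parser module; the class name, function name and sample post are hypothetical. It lists every link without nofollow, so deciding which of those links came from a product arrangement is still up to the author.

```python
from html.parser import HTMLParser


class FollowedLinkFinder(HTMLParser):
    """Collects hrefs of <a> elements whose rel attribute lacks "nofollow"."""

    def __init__(self):
        super().__init__()
        self.followed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        # rel may hold several space-separated tokens, e.g. "nofollow noopener".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if href and "nofollow" not in rel_tokens:
            self.followed.append(href)


def find_followed_links(html):
    """Return the href of every link in `html` that does not carry nofollow."""
    finder = FollowedLinkFinder()
    finder.feed(html)
    return finder.followed


# Hypothetical review post: the first link is marked correctly, the second is not.
post = (
    '<p>Thanks to <a href="https://example.com/" rel="nofollow">Example Co.</a> '
    'for the sample. Buy it <a href="https://shop.example.com/item">here</a>.</p>'
)
print(find_followed_links(post))
```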

- Disclose the relationship

Users want to know when they’re viewing sponsored content. Also, there are laws in some countries that make disclosure of sponsorship mandatory. A disclosure can appear anywhere in the post; however, the most useful placement is at the top in case users don’t read the entire post.

- Create compelling, unique content

The most successful blogs offer their visitors a compelling reason to come back. If you’re a blogger you might try to become the go-to source of information in your topic area, cover a useful niche that few others are looking at, or provide exclusive content that only you can create due to your unique expertise or resources.

For more information, please drop by our Google Webmaster Central Help Forum.

Posted by the Google Webspam Team

First Click Free update

Around ten years ago when we introduced a policy called “First Click Free,” it was hard to imagine that the always-on, multi-screen, multiple device world we now live in would change content consumption so much and so fast. The spirit of the First Click Free effort was – and still is – to help users get access to high quality news with a minimum of effort, while also ensuring that publishers with a paid subscription model get discovered in Google Search and via Google News.

In 2009, we updated the FCF policy to allow a limit of five articles per day, in order to protect publishers who felt some users were abusing the spirit of this policy. Recently we have heard from publishers about the need to revisit these policies to reflect the mobile, multiple device world. Today we are announcing a change to the FCF limit to allow a limit of three articles a day. This change will be valid on both Google Search and Google News.

Google wants to play its part in connecting users to quality news and in connecting publishers to users. We believe the FCF is important in helping achieve that goal, and we will periodically review and update these policies as needed so they continue to benefit users and publishers alike. We are listening and always welcome feedback.

Questions and answers about First Click Free

Q: Do the rest of the old guidelines still apply?

A: Yes, please check the guidelines for Google News as well as the guidelines for Web Search and the associated blog post for more information.

Q: Can I apply First Click Free to only a section of my site / only for Google News (or only for Web Search)?

A: Sure! Just make sure that both Googlebot and users from the appropriate search results can view the content as required. Keep in mind that showing Googlebot the full content of a page while showing users a registration page would be considered cloaking.

Q: Do I have to sign up to use First Click Free?

A: Please let us know about your decision to use First Click Free if you are using it for Google News. There’s no need to inform us of the First Click Free status for Google Web Search.

Q: What is the preferred way to count a user’s accesses?

A: Since there are many different site architectures, we believe it’s best to leave this up to the publisher to decide.
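As one illustration only, a publisher might keep a per-visitor, per-day count of free articles. The sketch below is a minimal in-memory version, assuming the site already has some way to identify a visitor (the visitor_id here is hypothetical, for example a cookie value) and has already determined that the visit came from a Google search result. The class and method names are made up for this example; a real site would likely need persistent storage.

```python
from collections import defaultdict
from datetime import date

# Daily limit announced in this post; the counting method itself is an assumption.
FREE_ARTICLES_PER_DAY = 3


class FreeClickCounter:
    """In-memory count of distinct free articles per visitor per day."""

    def __init__(self, limit=FREE_ARTICLES_PER_DAY):
        self.limit = limit
        self._seen = defaultdict(set)  # (visitor_id, day) -> article URLs

    def allow(self, visitor_id, article_url, day=None):
        """Return True if the visitor may read the full article for free."""
        key = (visitor_id, day or date.today())
        articles = self._seen[key]
        if article_url in articles:
            return True  # re-reading an already counted article
        if len(articles) >= self.limit:
            return False  # over the limit: show the registration page
        articles.add(article_url)
        return True


counter = FreeClickCounter()
today = date(2015, 1, 1)  # arbitrary example date
results = [counter.allow("visitor-1", f"/news/{n}", today) for n in range(4)]
print(results)
```

Counting distinct articles means that reloading an article already read that day does not use up another free click, which is one possible design choice among many.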

(Please see our related blog post for more information on First Click Free for Google News.)

Posted by John Mueller, Google Switzerland

Are you a robot? Introducing “No CAPTCHA reCAPTCHA”

We figured it would be easier to just directly ask our users whether or not they are robots, so we did! We’ve begun rolling out a new API that radically simplifies the reCAPTCHA experience. We’re calling it the “No CAPTCHA reCAPTCHA”.

On websites using this new API, a significant number of users will be able to securely and easily verify they’re human without actually having to solve a CAPTCHA. Instead, with just a single click, they’ll confirm they are not a robot.

A brief history of CAPTCHAs

While the new reCAPTCHA API may sound simple, there is a high degree of sophistication behind that modest checkbox. CAPTCHAs have long relied on the inability of robots to solve distorted text. However, our research recently showed that today’s Artificial Intelligence technology can solve even the most difficult variant of distorted text at 99.8% accuracy. Thus distorted text, on its own, is no longer a dependable test.

To counter this, last year we developed an Advanced Risk Analysis backend for reCAPTCHA that actively considers a user’s entire engagement with the CAPTCHA—before, during, and after—to determine whether that user is a human. This enables us to rely less on typing distorted text and, in turn, offer a better experience for users. We talked about this in our Valentine’s Day post earlier this year.

The new API is the next step in this steady evolution. Now, humans can just check the box and in most cases, they’re through the challenge.

Are you sure you’re not a robot?

However, CAPTCHAs aren’t going away just yet. In cases when the risk analysis engine can’t confidently predict whether a user is a human or an abusive agent, it will prompt a CAPTCHA to elicit more cues, increasing the number of security checkpoints to confirm the user is valid.

Making reCAPTCHAs mobile-friendly

This new API also lets us experiment with new types of challenges that are easier for us humans to use, particularly on mobile devices. In the example below, you can see a CAPTCHA based on a classic Computer Vision problem of image labeling. In this version of the CAPTCHA challenge, you’re asked to select all of the images that correspond with the clue. It’s much easier to tap photos of cats or turkeys than to tediously type a line of distorted text on your phone.

As more websites adopt the new API, more people will see “No CAPTCHA reCAPTCHAs”. Early adopters, like Snapchat, WordPress, Humble Bundle, and several others are already seeing great results with this new API. For example, in the last week, more than 60% of WordPress’ traffic and more than 80% of Humble Bundle’s traffic on reCAPTCHA encountered the No CAPTCHA experience—users got to these sites faster. To adopt the new reCAPTCHA for your website, visit our site to learn more.
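This post does not walk through the integration itself. As a rough sketch of the server-side half, a site typically forwards the token produced by the checkbox to reCAPTCHA's siteverify endpoint and checks the success field of the JSON reply. The helper names below are hypothetical, the secret and token are placeholders, and the official reCAPTCHA documentation remains the authority on parameters and response fields.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def build_verify_request(secret, token):
    """Build the POST request carrying the site secret and the user's token."""
    body = urlencode({"secret": secret, "response": token}).encode("ascii")
    return Request(VERIFY_URL, data=body, method="POST")


def is_human(response_body):
    """Interpret the JSON reply; treat anything unexpected as a failed check."""
    try:
        return json.loads(response_body).get("success") is True
    except (ValueError, AttributeError):
        return False


def verify(secret, token):
    """Send the request over the network and return the verdict."""
    with urlopen(build_verify_request(secret, token), timeout=10) as reply:
        return is_human(reply.read().decode("utf-8"))
```

Keeping the request building and the response parsing separate from the network call makes both easy to test without contacting the service.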

Humans, we’ll continue our work to keep the Internet safe and easy to use. Abusive bots and scripts, it’ll only get worse—sorry we’re (still) not sorry.

Posted by Vinay Shet, Product Manager, reCAPTCHA

Webmaster Academy now available in 22 languages

Today, the new Webmaster Academy goes live in 22 languages! New or beginner webmasters speaking a multitude of languages can now learn the fundamentals of making a great site, providing an enjoyable user experience, and ranking well in search results. And if you think you’re already familiar with these topics, take the quizzes at the end of each module to prove it :).

So give Webmaster Academy a read in your preferred language and let us know in the comments or help forum what you think. We received such great and helpful feedback after the English version launched this past March, so we hope this straightforward and easy-to-read guide can be helpful (and fun!) to everyone.

Let’s get great sites and searchable content up and running around the world.

Posted by Mary Chen, Webmaster Outreach

Introducing the new Webmaster Academy

Our Webmaster Academy is now available with new and targeted content!

Two years ago, Webmaster Academy launched to teach new and beginner webmasters how to make great websites. In addition to adding new content, we’ve now expanded and improved information on three important topics:

- Making a great site that’s valuable to your audience (Module 1)

- Learning how Google sees and understands your site (Module 2)

- Communicating with Google about your site (Module 3)

Enjoy, learn, and share your feedback!

Posted by Mary Chen, Webmaster Outreach Team