Why website security affects SEO rankings (and what you can do about it)

HubSpot found that 82% of consumers surveyed would leave a website that was not secure. Here are four steps to get started on security, and why they will help your SEO.

The post Why website security affects SEO rankings (and what you can do about it) appeared first on Search Engine Watch.

How to use WordPress: Answering 12 common WordPress questions

WordPress is huge. According to the latest stats, WordPress powers almost 35% of the web, and that share is growing quickly. With so many sites using the CMS and so many new sites bursting onto the scene, there are a lot of new users taking their first steps in the wonderful world of WordPress. People from all walks […]

The post How to use WordPress: Answering 12 common WordPress questions appeared first on Yoast.

WordPress SEO

The ONLY tutorial you need to boost your search engine traffic by improving your WordPress SEO. Tips on WordPress SEO plugin usage, structuring your site and optimizing your theme!

The post WordPress SEO appeared first on Yoast.

Why & how to secure your website with the HTTPS protocol

You can find the whole session, about one hour long, in this video:

- What HTTPS encryption is, and why it is important to protect your visitors and yourself,

- How HTTPS enables a more modern web,

- What are the usual complaints about HTTPS, and are they still true today?

- “But HTTPS certificates cost so much money!”

- “But switching to HTTPS will destroy my SEO!”

- “But “mixed content” is such a headache!”

- “But my ad revenue will get destroyed!”

- “But HTTPS is sooooo sloooow!”

- Some practical advice for running the migration, aggregated from:

- The “site move with URL changes” documentation

- General advice on which HTTPS settings to choose (HSTS, encryption key strength, etc.)
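As a rough illustration of the HSTS item above, the sketch below builds a `Strict-Transport-Security` response header. The function name `hsts_header` and its default values are assumptions made for this example, not recommendations taken from the session.

```python
from typing import Tuple


def hsts_header(max_age_days: int = 365,
                include_subdomains: bool = True,
                preload: bool = False) -> Tuple[str, str]:
    """Return a (name, value) pair for an HSTS response header.

    The defaults are illustrative assumptions; choose values that match
    how confident you are that every subdomain can be served over HTTPS.
    """
    if max_age_days < 0:
        raise ValueError("max_age_days must not be negative")
    # max-age is expressed in seconds.
    directives = ["max-age={}".format(max_age_days * 86400)]
    if include_subdomains:
        directives.append("includeSubDomains")
    if preload:
        directives.append("preload")
    return ("Strict-Transport-Security", "; ".join(directives))


if __name__ == "__main__":
    name, value = hsts_header()
    print("{}: {}".format(name, value))
```

A cautious rollout might start with a short `max_age_days` and raise it once the HTTPS migration has proven stable, since browsers remember the policy for the whole period.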

Introducing reCAPTCHA v3: the new way to stop bots

A Frictionless User Experience

Over the last decade, reCAPTCHA has continuously evolved its technology. In reCAPTCHA v1, every user was asked to pass a challenge by reading distorted text and typing into a box. To improve both user experience and security, we introduced reCAPTCHA v2 and began to use many other signals to determine whether a request came from a human or bot. This enabled reCAPTCHA challenges to move from a dominant to a secondary role in detecting abuse, letting about half of users pass with a single click. Now with reCAPTCHA v3, we are fundamentally changing how sites can test for human vs. bot activities by returning a score to tell you how suspicious an interaction is and eliminating the need to interrupt users with challenges at all. reCAPTCHA v3 runs adaptive risk analysis in the background to alert you of suspicious traffic while letting your human users enjoy a frictionless experience on your site.

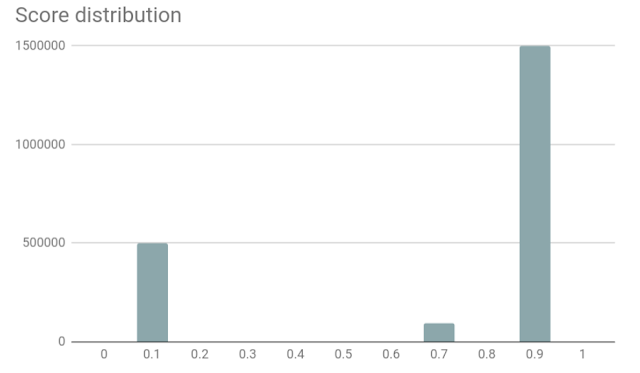

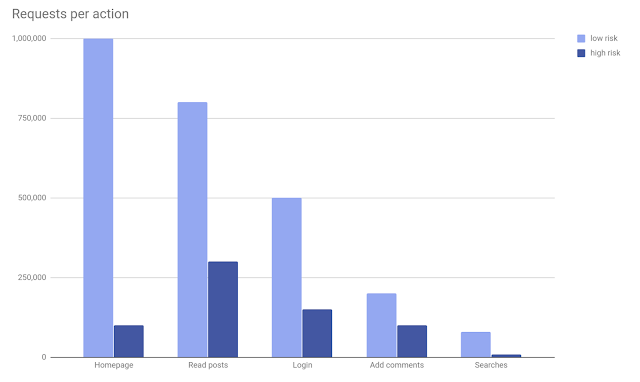

More Accurate Bot Detection with “Actions”

In reCAPTCHA v3, we are introducing a new concept called “Action”—a tag that you can use to define the key steps of your user journey and enable reCAPTCHA to run its risk analysis in context. Since reCAPTCHA v3 doesn’t interrupt users, we recommend adding reCAPTCHA v3 to multiple pages. In this way, the reCAPTCHA adaptive risk analysis engine can identify the pattern of attackers more accurately by looking at the activities across different pages on your website. In the reCAPTCHA admin console, you can get a full overview of reCAPTCHA score distribution and a breakdown for the stats of the top 10 actions on your site, to help you identify which exact pages are being targeted by bots and how suspicious the traffic was on those pages.

Fighting Bots Your Way

Another big benefit that you’ll get from reCAPTCHA v3 is the flexibility to prevent spam and abuse in the way that best fits your website. Previously, the reCAPTCHA system mostly decided when and what CAPTCHAs to serve to users, leaving you with limited influence over your website’s user experience. Now, reCAPTCHA v3 will provide you with a score that tells you how suspicious an interaction is. There are three potential ways you can use the score. First, you can set a threshold that determines when a user is let through or when further verification needs to be done, for example, using two-factor authentication and phone verification. Second, you can combine the score with your own signals that reCAPTCHA can’t access—such as user profiles or transaction histories. Third, you can use the reCAPTCHA score as one of the signals to train your machine learning model to fight abuse. By providing you with these new ways to customize the actions that occur for different types of traffic, this new version lets you protect your site against bots and improve your user experience based on your website’s specific needs.
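The first two uses of the score described above can be sketched as a small decision function. This is a minimal illustration, not Google's implementation: it assumes the score is a number between 0.0 and 1.0 where higher values mean a less suspicious interaction, and the thresholds, the `account_is_trusted` signal, the trust bonus, and the "block" outcome are all hypothetical choices for this example.

```python
def decide(score: float,
           account_is_trusted: bool = False,
           allow_at: float = 0.7,
           block_below: float = 0.3) -> str:
    """Map a reCAPTCHA v3 style score to "allow", "verify", or "block".

    Assumes score is in [0.0, 1.0] and that higher means less suspicious.
    All thresholds are hypothetical and should be tuned per site.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    # Combine the score with a signal of your own (hypothetical here):
    # a long-standing account gets a small benefit of the doubt.
    if account_is_trusted:
        score = min(1.0, score + 0.2)
    if score >= allow_at:
        return "allow"
    if score < block_below:
        return "block"
    # Middle band: ask for further verification, e.g. two-factor
    # authentication or phone verification.
    return "verify"


if __name__ == "__main__":
    for s in (0.9, 0.5, 0.1):
        print(s, decide(s))
```

Keeping the thresholds as parameters makes it easy to use a stricter setting on sensitive actions such as checkout than on low-risk pages.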

In short, reCAPTCHA v3 helps to protect your sites without user friction and gives you more power to decide what to do in risky situations. As always, we are working every day to stay ahead of attackers and keep the Internet easy and safe to use (except for bots).

Ready to get started with reCAPTCHA v3? Visit our developer site for more details.

Posted by Wei Liu, Google Product Manager

Google I/O 2018 – What sessions should SEOs and Webmasters watch live?

However, you don’t have to physically attend the event to take advantage of this once-a-year opportunity: many conferences and talks are live streamed on YouTube for anyone to watch. You will find the full-event schedule here.

Distrust of the Symantec PKI: Immediate action needed by site operators

We previously announced plans to deprecate Chrome’s trust in the Symantec certificate authority (including Symantec-owned brands like Thawte, VeriSign, Equifax, GeoTrust, and RapidSSL). This post outlines how site operators can determine if they’re affected by this deprecation, and if so, what needs to be done and by when. Failure to replace these certificates will result in site breakage in upcoming versions of major browsers, including Chrome.

Chrome 66

If your site is using an SSL/TLS certificate from Symantec that was issued before June 1, 2016, it will stop functioning in Chrome 66, which could already be impacting your users.

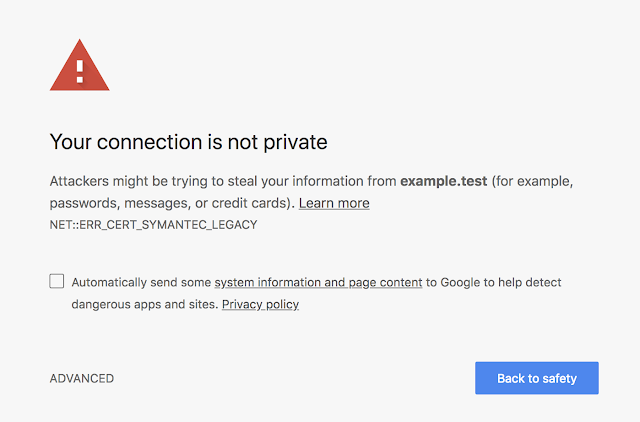

If you are uncertain about whether your site is using such a certificate, you can preview these changes in Chrome Canary to see if your site is affected. If connecting to your site displays a certificate error or a warning in DevTools as shown below, you’ll need to replace your certificate. You can get a new certificate from any trusted CA, including Digicert, which recently acquired Symantec’s CA business.

An example of a certificate error that Chrome 66 users might see if you are using a Legacy Symantec SSL/TLS certificate that was issued before June 1, 2016.

Chrome 66 has already been released to the Canary and Dev channels, meaning affected sites are already impacting users of these Chrome channels. If affected sites do not replace their certificates by March 15, 2018, Chrome Beta users will begin experiencing the failures as well. You are strongly encouraged to replace your certificate as soon as possible if your site is currently showing an error in Chrome Canary.

Chrome 70

Starting in Chrome 70, all remaining Symantec SSL/TLS certificates will stop working, resulting in a certificate error like the one shown above. To check if your certificate will be affected, visit your site in Chrome today and open up DevTools. You’ll see a message in the console telling you if you need to replace your certificate.

The DevTools message you will see if you need to replace your certificate before Chrome 70.

If you see this message in DevTools, you’ll want to replace your certificate as soon as possible. If the certificates are not replaced, users will begin seeing certificate errors on your site as early as July 20, 2018. The first Chrome 70 Beta release will be around September 13, 2018.

Expected Chrome Release Timeline

The table below shows the First Canary, First Beta and Stable Release for Chrome 66 and 70. The first impact from a given release will coincide with the First Canary, reaching a steadily widening audience as the release hits Beta and then ultimately Stable. Site operators are strongly encouraged to make the necessary changes to their sites before the First Canary release for Chrome 66 and 70, and no later than the corresponding Beta release dates.

| Release | First Canary | First Beta | Stable Release |
|---|---|---|---|
| Chrome 66 | January 20, 2018 | ~ March 15, 2018 | ~ April 17, 2018 |
| Chrome 70 | ~ July 20, 2018 | ~ September 13, 2018 | ~ October 16, 2018 |

For information about the release timeline for a particular version of Chrome, you can also refer to the Chromium Development Calendar which will be updated should release schedules change.

In order to address the needs of certain enterprise users, Chrome will also implement an Enterprise Policy that allows disabling the Legacy Symantec PKI distrust starting with Chrome 66. As of January 1, 2019, this policy will no longer be available and the Legacy Symantec PKI will be distrusted for all users.

Special Mention: Chrome 65

As noted in the previous announcement, SSL/TLS certificates from the Legacy Symantec PKI issued after December 1, 2017 are no longer trusted. This should not affect most site operators, as it requires entering into a special agreement with DigiCert to obtain such certificates. Accessing a site serving such a certificate will fail and the request will be blocked as of Chrome 65. To avoid such errors, ensure that such certificates are only served to legacy devices and not to browsers such as Chrome.
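The issuance-date rules in this post can be summarized in a few lines of code. The sketch below is only a restatement of those dates, not a certificate checker: the function name is hypothetical, and the caller is assumed to already know whether the certificate chains to the Legacy Symantec PKI, which this code does not inspect.

```python
from datetime import date
from typing import Optional

# Cutoff dates as stated in the post.
ISSUED_BEFORE_FOR_CHROME_66 = date(2016, 6, 1)
ISSUED_AFTER_FOR_CHROME_65 = date(2017, 12, 1)


def first_distrusting_chrome(issued: date,
                             legacy_symantec: bool) -> Optional[int]:
    """Return the first Chrome version that distrusts the certificate.

    Returns None when the certificate is not from the Legacy Symantec
    PKI (a fact the caller must supply; it is not detected here).
    """
    if not legacy_symantec:
        return None
    if issued > ISSUED_AFTER_FOR_CHROME_65:
        return 65  # issued after December 1, 2017
    if issued < ISSUED_BEFORE_FOR_CHROME_66:
        return 66  # issued before June 1, 2016
    return 70      # all remaining Legacy Symantec certificates


if __name__ == "__main__":
    print(first_distrusting_chrome(date(2015, 3, 1), True))
```

The Enterprise Policy mentioned above is deliberately left out of the sketch, since it only delays the distrust for managed installations.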

Posted by Devon O’Brien, Ryan Sleevi, Emily Stark, Chrome security team

Migrating HTTP to HTTPS: A step-by-step guide

On February 8, 2018, Google announced that, beginning in July of this year, Chrome will mark all HTTP sites as ‘not secure’. If you have yet to make the switch, we’ve put together this guide to help you migrate to HTTPS.

10 tips to make your Magento online store more secure

An estimated 240,000 ecommerce stores use Magento for their online operations, which accounts for nearly 30% of the ecommerce platform market. That popularity shows Magento is a worthwhile platform, but it also makes it a prime target for cyber criminals across the globe.

Moving your website to HTTPS / SSL: tips & tricks

In 2014, we decided to switch over to the (now) commonly used HTTPS to encrypt sensitive data that’s being sent across our website. This post describes some useful tips based on our own experiences that might come in handy if you’re considering switching. A little backstory: back in 2014, HTTPS became a hot topic after the Heartbleed […]

Periodic Account Access Review; Add it to Your To Do List!

Do you review who has access to your business’s online accounts, such as Twitter, Google Analytics, and email? Former employees’ access to a business’s online accounts presents a huge risk. Periodic account access review is crucial, as Rishi Lakhani explains in this guest post.

Post from State of Digital Guest Contributor

Why I’m Blaming WordPress For Its Own Security Flaws

The WordPress CMS has some major security flaws that can be fixed easily. Barry Adams lists some of these flaws and proposes straightforward fixes to help prevent hacks and make WordPress sites more secure.

Post from Barry Adams

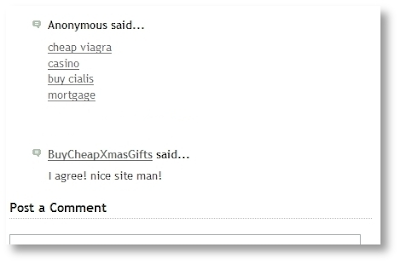

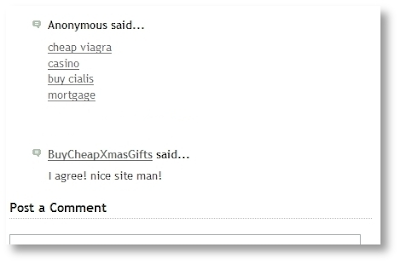

Protect your site from user generated spam

As a website owner, you might have come across some auto-generated content in comments sections or forum threads. When such content is created on your pages, not only does it disrupt those visiting your site, but it also shows Google and other search engines content that you may not want associated with your site.

In this blog post, we will give you tips to help you deal with this type of spam on your site and forum.

Some spammers abuse sites owned by others by posting deceiving content and links, in an attempt to get more traffic to their sites. Here are a few examples:

Comments and forum threads can be a really good source of information and an efficient way of engaging a site’s users in discussions. This valuable content should not be buried by auto-generated keywords and links placed there by spammers.

There are many ways of securing your site’s forums and comment threads and making them unattractive to spammers:

- Keep your forum software updated and patched. Take the time to keep your software up-to-date and pay special attention to important security updates. Spammers take advantage of security issues in older versions of blogs, bulletin boards, and other content management systems.

- Add a CAPTCHA. CAPTCHAs require users to prove that they are human beings and not automated scripts. One way to do this is to use a service like reCAPTCHA, Securimage, or Jcaptcha.

- Block suspicious behavior. Many forums allow you to set time limits between posts, and you can often find plugins to look for excessive traffic from individual IP addresses or proxies and other activity more common to bots than human beings. For example, phpBB, Simple Machines, myBB, and many other forum platforms enable such configurations.

- Check your forum’s top posters on a daily basis. If a user joined recently and has an excessive number of posts, you should probably review their profile and make sure that their posts and threads are not spammy.

- Consider disabling some types of comments. For example, it’s a good practice to close some very old forum threads that are unlikely to get legitimate replies. If you plan on not monitoring your forum going forward and users are no longer interacting with it, turning off posting completely may prevent spammers from abusing it.

- Make good use of moderation capabilities. Consider enabling moderation features that require users to have a certain reputation before links can be posted, or that hold comments with links for moderation. If possible, change your settings so that you disallow anonymous posting and make posts from new users require approval before they’re publicly visible. Moderators, together with your friends/colleagues and some other trusted users, can help you review and approve posts while spreading the workload. Keep an eye on your forum’s new users by looking at their posts and activities on your forum.

- Consider blacklisting obviously spammy terms. Block obviously inappropriate comments with a blacklist of spammy terms (e.g. illegal streaming or pharma-related terms). Add inappropriate and off-topic terms that are only used by spammers, and learn from the spam posts that you often see on your forum or other forums. Built-in features or plugins can delete or mark comments as spam for you.

- Use the “nofollow” attribute for links in the comment field. This will deter spammers from targeting your site. By default, many blogging sites (such as Blogger) automatically add this attribute to any posted comments.

- Use automated systems to defend your site. Comprehensive systems like Akismet, which has plugins for many blogs and forum systems, are easy to install and do most of the work for you.
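Several of the tips above (time limits between posts, a blacklist of spammy terms, and holding comments with links for moderation) can be sketched in a few lines. This is a minimal illustration under stated assumptions: the term list, the 30-second interval, and the function name are hypothetical, and real forum software or plugins will do this more thoroughly.

```python
import re
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical values for illustration; build the real list from the
# spam you actually see on your forum.
BLOCKED_TERMS = {"free streaming", "cheap pills"}
MIN_INTERVAL = timedelta(seconds=30)
LINK_PATTERN = re.compile(r"https?://", re.IGNORECASE)


def moderation_reasons(text: str,
                       posted_at: datetime,
                       previous_post_at: Optional[datetime] = None) -> List[str]:
    """Return the reasons a comment should be held for moderation.

    An empty list means none of these simple checks fired.
    """
    reasons = []
    lowered = text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        reasons.append("blocked term")
    if previous_post_at is not None and posted_at - previous_post_at < MIN_INTERVAL:
        reasons.append("posting too fast")
    if LINK_PATTERN.search(text):
        reasons.append("contains link")
    return reasons


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, 0)
    print(moderation_reasons("Great post, thanks!", now))
```

Returning reasons rather than a yes/no answer lets moderators see why a comment was held, which helps when tuning the term list.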

For detailed information about these topics, check out our Help Center document on User Generated Spam and comment spam. You can also visit our Webmaster Central Help Forum if you need any help.

Posted by Anouar Bendahou, Search Quality Strategist, Google Ireland

My bullets are green, but my post doesn’t rank?!

The Yoast SEO plugin helps you to easily optimize the text of your post. This could definitely result in higher rankings. But unfortunately, green bullets do not magically put you on top of the search results. In this post, I’ll discuss a number of possible reasons why a post doesn’t rank, even though the text has […]

Ask Yoast: security measures new domain

There are several reasons to move your website to a new domain. Maybe you’ve gained access to a much stronger domain. Perhaps you’re changing direction or you’re rebranding. Or you’d like to start over with a new name and a new site. Assuming you have a good reason for moving your site to a new […]

Here’s to more HTTPS on the web!

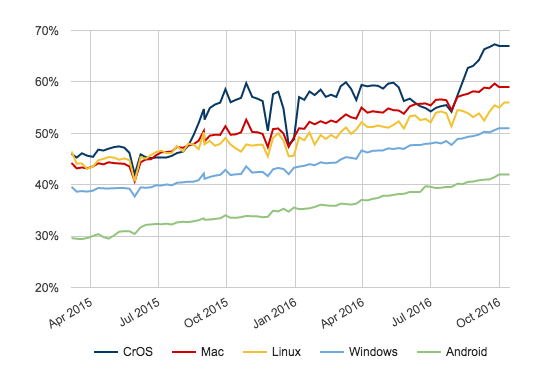

Security has always been critical to the web, but challenges involved in site migration have inhibited HTTPS adoption for several years. In the interest of a safer web for all, at Google we’ve worked alongside many others across the online ecosystem to better understand and address these challenges, resulting in real change. A web with ubiquitous HTTPS is not the distant future. It’s happening now, with secure browsing becoming standard for users of Chrome.

Today, we’re adding a new section to the HTTPS Report Card in our Transparency Report that includes data about how HTTPS usage has been increasing over time. More than half of pages loaded and two-thirds of total time spent by Chrome desktop users occur via HTTPS, and we expect these metrics to continue their strong upward trajectory.

Posted by Adrienne Porter Felt and Emily Schechter, Chrome Security Team

Google comes under fire for its privacy policy change

Earlier this year, Google made a change to its privacy policy that is now drawing criticism from privacy proponents.

More Safe Browsing Help for Webmasters

(Crossposted from the Google Security Blog.)

For more than nine years, Safe Browsing has helped webmasters via Search Console with information about how to fix security issues with their sites. This includes relevant Help Center articles, example URLs to assist in diagnosing the presence of harmful content, and a process for webmasters to request reviews of their site after security issues are addressed. Over time, Safe Browsing has expanded its protection to cover additional threats to user safety such as Deceptive Sites and Unwanted Software.

To help webmasters be even more successful in resolving issues, we’re happy to announce that we’ve updated the information available in Search Console in the Security Issues report.

The updated information provides more specific explanations of six different security issues detected by Safe Browsing, including malware, deceptive pages, harmful downloads, and uncommon downloads. These explanations give webmasters more context and detail about what Safe Browsing found. We also offer tailored recommendations for each type of issue, including sample URLs that webmasters can check to identify the source of the issue, as well as specific remediation actions webmasters can take to resolve the issue.

We on the Safe Browsing team definitely recommend registering your site in Search Console even if it is not currently experiencing a security issue. We send notifications through Search Console so webmasters can address any issues that appear as quickly as possible.

Our goal is to help webmasters provide a safe and secure browsing experience for their users. We welcome any questions or feedback about the new features on the Google Webmaster Help Forum, where Top Contributors and Google employees are available to help.

For more information about Safe Browsing’s ongoing work to shine light on the state of web security and encourage safer web security practices, check out our summary of trends and findings on the Safe Browsing Transparency Report. If you’re interested in the tools Google provides for webmasters and developers dealing with hacked sites, this video provides a great overview.

Posted by Kelly Hope Harrington, Safe Browsing Team