Closing down for a day

Note: This post is specific to Google’s organic web-search. For Google’s other services, please check with the appropriate help center (e.g., for Google Shopping) or help forum.

Even in today’s “always-on” world, sometimes businesses want to take a break. There are times when even their online presence needs to be paused. This blog post covers some of the available options so that a site’s search presence isn’t affected.

Option: Block cart functionality

If a site only needs to block users from buying things, the simplest approach is to disable that specific functionality. In most cases, shopping cart pages can either be blocked from crawling through the robots.txt file, or blocked from indexing with a robots meta tag. Since search engines either won’t see or index that content, you can communicate this to users in an appropriate way. For example, you may disable the link to the cart, add a relevant message, or display an informational page instead of the cart.

Option: Always show interstitial or pop-up

If you need to block the whole site from users, be it with a “temporarily unavailable” message, informational page, or popup, the server should return a 503 HTTP result code (“Service Unavailable”). The 503 result code makes sure that Google doesn’t index the temporary content that’s shown to users. Without the 503 result code, the interstitial would be indexed as your website’s content.

Googlebot will retry pages that return 503 for up to about a week before treating the error as permanent, which can result in those pages being dropped from the search results. You can also include a “Retry-After” header to indicate how long the site will be unavailable. Blocking a site for longer than a week can have negative effects on the site’s search results regardless of the method that you use.
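
As a rough illustration of the 503 approach, here is a minimal sketch of a request handler for Node's built-in http module. The one-day `RETRY_AFTER_SECONDS` value, the handler name, and the page text are assumptions made for this example, not recommendations from the post.

```javascript
// Sketch of a maintenance-mode handler for Node's built-in http module.
// RETRY_AFTER_SECONDS and the page text are illustrative assumptions.
const RETRY_AFTER_SECONDS = 24 * 60 * 60; // one day

function maintenanceHandler(req, res) {
  const body = '<!doctype html><title>Temporarily unavailable</title>' +
    '<h1>We are closed today</h1><p>Please check back tomorrow.</p>';
  // 503 marks the outage as temporary; Retry-After hints when to come back.
  res.writeHead(503, {
    'Content-Type': 'text/html; charset=utf-8',
    'Retry-After': String(RETRY_AFTER_SECONDS),
  });
  res.end(body);
}
```

To try it, pass the handler to `require('http').createServer(maintenanceHandler).listen(8080)` (the port is arbitrary). Every URL then gets the same informational page together with the 503 status.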

Option: Switch whole website off

Turning the server off completely is another option. You might also do this if you’re physically moving your server to a different data center. For this, have a temporary server available to serve a 503 HTTP result code for all URLs (with an appropriate informational page for users), and switch your DNS to point to that server during that time.

- Set your DNS TTL to a low time (such as 5 minutes) a few days in advance.

- Change the DNS to the temporary server’s IP address.

- Take your main server offline once all requests go to the temporary server.

- … your server is now offline …

- When ready, bring your main server online again.

- Switch DNS back to the main server’s IP address.

- Change the DNS TTL back to normal.

We hope these options cover the common situations where you’d need to disable your website temporarily. If you have any questions, feel free to drop by our webmaster help forums!

PS If your business is active locally, make sure to reflect these closures in the opening hours for your local listings too!

Posted by John Mueller, Webmaster Trends Analyst, Switzerland

Introducing the Mobile-Friendly Test API

With so many users on mobile devices, having a mobile-friendly web is important to us all. The Mobile-Friendly Test is a great way to check individual pages manually. We’re happy to announce that this test is now available via API as well. The Mobile-…

Protect your site from user generated spam

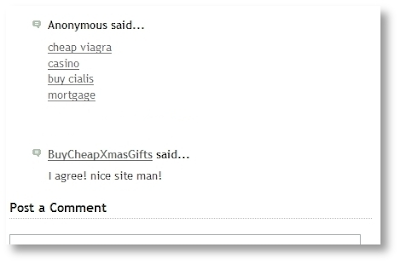

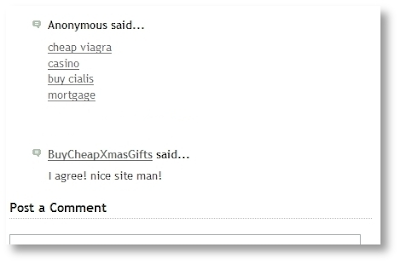

As a website owner, you might have come across some auto-generated content in comments sections or forum threads. When such content is created on your pages, not only does it disrupt those visiting your site, but it also shows some content that you may not want to be associated with your site to Google and other search engines.

In this blog post, we will give you tips to help you deal with this type of spam in your site and forum.

Some spammers abuse sites owned by others by posting deceiving content and links, in an attempt to get more traffic to their sites. Here are a few examples:

Comments and forum threads can be a really good source of information and an efficient way of engaging a site’s users in discussions. This valuable content should not be buried by auto-generated keywords and links placed there by spammers.

There are many ways of securing your site’s forums and comment threads and making them unattractive to spammers:

- Keep your forum software updated and patched. Take the time to keep your software up-to-date and pay special attention to important security updates. Spammers take advantage of security issues in older versions of blogs, bulletin boards, and other content management systems.

- Add a CAPTCHA. CAPTCHAs require users to prove that they’re a human being and not an automated script. One way to do this is to use a service like reCAPTCHA, Securimage, or JCaptcha.

- Block suspicious behavior. Many forums allow you to set time limits between posts, and you can often find plugins to look for excessive traffic from individual IP addresses or proxies and other activity more common to bots than human beings. For example, phpBB, Simple Machines, myBB, and many other forum platforms enable such configurations.

- Check your forum’s top posters on a daily basis. If a user joined recently and has an excessive amount of posts, then you probably should review their profile and make sure that their posts and threads are not spammy.

- Consider disabling some types of comments. For example, it’s a good practice to close some very old forum threads that are unlikely to get legitimate replies. If you plan on not monitoring your forum going forward and users are no longer interacting with it, turning off posting completely may prevent spammers from abusing it.

- Make good use of moderation capabilities. Consider enabling moderation features that require users to have a certain reputation before they can post links, or that hold comments with links for moderation. If possible, change your settings so that you disallow anonymous posting and make posts from new users require approval before they’re publicly visible. Moderators, together with your friends or colleagues and some other trusted users, can help you review and approve posts while spreading the workload. Keep an eye on your forum’s new users by looking at their posts and activities on your forum.

- Consider blacklisting obviously spammy terms. Block obviously inappropriate comments with a blacklist of spammy terms (e.g. illegal streaming or pharma-related terms). Add inappropriate and off-topic terms that are only used by spammers, and learn from the spam posts that you often see on your forum or other forums. Built-in features or plugins can delete or mark comments as spam for you.

- Use the “nofollow” attribute for links in the comment field. This will deter spammers from targeting your site. By default, many blogging sites (such as Blogger) automatically add this attribute to any posted comments.

- Use automated systems to defend your site. Comprehensive systems like Akismet, which has plugins for many blogs and forum systems, are easy to install and do most of the work for you.
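
Two of the tips above, the term blacklist and the “nofollow” attribute, can be sketched in a few lines of JavaScript. The term list and both function names are hypothetical, and the regular expression is only a rough stand-in for the HTML sanitizer that a real comment system would use.

```javascript
// Hypothetical blocklist; build yours from the spam you actually see.
const SPAM_TERMS = ['cheap pills', 'free movie stream'];

// Returns true when the comment contains any blocklisted term (case-insensitive).
function looksSpammy(comment, terms = SPAM_TERMS) {
  const text = comment.toLowerCase();
  return terms.some((term) => text.includes(term.toLowerCase()));
}

// Adds rel="nofollow" to anchor tags that have no rel attribute yet.
// A regex is enough for a sketch; real HTML should go through a proper parser.
function addNofollow(html) {
  return html.replace(/<a\b(?![^>]*\brel=)([^>]*)>/gi, '<a$1 rel="nofollow">');
}
```

A comment flagged by `looksSpammy` could be held for moderation rather than deleted outright, which keeps false positives recoverable.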

For detailed information about these topics, check out our Help Center document on User Generated Spam and comment spam. You can also visit our Webmaster Central Help Forum if you need any help.

Posted by Anouar Bendahou, Search Quality Strategist, Google Ireland

What Crawl Budget Means for Googlebot

Recently, we’ve heard a number of definitions for “crawl budget”, however we don’t have a single term that would describe everything that “crawl budget” stands for externally. With this post we’ll clarify what we actually have and what it means for Goo…

Enhancing property sets to cover more reports in Search Console

Since initially announcing property sets earlier this year, one of the most popular requests has been to expand this functionality to more sections of Search Console. Thanks to your feedback, we’re now expanding property sets to more features! Propert…

An update on Google’s feature-phone crawling & indexing

Limited mobile devices, “feature-phones”, require a special form of markup or a transcoder for web content. Most websites don’t provide feature-phone-compatible content in WAP/WML any more. Given these developments, we’ve made changes in how we crawl f…

Saying goodbye to Content Keywords

In the early days – back when Search Console was still called Webmaster Tools – the content keywords feature was the only way to see what Googlebot found when it crawled a website. It was useful to see that Google was able to crawl your pages at all, o…

Rich Cards expands to more verticals

At Google I/O in May, we launched Rich Cards for Movies and Recipes, creating a new way for site owners to present previews of their content on the Search results page. Today, we’re expanding to two new verticals for US-based sites: Local restaurants and Online courses.

Evolution of search results for queries like [best New Orleans restaurants] and [leadership courses]: with rich cards, results are presented in new UIs, like carousels that are easy to browse by scrolling left and right, or a vertical three-pack that displays more individual courses

By building Rich Cards, you have a new opportunity to attract more engaged users to your page. Users can swipe through restaurant recommendations from sites like TripAdvisor, Thrillist, Time Out, Eater, and 10Best. In addition to food, users can browse through courses from sites like Coursera, LinkedIn Learning, EdX, Harvard, Udacity, FutureLearn, Edureka, Open University, Udemy, Canvas Network, and NPTEL.

If you have a site that contains local restaurant information or offers online courses, check out our developer docs to start building Rich Cards in the Local restaurant and Online courses verticals.

While AMP HTML is not required for Local restaurant pages and Online Courses rich cards, AMP provides Google Search users with a consistently fast experience, so we recommend that you create AMP pages to further engage users. Users consuming AMP’d content will be able to swipe near instantly from restaurant to restaurant or from recipe to recipe within your site.

Users who tap on your Rich Card will be taken near instantly to your AMP page, and be able to swipe between pages within your site.

Check out our developer site for implementation details.

To make it easier for you to create Rich Cards, we made some changes in our tools:

- The Structured Data Testing Tool displays markup errors and a preview card for Local restaurant content as it might appear on Search.

- The Rich Cards report in Search Console shows which cards across verticals contain errors, and which ones could be enhanced with more markup.

- The AMP Test helps validate AMP pages as well as the markup on the page.

What’s next?

We are actively experimenting with new verticals globally to provide more opportunities for you to display richer previews of your content.

If you have questions, find us in the dedicated Structured data section of our forum, on Twitter or on Google+.

Post by Stacie Chan, Global Product Partnerships

Building Indexable Progressive Web Apps

Progressive Web Apps (PWAs) are taking advantage of new technologies to bring the best of mobile sites and native applications to users — and they’re one of the most exciting new ideas on the web. But to truly have an impact, it’s important that they’re indexable and linkable. Every recommendation presented in this article is an existing best practice for indexability — regardless of whether you’re building a Progressive Web App or a simple static website. Nonetheless, we have collated these best practices to provide a checklist to guide you:

Make Your Content Crawlable

Why? Historically, websites would always generate or render their HTML on the server, which is the simplest way to ensure your content is directly linkable. Web applications popularised the concept of client-side rendering, in which content is updated dynamically on the page as the user navigates, without requiring the page to be reloaded.

The modern approach is hybrid rendering, in which server-side rendering is used when a user navigates directly to a URL and client-side rendering is used after the initial page load for subsequent navigation and asynchronous requests.

Our server-side PWA sample demonstrates pure server-side rendering, while our hybrid PWA sample demonstrates the combined approach.

If you are unfamiliar with the server-side and client-side rendering terminology, check out these articles on the web here and here.

Best Practice:

Use server-side or hybrid rendering so users receive the content in the initial payload of their web request.

Always ensure your URLs are independently accessible:

https://www.example.com/product/25/

The above should deep link to that particular resource.

If you can’t support server-side or hybrid rendering for your Progressive Web App and you decide to use client-side rendering, we recommend using the Google Search Console “Fetch as Google” tool to verify your content successfully renders for our search crawler.

Don’t:

Don’t redirect users accessing deep links back to your web app’s homepage.

Additionally, serving an error page to users instead of deep linking should also be avoided.
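
To make the deep-linking advice concrete, here is a small sketch of a server-side route function. The `PRODUCTS` catalogue, the `renderPath` name, and the markup are all invented for the example. The point is only that a product URL returns its own content in the initial response instead of redirecting to the homepage.

```javascript
// Hypothetical in-memory catalogue standing in for a database.
const PRODUCTS = { 25: { name: 'Blue T-Shirt' } };

// Returns { status, html } for a request path.
function renderPath(path) {
  const match = /^\/product\/(\d+)\/$/.exec(path);
  if (match && PRODUCTS[match[1]]) {
    const product = PRODUCTS[match[1]];
    // The content is in the initial HTML payload; client-side code can take over later.
    return { status: 200, html: `<h1>${product.name}</h1><div id="app"></div>` };
  }
  if (path === '/') {
    return { status: 200, html: '<h1>Home</h1>' };
  }
  // A URL that matches no resource gets an honest 404, not a redirect to the homepage.
  return { status: 404, html: '<h1>Not found</h1>' };
}
```

In a hybrid setup, the same function could serve the first request while client-side code handles later navigation.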

Provide Clean URLs

Why? Fragment identifiers (#user/24601/ or #!user/24601/) were an effective workaround for browsers to AJAX new content from a server without reloading the page. This design is known as client-side rendering.

However, the fragment identifier syntax isn’t compatible with some web tools, frameworks and protocols such as Facebook’s Open Graph protocol.

The History API enables us to update the URL without fragment identifiers while still fetching resources asynchronously and therefore avoiding page reloads — it’s the best of both worlds. The AJAX crawling scheme (with its #! / escaped-fragment URLs) made sense at its time, but is now no longer recommended.

Our hybrid PWA and client-side PWA samples demonstrate the History API.

Best Practice:

Provide clean URLs without fragment identifiers (# or #!) such as:

https://www.example.com/product/25/

If using client-side or hybrid rendering be sure to support browser navigation with the History API.

Avoid:

Using the #! URL structure to drive unique URLs is discouraged:

https://www.example.com/#!product/25/

It was introduced as a workaround before the advent of the History API. It is considered a separate pattern to the purely # URL structure.

Don’t:

Using the # URL structure without the accompanying ! symbol is unsupported:

https://www.example.com/#product/25/

This URL structure is already a concept in the web and relates to deep linking into content on a particular page.
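
The following sketch shows one way the move from #! URLs to clean URLs with the History API might look. Both function names are hypothetical, and `render` stands for whatever function your app uses to fetch and display the content for a path.

```javascript
// Converts a legacy "#!" URL into its clean equivalent, e.g. to redirect old links.
function cleanUrlFromHashbang(url) {
  const parsed = new URL(url);
  if (!parsed.hash.startsWith('#!')) return url;
  const path = parsed.hash.slice(2);
  parsed.hash = '';
  parsed.pathname = path.startsWith('/') ? path : '/' + path;
  return parsed.toString();
}

// Client-side navigation: update the address bar with pushState, then render.
// `render` is a hypothetical function supplied by the app.
function navigate(path, render, history = window.history) {
  history.pushState({ path }, '', path);
  render(path);
}
```

In the browser you would also listen for the `popstate` event and call `render` with the current path, so that the Back and Forward buttons keep working.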

Specify Canonical URLs

Why? The best way to eliminate confusion for indexing when the same content is available under multiple URLs (be it the same or different domains) is to mark one page as the canonical, and all other pages that duplicate that content to refer to it.

Best Practice:

Include the following tag across all pages mirroring a particular piece of content:

<link rel="canonical" href="https://www.example.com/your-url/" />

If you are supporting Accelerated Mobile Pages be sure to correctly use its counterpart rel=”amphtml” instruction as well.

Avoid:

Avoid purposely duplicating content across multiple URLs and not using the rel=”canonical” link element.

For example, the rel=”canonical” link element can reduce ambiguity for URLs with tracking parameters.

Don’t:

Avoid creating conflicting canonical references between your pages.
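
As a sketch of the tracking-parameter case, the helper below derives a canonical URL by dropping parameters that do not change the content. Which parameters count as tracking is an assumption (`utm_*` and `gclid` here), so adjust the list to your own site.

```javascript
// Assumption: these patterns identify tracking parameters on your site.
const TRACKING_PARAMS = [/^utm_/, /^gclid$/];

// Strips tracking parameters and the fragment from a URL.
function canonicalUrl(url) {
  const parsed = new URL(url);
  for (const key of [...parsed.searchParams.keys()]) {
    if (TRACKING_PARAMS.some((re) => re.test(key))) {
      parsed.searchParams.delete(key);
    }
  }
  parsed.hash = '';
  return parsed.toString();
}

// Builds the link element, escaping "&" for use inside an HTML attribute.
function canonicalLinkTag(url) {
  const href = canonicalUrl(url).replace(/&/g, '&amp;');
  return `<link rel="canonical" href="${href}" />`;
}
```

Every variant of a page, with or without tracking parameters, would then emit the same canonical link element.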

Design for Multiple Devices

Why? It’s important that all your users get the best experience possible when viewing your website, regardless of their device.

Make your site responsive in its design — fonts, margins, paddings, buttons and general design of your site should scale dynamically based on screen resolutions and device viewports.

Small images scaled up for desktop or tablet devices give a poor experience. Conversely, super high resolution images take a long time to download on mobile phones and may impact mobile scroll performance.

Read more UX for PWAs here.

Best Practice:

Use “srcset” attribute to fetch different resolution images for different density screens to avoid downloading images larger than the device’s screen is capable of displaying.

Scale your font size and line height to ensure your text is legible no matter the size of the device. Similarly ensure the padding and margins of elements also scale sensibly.

Test various screen resolutions using the Chrome Developer Tool’s Device Mode feature and Mobile Friendly Test tool.

Don’t:

Don’t show different content to users than you show to Google. If you use redirects or user agent detection (a.k.a. browser sniffing or dynamic serving) to alter the design of your site for different devices it’s important that the content itself remains the same.

Use the Search Console “Fetch as Google” tool to verify the content fetched by Google matches the content a user sees.

For usability reasons, avoid using fixed-size fonts.
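
For the “srcset” recommendation, a template helper along these lines could generate the markup. The `@2x` file-naming scheme and the function name are assumptions about how your images are stored, not part of any standard.

```javascript
// Builds an <img> tag with density descriptors (1x, 2x, ...).
// The "@2x" file naming is an assumption about how images are stored.
function imgWithSrcset(basePath, ext, alt, densities = [1, 2, 3]) {
  const srcset = densities
    .map((d) => `${basePath}${d === 1 ? '' : `@${d}x`}.${ext} ${d}x`)
    .join(', ');
  // src remains the fallback for browsers without srcset support.
  return `<img src="${basePath}.${ext}" srcset="${srcset}" alt="${alt}">`;
}
```

The browser picks the candidate that matches the screen density, so a 1x phone never downloads the 3x file.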

Develop Iteratively

Why? One of the safest paths to take when adding features to a web application is to make changes iteratively. If you add features one at a time you can observe the impact of each individual change.

Alternatively many developers prefer to view their progressive web application as an opportunity to overhaul their mobile site in one fell swoop — developing the new web app in an isolated environment and swapping it with their existing mobile site once ready.

When developing features iteratively try to break the changes into separate pieces. For example, if you intend to move from server-side rendering to hybrid rendering then tackle that as a single iteration — rather than in combination with other features.

Both approaches have their own pros and cons. Iterating reduces the complexity of dealing with search indexability as the transition is continuous. However, iterating might result in a slower development process and potentially a less innovative overhaul if development is not starting from scratch.

In either case, the most sensitive areas to keep an eye on are your canonical URLs and your site’s robots.txt configuration.

Best Practice:

Iterate on your website incrementally by adding new features piece by piece.

For example, if you don’t support HTTPS yet then start by migrating to a secure site.

Avoid:

If you’ve developed your progressive web app in an isolated environment, then avoid launching it without checking that the rel-canonical links and robots.txt are set up appropriately.

Ensure your rel-canonical links point to the real site and that your robots.txt configuration allows crawlers to crawl your new site.

Don’t:

It’s logical to prevent crawlers from indexing your in-development site before launch but don’t forget to unblock crawlers from accessing your new site when you launch.

Use Progressive Enhancement

Why? Wherever possible it’s important to detect browser features before using them. Feature detection is also better than testing for browsers that you believe support a given feature.

A common bad practice in the past was to enable or disable features by testing which browser the user had. However, as browsers are constantly evolving with features this technique is strongly discouraged.

Service Worker is a relatively new technology and it’s important to not break compatibility in the pursuit of progress — it’s a perfect example of when to use progressive enhancement.

Best Practice:

Before registering a Service Worker check for the availability of its API:

if ('serviceWorker' in navigator) {

...

Use per-API feature detection for all of your website’s features.

Don’t:

Never use the browser’s user agent to enable or disable features in your web app. Always check whether the feature’s API is available and gracefully degrade if unavailable.

Avoid updating or launching your site without testing across multiple browsers! Check your site analytics to learn which browsers are most popular among your user base.
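
A fuller version of the Service Worker check might look like the sketch below. The `/sw.js` path and the function name are hypothetical. The `nav` parameter exists only so the function can be exercised outside a browser.

```javascript
// Registers a service worker only when the API exists.
// '/sw.js' is a hypothetical script path; `nav` defaults to the browser's navigator.
function registerServiceWorker(scriptUrl = '/sw.js', nav = globalThis.navigator) {
  if (nav && 'serviceWorker' in nav) {
    return nav.serviceWorker.register(scriptUrl);
  }
  // No Service Worker support: the site keeps working without offline features.
  return Promise.resolve(null);
}
```

Because the check looks at the API rather than the user agent string, it keeps working as browsers add or drop support.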

Test with Search Console

Why? It’s important to understand how Google Search views your site’s content. You can use Search Console to fetch individual URLs from your site and see how Google Search views them using the “Crawl > Fetch as Google” feature. Search Console will process your JavaScript and render the page when that option is selected; otherwise only the raw HTML response is shown.

Google Search Console also analyses the content on your page in a variety of ways including detecting the presence of Structured Data, Rich Cards, Sitelinks & Accelerated Mobile Pages.

Best Practice:

Monitor your site using Search Console and explore its features including “Fetch as Google”.

Provide a Sitemap via Search Console “Crawl > Sitemaps”. It can be an effective way to ensure Google Search is aware of all your site’s pages.
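For illustration, a Sitemap file in the sitemaps.org XML format could be generated along these lines. The helper names `escapeXml` and `buildSitemap` are hypothetical, and the sketch emits only the required `<loc>` element for each URL.

```javascript
// Escape characters that have a special meaning in XML.
function escapeXml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build a minimal XML sitemap from a list of absolute URLs.
function buildSitemap(urls) {
  const entries = urls.map((url) => `  <url><loc>${escapeXml(url)}</loc></url>`);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    '</urlset>',
  ].join('\n');
}

console.log(buildSitemap([
  'https://www.example.com/',
  'https://www.example.com/product/25/',
]));
```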

Annotate with Schema.org structured data

Why? Schema.org structured data is a flexible vocabulary for summarizing the most important parts of your page as machine-processable data. This can be as general as simply saying that a page is a NewsArticle, or as specific as detailing the location, band name, venue and ticket vendor for a touring band, or summarizing the ingredients and steps for a recipe.

The use of this metadata may not make sense for every page on your web application but it’s recommended where it’s sensible. Google extracts it after the page is rendered.

There are a variety of data types including “NewsArticle”, “Recipe” & “Product” to name a few. Explore all the supported data types here.

Best Practice:

Verify that your Schema.org metadata is correct using Google’s Structured Data Testing Tool.

Check that the data you provided is appearing and there are no errors present.

Don’t:

Avoid using a data type that doesn’t match your page’s actual content. For example, don’t use “Recipe” for a T-shirt you’re selling; use “Product” instead.
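As one possible illustration, a “Product” annotation could be emitted as JSON-LD, which is one of several syntaxes for Schema.org data. The helper name `buildProductJsonLd` and the sample values are assumptions; the property names (`name`, `description`, `offers`, `price`, `priceCurrency`) come from the Schema.org vocabulary.

```javascript
// Serialize a product as Schema.org JSON-LD inside a <script> tag.
// The input shape ({ name, description, price, currency }) is hypothetical.
function buildProductJsonLd(product) {
  const data = {
    '@context': 'https://schema.org',
    '@type': 'Product',
    name: product.name,
    description: product.description,
    offers: {
      '@type': 'Offer',
      price: product.price,
      priceCurrency: product.currency,
    },
  };
  // Escape "<" so the JSON cannot close the surrounding <script> element early.
  const json = JSON.stringify(data).replace(/</g, '\\u003c');
  return `<script type="application/ld+json">${json}</script>`;
}

console.log(buildProductJsonLd({
  name: 'Plain T-Shirt',
  description: 'A cotton T-shirt.',
  price: '19.99',
  currency: 'USD',
}));
```

Whatever markup you generate, it is still worth running the rendered page through the testing tool mentioned above.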

Annotate with Open Graph & Twitter Cards

Why? In addition to the Schema.org metadata it can be helpful to add support for Facebook’s Open Graph protocol and Twitter rich cards as well.

These metadata formats improve the user experience when your content is shared on their corresponding social networks.

If your existing site or web application utilises these formats it’s important to ensure they are included in your progressive web application as well for optimal virality.

Best Practice:

Test your Open Graph markup with the Facebook Object Debugger Tool.

Familiarise yourself with Twitter’s metadata format.

Don’t:

Don’t forget to include these formats if your existing site supports them.
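A rough sketch of generating both sets of tags from the same page data is shown below. The helper names and the input shape are hypothetical; the `og:*` properties and `twitter:*` names are the ones defined by the Open Graph protocol and Twitter Cards markup.

```javascript
// Escape a value for use inside a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Build Open Graph and Twitter Card meta tags from one page description.
// `page` is a hypothetical object: { title, url, image }.
function buildSocialMetaTags(page) {
  const openGraph = {
    'og:title': page.title,
    'og:type': 'website',
    'og:url': page.url,
    'og:image': page.image,
  };
  const twitter = {
    'twitter:card': 'summary',
    'twitter:title': page.title,
    'twitter:image': page.image,
  };
  // Open Graph uses the "property" attribute; Twitter Cards use "name".
  const ogTags = Object.entries(openGraph)
    .map(([property, content]) => `<meta property="${property}" content="${escapeAttr(content)}">`);
  const twitterTags = Object.entries(twitter)
    .map(([name, content]) => `<meta name="${name}" content="${escapeAttr(content)}">`);
  return [...ogTags, ...twitterTags].join('\n');
}

console.log(buildSocialMetaTags({
  title: 'Example product',
  url: 'https://www.example.com/product/25/',
  image: 'https://www.example.com/img/product-25.jpg',
}));
```

Generating the tags in one place makes it less likely that they are dropped when a site is rebuilt as a progressive web application.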

Test with Multiple Browsers

Why? From a user’s perspective, it’s important that a website behaves the same across all browsers. While the experience might adapt for different screen sizes, we all expect a mobile site to work the same on similarly sized devices, whether it’s an iPhone or an Android mobile phone.

While the web can be perceived as fragmented due to the number of browsers in use around the world, this variety and competition is part of what makes the web such an innovative platform. Thankfully, web standards have never been more mature than they are now, and modern tools enable developers to build rich, cross-browser compatible websites with confidence.

Best Practice:

Use cross-browser testing tools such as BrowserStack.com, Browserling.com or BrowserShots.org to ensure your PWA is cross-browser compatible.

Measure Page Load Performance

Why? The faster a website loads for a user the better their user experience will be. Optimizing for page speed is already a well known focus in web development but sometimes when developing a new version of a site the necessary optimizations are not considered a high priority.

When developing a progressive web application we recommend measuring the performance of your page load speed and optimizing before launching the site for the best results.

Best Practice:

Use tools such as PageSpeed Insights and WebPageTest to measure the page load performance of your site. While Googlebot has a bit more patience in rendering, research has shown that 40% of consumers will leave a page that takes longer than three seconds to load.

Read more about our web page performance recommendations and the critical rendering path here.
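Alongside those tools, load times can also be read in the browser through the Navigation Timing API. The sketch below is only an illustration: the helper names and the idea of a 3000 ms budget (taken from the three-second figure above) are assumptions, while the entry fields (`startTime`, `responseStart`, `domContentLoadedEventEnd`, `loadEventEnd`) are those of a navigation timing entry.

```javascript
// Summarize a navigation timing entry (all values in milliseconds).
function summarizeNavigationTiming(entry) {
  return {
    timeToFirstByte: entry.responseStart - entry.startTime,
    domContentLoaded: entry.domContentLoadedEventEnd - entry.startTime,
    pageLoad: entry.loadEventEnd - entry.startTime,
  };
}

// Hypothetical helper: flag page loads that exceed a budget (default three seconds).
function exceedsLoadBudget(entry, budgetMs = 3000) {
  return summarizeNavigationTiming(entry).pageLoad > budgetMs;
}

// In a browser, once the load event has finished (loadEventEnd is 0 before that):
//   const [entry] = performance.getEntriesByType('navigation');
//   console.log(summarizeNavigationTiming(entry), exceedsLoadBudget(entry));
```

Measurements like these come from a single visit on a single device, so they complement rather than replace the lab tools mentioned above.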

Don’t:

Avoid leaving optimization as a post-launch step. If your website’s content loads quickly before migrating to a new progressive web application then it’s important to not regress in your optimizations.

We hope that the above checklist is useful and provides the right guidance to help you develop your Progressive Web Applications with indexability in mind.

As you get started, be sure to check out our Progressive Web App indexability samples that demonstrate server-side, client-side and hybrid rendering. As always, if you have any questions, please reach out on our Webmaster Forums.

Posted by Tom Greenaway, Developer Advocate

Building Indexable Progressive Web Apps

Progressive Web Apps (PWAs) are taking advantage of new technologies to bring the best of mobile sites and native applications to users — and they’re one of the most exciting new ideas on the web. But to truly have an impact, it’s important that they’re indexable and linkable. Every recommendation presented in this article is an existing best practice for indexability — regardless of whether you’re building a Progressive Web App or a simple static website. Nonetheless, we have collated these best practices to provide a checklist to guide you:

Make Your Content Crawlable

Why? Historically, websites would always generate or render their HTML on the server, which is the simplest way to ensure your content is directly linkable. Web applications popularised the concept of client-side rendering, in which content is updated dynamically on the page as the user navigates, without requiring the page to be reloaded.

The modern approach is hybrid rendering, in which server-side rendering is used when a user navigates directly to a URL and client-side rendering is used after the initial page load for subsequent navigation and asynchronous requests.

Our server-side PWA sample demonstrates pure server-side rendering, while our hybrid PWA sample demonstrates the combined approach.

If you are unfamiliar with the server-side and client-side rendering terminology, check out these articles on the web here and here.

Best Practice:

Use server-side or hybrid rendering so users receive the content in the initial payload of their web request.

Always ensure your URLs are independently accessible:

https://www.example.com/product/25/

The above should deep link to that particular resource.

If you can’t support server-side or hybrid rendering for your Progressive Web App and you decide to use client-side rendering, we recommend using the Google Search Console “Fetch as Google” tool to verify that your content renders successfully for our search crawler.

Don’t:

Don’t redirect users accessing deep links back to your web app’s homepage.

Additionally, serving an error page to users instead of deep linking should also be avoided.
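To make the deep-linking advice concrete, here is a small sketch of server-side routing that answers a product URL with that product’s content in the initial response. Everything in it is hypothetical: the in-memory `products` catalog, the `renderPath` function and the markup stand in for a real data source and templates.

```javascript
// Hypothetical in-memory catalog standing in for a real data source.
const products = new Map([[25, { name: 'Example product' }]]);

// Resolve a request path to a full HTML response instead of redirecting to the homepage.
function renderPath(pathname) {
  if (pathname === '/') {
    return { status: 200, html: '<h1>Home</h1>' };
  }
  const match = /^\/product\/(\d+)\/$/.exec(pathname);
  const product = match ? products.get(Number(match[1])) : undefined;
  if (product) {
    // The content is part of the initial payload, so it does not depend on client-side code.
    return { status: 200, html: `<h1>${product.name}</h1>` };
  }
  // Paths that match no resource get a 404 rather than a redirect to the homepage.
  return { status: 404, html: '<h1>Not found</h1>' };
}

console.log(renderPath('/product/25/'));

// With Node's built-in http module, this could be wired up as:
//   require('http').createServer((req, res) => {
//     const page = renderPath(new URL(req.url, 'https://www.example.com').pathname);
//     res.writeHead(page.status, { 'Content-Type': 'text/html' });
//     res.end(page.html);
//   }).listen(8080);
```

In a hybrid setup, the client-side code would take over navigation after this first response has been delivered.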

Provide Clean URLs

Why? Fragment identifiers (#user/24601/ or #!user/24601/) were an effective workaround that let browsers fetch new content from a server via AJAX without reloading the page. This design is known as client-side rendering.

However, the fragment identifier syntax isn’t compatible with some web tools, frameworks and protocols such as Facebook’s Open Graph protocol.

The History API enables us to update the URL without fragment identifiers while still fetching resources asynchronously and therefore avoiding page reloads, which gives the best of both worlds. The AJAX crawling scheme (with its #! / escaped-fragment URLs) made sense at the time, but is no longer recommended.

Our hybrid PWA and client-side PWA samples demonstrate the History API.

Best Practice:

Provide clean URLs without fragment identifiers (# or #!) such as:

https://www.example.com/product/25/

If using client-side or hybrid rendering be sure to support browser navigation with the History API.

Avoid:

Using the #! URL structure to drive unique URLs is discouraged:

https://www.example.com/#!product/25/

It was introduced as a workaround before the advent of the History API. It is considered a separate pattern from the plain # URL structure.

Don’t:

Using the # URL structure without the accompanying ! symbol is unsupported:

https://www.example.com/#product/25/

This URL structure is already an established concept on the web and relates to deep linking into content on a particular page.
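The move from fragment URLs to clean URLs could be sketched as follows. The function names `fragmentToPath` and `navigateTo` and the `render` callback are assumptions; `pushState` and the `popstate` event are part of the History API.

```javascript
// Convert a legacy "#!product/25/" or "#product/25/" fragment into a clean path.
function fragmentToPath(hash) {
  return '/' + hash.replace(/^#!?\/?/, '');
}

// Navigate without a page reload: update the URL, then render the new view.
// `historyLike` is `window.history` in a browser; `render` is an app-specific callback.
function navigateTo(path, historyLike, render) {
  historyLike.pushState({ path }, '', path);
  render(path);
}

console.log(fragmentToPath('#!product/25/')); // "/product/25/"

// In a browser, also re-render when the user presses back or forward:
//   window.addEventListener('popstate', () => render(location.pathname));
```

A site migrating away from #! URLs could use a helper like `fragmentToPath` to send visitors arriving on old links to the equivalent clean URL.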

Specify Canonical URLs

Why? The best way to eliminate confusion for indexing when the same content is available under multiple URLs (be it on the same or different domains) is to mark one page as the canonical and have all other pages that duplicate that content refer to it.

Best Practice:

Include the following tag across all pages mirroring a particular piece of content:

<link rel="canonical" href="https://www.example.com/your-url/" />

If you are supporting Accelerated Mobile Pages be sure to correctly use its counterpart rel=”amphtml” instruction as well.

Avoid:

Avoid purposely duplicating content across multiple URLs without using the rel=”canonical” link element.

For example, the rel=”canonical” link element can reduce ambiguity for URLs with tracking parameters.

Don’t:

Avoid creating conflicting canonical references between your pages.
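For URLs that differ only by tracking parameters, the canonical tag could be derived programmatically, as in the sketch below. The `TRACKING_PARAMS` list and the function names are assumptions for illustration; which parameters are safe to ignore depends on your own site.

```javascript
// Hypothetical list of tracking parameters that do not change the page content.
const TRACKING_PARAMS = ['utm_source', 'utm_medium', 'utm_campaign', 'gclid'];

// Strip tracking parameters and any fragment to get the canonical form of a URL.
function canonicalUrl(rawUrl) {
  const url = new URL(rawUrl);
  for (const name of TRACKING_PARAMS) {
    url.searchParams.delete(name);
  }
  url.hash = '';
  return url.toString();
}

// Build the link element to include on every page that mirrors the content.
function canonicalLinkTag(rawUrl) {
  const href = canonicalUrl(rawUrl).replace(/&/g, '&amp;').replace(/"/g, '&quot;');
  return `<link rel="canonical" href="${href}" />`;
}

console.log(canonicalLinkTag('https://www.example.com/product/25/?utm_source=newsletter'));
```

Deriving the tag from one function also helps avoid conflicting canonical references, because every variant of a URL maps to the same value.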

Design for Multiple Devices

Why? It’s important that all your users get the best experience possible when viewing your website, regardless of their device.

Make your site responsive in its design — fonts, margins, paddings, buttons and general design of your site should scale dynamically based on screen resolutions and device viewports.

Small images scaled up for desktop or tablet devices give a poor experience. Conversely, super high resolution images take a long time to download on mobile phones and may impact mobile scroll performance.

Read more about UX for PWAs here.

Best Practice:

Use the “srcset” attribute to fetch different resolution images for different density screens to avoid downloading images larger than the device’s screen is capable of displaying.

Scale your font size and line height to ensure your text is legible no matter the size of the device. Similarly ensure the padding and margins of elements also scale sensibly.

Test various screen resolutions using the Chrome Developer Tools’ Device Mode feature and the Mobile-Friendly Test tool.

Don’t:

Don’t show different content to users than you show to Google. If you use redirects or user agent detection (a.k.a. browser sniffing or dynamic serving) to alter the design of your site for different devices it’s important that the content itself remains the same.

Use the Search Console “Fetch as Google” tool to verify the content fetched by Google matches the content a user sees.

For usability reasons, avoid using fixed-size fonts.
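As an illustration of the “srcset” recommendation, the markup for a density-aware image could be generated like this. The helper names and the “@2x” file-naming scheme are assumptions; the `1x`, `2x` descriptors are the pixel-density descriptors that “srcset” accepts.

```javascript
// Build a srcset value offering one image file per pixel density.
// The "@2x" file-naming scheme is an assumption for illustration.
function buildSrcset(basePath, extension, densities) {
  return densities
    .map((d) => (d === 1
      ? `${basePath}.${extension} 1x`
      : `${basePath}@${d}x.${extension} ${d}x`))
    .join(', ');
}

// Build the <img> tag; `src` stays as the fallback for browsers without srcset support.
function buildImgTag(basePath, extension, alt, densities = [1, 2, 3]) {
  const srcset = buildSrcset(basePath, extension, densities);
  return `<img src="${basePath}.${extension}" srcset="${srcset}" alt="${alt}">`;
}

console.log(buildSrcset('/img/hero', 'jpg', [1, 2])); // "/img/hero.jpg 1x, /img/hero@2x.jpg 2x"
console.log(buildImgTag('/img/hero', 'jpg', 'Hero image'));
```

The browser then picks the candidate that best matches the device, so a low-density phone does not download the largest file.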

Develop Iteratively

Why? One of the safest paths to take when adding features to a web application is to make changes iteratively. If you add features one at a time you can observe the impact of each individual change.

Alternatively many developers prefer to view their progressive web application as an opportunity to overhaul their mobile site in one fell swoop — developing the new web app in an isolated environment and swapping it with their existing mobile site once ready.

When developing features iteratively try to break the changes into separate pieces. For example, if you intend to move from server-side rendering to hybrid rendering then tackle that as a single iteration — rather than in combination with other features.

Both approaches have their own pros and cons. Iterating reduces the complexity of dealing with search indexability as the transition is continuous. However, iterating might result in a slower development process and potentially a less innovative overhaul if development is not starting from scratch.

In either case, the most sensitive areas to keep an eye on are your canonical URLs and your site’s robots.txt configuration.

Best Practice:

Iterate on your website incrementally by adding new features piece by piece.

For example, if you don’t support HTTPS yet, then start by migrating to a secure site.

Avoid:

If you’ve developed your progressive web app in an isolated environment, then avoid launching it without checking that the rel-canonical links and robots.txt are set up appropriately.

Mobile-first Indexing

Today, most people are searching on Google using a mobile device. However, our ranking systems still typically look at the desktop version of a page’s content to evaluate its relevance to the user. This can cause issues when the mobile page has less content than the desktop page because our algorithms are not evaluating the actual page that is seen by a mobile searcher.

To make our results more useful, we’ve begun experiments to make our index mobile-first. Although our search index will continue to be a single index of websites and apps, our algorithms will eventually primarily use the mobile version of a site’s content to rank pages from that site, to understand structured data, and to show snippets from those pages in our results. Of course, while our index will be built from mobile documents, we’re going to continue to build a great search experience for all users, whether they come from mobile or desktop devices.

We understand this is an important shift in our indexing and it’s one we take seriously. We’ll continue to carefully experiment over the coming months on a small scale and we’ll ramp up this change when we’re confident that we have a great user experience. Though we’re only beginning this process, here are a few recommendations to help webmasters prepare as we move towards a more mobile-focused index.

- If you have a responsive site or a dynamic serving site where the primary content and markup are equivalent across mobile and desktop, you shouldn’t have to change anything.

- If you have a site configuration where the primary content and markup are different across mobile and desktop, you should consider making some changes to your site.

  - Make sure to serve structured markup for both the desktop and mobile version.

    - Sites can verify the equivalence of their structured markup across desktop and mobile by typing the URLs of both versions into the Structured Data Testing Tool and comparing the output.

    - When adding structured data to a mobile site, avoid adding large amounts of markup that isn’t relevant to the specific information content of each document.

  - Use the robots.txt testing tool to verify that your mobile version is accessible to Googlebot.

  - Sites do not have to make changes to their canonical links; we’ll continue to use these links as guides to serve the appropriate results to a user searching on desktop or mobile.

- If you are a site owner who has only verified their desktop site in Search Console, please add and verify your mobile version.

- If you only have a desktop site, we’ll continue to index your desktop site just fine, even if we’re using a mobile user agent to view your site.

- If you are building a mobile version of your site, keep in mind that a functional desktop-oriented site can be better than a broken or incomplete mobile version of the site. It’s better for you to build up your mobile site and launch it when ready.

If you have any questions, feel free to contact us via the Webmaster forums or our public events. We anticipate this change will take some time and we’ll update you as we make progress on migrating our systems.

Posted by Doantam Phan, Product Manager
