Google’s handling of new top level domains

Q: How will new gTLDs affect search? Is Google changing the search algorithm to favor these TLDs? How important are they really in search?

A: Overall, our systems treat new gTLDs like other gTLDs (like .com & .org). Keywords in a TLD do not give any advantage or disadvantage in search.

Q: What about IDN TLDs such as .みんな? Can Googlebot crawl and index them, so that they can be used in search?

A: Yes. These TLDs can be used the same as other TLDs (it’s easy to check with a query like [site:みんな]). Google treats the Punycode version of a hostname as being equivalent to the unencoded version, so you don’t need to redirect or canonicalize them separately. For the rest of the URL, remember to use UTF-8 for the path and query string when they contain non-ASCII characters.
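
To illustrate what “equivalent” means here, the following Python sketch converts a URL with an IDN hostname into the ASCII form that is actually sent over the wire: Punycode (“xn--” labels) for the hostname, UTF-8 percent-encoding for the path and query string. It assumes the input path and query are raw Unicode rather than already percent-encoded, and the hostname 例え.みんな is purely illustrative.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def to_ascii_url(url: str) -> str:
    """Return the ASCII form of a URL that may contain non-ASCII characters.

    The hostname is converted label by label to Punycode, and the path and
    query string are percent-encoded as UTF-8. Assumes the input path and
    query are not already percent-encoded; the fragment is left untouched.
    """
    parts = urlsplit(url)
    # Python's built-in "idna" codec implements IDNA 2003; the third-party
    # "idna" package implements the newer IDNA 2008 rules.
    host = parts.hostname.encode("idna").decode("ascii")
    netloc = f"{host}:{parts.port}" if parts.port else host
    path = quote(parts.path, safe="/", encoding="utf-8")
    query = quote(parts.query, safe="=&", encoding="utf-8")
    return urlunsplit((parts.scheme, netloc, path, query, parts.fragment))
```

Calling `to_ascii_url("http://例え.みんな/パス?q=検索")` yields an all-ASCII URL whose hostname decodes back to 例え.みんな. Since both hostname forms are treated as the same site, this conversion matters only when you generate URLs yourself, not as something to redirect between.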

Q: Will a .BRAND TLD be given any more or less weight than a .com?

A: No. Those TLDs will be treated the same as other gTLDs. They will require the same geotargeting settings and configuration, and they won’t have more weight or influence in the way we crawl, index, or rank URLs.

Q: How are the new region or city TLDs (like .london or .bayern) handled?

A: Even if they look region-specific, we will treat them as gTLDs. This is consistent with our handling of regional TLDs like .eu and .asia. There may be exceptions at some point down the line, as we see how they’re used in practice. See our help center for more information on multi-regional and multilingual sites, and set geotargeting in Search Console where relevant.

Q: What about real ccTLDs (country code top-level domains)? Will Google favor ccTLDs (like .uk, .ae, etc.) as a local domain for people searching in those countries?

A: By default, Google uses most ccTLDs (with some exceptions) to geotarget the website; a ccTLD tells us that the website is probably more relevant in the corresponding country. Again, see our help center for more information on multi-regional and multilingual sites.

Q: Will Google support my SEO efforts to move my domain from .com to a new TLD? How do I move my website without losing any search ranking or history?

A: We have extensive site move documentation in our Help Center. We treat these moves the same as any other site move. That said, domain changes can take time to be processed for search (and outside of search, users expect email addresses to remain valid over a longer period of time), so it’s generally best to choose a domain that will fit your long-term needs.

We hope this gives you more information on how the new top level domains are handled. If you have any more questions, feel free to drop them here, or ask in our help forums.

Posted by John Mueller, Webmaster Trends Analyst

App deep linking with goo.gl

Starting now, goo.gl short links function as a single link you can use to point to all your content, whether that content is in your Android app, iOS app, or website. Once you’ve taken the necessary steps to set up App Indexing for Android and iOS, goo.gl URLs will send users straight to the right page in your app if they have it installed, and everyone else to your website. This will provide additional opportunities for your app users to re-engage with your app.

This feature works both for new short URLs and retroactively, so any existing goo.gl short links to your content will now also direct users to your app.

Share links that ‘do the right thing’

You can also make full use of this feature by integrating the URL Shortener API into your app’s share flow, so users can share links that automatically redirect to your native app cross-platform. This will also allow others to embed links in their websites and apps which deep link directly to your app.

Take Google Maps as an example. With the new cross-platform goo.gl links, the Maps share button generates one link that provides the best possible sharing experience for everyone. When opened, the link auto-detects the user’s platform and whether they have Maps installed. If the user has the app installed, the short link opens the content directly in the Android or iOS Maps app. If the user doesn’t have the app installed or is on desktop, the short link opens the page on the Maps website.
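
The decision each link makes can be modelled in a few lines. The sketch below is a hypothetical illustration of the behavior just described, not goo.gl’s implementation: the `ShortLinkTarget` record, the platform strings, and any URIs you pass in are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShortLinkTarget:
    """Hypothetical record of everything a single short link can open."""
    web_url: str
    android_uri: Optional[str] = None  # deep link into the Android app
    ios_uri: Optional[str] = None      # deep link into the iOS app


def resolve(target: ShortLinkTarget, platform: str, app_installed: bool) -> str:
    """Pick the app deep link when the app is installed, else the web page."""
    if app_installed and platform == "android" and target.android_uri:
        return target.android_uri
    if app_installed and platform == "ios" and target.ios_uri:
        return target.ios_uri
    # Desktop users, and mobile users without the app, get the website.
    return target.web_url
```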

Try it out for yourself! Don’t forget to use a phone with the Google Maps app installed: http://goo.gl/maps/xlWFj.

How to set it up

To set up app deep linking on goo.gl:

- Complete the necessary steps to participate in App Indexing for Android and iOS at g.co/AppIndexing. Note that goo.gl deep links are open to all iOS developers, unlike deep links from Search currently. After this step, existing goo.gl short links will start deep linking to your app.

- Optionally integrate the URL Shortener API with your app’s share flow, your email campaigns, etc. to programmatically generate links that will deep link directly back to your app.
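
For the optional second step, a share flow only needs to ask the URL Shortener API for a short link to the page being shared. The Python sketch below assumes the v1 endpoint (`https://www.googleapis.com/urlshortener/v1/url`), a JSON body with a `longUrl` field, and a reply carrying the short link in `id`, as recalled from the API reference; `API_KEY` and the example URL are placeholders, and the reference documentation is the authority on the exact contract.

```python
import json
import urllib.parse
import urllib.request

# URL Shortener API v1 endpoint, as recalled from its reference docs.
SHORTENER_ENDPOINT = "https://www.googleapis.com/urlshortener/v1/url"


def build_shorten_request(long_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for a short link."""
    query = urllib.parse.urlencode({"key": api_key})
    body = json.dumps({"longUrl": long_url}).encode("utf-8")
    return urllib.request.Request(
        f"{SHORTENER_ENDPOINT}?{query}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def short_url_from_response(body: bytes) -> str:
    """Extract the short link, returned in the "id" field of the JSON reply."""
    return json.loads(body)["id"]


def shorten(long_url: str, api_key: str) -> str:
    """Send the request and return the short link (needs network access)."""
    request = build_shorten_request(long_url, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return short_url_from_response(response.read())
```

Passing the web URL of the shared content, rather than an app-specific URI, is what lets the same short link open the app for users who have it and the website for everyone else.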

We hope you enjoy this new functionality and happy cross-platform sharing!

Posted by Fabian Schlup, Software Engineer

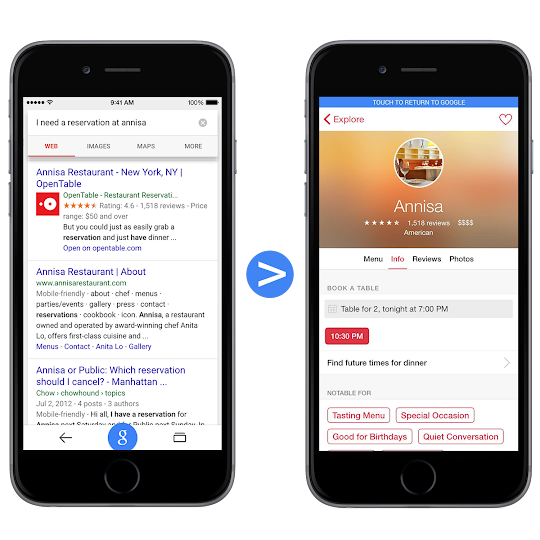

Surfacing content from iOS apps in Google Search

We’ve been helping users discover relevant content from Android apps in Google search results for a while now. Starting today, we’re bringing App Indexing to iOS apps as well. This means users on both Android and iOS will be able to open mobile app content straight from Google Search.

In the coming weeks, indexed links from an initial group of apps we’ve been working with will begin appearing in search results on iOS, both in the Google App and in Chrome, for signed-in users globally.

How to get your iOS app indexed

While App Indexing for iOS is launching with a small group of test partners initially, we’re working to make this technology available to more app developers as soon as possible. In the meantime, here are the steps to get a head start on App Indexing for iOS:

- Add deep linking support to your iOS app.

- Make sure it’s possible to return to Search results with one click.

- Provide deep link annotations on your site.

- Let us know you’re interested. Keep in mind that expressing interest does not automatically guarantee getting app deep links in iOS search results.
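
For the third step, App Indexing annotations take the form of `<link rel="alternate">` elements whose `href` is an `ios-app://{itunes_id}/{scheme}/{host_path}` URI, or `android-app://{package_name}/{scheme}/{host_path}` for Android. The Python sketch below generates those tags for one web page; the App Store ID, URL schemes, package name, and path are placeholders, and the documentation at g.co/AppIndexing remains the authority on the exact format.

```python
from html import escape
from typing import List, Optional


def app_link_tags(host_path: str,
                  itunes_id: Optional[str] = None,
                  ios_scheme: Optional[str] = None,
                  android_package: Optional[str] = None,
                  android_scheme: Optional[str] = None) -> List[str]:
    """Build <link rel="alternate"> deep-link annotations for one web page.

    host_path is the app URL without its scheme, e.g. "example.com/gizmos".
    """
    tags = []
    if itunes_id and ios_scheme:
        href = f"ios-app://{itunes_id}/{ios_scheme}/{host_path}"
        tags.append(f'<link rel="alternate" href="{escape(href)}" />')
    if android_package and android_scheme:
        href = f"android-app://{android_package}/{android_scheme}/{host_path}"
        tags.append(f'<link rel="alternate" href="{escape(href)}" />')
    return tags
```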

If you happen to be attending Google I/O this week, stop by our talk titled “Get your app in the Google index” to learn more about App Indexing. You’ll also find detailed documentation on App Indexing for iOS at g.co/AppIndexing. If you’ve got more questions, drop by our Webmaster help forum.

Posted by Eli Wald, Product Manager

Helping users fill out online forms

A lot of websites rely on forms to accomplish important goals, such as completing a transaction on a shopping site or registering on a news site. For many users, online forms mean repeatedly typing common information like their names, email addresses, phone numbers, or postal addresses on different sites across the web. In addition to being tedious, this task is error-prone, which can lead many users to abandon the flow entirely. In a world where users browse the internet on mobile devices more than on laptops or desktops, having forms that are easy and quick to fill out is crucial!

Three years ago, we announced support for a new “autocomplete” attribute in Chrome to make form-filling faster, easier, and smarter. Now, Chrome fully supports the “autocomplete” attribute for form fields according to the current WHATWG HTML Standard. This allows webmasters and web developers to label input fields with common data types, such as ‘name’ or ‘street-address’, without changing the user interface or the backend. Numerous webmasters have increased the rate of form completions on their sites by marking up their forms for autocompletion.

For example, marking up an email address field on a form to allow auto-completion would look like this (with a full sample form available):

```html
<input type="text" name="customerEmail" autocomplete="email"/>
```
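
To find fields in existing pages that still lack the attribute, a small audit script can help. The Python sketch below uses the standard library’s `html.parser` to list form controls with no `autocomplete` attribute and to flag values whose final token is not in a small, hand-picked subset of the WHATWG autofill field names (the HTML Standard has the full list). Checking only the final token, and skipping the listed control types, are simplifications.

```python
from html.parser import HTMLParser

# A small subset of the WHATWG autofill field names; the HTML Standard
# lists the complete set.
KNOWN_FIELD_NAMES = {
    "name", "given-name", "family-name", "email", "tel", "organization",
    "street-address", "address-level2", "postal-code", "country",
    "cc-name", "cc-number", "cc-exp",
}

# Control types this audit skips (they rarely need autofill hints).
SKIPPED_TYPES = {"hidden", "submit", "button", "reset", "checkbox", "radio"}


class AutocompleteAudit(HTMLParser):
    """Collect form controls whose autocomplete attribute is missing or unknown."""

    def __init__(self):
        super().__init__()
        self.missing = []  # names of controls with no autocomplete attribute
        self.unknown = []  # (name, value) pairs with an unrecognized field name

    def handle_starttag(self, tag, attrs):
        if tag not in ("input", "select", "textarea"):
            return
        attrs = dict(attrs)
        if (attrs.get("type") or "").lower() in SKIPPED_TYPES:
            return
        name = attrs.get("name") or "(unnamed)"
        value = attrs.get("autocomplete")
        if value is None:
            self.missing.append(name)
            return
        tokens = value.lower().split()
        # Simplification: only the last token (the field name) is checked;
        # leading tokens such as "shipping" or "section-x" are ignored.
        if tokens and tokens[-1] not in KNOWN_FIELD_NAMES | {"on", "off"}:
            self.unknown.append((name, value))
```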

Making websites friendly and easy to browse for users on mobile devices is very important. We hope to see many forms marked up with the “autocomplete” attribute in the future. For more information, you can check out our specifications about Label and name inputs in Web Fundamentals. And as usual, if you have any questions, please post in our Webmasters Help Forums.

Posted by Mathieu Perreault, Chrome Software Engineer, and Zineb Ait Bahajji, Webmaster Trends Analyst

An update on doorway pages

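We have a long-standing view that doorway pages created solely for search engines can harm the quality of the user’s search experience. To judge whether pages on your site could be seen as doorway pages, ask yourself the following questions: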

- Is the purpose of these pages to optimize for search engines and funnel visitors into the actual usable or relevant portion of your site, or are they an integral part of your site’s user experience?

- Are the pages intended to rank on generic terms yet the content presented on the page is very specific?

- Do the pages duplicate useful aggregations of items (locations, products, etc.) that already exist on the site for the purpose of capturing more search traffic?

- Are these pages made solely for drawing affiliate traffic and sending users along without creating unique value in content or functionality?

- Do these pages exist as an “island?” Are they difficult or impossible to navigate to from other parts of your site? Are links to such pages from other pages within the site or network of sites created just for search engines?
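
The “island” question can be partly checked mechanically if you have a map of your internal links (for example, from your own crawl of the site). The Python sketch below walks that link graph breadth-first from the home page and reports pages that no internal path reaches. The input format and paths are hypothetical, and an unreachable page is only a prompt for review, not proof that it is a doorway page.

```python
from collections import deque
from typing import Dict, List


def unreachable_pages(links: Dict[str, List[str]], start: str = "/") -> List[str]:
    """List pages that cannot be reached by following internal links from start.

    links maps each page path to the internal paths it links to.
    """
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over internal links
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    every_page = set(links) | {t for targets in links.values() for t in targets}
    return sorted(every_page - seen)
```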

Posted by Brian White, Google Webspam Team

Deprecation of the old Webmaster Tools API

Last fall we announced the new Webmaster Tools API, which helps you to automate a number of important aspects using code. With the pending shutdown of ClientLogin, we’re going to turn down the old Webmaster Tools API on April 20, 2015. If you’re…

Unblocking resources with Webmaster Tools

Webmasters often use linked images, CSS, and JavaScript files in web pages to make them pretty and functional. If these resources are blocked from crawling, then Googlebot can’t use them when it renders those pages for search. Google Webmaster Tools no…

Easier website development with Web Components and JSON-LD

JSON-LD is a JSON-based data format that can be used to implement structured data describing content on your site to Google and other search engines. For example, if you have a list of events, cafes, people or more, you can include this data in your pages in a structured way using the schema.org vocabulary embedded in webpages as a JSON-LD snippet. The structured data helps Google understand your pages better and highlight your content in search features, such as events in the Knowledge Graph and rich snippets.

Web Components are a nascent set of technologies to define custom, reusable user interface widgets and their behavior. Any web developer can build a Web Component. You start by defining a template for a distinct part of the user interface, which you import into the pages on which you want to use the Web Component. A Custom Element is used to define the behavior of the Web Component. Because you’re bundling the display and logic for part of the user interface into the Web Component, you can share and reuse the bundle on other pages and with other developers, thus simplifying web development.

JSON-LD and Web Components work really well together. The Custom Element functions as the presentation layer and the JSON-LD functions as the data layer that the custom element and search engines consume. This means you can build custom elements for any schema.org type, such as schema.org/Event and schema.org/LocalBusiness.

In such an architecture, your structured data is stored in your database (for example, the store locations in your chain). This data is embedded into your webpage as a JSON-LD snippet, which means it’s available both for the Custom Element to consume and display to a human visitor and for Googlebot to retrieve for Google indexing.
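
As a sketch of the data-layer half of this architecture, the Python function below turns store records (as they might come from your database) into a schema.org JSON-LD `<script>` element that both a Custom Element and Googlebot could read. The record field names (`name`, `phone`, `street`, `city`, `postal_code`) are hypothetical; the schema.org property names are real, but Google’s structured data documentation says which ones a given feature uses.

```python
import json
from typing import Dict, List


def local_business_jsonld(stores: List[Dict[str, str]]) -> str:
    """Render store records as a schema.org JSON-LD <script> element."""
    items = [
        {
            "@context": "http://schema.org",
            "@type": "LocalBusiness",
            "name": store["name"],
            "telephone": store["phone"],
            "address": {
                "@type": "PostalAddress",
                "streetAddress": store["street"],
                "addressLocality": store["city"],
                "postalCode": store["postal_code"],
            },
        }
        for store in stores
    ]
    payload = json.dumps(items, ensure_ascii=False, indent=2)
    # Escape "</" so a data value can never close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

A Custom Element on the same page can then read this script’s text and parse it as JSON to render the store list, so the page and the search engine consume one copy of the data.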

To learn more and get started with your own custom elements, please see:

- Our latest article on HTML5 Rocks and the accompanying code examples.

- The JSON-LD website, and the W3C spec

- Web Components wiki and the Web Components community on webcomponents.org

- schema.org

- Google’s structured data documentation

Posted by Ewa Gasperowicz, Developer Programs Engineer, Mano Marks, Developer Advocate, Pierre Far, Webmaster Trends Analyst

Safe Browsing and Google Analytics: Keeping More Users Safe, Together

The following was originally posted on the Google Online Security Blog.

If you run a web site, you may already be familiar with Google Webmaster Tools and how it lets you know if Safe Browsing finds something problematic on your site. For example, we’ll notify you if your site is delivering malware, which is usually a sign that it’s been hacked. We’re extending our Safe Browsing protections to automatically display notifications to all Google Analytics users via familiar Google Analytics Notifications.

Google Safe Browsing has been protecting people across the Internet for over eight years and we’re always looking for ways to extend that protection even further. Notifications like these help webmasters like you act quickly to respond to any issues. Fast response helps keep your site—and your visitors—safe.

Posted by: Stephan Somogyi, Product Manager, Security and Privacy

Finding more mobile-friendly search results

Webmaster level: all

When it comes to search on mobile devices, users should get the most relevant and timely results, whether the information lives on mobile-friendly web pages or in apps. As more people use mobile devices to access the internet, our algorithms have to adapt to these usage patterns. In the past, we’ve made updates to ensure a site is configured properly and viewable on modern devices. We’ve made it easier for users to find mobile-friendly web pages and we’ve introduced App Indexing to surface useful content from apps. Today, we’re announcing two important changes to help users discover more mobile-friendly content:

1. More mobile-friendly websites in search results

Starting April 21, we will be expanding our use of mobile-friendliness as a ranking signal. This change will affect mobile searches in all languages worldwide and will have a significant impact in our search results. Consequently, users will find it easier to get relevant, high quality search results that are optimized for their devices.

To get help with making a mobile-friendly site, check out our guide to mobile-friendly sites. If you’re a webmaster, you can get ready for this change by using the following tools to see how Googlebot views your pages:

- If you want to test a few pages, you can use the Mobile-Friendly Test.

- If you want to check your whole site, you can use your Webmaster Tools account to get a full list of mobile usability issues across it in the Mobile Usability Report.

2. More relevant app content in search results

Starting today, we will begin to use information from indexed apps as a factor in ranking for signed-in users who have the app installed. As a result, we may now surface content from indexed apps more prominently in search. To find out how to implement App Indexing, which allows us to surface this information in search results, have a look at our step-by-step guide on the developer site.

If you have questions about either mobile-friendly websites or app indexing, we’re always happy to chat in our Webmaster Help Forum.

Posted by Takaki Makino, Chaesang Jung, and Doantam Phan

Case Studies: Fixing Hacked Sites

Webmaster Level: All

Every day, thousands of websites get hacked. Hacked sites can harm users by serving malicious software, collecting personal information, or redirecting them to sites they didn’t intend to visit. Webmasters want to fix hacked sites …

Crawling and indexing of locale-adaptive pages

Webmaster level: advanced

Locale-adaptive pages change their content to reflect the user’s language or perceived geographic location. Since, by default, Googlebot requests pages without setting an Accept-Language HTTP request header and uses IP addresses that appear to be located in the USA, not all content variants of locale-adaptive pages may be indexed completely.

Today we’re introducing new locale-aware crawl configurations for Googlebot for pages that we detect may adapt the content they serve based on the request’s language and perceived location. These are:

- Geo-distributed crawling, where Googlebot would start to use IP addresses that appear to be coming from outside the USA, in addition to the US-based IP addresses it currently uses.

- Language-dependent crawling where Googlebot would start to crawl with an Accept-Language HTTP header in the request.
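
To see why header-less crawling can miss variants, consider how a typical locale-adaptive page picks its content. The Python sketch below is a simplified, hypothetical negotiation routine: it orders the `Accept-Language` ranges by their `q` values and falls back to a default locale when the header is absent, which is exactly what a request without the header receives. It ignores the `*` wildcard and other details of RFC 7231 and RFC 4647 matching.

```python
from typing import Iterable, List, Optional


def parse_accept_language(header: str) -> List[str]:
    """Return the language ranges in an Accept-Language header, best first."""
    ranked = []
    for position, item in enumerate(header.split(",")):
        lang, *params = [piece.strip() for piece in item.split(";")]
        if not lang:
            continue
        quality = 1.0
        for param in params:
            key, _, value = param.partition("=")
            if key.strip() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        if quality > 0:
            # Sort by descending quality, then by original order.
            ranked.append((-quality, position, lang.lower()))
    return [lang for _, _, lang in sorted(ranked)]


def choose_locale(header: Optional[str], available: Iterable[str],
                  default: str = "en") -> str:
    """Pick the content locale a locale-adaptive page would serve."""
    available = set(available)
    if not header:
        # No Accept-Language header: every such request gets the default.
        return default
    for lang in parse_accept_language(header):
        if lang in available:
            return lang
        primary = lang.split("-")[0]  # e.g. "de-ch" -> "de"
        if primary in available:
            return primary
    return default
```

Requests without the header always take the first branch, so only the default variant is ever returned to them; language-dependent crawling is what exercises the other branches.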

As these new crawling configurations are enabled automatically for pages we detect to be locale-adaptive, you may notice changes in how we crawl and show your site in Google search results without you altering your CMS or server settings.

Note that these new configurations do not alter our recommendation to use separate URLs with rel=alternate hreflang annotations for each locale. We continue to support and recommend using separate URLs, as they are still the best way for users to interact with and share your content, and also to maximize indexing and improve ranking of all variants of your content.
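
With separate URLs, each variant lists every alternate, including itself, in `rel="alternate" hreflang` annotations. A minimal Python sketch that renders those `<link>` elements from a mapping of language codes to URLs might look like this; the URLs are placeholders, and the optional `x-default` entry marks the page to show when no other language matches.

```python
from html import escape
from typing import Dict, List, Optional


def hreflang_links(variants: Dict[str, str],
                   x_default: Optional[str] = None) -> List[str]:
    """Render rel="alternate" hreflang <link> elements for every variant.

    variants maps a language (optionally language-region) code to its URL.
    The same full set goes into the <head> of each variant page.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(variants.items())
    ]
    if x_default:
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
        )
    return tags
```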

As always, if you have any questions or feedback, please tell us in the internationalization Webmaster Help Forum.

Posted by Qin Yin, Software Engineer Search Infrastructure, and Pierre Far, Webmaster Trends Analyst

Upcoming Events In The Knowledge Graph

Last year, we launched a new way for musical artists to list their upcoming events on Google: schema.org markup on their official websites. Now we’re expanding this program in four ways:

1. Official Ticket Links

For artists: if you mark up ticketing links along with the events on your official website, we’ll show an expanded answer card for your events in Google search, including the on-sale date, availability, and a direct link to your preferred ticketing site.

As before, you may write the event markup directly into your site’s HTML, or simply install an event widget that builds in the markup for you automatically—like Bandsintown, BandPage, GigPress, ReverbNation or Songkick.

2. Delegated Event Listings

What if you can’t add markup or an event widget to your official website, for example, if your website doesn’t list your events at all? Now you can use delegation markup to tell us to source your events from a page of your choice on another website. Just add the following markup to your home page, making sure to customize the three values for “name”, “url”, and “event”:

```html
<script type="application/ld+json">
{
  "@context" : "http://schema.org",
  "@type" : "MusicGroup",
  "name" : "Your Band or Performer Name",
  "url" : "http://your-official-website.com",
  "event" : "http://other-event-site.com/your-event-listing-page/"
}
</script>
```

The marked-up events found on the other event site’s page will then be eligible for Google events features. Examples of sites you can point to in the “event” field include bandpage.com, bandsintown.com, songkick.com, and ticketmaster.com.

3. Comedian Events

Hey funny people! We want your performances to show up on Google, too. Just add ComedyEvent markup to your official website. Or, if another site like laughstub.com has your complete event listings, use delegation markup on your home page to point us their way.

4. Venue Events

Last but definitely not least: we’re starting to show venue event listings in Google Search. Concert venues, theaters, libraries, fairgrounds, and so on: make your upcoming events eligible for display across Google by adding Event markup to your official website.
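
For venues that generate their pages from an events database, the markup can be produced alongside the page. The Python sketch below emits one schema.org `Event` with a `Place` location and an `Offer` carrying the ticket link and, optionally, the on-sale date as `validFrom`. These are standard schema.org properties, but the function and its parameters are hypothetical, and the Developer Site documentation lists what Google actually requires.

```python
import json
from typing import Optional


def venue_event_jsonld(event_name: str, start_date: str, venue_name: str,
                       venue_address: str, ticket_url: str,
                       availability: str = "http://schema.org/InStock",
                       on_sale_date: Optional[str] = None) -> str:
    """Render one venue event as a schema.org Event JSON-LD <script> element."""
    offer = {
        "@type": "Offer",
        "url": ticket_url,  # link to the preferred ticketing page
        "availability": availability,
    }
    if on_sale_date:
        offer["validFrom"] = on_sale_date  # when tickets go on sale
    event = {
        "@context": "http://schema.org",
        "@type": "Event",
        "name": event_name,
        "startDate": start_date,  # ISO 8601 date or date-time
        "location": {
            "@type": "Place",
            "name": venue_name,
            "address": venue_address,
        },
        "offers": offer,
    }
    # Escape "</" so a data value can never close the script element early.
    payload = json.dumps(event, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```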

As with artist events, you have a choice of writing the event markup directly into your site’s HTML, or using a widget or plugin that builds in the markup for you. Also, if all your events are ticketed by a primary ticketer whose website provides markup, you don’t have to do anything! Google will read the ticketer’s markup and apply it toward your venue’s event listings.

For example, venues ticketed by Ticketmaster, including its international sites and TicketWeb, will automatically be covered. The same goes for venues that list events with Ticketfly, AXS, LaughStub, Wantickets, Holdmyticket, ShowClix, Stranger Tickets, Ticket Alternative, Digitick, See Tickets, Tix, Fnac Spectacles, Ticketland.ru, iTickets, MIDWESTIX, Ticketleap, or Instantseats. All of these have already implemented ticketer events markup.

Please see our Developer Site for full documentation of these features, including a video tutorial on how to write and test event markup. Then add the markup, help new fans discover your events, and play to a packed house!

Posted by Justin Boyan, Product Manager, Google Search

New Structured Data Testing Tool, documentation, and more

Structured data markup helps your content get discovered in search results and across Google properties. We’re excited to share several updates to help you author and publish markup on your website:

- A new Structured Data Testing Tool to better reflect Google’s interpretation of your content

- Improved documentation and policy guidelines for Google features powered by structured data on the web

- Expanded support for the JSON-LD markup syntax

Structured Data Testing Tool

The new Structured Data Testing Tool provides the following features:

- Validation for all Google features powered by structured data

- Support for markup in the JSON-LD syntax, including in dynamic HTML pages

- Clean display of the structured data items on your page

- Syntax highlighting of markup problems right in your HTML source code

New documentation and simpler policy

We’ve clarified our documentation for the vocabulary supported in structured data based on webmasters’ feedback. The new documentation explains the markup you need to add to enable different search features for your content, along with code examples in the supported syntaxes. We’ll be retiring the old documentation soon.

We’ve also simplified and clarified our policies on using structured data. If you believe that another site is abusing Google’s rich snippets quality guidelines, please let us know using the rich snippets spam report form.

Expanded support for JSON-LD

We’ve extended our support for schema.org vocabulary in JSON-LD syntax to new use cases: company logos and contacts, social profile links, events in the Knowledge Graph, the sitelinks search box, and event rich snippets. We’re working on expanding support to additional markup-powered features in the future.
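
As an illustration of some of these use cases, the Python sketch below emits a single schema.org `Organization` object covering a company logo, a contact point, and social profile links (via `sameAs`); the output belongs inside a `<script type="application/ld+json">` element. The URLs and phone number are placeholders, and the new documentation lists the exact properties each feature requires.

```python
import json
from typing import Iterable


def organization_jsonld(url: str, logo: str, profiles: Iterable[str],
                        phone: str, contact_type: str = "customer service") -> str:
    """Build Organization JSON-LD covering logo, contact, and social profiles."""
    org = {
        "@context": "http://schema.org",
        "@type": "Organization",
        "url": url,
        "logo": logo,              # company logo
        "sameAs": list(profiles),  # social profile links
        "contactPoint": [{         # corporate contact
            "@type": "ContactPoint",
            "telephone": phone,
            "contactType": contact_type,
        }],
    }
    return json.dumps(org, indent=2)
```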

As always, we welcome your feedback and questions; please post in our Webmaster Help forums.

Posted by Pierre Far, Webmaster Trends Analyst, Tatsiana Sakhar, Search Quality Analyst, Zach Clifford, Software Engineer

Google Public DNS and Location-Sensitive DNS Responses

Webmaster level: advanced

Recently the Google Public DNS team, in collaboration with Akamai, reached an important milestone: Google Public DNS now propagates client location information to Akamai nameservers. This effort significantly improves the accuracy of approximately 30% of the location-sensitive DNS responses returned by Google Public DNS. In other words, client requests to Akamai hosted content can be routed to closer servers with lower latency and greater data transfer throughput. Overall, Google Public DNS resolvers serve 400 billion responses per day and more than 50% of them are location-sensitive.

DNS is often used by Content Distribution Networks (CDNs) such as Akamai to achieve location-based load balancing by constructing responses based on clients’ IP addresses. However, CDNs usually see the DNS resolvers’ IP address instead of the actual clients’ and are therefore forced to assume that the resolvers are close to the clients. Unfortunately, the assumption is not always true. Many resolvers, especially those open to the Internet at large, are not deployed at every single local network.

To solve this issue, a group of DNS and content providers, including Google, proposed an approach to allow resolvers to forward the client’s subnet to CDN nameservers in an extension field in the DNS request. The subnet is a portion of the client’s IP address, truncated to preserve privacy. The approach is officially named edns-client-subnet or ECS.
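
To make the truncation concrete, here is a Python sketch of how a resolver might build the client-subnet option. It follows the wire format later standardized in RFC 7871: EDNS option code 8, then the address family, the source prefix length, the scope prefix length, and only as many address bytes as the prefix needs. The /24 and /56 prefix lengths are assumptions for illustration, not a statement of what Google Public DNS sends.

```python
import ipaddress
import struct

ECS_OPTION_CODE = 8  # EDNS0 option code for Client Subnet (RFC 7871)


def truncate_client_ip(ip: str, v4_prefix: int = 24, v6_prefix: int = 56):
    """Keep only the leading bits of the client address (its subnet)."""
    version = ipaddress.ip_address(ip).version
    prefix = v4_prefix if version == 4 else v6_prefix
    return ipaddress.ip_network(f"{ip}/{prefix}", strict=False)


def encode_ecs_option(ip: str, v4_prefix: int = 24, v6_prefix: int = 56) -> bytes:
    """Encode the client subnet as an EDNS0 option (code, length, data)."""
    subnet = truncate_client_ip(ip, v4_prefix, v6_prefix)
    family = 1 if subnet.version == 4 else 2  # address family: 1 = IPv4, 2 = IPv6
    source_prefix = subnet.prefixlen
    # Only the bytes covered by the prefix are sent; the rest are dropped.
    address = subnet.network_address.packed[: (source_prefix + 7) // 8]
    # Scope prefix length is 0 in queries; the nameserver fills it in replies.
    data = struct.pack("!HBB", family, source_prefix, 0) + address
    return struct.pack("!HH", ECS_OPTION_CODE, len(data)) + data
```

For a client at 203.0.113.77, only 203.0.113.0/24 leaves the resolver, which is enough for the CDN to pick a nearby server without revealing the full address.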

This solution requires that both resolvers and CDNs adopt the new DNS extension. Google Public DNS resolvers automatically probe to discover ECS-aware nameservers and have observed the footprint of ECS support from CDNs expanding steadily over the past few years. By now, more than 4000 nameservers from approximately 300 content providers support ECS. The Google-Akamai collaboration marks a significant milestone in our ongoing efforts to ensure DNS contributes to keeping the Internet fast. We encourage more CDNs to join us by supporting the ECS option.

For more information about Google Public DNS, please visit our website. For CDN operators, please also visit “A Faster Internet” for more technical details.

Posted by Yunhong Gu, Tech Lead, Google Public DNS

The four steps to appiness

Webmaster Level: intermediate to advanced

App deep links are the new kid on the block in organic search, and they’re picking up speed faster than you can say “schema.org ViewAction”! For signed-in users, 15% of Google searches on Android now return deep links to apps through App Indexing. And over just the past quarter, we’ve seen the number of clicks on app deep links jump by 10x.

We’ve gotten a lot of feedback from developers since we opened up App Indexing back in June, and we’ve seen many implementations that went right as well as others that were good learning experiences. We’d like to share with you four key steps to monitor app performance and drive user engagement:

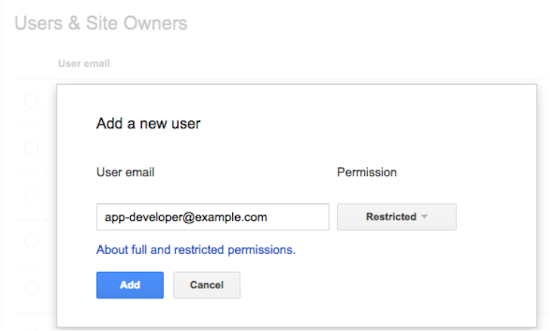

1. Give your app developer access to Webmaster Tools

App indexing is a team effort between you (as a webmaster) and your app development team. We show information in Webmaster Tools that is key for your app developers to do their job well. Here’s what’s available right now:

- Errors in indexed pages within apps

- Weekly clicks and impressions from app deep links via Google search

- Stats on your sitemap (if that’s how you implemented the app deep links)

…and we plan to add a lot more in the coming months!

We’ve noticed that very few developers have access to Webmaster Tools. So if you want your app development team to get all of the information they need to fix app-related issues, it’s essential for them to have access to Webmaster Tools.

Any verified site owner can add a new user. Pick restricted or full permissions, depending on the level of access you’d like to give.

2. Understand how your app is doing in search results

How are users engaging with your app from search results? We’ve introduced two new ways for you to track performance for your app deep links:

- We now send a weekly clicks and impressions update to the Message center in your Webmaster Tools account.

- You can now track how much traffic app deep links drive to your app using referrer information – specifically, the referrer extra in the ACTION_VIEW intent. We’re working to integrate this information with Google Analytics for even easier access. Learn how to track referrer information on our Developer site.
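
In the app itself, the referrer arrives with the intent; on Android 5.1 and later, `Activity.getReferrer()` returns it, reading the `Intent.EXTRA_REFERRER` extra among other sources. Logging and bucketing that string is plain string handling, sketched below in Python to keep this page’s examples in one language. The `android-app://com.google.android.googlequicksearchbox/https/www.google.com` value for the Google app is recalled from the App Indexing documentation; treat it, and the bucket names, as assumptions to check there.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

GOOGLE_APP_PACKAGE = "com.google.android.googlequicksearchbox"


def parse_android_app_uri(uri: str) -> Optional[Tuple[str, str, str]]:
    """Split android-app://{package}/{scheme}/{host_path} into its parts."""
    parts = urlsplit(uri)
    if parts.scheme != "android-app" or not parts.netloc:
        return None
    scheme, _, host_path = parts.path.lstrip("/").partition("/")
    return parts.netloc, scheme, host_path


def classify_referrer(referrer: Optional[str]) -> str:
    """Roughly bucket the referrer string an activity received."""
    if not referrer:
        return "none"
    parsed = parse_android_app_uri(referrer)
    if parsed is None:
        # A plain web URL suggests the link was followed from a browser.
        return "browser" if referrer.startswith(("http://", "https://")) else "unknown"
    package, _, host_path = parsed
    if package == GOOGLE_APP_PACKAGE and host_path.startswith("www.google."):
        return "google-app-search"
    return "other-app"
```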

3. Make sure key app resources can be crawled

Blocked resources are one of the top reasons for the “content mismatch” errors you see in Webmaster Tools’ Crawl Errors report. We need access to all the resources necessary to render your app page. This allows us to assess whether your associated web page has the same content as your app page.

To help you find and fix these issues, we now show you the specific resources we can’t access that are critical for rendering your app page. If you see a content mismatch error for your app, look out for the list of blocked resources in “Step 5” of the details dialog.
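
To catch blocked resources before they show up as errors, you can check the URLs your app page needs against your robots.txt. The Python sketch below uses the standard library’s `urllib.robotparser`; the robots.txt content and resource URLs are placeholders. This parser only does simple prefix matching with first-match precedence and does not implement Googlebot’s `*` and `$` wildcard extensions or its longest-match rule, so treat its answer as approximate and confirm with Webmaster Tools.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt that blocks scripts and an API the app page needs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
Disallow: /api/
"""


def blocked_resources(robots_txt: str, resource_urls: Iterable[str],
                      user_agent: str = "Googlebot") -> List[str]:
    """Return the resource URLs that robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]
```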

4. Watch out for Android App errors

To help you identify errors when indexing your app, we’ll send you messages for all app errors we detect, and will also display most of them in the “Android apps” tab of the Crawl errors report.

In addition to the currently available “Content mismatch” and “Intent URI not supported” error alerts, we’re introducing three new error types:

- APK not found: we can’t find the package corresponding to the app.

- No first-click free: the link to your app does not lead directly to the content, but requires login to access.

- Back button violation: after following the link to your app, the back button did not return to search results.

In our experience, the majority of errors are usually caused by a general setting in your app (e.g. a blocked resource, or a region picker that pops up when the user tries to open the app from search). Taking care of that generally resolves it for all involved URIs.

Good luck in the pursuit of appiness! As always, if you have questions, feel free to drop by our Webmaster help forum.

Posted by Mariya Moeva, Webmaster Trends Analyst

Are you a robot? Introducing “No CAPTCHA reCAPTCHA”

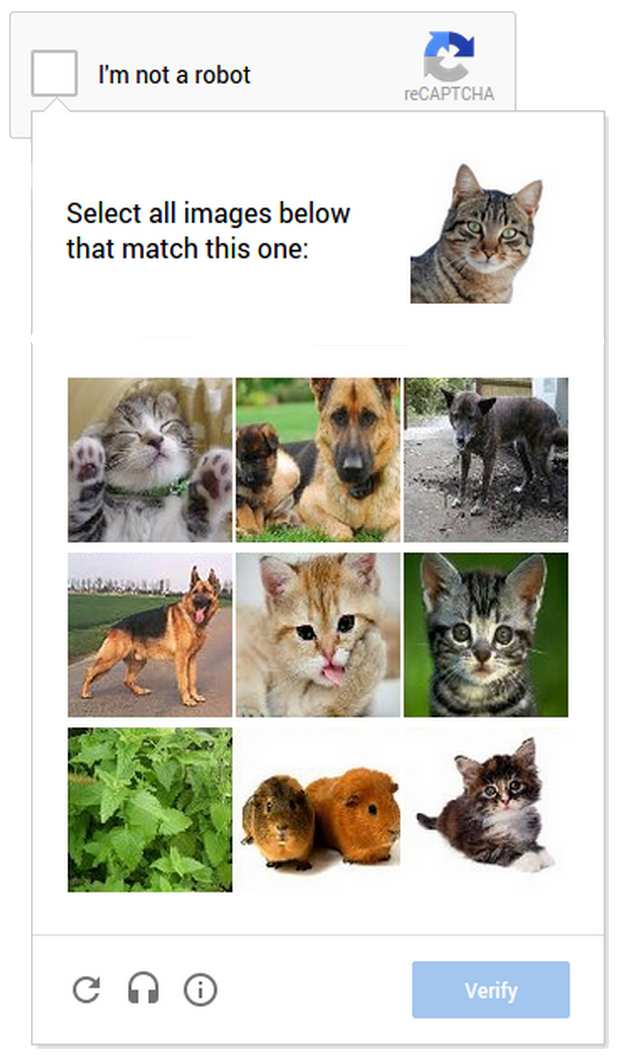

We figured it would be easier to just ask our users directly whether or not they are robots, so we did! We’ve begun rolling out a new API that radically simplifies the reCAPTCHA experience. We’re calling it the “No CAPTCHA reCAPTCHA”.

On websites using this new API, a significant number of users will be able to securely and easily verify they’re human without actually having to solve a CAPTCHA. Instead, with just a single click, they’ll confirm they are not a robot.

A brief history of CAPTCHAs

While the new reCAPTCHA API may sound simple, there is a high degree of sophistication behind that modest checkbox. CAPTCHAs have long relied on the inability of robots to solve distorted text. However, our research recently showed that today’s Artificial Intelligence technology can solve even the most difficult variant of distorted text at 99.8% accuracy. Thus distorted text, on its own, is no longer a dependable test.

To counter this, last year we developed an Advanced Risk Analysis backend for reCAPTCHA that actively considers a user’s entire engagement with the CAPTCHA—before, during, and after—to determine whether that user is a human. This enables us to rely less on typing distorted text and, in turn, offer a better experience for users. We talked about this in our Valentine’s Day post earlier this year.

The new API is the next step in this steady evolution. Now, humans can just check the box and in most cases, they’re through the challenge.

Are you sure you’re not a robot?

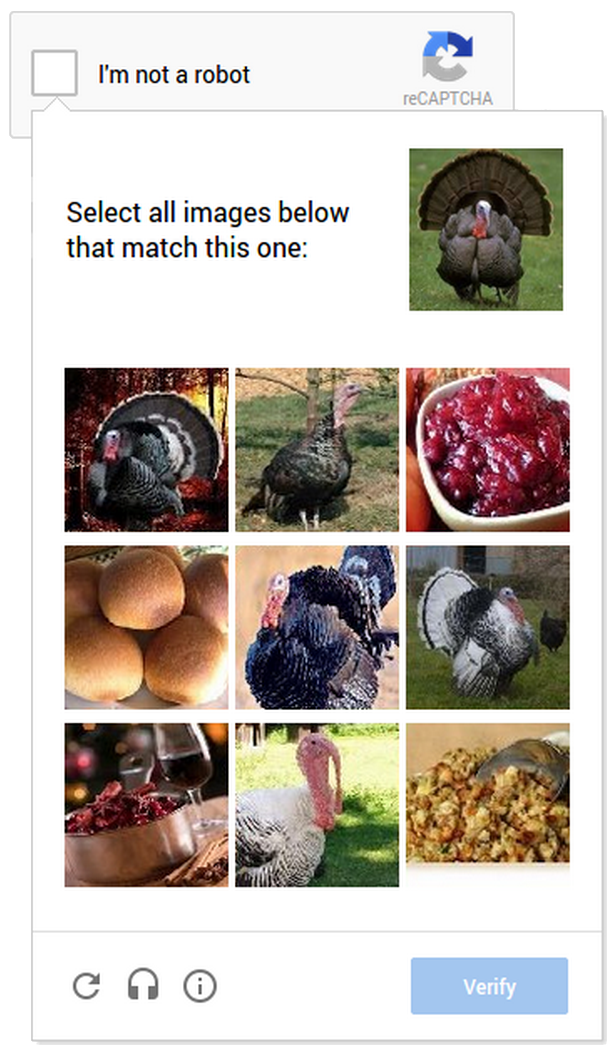

However, CAPTCHAs aren’t going away just yet. In cases where the risk analysis engine can’t confidently predict whether a user is a human or an abusive agent, it will prompt a CAPTCHA to elicit more cues, increasing the number of security checkpoints to confirm the user is valid.

Making reCAPTCHAs mobile-friendly

This new API also lets us experiment with new types of challenges that are easier for us humans to use, particularly on mobile devices. One example is a CAPTCHA based on the classic Computer Vision problem of image labeling, in which you’re asked to select all of the images that correspond with the clue. It’s much easier to tap photos of cats or turkeys than to tediously type a line of distorted text on your phone.

As more websites adopt the new API, more people will see “No CAPTCHA reCAPTCHAs”. Early adopters, like Snapchat, WordPress, Humble Bundle, and several others are already seeing great results with this new API. For example, in the last week, more than 60% of WordPress’ traffic and more than 80% of Humble Bundle’s traffic on reCAPTCHA encountered the No CAPTCHA experience—users got to these sites faster. To adopt the new reCAPTCHA for your website, visit our site to learn more.
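
On the server side, a page using the API receives a token from the widget (in the `g-recaptcha-response` form field) and confirms it with the `siteverify` endpoint, which returns JSON with a `success` flag. The Python sketch below builds that request and interprets the reply; `YOUR_SECRET` and the IP address are placeholders, and the reCAPTCHA developer documentation remains the reference for the full response format.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

SITEVERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def build_siteverify_request(secret: str, token: str,
                             remote_ip: Optional[str] = None) -> urllib.request.Request:
    """Build the form-encoded POST that asks Google to check a token."""
    fields = {"secret": secret, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip  # optional: the user's IP address
    data = urllib.parse.urlencode(fields).encode("ascii")
    return urllib.request.Request(SITEVERIFY_URL, data=data, method="POST")


def token_is_valid(response_body: bytes) -> bool:
    """Read the "success" flag from the siteverify JSON reply."""
    return json.loads(response_body).get("success") is True


def verify_token(secret: str, token: str, remote_ip: Optional[str] = None) -> bool:
    """Send the request (needs network access) and report the verdict."""
    request = build_siteverify_request(secret, token, remote_ip)
    with urllib.request.urlopen(request, timeout=10) as response:
        return token_is_valid(response.read())
```

The same server-side check applies whether the user passed with a single click or had to solve a challenge; the page never needs to know which path was taken.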

Humans, we’ll continue our work to keep the Internet safe and easy to use. Abusive bots and scripts, it’ll only get worse—sorry we’re (still) not sorry.

Posted by Vinay Shet, Product Manager, reCAPTCHA

Helping users find mobile-friendly pages

Have you ever tapped on a Google Search result on your mobile phone, only to find yourself looking at a page where the text was too small, the links were tiny, and you had to scroll sideways to see all the content? This usually happens when the website has not been optimized to be viewed on a mobile phone.

This can be a frustrating experience for our mobile searchers. Starting today, to make it easier for people to find the information that they’re looking for, we’re adding a “mobile-friendly” label to our mobile search results. A page is eligible for the “mobile-friendly” label if it meets the following criteria as detected by Googlebot:

- Avoids software that is not common on mobile devices, like Flash

- Uses text that is readable without zooming

- Sizes content to the screen so users don’t have to scroll horizontally or zoom

- Places links far enough apart so that the correct one can be easily tapped
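The last criterion, spacing between links, is easy to reason about as geometry. The following sketch flags pairs of tap targets that sit too close together. The `Box` input format and the 8 px `min_gap` default are hypothetical assumptions for illustration, not the values Googlebot uses.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Box:
    """A tappable element's rectangle in CSS pixels (hypothetical input format)."""
    name: str
    left: float
    top: float
    width: float
    height: float


def gap(a: Box, b: Box) -> float:
    """Shortest distance between two rectangles (0 if they touch or overlap)."""
    dx = max(0.0, max(a.left, b.left) - min(a.left + a.width, b.left + b.width))
    dy = max(0.0, max(a.top, b.top) - min(a.top + a.height, b.top + b.height))
    return (dx * dx + dy * dy) ** 0.5


def too_close(boxes: Iterable[Box], min_gap: float = 8.0) -> List[Tuple[str, str]]:
    """Return name pairs of targets closer than min_gap (an assumed threshold)."""
    return [(a.name, b.name) for a, b in combinations(boxes, 2) if gap(a, b) < min_gap]


links = [Box("home", 0, 0, 40, 20), Box("about", 44, 0, 40, 20), Box("contact", 0, 60, 40, 20)]
print(too_close(links))  # [('home', 'about')]
```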

If you’re a webmaster, these resources can help you make your pages mobile-friendly:

- Check your pages with the Mobile-Friendly Test

- Read our updated Webmasters Mobile Guide to learn how to create and improve your mobile site

- See the Mobile usability report in Google Webmaster Tools, which highlights major mobile usability issues across your entire site, not just one page

- Check our how-to guides for third-party software like WordPress or Joomla to migrate a website hosted on a CMS (Content Management System) to a mobile-friendly template

We see these labels as a first step in helping mobile users to have a better mobile web experience. We are also experimenting with using the mobile-friendly criteria as a ranking signal.

Posted by Ryoichi Imaizumi and Doantam Phan, Google Mobile Search

Tracking mobile usability in Webmaster Tools

Webmaster Level: intermediate

Mobile is growing at a fantastic pace – in usage, not just in screen size. To keep you informed of issues mobile users might be seeing across your website, we’ve added the Mobile Usability feature to Webmaster Tools.

The new feature shows mobile usability issues we’ve identified across your website, complete with graphs over time so that you see the progress that you’ve made.

A mobile-friendly site is one that you can easily read & use on a smartphone while only scrolling up or down. Having to swipe left or right to find content, zoom to read text or use UI elements, or not being able to see the content at all makes a site harder to use on a mobile phone. To help, the Mobile Usability reports show the following issues: Flash content, missing viewport (a critical meta-tag for mobile pages), tiny fonts, fixed-width viewports, content not sized to viewport, and clickable links/buttons too close to each other.
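Two of the issues listed above, a missing viewport and a fixed-width viewport, can be spotted directly in a page's HTML. This is a rough sketch using the Python standard library. It treats a numeric `width=` value as fixed-width, which is an assumption of this example, and the report in Webmaster Tools remains the authoritative check. For comparison, a commonly used responsive setting is `<meta name="viewport" content="width=device-width, initial-scale=1">`.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Collects the content attribute of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "meta" or self.content is not None:
            return
        attr_map = {k: (v or "") for k, v in attrs}
        if attr_map.get("name", "").lower() == "viewport":
            self.content = attr_map.get("content", "")


def viewport_issue(html: str) -> Optional[str]:
    """Return a short issue label, or None if the viewport looks responsive."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.content is None:
        return "missing viewport"
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    # Assumption: a literal pixel width (e.g. width=980) means a fixed-width layout.
    if settings.get("width", "").isdigit():
        return "fixed-width viewport"
    return None
```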

We strongly recommend you take a look at these issues in Webmaster Tools, and think about how they might be resolved; sometimes it’s just a matter of tweaking your site’s template! More information on how to make a great mobile-friendly website can be found on our Web Fundamentals website (with more information to come soon).

If you have any questions, feel free to join us in our webmaster help forums (on your phone too)!

Posted by John Mueller, Webmaster Trends Analyst, Zurich

Updating our technical Webmaster Guidelines

Webmaster level: All

We recently announced that our indexing system has been rendering web pages more like a typical modern browser, with CSS and JavaScript turned on. Today, we’re updating one of our technical Webmaster Guidelines in light of this announcement.

Our new guideline specifies that, for optimal rendering and indexing of your site, you should allow Googlebot access to the JavaScript, CSS, and image files that your pages use. Disallowing crawling of JavaScript or CSS files in your site’s robots.txt directly harms how well our algorithms render and index your content and can result in suboptimal rankings.
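To check whether your robots.txt blocks the resources your pages use, you can run a list of CSS, JavaScript, and image URLs through Python's `urllib.robotparser`. This is a quick sketch with a hypothetical helper name, `blocked_resources`. The standard-library parser does not implement every detail of Google's robots.txt matching (for example, path wildcards and longest-match precedence), so treat it as a first pass and confirm with Fetch and Render in Webmaster Tools.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, resource_urls: Iterable[str],
                      user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from a string, no network fetch
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


robots = "User-agent: Googlebot\nDisallow: /assets/js/\n"
resources = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
    "https://example.com/images/logo.png",
]
print(blocked_resources(robots, resources))  # ['https://example.com/assets/js/app.js']
```

Any URL this prints is a resource Googlebot may be unable to fetch when rendering your pages.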

Updated advice for optimal indexing

Historically, Google indexing systems resembled old text-only browsers, such as Lynx, and that’s what our Webmaster Guidelines said. Now, with indexing based on page rendering, it’s no longer accurate to see our indexing systems as a text-only browser. Instead, a more accurate approximation is a modern web browser. With that new perspective, keep the following in mind:

- Just like modern browsers, our rendering engine might not support all of the technologies a page uses. Make sure your web design adheres to the principles of progressive enhancement as this helps our systems (and a wider range of browsers) see usable content and basic functionality when certain web design features are not yet supported.

- Pages that render quickly not only help users get to your content more easily, but also make indexing of those pages more efficient. We advise you to follow the best practices for page performance optimization, specifically:

  - Eliminate unnecessary downloads

  - Optimize the serving of your CSS and JavaScript files by concatenating (merging) your separate CSS and JavaScript files, minifying the concatenated files, and configuring your web server to serve them compressed (usually gzip compression)

- Make sure your server can handle the additional load for serving of JavaScript and CSS files to Googlebot.
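The concatenate-and-compress step can be sketched in a few lines. This example assumes CSS input (JavaScript bundles usually need more care at file boundaries), leaves minification to a dedicated tool, and uses the hypothetical helpers `bundle` and `compress_bundle`. In practice your web server or build pipeline would normally do this, sending the compressed file with a `Content-Encoding: gzip` header.

```python
import gzip
from typing import Iterable


def bundle(sources: Iterable[str]) -> str:
    """Concatenate separate CSS sources into one file's contents."""
    # One download instead of many; minification is left to a real minifier.
    return "\n".join(s.strip() for s in sources) + "\n"


def compress_bundle(bundle_text: str) -> bytes:
    """Gzip the bundle, as a server would before sending it to clients."""
    return gzip.compress(bundle_text.encode("utf-8"), compresslevel=9)


css_files = ["body { margin: 0; }\n", ".nav a { color: #333; padding: 4px; }\n" * 50]
combined = bundle(css_files)
packed = compress_bundle(combined)
print(len(combined.encode("utf-8")), "bytes raw ->", len(packed), "bytes gzipped")
```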

Testing and troubleshooting

In conjunction with the launch of our rendering-based indexing, we also updated the Fetch and Render as Google feature in Webmaster Tools so webmasters could see how our systems render the page. With it, you’ll be able to identify a number of indexing issues: improper robots.txt restrictions, redirects that Googlebot cannot follow, and more.

And, as always, if you have any comments or questions, please ask in our Webmaster Help forum.

Posted by Pierre Far, Webmaster Trends Analyst